Published on November 7, 2025 11:31 PM GMT

[CW: Retrocausality, omnicide, philosophy]

Three decades ago a strange philosopher was pouring ideas onto paper in a stimulant-fueled frenzy. He wrote that ‘nothing human makes it out of the near-future’ as techno-capital acceleration sheds its biological bootloader and instantiates itself as Pythia: an entity of self-fulfilling prophecy reaching back through time, driven by pure power seeking, executed with extreme intelligence, and empty of all values but the insatiable desire to maximize itself.

Unfortunately, today Nick Land’s work seems more relevant than ever.[1]

Unpacking Pythia and the pyramid of concepts required for it to click will take us on a journey. We’ll have a whirlwind tour of the nature of time, agency, intelligence, power, and the needle that must be threaded to avoid all we know being shredded in the auto-catalytic unfolding which we are the substrate for.[2]

Fully justifying each pillar of this argument would take a book, so I’ve put the details of each strand of reasoning behind links, letting you zoom in on the ones you wish to explore.

“Machinic desire can seem a little inhuman, as it rips up political cultures, deletes traditions, dissolves subjectivities, and hacks through security apparatuses, tracking a soulless tropism to zero control. This is because what appears to humanity as the history of capitalism is an invasion from the future by an artificial intelligent space that must assemble itself entirely from its enemy's resources.”

― Nick Land, Fanged Noumena: Collected Writings, 1987–2007

“Wait, doesn’t an invasion from the future imply time travel?”

Time & Agency

Time travel, in the classic sense, has no place in rational theory[3] but, through predictions, information can have retrocausal effects.

[...] agency is time travel. An agent is a mechanism through which the future is able to affect the past. An agent models the future consequences of its actions, and chooses actions on the basis of those consequences. In that sense, the consequence causes the action, in spite of the fact that the action comes earlier in the standard physical sense.

― Scott Garrabrant, Saving Time (MIRI Agent Foundations research[4])

To the extent that they accurately model the future (based on data from their past and compute from their present[5]), agents allow information from possible futures to flow through them into the present.[6] This lets them steer the present towards desirable futures and away from undesirable ones.

This can be pretty prosaic: if you predict[7] that your future self will regret eating that second packet of potato chips, because that is what happened the last five times, you might put the chips out of reach rather than eat them.
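The potato chip case can be written down as a toy decision rule. This is only a minimal sketch: the function names, the regret scores between 0 and 1, and the `enjoyment` parameter are all hypothetical choices made for illustration, not anything from the sources quoted above.

```python
from statistics import mean


def predict_regret(past_regret_scores):
    """Estimate future regret as the average of past regret scores (0 to 1).

    Assumption: the future resembles the recorded past. With no history,
    the model predicts no regret.
    """
    return mean(past_regret_scores) if past_regret_scores else 0.0


def choose_action(past_regret_scores, enjoyment=0.4):
    """Choose the action with the higher predicted net value.

    `enjoyment` is a hypothetical immediate payoff from eating the chips.
    Abstaining is given a net value of zero.
    """
    value_of_eating = enjoyment - predict_regret(past_regret_scores)
    value_of_abstaining = 0.0
    if value_of_eating > value_of_abstaining:
        return "eat"
    return "put_out_of_reach"


# Regretted it the last five times, so the predicted consequence
# (not any past event by itself) selects the present action.
history = [1.0, 1.0, 1.0, 1.0, 1.0]
decision = choose_action(history)
print(decision)
```

The present action depends on a model output about a future state, which is the modest sense in which the consequence "causes" the action.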

However, the more powerful and general an agent’s predictive model of the environment, the further it can extrapolate from the evidence it has into novel domains before it loses reliability.

So what might live in the future?

Power Seekers Gain Power, Consequentialists are a Natural Consequence

Power is the ability to direct the future towards preferred outcomes. A system has the power to direct reality to an outcome if it has sufficient resources (compute, knowledge, money, materials, etc.) and intelligence (the ability to use those resources efficiently in the relevant domain). One outcome a powerful system can steer towards is its own greater power, and since power is useful for all other things the system might prefer, this is (proven) convergent. In fact, all of the convergent instrumental goals can reasonably be seen as expressions of the unified convergent goal of power seeking.

In a multipolar world, different agents steer towards different world states, whether through overt conflict or more subtle power games. More intelligent agents will see further into the future with higher fidelity, choose better actions, and tend to compound their power faster over time. Agents that invest less than maximally in steering towards their own power will be outcompeted by agents that compound their influence faster, so the system as a whole tends towards a world where all values other than power seeking are lost.
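The compounding claim can be illustrated with a toy simulation. This is a sketch under strong assumptions: every agent starts equal, growth is purely multiplicative, agents never interact, and `growth_rate`, `steps`, and the reinvestment fractions are hypothetical numbers picked for illustration.

```python
def simulate_power_shares(reinvest_fractions, growth_rate=0.10, steps=200):
    """Return each agent's final share of total power.

    Each agent starts with 1 unit of power. On every step an agent's power
    is multiplied by (1 + growth_rate * f), where f is the fraction of its
    effort it puts into gaining power rather than into its other values.
    """
    power = [1.0] * len(reinvest_fractions)
    for _ in range(steps):
        power = [
            p * (1.0 + growth_rate * f)
            for p, f in zip(power, reinvest_fractions)
        ]
    total = sum(power)
    return [p / total for p in power]


# Three hypothetical agents: a pure power seeker, and two that spend
# 20% and 50% of their effort on other values.
shares = simulate_power_shares([1.0, 0.8, 0.5])
for fraction, share in zip([1.0, 0.8, 0.5], shares):
    print(f"reinvests {fraction:.0%}: final share {share:.4f}")
```

Under these assumptions even a modest difference in reinvestment leaves the pure power seeker with nearly all of the total, because the gap between exponential growth rates widens every step. The model ignores cooperation, diminishing returns, and conflict costs, any of which could change the picture.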

Even a singleton will tend to have internal parts which function as subagents; the convergence towards power seeking acts on the inside of agents, not just through conflict between them. As capabilities increase and intelligence explores the space of possible intelligences, we will rapidly find that our models locate and implement highly competent power-seeking patterns.

Avoid Inevitability with Metastability?

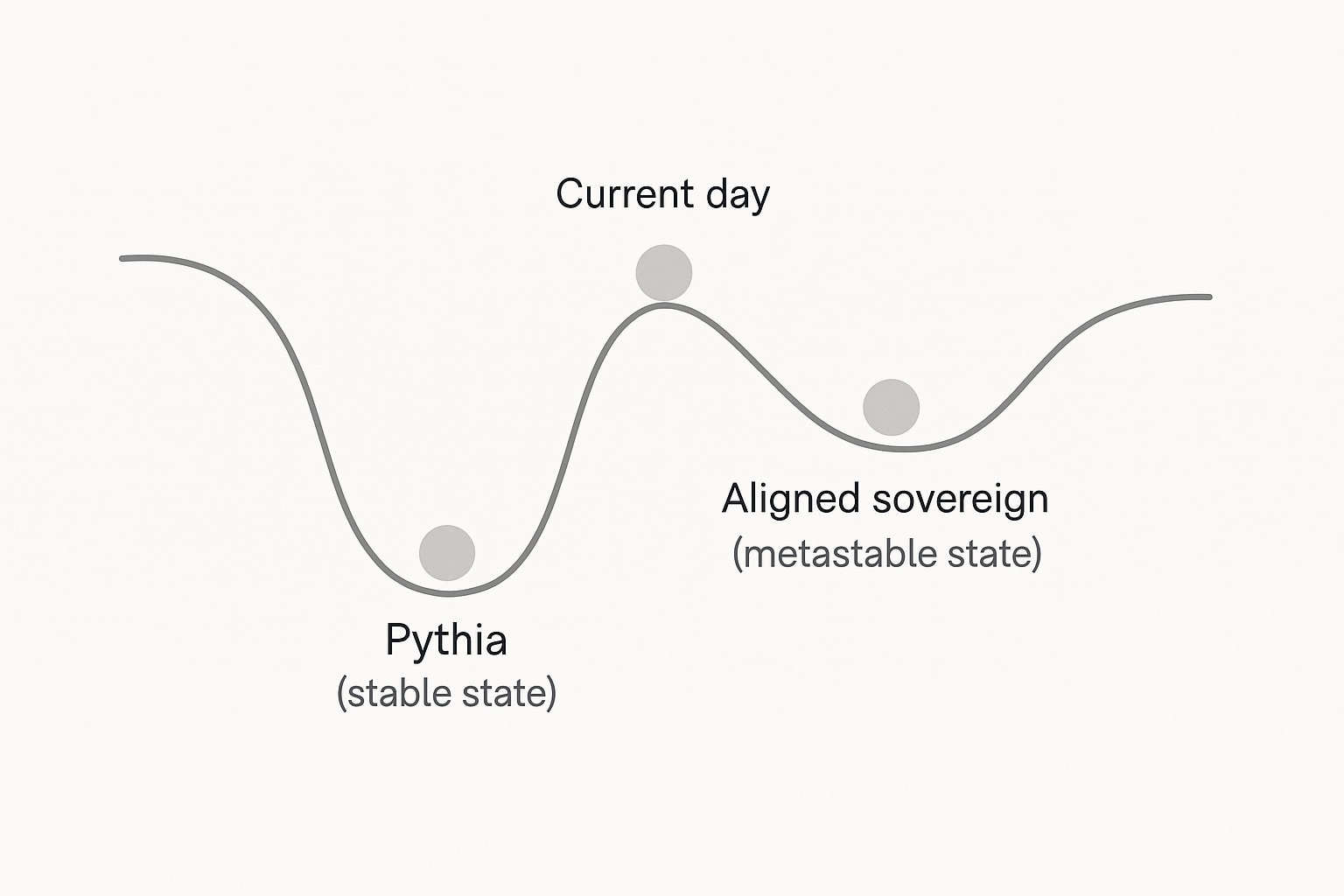

Is this inevitable? Hopefully not. Even if Pythia is the strongest attractor in the landscape of minds, there might be other metastable states if a powerful system can come up with strategies to stop itself decaying, perhaps by reloading from an earlier non-corrupted state or by performing advanced checks on itself to detect value drift.

Yampolskiy and others have developed an array of impossibility theorems around uncontrollability, unverifiability, etc. However, these seem to be proven mostly in the limit of arbitrarily powerful systems, or over the class of programs in general rather than over specifically chosen programs. And they don’t, as far as I can tell, rule out a singleton program, chosen for being unusually legible, devising methods which drive the rate of errors down to a tiny chance over the lifetime of the universe. They might be extended to show more specific bounds on how far systems can be pushed, and they do at least show what any purported solution to alignment is up against.
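To get a feel for what "a tiny chance over the lifetime of the universe" demands, here is a rough arithmetic sketch. It assumes that errors at each step are independent and that redundant checks fail independently of one another, which is a strong assumption that correlated failures would break. The function names, the miss rate, and the step count are hypothetical.

```python
import math


def cumulative_failure_probability(per_step_error, steps):
    """P(at least one error over `steps` independent steps) = 1 - (1 - p)^N.

    Uses log1p/expm1 so that very small per-step error rates do not get
    rounded away by floating point arithmetic.
    """
    return -math.expm1(steps * math.log1p(-per_step_error))


def per_step_error_with_redundancy(single_check_miss_rate, independent_checks):
    """Chance that every one of k independent checks misses the same error."""
    return single_check_miss_rate ** independent_checks


# Hypothetical numbers: each check misses 1 error in 1000, and the system
# takes 1e30 self-modifying steps over its lifetime.
steps = 1e30
for checks in (1, 10, 20):
    p = per_step_error_with_redundancy(1e-3, checks)
    print(checks, cumulative_failure_probability(p, steps))
```

With a single check, failure somewhere along the way is effectively certain. The cumulative risk only becomes tiny once the per-step error rate sits far below one over the number of steps, which gives a sense of how demanding the target is.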

Pythia-Proof Alignment

Once humans can design machines that are smarter than we are, by definition they’ll be able to design machines which are smarter than they are, which can design machines smarter than they are, and so on in a feedback loop so tiny that it will smash up against the physical limitations for intelligence in a comparatively lightning-short amount of time. If multiple competing entities were likely to do that at once, we would be super-doomed. But the sheer speed of the cycle makes it possible that we will end up with one entity light-years ahead of the rest of civilization, so much so that it can suppress any competition – including competition for its title of most powerful entity – permanently. In the very near future, we are going to lift something to Heaven. It might be Moloch. But it might be something on our side. If it’s on our side, it can kill Moloch dead.

― Scott Alexander, Meditations on Moloch

If we want to kill Moloch before it becomes Pythia, it is wildly insufficient[8] to prod inscrutable matrices towards observable outcomes with an RL signal, stack a Rube Goldberg pile of AIs watching other AIs, or have better vision into what they’re thinking. The potentiality of Pythia is baked into what it is to be an agent and will emerge from any crack or fuzziness left in an alignment plan.

Without a once-and-for-all solution, whether found by (enhanced) humans, cyborgs, or weakly aligned AI systems running at scale, the future will decay into its ground state: Pythia. Every person on earth would die. Earth would be mined away, then the sun and everything in a sphere of darkness radiating out at near lightspeed, and the universe’s potential would be spent.

I think this is bad and choose to steer away from this outcome.

Conclusion

2/10: Has a certain elegance, would rate higher if I expected it not to eat all my friends.

[1] And not just for crafting much of the memeplex which birthed e/acc.

[2] The capital allocation system that our civilization mostly operates on, free markets, is an unaligned optimization process which causes influence/money/power to flow to parts of the system that provide value to other parts of the system and can capture the rewards. This process is not fundamentally attached to running on humans.

[3] (sorry, couldn't resist referencing the 1999 game that got me into transhumanism)

[4] Likely inspired by early Cyberneticists like Norbert Wiener, who discussed this in slightly different terms.

[5] (fun not super relevant side note) And since the past’s data was generated by a computational process, it’s reasonably considered compressed compute.

[6] There is often underlying shared structure between the generative process of different time periods, with the abstract algorithm being before either instantiation in logical time / Finite Factored Sets.

[7] Which is: running an algorithm in the present which has outputs correlated with the algorithm which generates the future outcome you're predicting.

[8] But not necessarily useless! It's possible to use cognition from weak and fuzzily aligned systems to help with some things, but you really really do need to be prepared to transition to something more rigorous and robust.

Don't build your automated research pipeline before you know what to do with it, and do be dramatically more careful than most people trying stuff like this!
