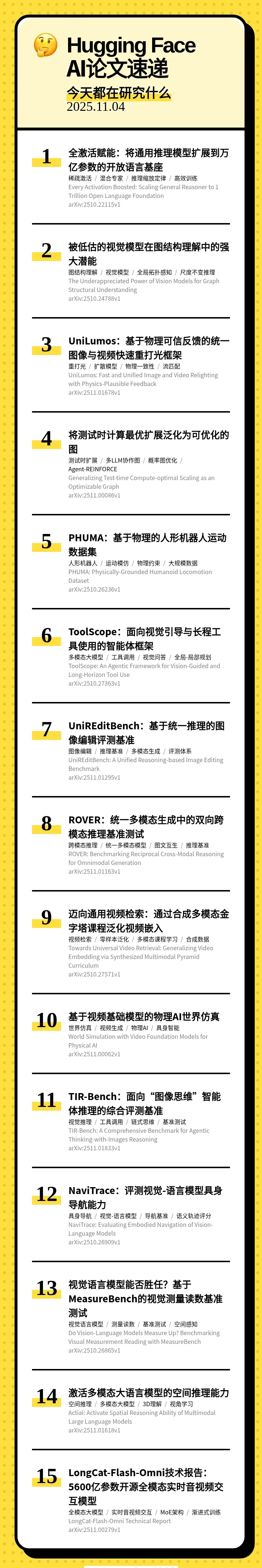

本期的 15 篇论文如下:

00:23 🧠 Every Activation Boosted: Scaling General Reasoner to 1 Trillion Open Language Foundation(全激活赋能:将通用推理模型扩展到万亿参数的开放语言基座)

01:03 👁 The Underappreciated Power of Vision Models for Graph Structural Understanding(被低估的视觉模型在图结构理解中的强大潜能)

01:38 💡 UniLumos: Fast and Unified Image and Video Relighting with Physics-Plausible Feedback(UniLumos:基于物理可信反馈的统一图像与视频快速重打光框架)

02:37 🕸 Generalizing Test-time Compute-optimal Scaling as an Optimizable Graph(将测试时计算最优扩展泛化为可优化的图)

03:11 🤖 PHUMA: Physically-Grounded Humanoid Locomotion Dataset(PHUMA:基于物理的人形机器人运动数据集)

03:48 🔭 ToolScope: An Agentic Framework for Vision-Guided and Long-Horizon Tool Use(ToolScope:面向视觉引导与长程工具使用的智能体框架)

04:30 🧠 UniREditBench: A Unified Reasoning-based Image Editing Benchmark(UniREditBench:基于统一推理的图像编辑评测基准)

05:23 🔄 ROVER: Benchmarking Reciprocal Cross-Modal Reasoning for Omnimodal Generation(ROVER:统一多模态生成中的双向跨模态推理基准测试)

06:04 🌍 Towards Universal Video Retrieval: Generalizing Video Embedding via Synthesized Multimodal Pyramid Curriculum(迈向通用视频检索:通过合成多模态金字塔课程泛化视频嵌入)

06:44 🌍 World Simulation with Video Foundation Models for Physical AI(基于视频基础模型的物理AI世界仿真)

07:20 🧠 TIR-Bench: A Comprehensive Benchmark for Agentic Thinking-with-Images Reasoning(TIR-Bench:面向“图像思维”智能体推理的综合评测基准)

08:03 🧭 NaviTrace: Evaluating Embodied Navigation of Vision-Language Models(NaviTrace:评测视觉-语言模型具身导航能力)

08:45 📏 Do Vision-Language Models Measure Up? Benchmarking Visual Measurement Reading with MeasureBench(视觉语言模型能否胜任?基于MeasureBench的视觉测量读数基准测试)

09:23 🧭 Actial: Activate Spatial Reasoning Ability of Multimodal Large Language Models(激活多模态大语言模型的空间推理能力)

10:07 🐱 LongCat-Flash-Omni Technical Report(LongCat-Flash-Omni技术报告:5600亿参数开源全模态实时音视频交互模型)

【关注我们】

您还可以在以下平台找到我们,获得播客内容以外更多信息

小红书: AI速递