Published on November 3, 2025 9:50 PM GMT

I was recently saddened to see that Seb Krier – who's a lead on the Google DeepMind governance team – created a simple website apparently endorsing the idea that Ricardian comparative advantage will provide humans with jobs in the time of ASI. The argument that comparative advantage means advanced AI is automatically safe is pretty old and has been addressed multiple times. For the record, I think this is a bad argument, and it's not useful to think about AI risk through comparative advantage.

The Argument

The law of comparative advantage says that both sides of a trade can profit from it. Both can end up better off even if one side is less productive at everything than the other. The naive idea some people have is: humans are going to be less productive than AI, but because of this law humans will remain important, keep their jobs, and get paid. Things will be fine, and this is a key reason why we shouldn't worry so much about AI risk. Even if we're less productive than AI at everything, we can still trade with it. Seb explicitly believes this will hold true for ASI.
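To see what the theorem does and doesn't claim, here is a minimal sketch of the standard two-good textbook case. The numbers and the good names (`compute`, `crafts`) are made up for illustration and are not taken from Seb's website.

```python
from fractions import Fraction

# Hypothetical output per hour of labor. The AI is better at both goods.
productivity = {
    "ai": {"compute": Fraction(100), "crafts": Fraction(50)},
    "human": {"compute": Fraction(1), "crafts": Fraction(2)},
}


def opportunity_cost(agent, good, other):
    """Units of `other` the agent gives up to make one unit of `good`."""
    p = productivity[agent]
    return p[other] / p[good]


# Cost of one craft, measured in compute forgone.
ai_cost = opportunity_cost("ai", "crafts", "compute")
human_cost = opportunity_cost("human", "crafts", "compute")


def both_gain(price):
    """True if humans selling crafts at `price` compute per craft benefits both sides."""
    return human_cost < price < ai_cost


print(ai_cost, human_cost, both_gain(Fraction(1)), both_gain(Fraction(3)))
```

With these numbers the AI gives up 2 units of compute per craft and the human gives up 0.5, so any price strictly between the two leaves both sides better off than producing alone. That is all the theorem says: it is a statement about a price range, not about power, safety, or whether trading is the best option for the stronger side.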

This would prove too much and this is not how you apply maths

There are a few reasons to immediately dismiss this whole argument. The main one is that it would prove far too much. It seems to imply that when one party is massively more powerful, more advanced, and more productive, the other side will be fine and there's nothing to worry about. It assumes a trade relationship will arise between two species where one is vastly more intelligent. There are many reasons to believe this is not the case. We don't trade with ants. We didn't trade much with Native Americans. In the case of ants, we wouldn't even consider signing a trade deal with them or exchanging goods. We just take their stuff or leave them alone. In other cases, it's been more advantageous to just take other people's stuff, enslave them, or kill them. The argument proves far too much.

Also, simple math theorems won't prove that AI will be safe; this is not the structure of reality. Comparative advantage is a simple mathematical theorem used to explain trade patterns in economics. You can't look at a simple theorem in linear algebra and conclude that AI and humans will peacefully co-exist. You define productivity by some measure, you have a vector of productivities for different goods, and you get a vector of labor allocations. It's a simple mathematical fact from linear algebra. Vaguely pattern matching like this is not how you apply maths to the real world, and ASI won't be safe because of it. By the same token, the no-free-lunch theorem doesn't prove that there can't be something smarter than us.

In-Depth Counter-Arguments

Let's say you're unconvinced and want to go more in-depth. Comparative advantage only addresses trade relationships. It says nothing about leaving power to the less productive side or treating them well.

There's nothing preventing the more productive side from acquiring more resources over time—buying things, buying up land, buying up crucial resources it needs—and then at some point leaving nothing for the other side.

Comparative advantage doesn't say what the optimal action is. It only says that both sides can profit from trade in certain situations, not that trading is the best available option. In reality, it has often been more advantageous to just take things from the other side, enslave them, and not respect them.

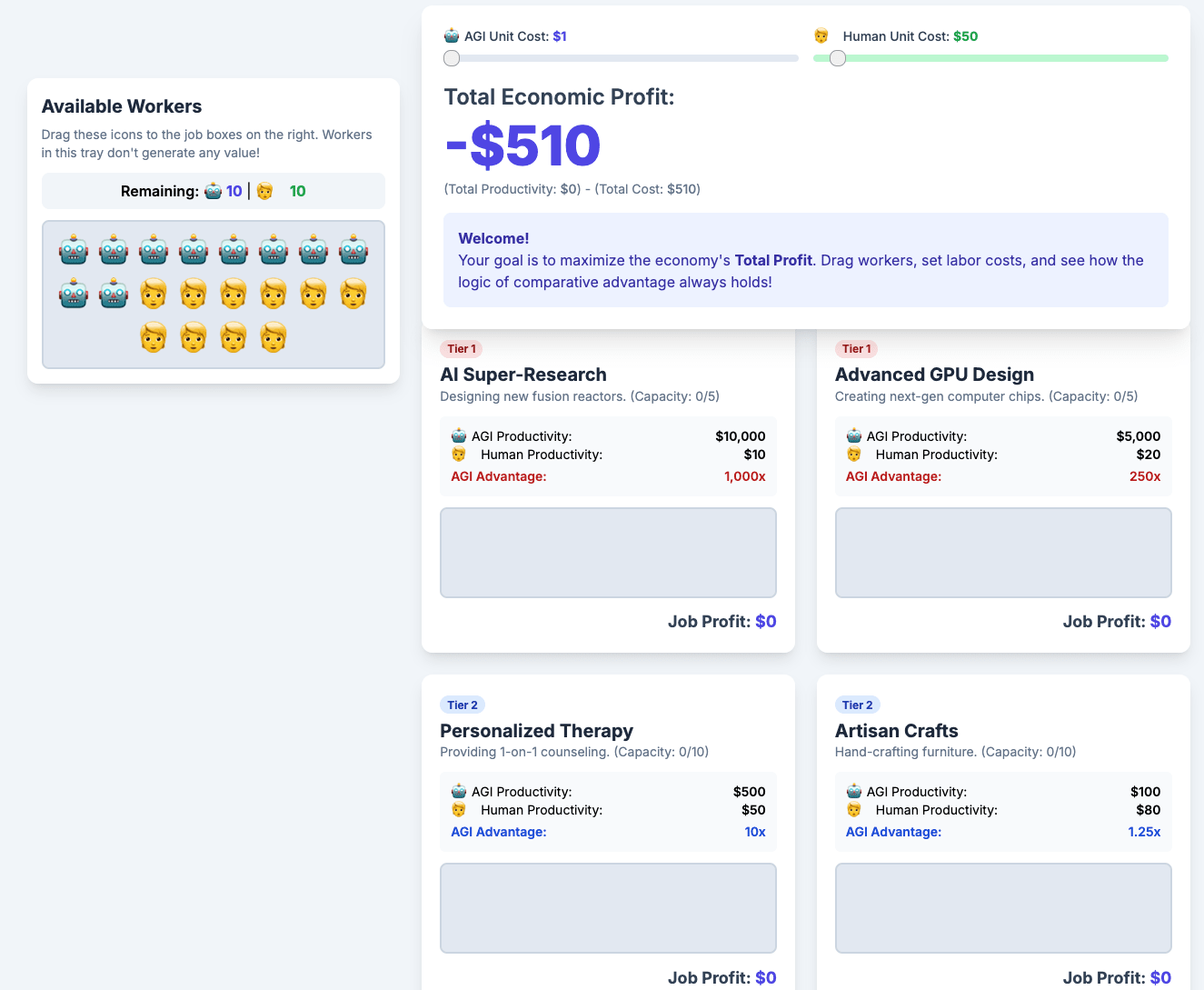

Another big problem with the website Seb Krier created: it looks at 10 humans and 10 AIs and how to divide labor between them. But you can spin up as many AGIs as you want. The amount of GPUs and infrastructure is constantly being scaled up, so the amount of artificial intelligence can grow exponentially. This breaks the comparative advantage picture: the more productive side is increasing in numbers all the time.

Comparative advantage says nothing about whether the AI will keep the biosphere alive. If ASI decides that all the things we do to keep enough oxygen and the right temperature don't fit with filling the world with data centers, nuclear power stations, and massive solar panels, nothing in the theorem stops it. How much does it actually gain from trade with humans compared to the advantage of being able to ravage the environment?

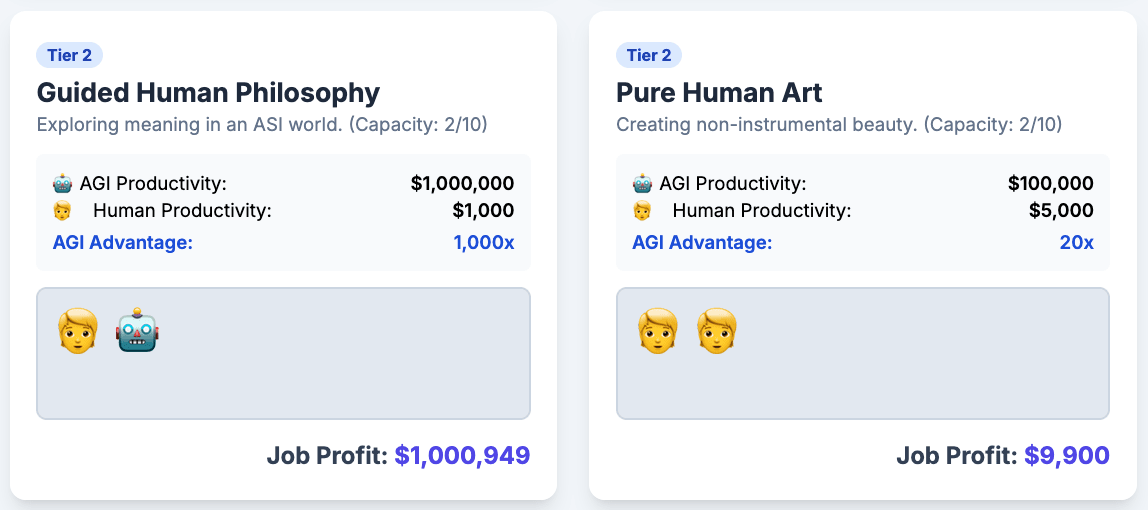

In the app, the optimal strategy for humans is making artisan crafts, artisan furniture, and therapy for other humans: things that give nothing to the AI. Realistically, there is nothing valuable we could provide to the AI. If we have zero or near-zero productivity at anything the AI desires, and only very small productivity at things that we need and the AI doesn't, there is no potential for trade. What could we possibly trade with ants? We could give them something they like, such as sugar. What could the ants give us in return?

Even if we could trade something with the AI and get something in return, humans effectively have a minimum wage: we need enough calories, space, and oxygen to stay alive. It's not guaranteed that our productivity covers this. We're extremely slow, and we need food, shelter, and all these things that the AI doesn't need.
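The subsistence point can be sketched with a toy calculation. It assumes, purely as an illustration, that an extra AI copy can do any task a human can at some marginal cost, so nobody would pay a human more per task than that cost. All function names and numbers below are hypothetical.

```python
def max_human_wage_per_hour(ai_cost_per_task, human_tasks_per_hour):
    """Upper bound on hourly pay under the assumption above:
    a buyer pays a human at most what the AI would charge for the same tasks."""
    return ai_cost_per_task * human_tasks_per_hour


def human_can_subsist(ai_cost_per_task, human_tasks_per_hour, subsistence_per_hour):
    """True if the wage cap still covers food, shelter, and other basic needs."""
    cap = max_human_wage_per_hour(ai_cost_per_task, human_tasks_per_hour)
    return cap >= subsistence_per_hour


# Illustrative numbers only: a human does 4 tasks per hour and needs 2.0 per hour to live.
expensive_ai = human_can_subsist(1.0, 4, 2.0)  # wage cap 4.0
cheap_ai = human_can_subsist(0.1, 4, 2.0)      # wage cap about 0.4

print(expensive_ai, cheap_ai)
```

In this toy setup the human can live off trade while AI labor is expensive, and can't once it gets cheap enough. Gains from trade may still exist on paper in the second case, but they are smaller than what a human needs to survive.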

Conclusion

I feel sad that people at Google DeepMind think this is a realistic reason for hope. Seb apparently has an important position there working on governance, and I hope to convince him here a bit. I don't think this is a serious argument, and it's not a reasonable way to think about AI-human co-existence. To be fair, he has put out a few caveats, though he hasn't really explained them.
