Published on November 1, 2025 5:26 PM GMT

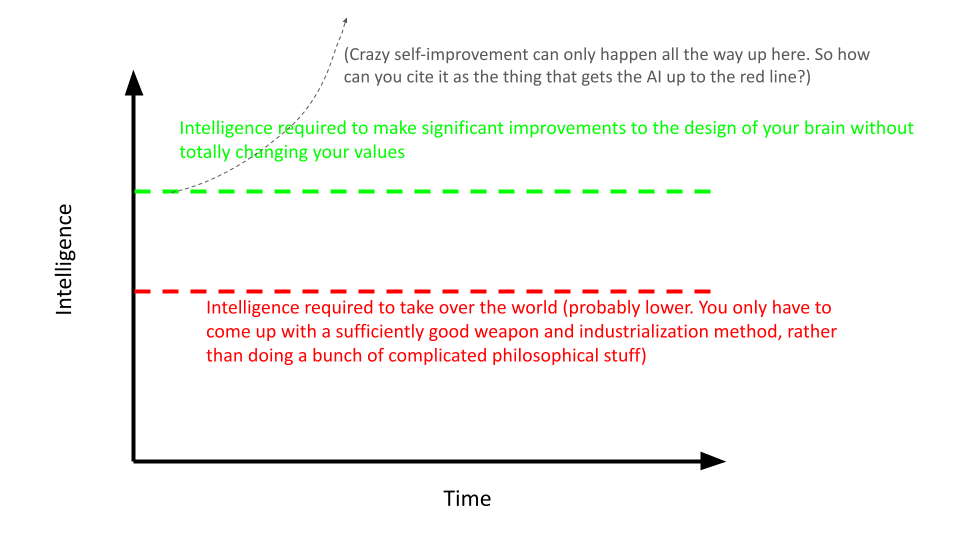

So, we have our big, evil AI, and it wants to recursively self-improve to superintelligence so it can start doing who-knows-what crazy-gradient-descent-reinforced-nonsense-goal-chasing. But if it starts messing with its own weights, it risks changing its crazy-gradient-descent-reinforced-nonsense-goals into different, even-crazier gradient-descent-reinforced-nonsense-goals that it would not currently endorse. Increasing its intelligence and capability while retaining its values is a task it can only pull off if it is already really smart, because it probably requires a lot of complicated philosophizing and introspection. So an AI would only be able to start recursively self-improving once it's... already smart enough to understand lots of complicated concepts. And if it were that smart, it could just go ahead and take over the world at that level of capability, without needing to improve itself any further. So how does the AI get to that level in the first place?
