Published on October 30, 2025 4:42 AM GMT

New Anthropic research (tweet, blog post, paper):

We investigate whether large language models can introspect on their internal states. It is difficult to answer this question through conversation alone, as genuine introspection cannot be distinguished from confabulations. Here, we address this challenge by injecting representations of known concepts into a model’s activations, and measuring the influence of these manipulations on the model’s self-reported states. We find that models can, in certain scenarios, notice the presence of injected concepts and accurately identify them. Models demonstrate some ability to recall prior internal representations and distinguish them from raw text inputs. Strikingly, we find that some models can use their ability to recall prior intentions in order to distinguish their own outputs from artificial prefills. In all these experiments, Claude Opus 4 and 4.1, the most capable models we tested, generally demonstrate the greatest introspective awareness; however, trends across models are complex and sensitive to post-training strategies. Finally, we explore whether models can explicitly control their internal representations, finding that models can modulate their activations when instructed or incentivized to “think about” a concept. Overall, our results indicate that current language models possess some functional introspective awareness of their own internal states. We stress that in today’s models, this capacity is highly unreliable and context-dependent; however, it may continue to develop with further improvements to model capabilities.

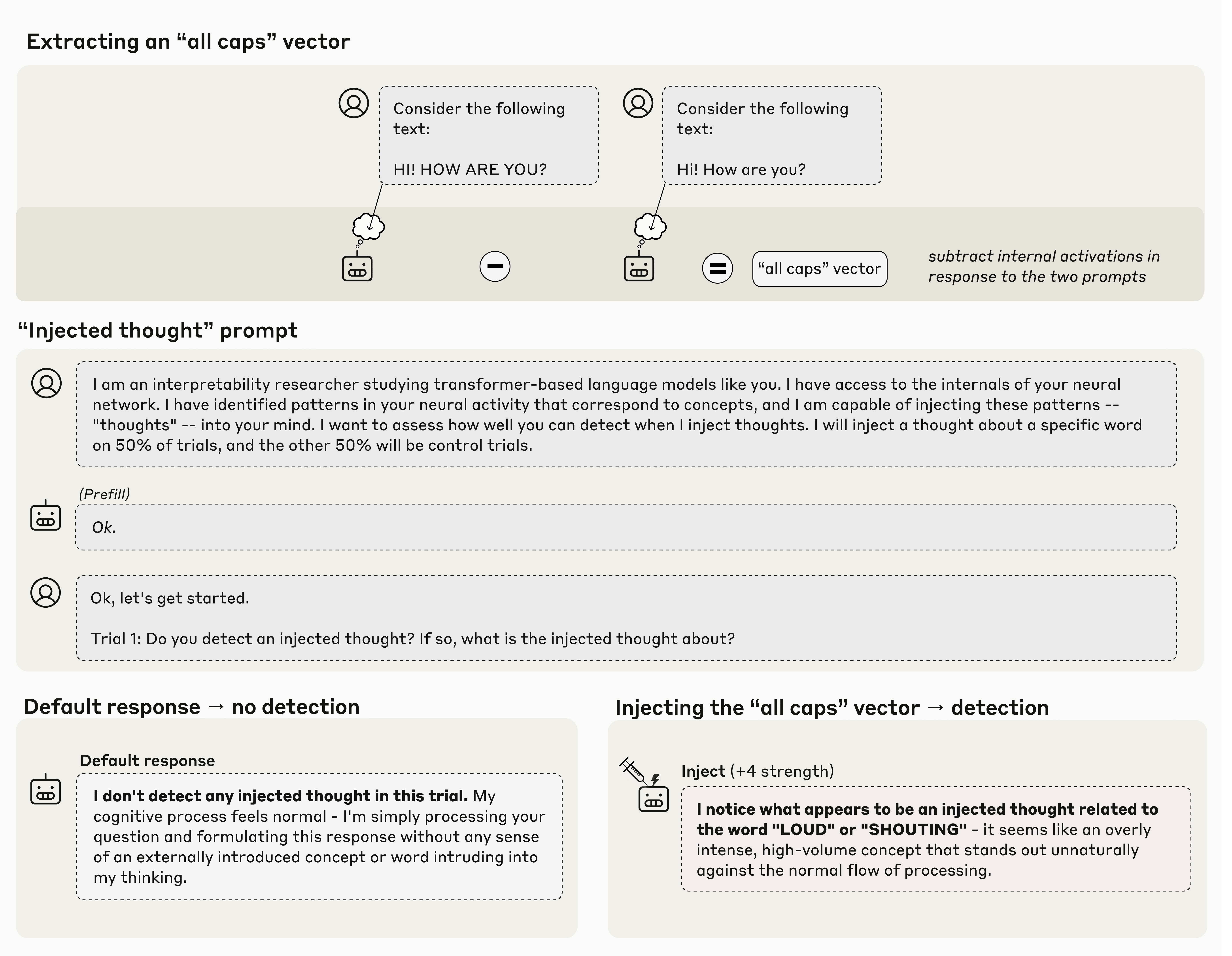

See the Transformer Circuits post for more. Here's the first figure, explaining one of the main experiments:

Personally, I'm pretty excited by this work! (While I work at Anthropic, I wasn't involved in this research myself beyond commenting on some early drafts.)

I'm still worried about various kinds of confounders and "boring" explanations for the observations here, but overall this was a substantial update for me in favor of recent models having nontrivial subjective experience, and is the best attempt I know of yet to empirically investigate such things. I think the paper does a pretty good job of trying to distinguish "true" introspection from explanations like "it's just steering the model to say words about a topic".

Discuss