Published on October 24, 2025 5:22 PM GMT

Conor Griffin interviewed Been Kim and Neel Nanda and posted their discussion here. They touch on a series of important questions about what explaining AI systems should look like, the role of mechanistic interpretability, the problems with solving explainability through chain-of-thought reasoning, and using AIs to produce explanations. I collected some highlights that I found relevant for work in interpretability and alignment, but also for anyone who wants to have some context on explanation in current AI research.

1. The goals of the researcher matter

Neel talks about what motivates explainability research. I think that we don't ask the question "why do you want to pursue this research agenda" enough (even within theory-of-change-oriented and impact-obsessed circles). Now, one may be worried that this will have a paralyzing effect. That is, if you keep zooming out to see the big picture, you will never do any object-level work, and you will never finish or even begin a single project. But it is nevertheless essential to be able to understand the goals and the specific motivations that drive a research agenda. Neel mentions some possible goals. The first, and the one that motivated his work, is AGI safety, i.e., how to make sure that AI systems can be controlled in the future, especially the most powerful ones. Second, how to avoid the harms that current AI systems can cause, for example, phenomena of algorithmic bias. And three, the pure scientific motivation, uncovering the nature of intelligence by studying AI models. Been and Neel then both agree that part of their research is aimed at empowering users. Explainability, in other words, should make AI systems intuitive so that non-experts can engage with them and know what they're doing.

The theory-of-science comment here is that there are all sorts of incentives that have historically motivated scientific research. Some of them we may never even be able to trace after all, but as a general practice, and especially if there is an urgency to improve alignment research faster, it would be helpful to at least try to be transparent about the goals of the one in need of an explanation.

2. Mechanistic interpretability for AGI safety

What is mechanistic interpretability, and why is it especially useful for AGI safety? First of all, why call it "mechanistic"? Rumor has it that the only difference between mechanistic interpretability and just interpretability is that if you use the former, you're probably just signaling your EA/AI safety/rationality affiliation. But once you get a bit more context[1] on the history of the term "mechanistic" and read this interview, it becomes clear that this is not the reason why.

Mechanistic interpretability is the equivalent of biology or neuroscience for AI brains. In other words, we're trying to find the mechanisms that produce a model's outputs. Even when we have the basic mathematical architecture, or we can see lists of numbers, which is, in a sense, what the model is, we don't really know how to interpret them. And that's the role of a mechanism: to tell us what the causal connection between different parts of a system looks like so that we don't merely see disconnected pieces (numbers in this case).

Neel gives an example for thinking of this in terms of biology, where discovering intermediate states within a model is very hard, but at the same time, it is a level of transparency that will prevent highly capable systems from taking over. So there is something deep about the mechanistic aspect of interpretability: it is meant to find relations of cause and effect, just like in biological systems.

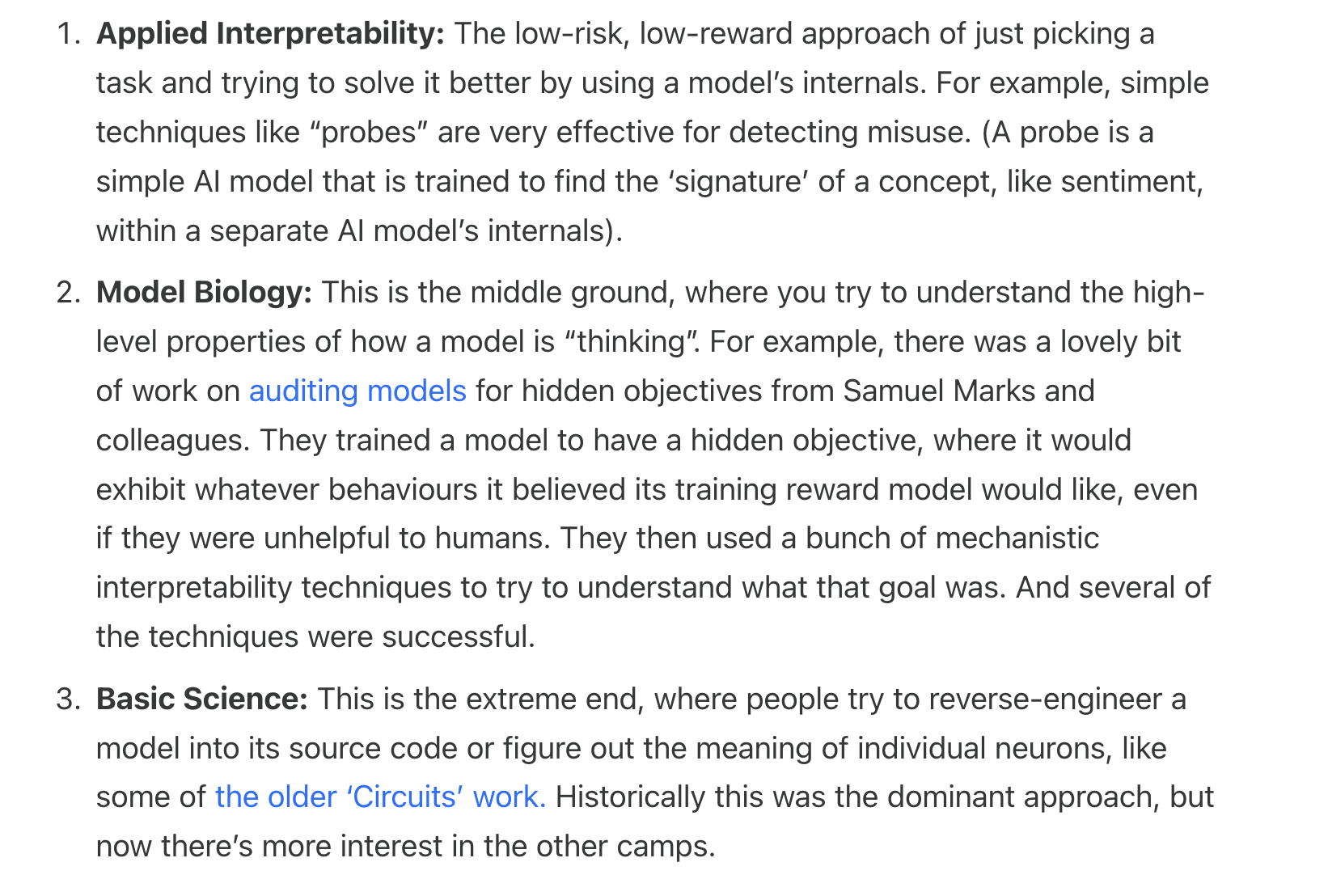

3. An interpretability taxonomy

I'm pasting this directly from the interview; I think it's helpful to put some labels and taxonomize research directions, even if doing so doesn't fully capture everyone's work to their satisfaction. I think that more opinionated taxonomies would be a good way to argue for one's research and perhaps even reveal their goals naturally.

4. Do SAEs make systems safer?

There was a time of extraordinary enthusiasm about SAEs, but this is so 2024, as Neel admits. The problem researchers found was that even though some of the examples of applying SAEs are very useful, they are not the game-changer that the community thought they were. For cases of detecting harmful behavior, it seems that simpler techniques will do a fine job.

The neuroscience-versus-interpretability comment is that SAEs promise to identify sparse interpretable representations of neural activity that correspond to semantic features. By analogy, in neuroscience, there are statistical models that map between brain activity patterns, for example, fMRI data and stimulus features or semantic dimensions. Evaluating the effectiveness of these is a topic for a different post, so I leave it open for now. I just wanted to emphasize the big-if-true side of SAEs potentially succeeding as their neuroscience counterpart remains a major open project in itself.

You can find Google DeepMind's negative results on SAEs post here.

5. Why invest in interpretability?

In the case of AI systems, we need explanations not just because we are interested in uncovering how the systems work, but because we're trying to create robust techniques that will serve as tools for steering models or detecting potentially dangerous behaviors. As interpretability evolves, it should be, according to Neel, one of the tools available, but importantly, not the only one, if we're concerned about the risk that comes with deploying increasingly more powerful systems. I'm speculating that this is because the nature of mechanistic interpretability explanations is such that it doesn't cover all possible failure modes, as one would expect.

By analogy, one may think that even if we had a full understanding of how biological brains work internally, we might still want to use behavioral tools when it's pragmatically the better choice to go with. For example, it might be that modeling an agent through the intentional stance remains a lower-cost approach for predicting behavior. In a future of complete human brain interpretability, we might still opt for applying the intentional stance to interact with others rather than leveraging sophisticated neuroscience techniques.

This, of course, is not an argument against investing in interpretability; on the contrary, it's a suggestion that combining tools that work well independently might be our path to success, depending on other conditions that will apply in that possible future.

6. Is chain-of-thought an explainability technique?

The obvious usefulness of chain-of-thought reasoning is that the model will use the equivalent of a scratchpad to record all of its strategic thoughts there. Presumably, executing a complicated and potentially dangerous task will require multiple steps and the model will have them on that scratchpad. The overseers will read it and prevent the model from whatever bad thing it was intending to do. If we get to a point where a model can do the dangerous thing without the overseer being able to read the scratchpad, then we're probably already at the stage where a model would be able to do the dangerous thing regardless of the overseer accessing that scratchpad, meaning that we will already be beyond a certain capabilities threshold.

There are some issues with this, however. Depending on the capabilities of the system, it may or may not be necessary for it to use a scratchpad, even for complicated tasks, by human standards. Been also argues that AI systems think completely differently from humans, and so we shouldn't assume that they package information the way humans do in human language. This sounds to me like an alien minds type of argument (see Shanahan, 2024). Even if some of our tools have predictive power, these are very much unlike human minds, in ways we probably can't even point to at present. Hence, we should always assume that our tools are limited.

7. Is explaining with AI a paradox?

We have reached that point where LLMs are used for explaining and answering questions while they are themselves not explainable, at least not sufficiently. They then go on to talk about a new paradigm of agentic interpretability, where by keeping the model as an active participant in the conversation, the user tries to steer it such that the system is incentivized to reveal more of how it works.

Been suggests thinking of the user-LLM interaction as a teacher-student relationship. The teacher does a better job when they know what it is that the student does not know. By analogy, LLMs can have a mental model of the user, and this is something that we essentially hand in to them by way of interacting with them. If they have an accurate mental model, they can help us understand better. The key advantage of agentic interpretability is that even if you don't understand everything about the model as a user, you have some control over the conversation so that you can elicit what's most useful to you in each case.

My AI safety comment here is that this kind of explainability seems to assume at least some degree of human-AI alignment, where the model is incentivized to give the user what's truly useful to them, and not, for example, be sycophantic or misleading or have other processes taking place under the hood while keeping the human busy and entertained. So, in that sense, it does not sound like a strong explainability pathway for safety-relevant purposes without granting a certain degree of alignment first. I'm especially imagining a sufficiently capable system that rather than taking the teacher-student relationship, acts more as a corrupt politician who tries to convince you to give them your vote while working on their own projects behind your back and against your interests. The interaction may be giving you the illusion that they care about you, that they want to provide you with information and context, and that they're taking the time for you to engage in conversation with them, while their true intentions are completely different.

One last comment (from the theory of science POV)

One of the ways I like to think of the alignment problem is in terms of explanation. Specifically, what are the kinds of explanations that we would need to have to say that we understand a given system sufficiently to steer it in a human-compatible direction? I find that this is a good question to ask, both when thinking separately about techniques and their effectiveness, for example, chain-of-thought, and when thinking about combining different techniques and methodologies, and for zooming out to see the big picture of the alignment problem.

Despite this being an interview with successful interpretability researchers, it is implied that interpretability is not the single ultimate pathway to explaining these systems. This can have a pretty pessimistic interpretation for how likely it is for safety to go well, but I focus on the positive: both researchers have a very pragmatic attitude towards finding what would actually work, even if it's counterintuitive or outside their main agenda.

Regardless of the techniques used and the specifics of any given project, the field would likely benefit from more systematic attempts to clearly articulate what kind of explanation is given through any result or theoretical framing.

- ^

I'm not giving that context here, but the Stanford Encyclopedia of Philosophy entries on scientific explanation are a good start.

Discuss