Published on October 24, 2025 2:03 AM GMT

Overview:

There is an interesting mechanism GPT-2 seems to use to measure distances between duplicate tokens. The mechanism reminds me a lot of Twisted pair cabling in communications.

The mechanism is fiddly to explain in context, so i've tried to abstract out most of the details, and give a clean toy version of the mechanism. I think some of the structures GPT-2 develops for this mechanism could be used in other contexts than computing distances.

Setup:

We have the set of points {xi}ni=0∈Rd⊂Rdmodel, with xi=f(i/n) where f:[0,1]→Rd is a smooth function. We take as input xi, xj with |i−j|≤k<<n . We want to construct a transformer which can estimate |i−j| given xi,xj. For this transformer, we additionally assume that d is relatively small compared to the embedding dimension dmodel.

The mechanism:

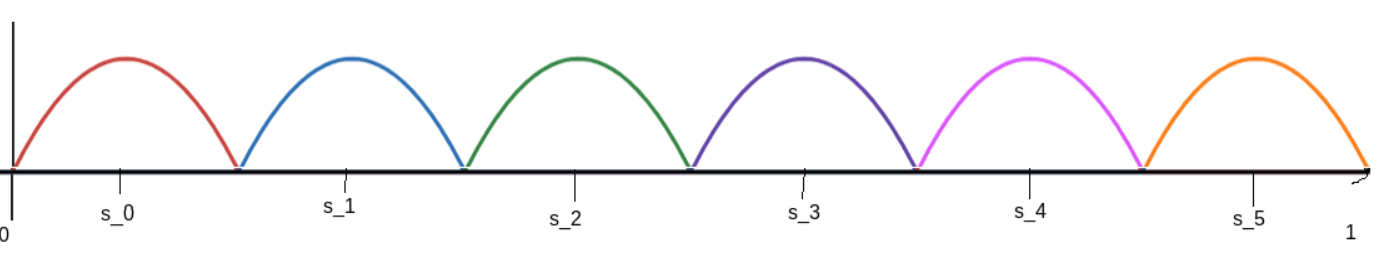

We set up M+1<n "sentry points" {si}Mi=0 uniformly along [0,1]. And we define g:[0,1]→[0,M]∩Z sending t∈[0,1] to the index of the closest sentry point.

Then we have, ||xi−xj−(in−jn)f′(sg(in))||2=||∫injnf′(t)−f′(s)dt||2≤L(12M+|i−j|n)⋅|i−j|n

where L is such that ||f′(x)−f′(y)||2≤L|x−y| for all x,y∈[0,1].

So |(xi−xj)⋅f′(sg(in))||f′(sg(in))||22−(i−j)|≤||∫injnf′(t)−f′(s)dt||2||f′(sg(in))||2≤L||f′(sg(in))||2(12M+2+|i−j|n)⋅|i−j|n.

Therefore if we can approximate (xj−xi)⋅f′(sg(in))||f′(sg(in))||22, then we can approximate j−i.

Attention mechanism:

If we are given a two token input to the transformer xj, xi, then assuming that d≤dhead, two attention heads is sufficient to compute xj−xi (have one head which outputs -xi, and the other xj). We write xj−xi to an orthogonal subspace from xi so that the MLP can cleanly access xi later.

MLP mechanism:

The MLP mechanism consists of 2M+2 neurons, with a pair of two neurons associated with each sentry point.

For each sentry point sm∈{0,1M+1,2M+1,…,1}, we define a neuron pair:

Neuron+m=ReLU(bm,1f(sm)⋅xi+bm,2+bm,3(xj−xi)⋅f′(sm))

Neuron−m=ReLU(bm,1f(sm)⋅xi+bm,2−bm,3(xj−xi)⋅f′(sm))

where Wm, bm,l are tuned so that bm,1f(sm)⋅xi+bm,2>ϵ when m=g(in), and bm,1f(sm)⋅xi+bm,2<0 otherwise. Additionally we set up bm,3(xj−xi)⋅f′(sm) so that it has a magnitude of less than ϵ when |i−j|≤k.

Setting up these sentries relies on f not coming close to intersecting itself, so that the dot product with f(sm) is only high on a connected interval.

We wrote xj−xi in an orthogonal subspace to xi so the sentry neurons can all be written in the standard form ReLU(wth0+b) where h0 is the residual stream post attention.

We then output Cmbm,3(Neuron+m-Neuron−m)v from the mth neuron pair.

Under this construction Neuron+m and Neuron−m always activate at the same time as each other, so the output of the mth neuron pair is 0 if m≠g(in), and Cm(xj−xi)⋅f′(sm)v if m=g(in).

Since only a single neuron pair activates at once, the complete output of this MLP layer is Cg(in)(xj−xi)⋅f′(sg(in))v.

Then setting Cg(in) proportional to 1||f′(sg(in))||22, we get an output proportional to (j−i)v

Extensions of mechanism:

The above mechanism is clean, and captures the key ideas. A nice thing about the mechanism is that the f(sm)⋅xi term can be noisy, but it doesn't matter because the common noise will get cancelled out, similar to twisted pair encoding.

However, there can still be potential issues caused by noise at the transitions between sentries. It is also not robust to f intersecting itself, and the number of sentries required grows with n.

There are cases where this kind of two neuron interference cancellation could come in useful outside of computing distance. For example, if you want to distinguish between British and Canadian English, you could have:

Neuroncanada=ReLU(Commonwealth+ϵ(Canadian))

Neuronbritish=ReLU(Commonwealth+ϵ(British))

And then take the difference between the two. The interference that would usually make it difficult to distinguish between the two very similar dialects gets cancelled out.

Though this is probably just PCA??

Preliminary GPT-2 specific mechanism:

GPT-2 seems to adopt a similar mechanism to the mechanism discussed above when calculating distances between the positions of duplicate tokens, but with a couple differences. Firstly, GPT-2 has multiple sentries active at once, which the mechanism above simplifies away. Secondly, instead of using sentries based on the value of f(in), it learns an f where it can cheaply compute an approximation for f′.

GPT-2's positional embedding matrix is a helix shows that positional embeddings lie on a helix. A property of helices is that we have f′=Wf+c, which means that we can compute f′(in)=yi=Wxi+c just by using an attention head to apply a linear transformation. Of course GPT-2's positional embeddings don't precisely lie on a helix, and this all holds up to approximation.

We can then set up sentries that use f′(sm)⋅yi instead:

Neuron+m=ReLU(bm,1f′(sm)⋅yi+bm,2+bm,3(xj−xi)⋅f′(sm))

Neuron−m=ReLU(bm,1f′(sm)⋅yi+bm,2+bm,3(xj−xi)⋅f′(sm))

Because the positional embeddings of GPT-2 lie on a helix, f′ is periodic (f(t)=(t,cos(t),sin(t)) isn't periodic, but f′(t)=(1,−sin(t),cos(t)) is). This allows for the reuse of sentries across distant positions, and so we don't need to scale sentries with n necessarily.

Discuss