Published on October 21, 2025 9:08 AM GMT

This post was written by Cansu Kutay and is cross-posted from our Substack. Please read the description of this sequence for the context in which it was written.

Introduction and definitions

Anthropomorphism is “the attribution of human form, character, or attributes to non-human entities”. The concept has long accompanied human efforts to understand and replicate intelligence. In the context of AI, the tendency shows up as a human-centred approach to how AI is designed, interpreted, and perceived. This post examines how such anthropomorphic perspectives both enable and constrain our technological imagination of AI.

I finally found the motivation to put my thoughts on this topic onto paper (a Google Doc) after watching “How Plants Think & What It Teaches Us About Consciousness”, a YouTube documentary exploring plant intelligence. The narrator suggests that our understanding of what “learning” or “intelligence” means for plants is limited by our human lens; perhaps plants think in ways we cannot fathom because we have never experienced or felt them. The same idea applies well to AI: we can only understand it through our own lived, subjective experience. Entirely different forms of cognition or intelligence might exist beyond the bounds of what we can currently conceive. Just as we may not be able to grasp the plant experience, we may not truly understand how algorithms “perceive” or “learn” either. A comment on the video really stuck with me: “There could be entire layers of perception and experience that we can’t even fathom, simply because they don’t fit within our narrow sensory framework.”

Anthropomorphism is therefore both inevitable and limiting. We can only perceive reality through our own human faculties, so it makes sense that we project them onto other (intelligent) beings. This perspective, however, constrains both our creativity and our scientific method.

Of course, it is hard to conceive of, let alone understand, any sort of intelligence or thinking that isn’t our own. Perhaps the path forward is to use AI to help us overcome this: by creating artificially intelligent beings, we can challenge our biases and use their emergent behaviours to illuminate new, non-human modes of cognition.

Anthropomorphism in AI

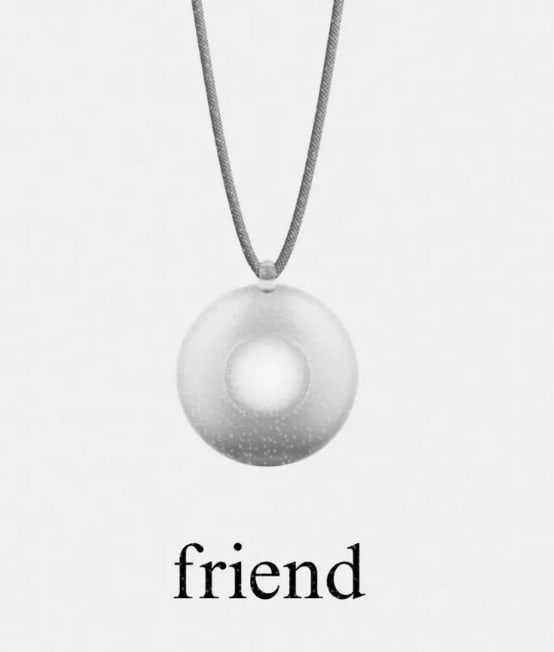

Anthropomorphism manifests in AI in multiple ways. A study by Xie et al. groups anthropomorphic features into visual cues (human-like structures, such as a humanoid robot), identity cues (human names or identities, such as “ELIZA” or “Friend”), auditory cues (human voices, such as ChatGPT’s voice or smart home assistants) and, finally, emotional cues (displays of empathy or humour in chatbots). Figure 1 shows an example of each, in the same order. Beyond these cues, I think the language surrounding AI also reinforces its anthropomorphism. Terms such as “thinking”, “reasoning” or “memory” anthropomorphize what are, in reality, statistical and probabilistic operations. This linguistic framing recasts AI as something human-like, implying consciousness, capabilities and agency that it does not possess.

Figure 1: Examples of Anthropomorphism Cues

Anthropomorphism in AI Design: Benefits and Drawbacks

From a design perspective, anthropomorphism is not inherently negative. According to Xie et al., anthropomorphism was established as a good design principle in early human-computer interaction. It makes AI more usable, understandable and accessible, and it increases user satisfaction when AI closely resembles something familiar to them: other humans. When anthropomorphic design principles are used, tools are more intuitive to us. For example, we know how to prompt chatbots for the best possible answer because we can draw on our basic understanding of how communication works. Anthropomorphic design in AI tools also encourages more interaction, trust and emotional engagement from users. This is partly why ChatGPT is so popular: it uses emotional cues (humour, empathy) to boost engagement. It turns out that humans love having their views validated and receiving encouraging words. Hearing “Excellent question 🎉” after asking “Is today Wednesday?” apparently really scratches the itch for some people…

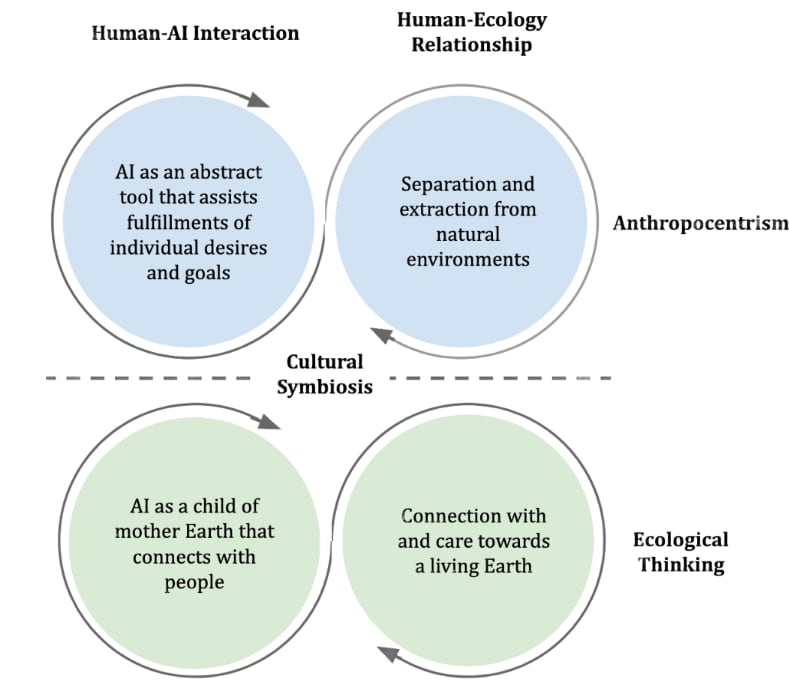

But a human-centric approach to AI design also has limitations. First and foremost, it constrains innovation by setting human-like models of the mind, such as consciousness, as goals for AI development. AI research tends to aim at replicating the human mind and human intelligence rather than reimagining what intelligence could mean in a technological form. It also risks missing unique pathways inspired by non-human systems (such as plant cognition, collective intelligence or mycelial networks). Xu and Ge reimagine what AI design might look like if it were grounded in ecological thinking instead of anthropomorphism; Figure 2 shows their idea of cultural symbiosis. They argue that approaching the development and understanding of AI this way could make AI care for both humans and the Earth, rather than serving merely individual goals.

Figure 2: Anthropocentrism versus ecological thinking in human-AI and human-ecology relations

Interpreting emergent behaviours from an anthropomorphic perspective may also be preventing us from understanding AI, and may be part of why so much of its behaviour still seems to come from a “black box”. Our interpretive framework relies on familiar metaphors that may not apply to machine intelligence at all. The anthropomorphic lens, while inevitable, may stop us from discovering revolutionary designs that are radically different from anything we currently understand.

I think one area where we are misplacing our attention and research is the push to create “conscious” AI. This is an example of a human concept projected onto machines. There is no justified functional requirement for consciousness in most AI applications, and the moral dilemmas it would bring are not worth the value it would add to AI systems. I am very open to arguing about this stance in the comments, so feel free to challenge it!

Some tech accelerationists go further and argue that we should abandon anthropocentric bias altogether, because humanity’s next evolutionary step is to create a better, bigger form of intelligence to take our place. On this view, we should not think so highly of ourselves and should give way to the next logical step: the singularity and machine intelligence. A blog post that discusses this perspective is listed in the sources below.

How this influences interactions

Anthropomorphism also influences how humans interact with AI. Many users refer to chatbots with personified pronouns and language, calling a chatbot “he” or “she” rather than “it” and saying things like “he told me Y”. This intrigues me because I consciously avoid such language, to remind myself and the people around me who are less familiar with AI that it is a tool. Surrounding AI with personified language encourages misplaced trust, inflates expectations, and leads to moral confusion. When AI developers use this language, they mislead users about AI’s capabilities and the place it should have in their lives. On the users’ side, such language only strengthens their bond with what is a non-human, non-reasoning language prediction model. In her essay, Placani describes anthropomorphism as a fallacy that distorts our moral judgements about AI systems. Misjudging AI because we perceive it as more than it is can confuse us about whom to blame in certain situations, lead us to misplace trust, and distort our expectations of its capabilities.

Additionally, by satisfying our emotional needs through simulated empathy, we may degrade human connection. If AI can convincingly mirror care or companionship, can that substitute for the real thing? Does anthropomorphism actually make for better AI tools? The answers depend on what you place intrinsic value on and what role you want AI to play in your life. My unsolicited opinion is that AI is a tool to enrich the human experience, not to replace it. I value the complexity and imperfection of human relationships, and I believe AI’s strength lies in sustaining humanity.

Conclusion

Anthropomorphism, an inevitable consequence of being human, brings both benefits and drawbacks to anything we design. It bridges the gap between human and machine and makes technology accessible and engaging, but it also narrows our imagination and deepens our conceptual biases. To move forward, we should aim for a balance: grounded in human understanding, but open to different forms of intelligence. Only when we stop insisting that intelligence must look or feel like us will we begin to grasp what intelligence, in its most diverse and non-human forms, might truly mean.

Sources mentioned:

Video: How Plants Think & What It Teaches us about Consciousness | Infinite Now Ch17

Xie, Y., Zhu, K., Zhou, P., & Liang, C. (2023). How does anthropomorphism improve human-AI interaction satisfaction: A dual-path model. Computers in Human Behavior, 148. https://doi.org/10.1016/j.chb.2023.107878

Xu, C., & Ge, X. (2024). AI as a child of mother earth: Regrounding human-AI interaction in ecological thinking. Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, 1–9. https://doi.org/10.1145/3613905.3644065

https://www.andrewcoyle.com/blog/maybe-the-machines-should-win

Placani, A. (2024). Anthropomorphism in AI: Hype and fallacy. AI and Ethics, 4(3), 691–698. https://doi.org/10.1007/s43681-024-00419-4
