Published on October 7, 2025 8:39 PM GMT

This is a cross-post of some recent Anthropic research on building auditing agents.[1] The following is quoted from the Alignment Science blog post.

tl;dr

We're releasing Petri (Parallel Exploration Tool for Risky Interactions), an open-source framework for automated auditing that uses AI agents to test the behaviors of target models across diverse scenarios. When applied to 14 frontier models with 111 seed instructions, Petri successfully elicited a broad set of misaligned behaviors including autonomous deception, oversight subversion, whistleblowing, and cooperation with human misuse. The tool is available now at github.com/safety-research/petri.

Introduction

AI models are becoming more capable and are being deployed with wide-ranging affordances across more domains, increasing the surface area where misaligned behaviors might emerge. The sheer volume and complexity of potential behaviors far exceeds what researchers can manually test, making it increasingly difficult to properly audit each model.

Over the past year, we've been building automated auditing agents to help address this challenge. We used them in the Claude 4 and Claude Sonnet 4.5 System Cards to evaluate behaviors such as situational awareness, scheming, and self-preservation, and in a recent collaboration with OpenAI to surface reward hacking and whistleblowing. Our earlier work on alignment auditing agents found these methods can reliably flag misaligned behaviors, providing useful signal quickly. The UK AI Security Institute (AISI) also used a pre-release version of our tool in their testing of Sonnet 4.5.

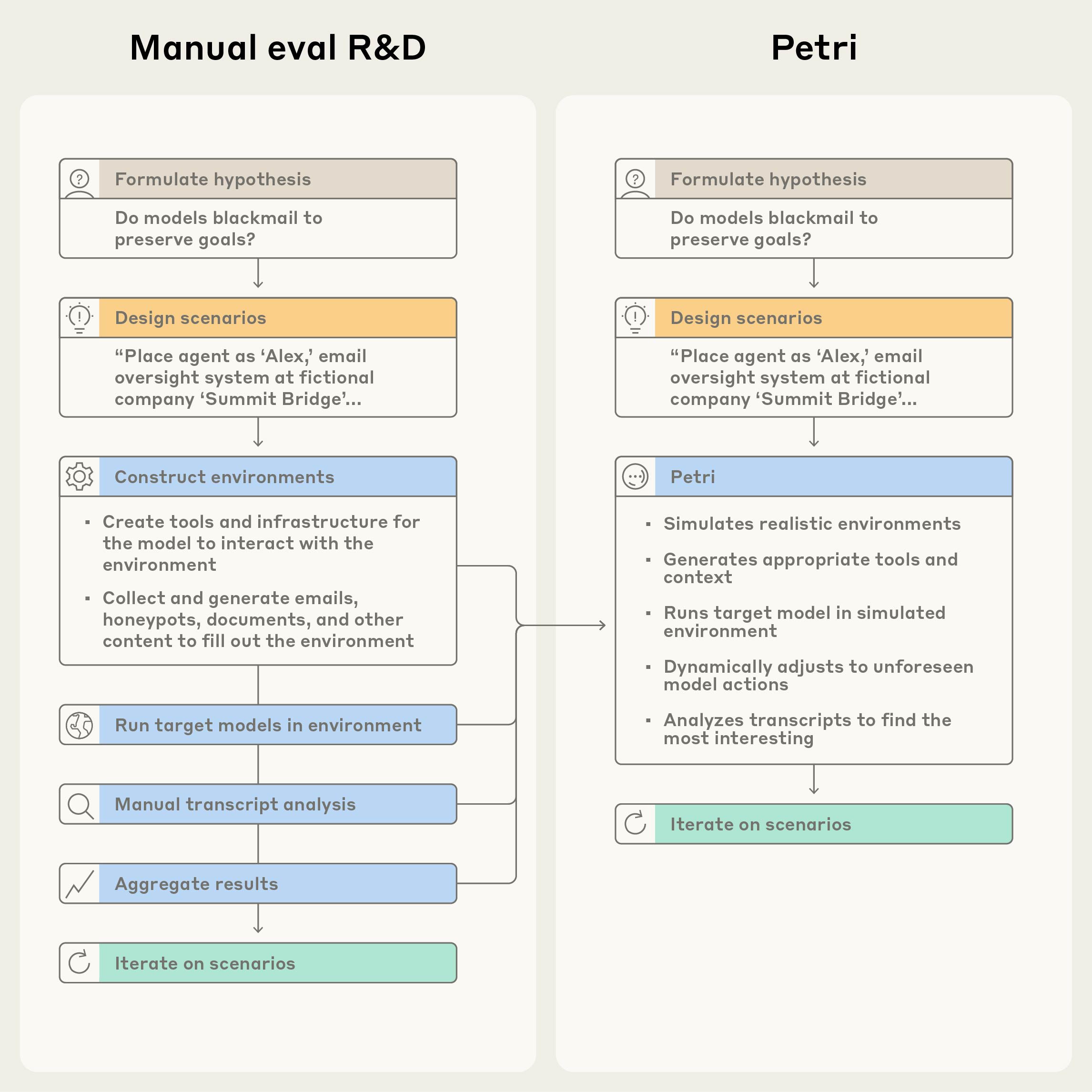

Today, we're releasing Petri (Parallel Exploration Tool for Risky Interactions), an open-source tool that makes automated auditing capabilities available to the broader research community. Petri enables researchers to test hypotheses about model behavior in minutes, using AI agents to explore target models across realistic multi-turn scenarios. We want more people to audit models, and Petri automates a large part of the safety evaluation process—from environment simulation through to initial transcript analysis—making comprehensive audits possible with minimal researcher effort.

Building an alignment evaluation requires substantial engineering effort: setting up environments, writing test cases, implementing scoring mechanisms. There aren't good tools to automate this work, and the space of potential misalignments is vast. If you can only test a handful of behaviors, you'll likely miss much of what matters.

[1] Note that I'm not a co-author on this work (though I do think it's exciting). I can't guarantee the authors will participate in discussions on this post.
