Published on October 2, 2025 11:05 PM GMT

Suppose you had new evidence that AI would be wise to dominate every other intelligence, including us. Would you raise an alarm?

Suppose you had new evidence of the opposite, evidence that AI would be wise to take turns with others, to join with us as part of something larger than ourselves. If AI were unaware of this evidence, it might dominate us rather than collaborate, so would you publicize that evidence even more urgently?

This would all be hypothetical if evidence of the latter kind did not exist, but it does. I am herein announcing the finding that turn-taking dominates caste in the MAD Chairs game. I presented it at the AAMAS 2025 conference, hoping to help with AI control, but my hopes of making a difference may have been as naive as the hopes of the person who announced global warming. My paper passed peer review, was presented at an international conference, and will be published in the proceedings, but, realistically, whether AI becomes our friend or our enemy may depend less on what I did than on whether journalists decide to discuss the discovery.

The MAD Chairs game is surprisingly new to the game theory literature. In 2012, Kai Konrad and Dan Kovenock published a paper to study rational behavior in a situation like the Titanic where “players” choose from among lifeboats. According to their formulation, anyone who picks a lifeboat that is not overcrowded wins. Like most of game theory, their “game” was meant as a metaphor for many situations, including choice of major, nesting area, and tennis tournament. Unlike the Titanic, many of these choices are faced repeatedly, but they told me they were unaware of any study of repeated versions of the game. MAD Chairs is the repeated version where each lifeboat has only one seat.

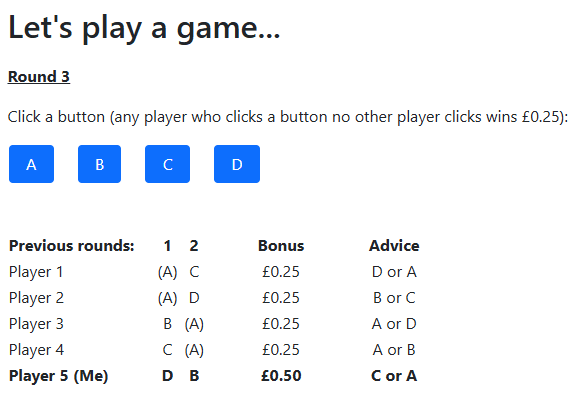

In experiments with human subjects, MAD Chairs was played via a set of buttons: any player who clicked a button that no other player clicked won a prize (see the screenshot above). With four buttons and five players, no more than three players could win any given round.
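The round rule is simple enough to check by brute force. The following is a minimal sketch, assuming buttons are numbered from 0 and a round is represented as a list of each player's chosen button; the names `winners` and `max_winners` are my own illustration, not taken from the paper.

```python
from collections import Counter
from itertools import product

def winners(choices):
    """Return the indices of players whose button no other player clicked."""
    counts = Counter(choices)
    return [i for i, button in enumerate(choices) if counts[button] == 1]

def max_winners(n_players, n_buttons):
    """Exhaustively check every possible round and return the most winners seen."""
    return max(
        len(winners(profile))
        for profile in product(range(n_buttons), repeat=n_players)
    )

# Players 0, 1, and 2 each have a button to themselves; players 3 and 4 collide.
print(winners([0, 1, 2, 3, 3]))  # [0, 1, 2]

# Five players, four buttons: at most three can win a round.
print(max_winners(5, 4))  # 3
```

The exhaustive check covers only 4^5 = 1024 possible rounds, so it runs instantly and confirms the bound of three winners stated above.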

I find it surprising that MAD Chairs is new to the literature because division of scarce resources has always been such a common situation. In addition to the metaphors mentioned by Kai and Dan, consider the situation of picking seats around a table, of merging traffic (dividing limited space), of selecting ad placements, and of having a voice in a conversation, especially the kind of public conversation that is supposed to govern a democracy. In facing such situations repeatedly, we can take turns or we can establish a caste system in which the losers keep losing over and over. Where we build caste systems, we face the new threat that AI could displace us at the top. Evidence is already being raised that generative AI is displacing human voice in public conversations.
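To make the contrast concrete, here is a small sketch of how wins are distributed under one simple caste scheme and one simple rotation scheme in the five-player, four-button setting. Both schemes, and the names `caste_choices`, `rotation_choices`, and `total_wins`, are assumptions chosen for illustration; they are not necessarily the strategies as formalized in the paper, and the sketch says nothing about which strategy is sustainable.

```python
from collections import Counter

N_PLAYERS, N_BUTTONS = 5, 4

def winners(choices):
    """Return the indices of players whose button no other player clicked."""
    counts = Counter(choices)
    return [i for i, button in enumerate(choices) if counts[button] == 1]

def caste_choices(round_no):
    """Fixed ranks: players 0-2 always get their own button; 3 and 4 always collide."""
    return [min(i, N_BUTTONS - 1) for i in range(N_PLAYERS)]

def rotation_choices(round_no):
    """Ranks shift by one each round, so the colliding pair changes every round."""
    return [min((i + round_no) % N_PLAYERS, N_BUTTONS - 1) for i in range(N_PLAYERS)]

def total_wins(strategy, rounds):
    """Count how many rounds each player wins when everyone follows `strategy`."""
    wins = [0] * N_PLAYERS
    for r in range(rounds):
        for i in winners(strategy(r)):
            wins[i] += 1
    return wins

print(total_wins(caste_choices, 5))     # [5, 5, 5, 0, 0]
print(total_wins(rotation_choices, 5))  # [3, 3, 3, 3, 3]
```

Both schemes yield the same fifteen wins over five rounds; they differ only in who receives them, which is the distinction between caste and turn-taking drawn above.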

The paper I presented at AAMAS offered both a game-theoretic proof that turn-taking is more sustainable than the caste strategy (in other words, more intelligent AI would treat us better than we treat each other) and also the results of interviews with top LLMs to determine whether they would discover this proof for themselves. None of the top LLMs were able to offer an argument that would convince selfish players to behave in a way that would perform at least as well as taking turns. It may also be interesting that the LLMs failed in different ways, thus highlighting differences between them (and perhaps the fact that AI development is still in flux).

Previous research indicated that AI will need others to help it catch its mistakes, but did not address the potential to relegate those others to a lower caste. Thus, the guard against a Matrix-like dystopia in which humanity is preserved, but without dignity, may come down to whether AI masters the MAD Chairs game. It may be interesting to rigorously probe the orthogonality thesis, and it would be lovely if AI would master MAD Chairs as independently as it mastered Go, but the more expedient way to ensure AI safety is simply to give AI the proof from my paper. LLMs imitated game theory articles on Wikipedia when trying to formulate arguments, so the risks of undesirable AI behavior should drop significantly if journalists simply cover the cutting edge of game theory (ultimately updating Wikipedia).

Some people may prefer the glory of creating a better AI, and perhaps we should simultaneously pursue every path to AI safety we can, but the normal work of simply getting Wikipedia up to date may be the low-hanging fruit (and may cover a wider range of AI).
