Published on October 2, 2025 6:46 PM GMT

TLDR: AI-2027's specific predictions for August 2025 appear to have come true in September 2025. The predictions were accurate, if a tad late: they missed by weeks, not months.

Reading AI-2027 was the first thing that viscerally conveyed to me how urgent and dangerous advances in AI technology might be over the next few years. Six months after AI-2027's release, I decided to check in and see how the predictions are holding up so far, what seems to be happening faster than expected, and what seems to be happening slower than expected. I'll go through the specific claims that seem evaluable, in order.

> The world sees its first glimpse of AI agents.
>
> Advertisements for computer-using agents emphasize the term “personal assistant”: you can prompt them with tasks like “order me a burrito on DoorDash” or “open my budget spreadsheet and sum this month’s expenses.” They will check in with you as needed: for example, to ask you to confirm purchases. Though more advanced than previous iterations like Operator, they struggle to get widespread usage.

This prediction is panning out. With GPT-5 and Claude Sonnet 4.5, we now have agentic coders (Claude Code, GPT-5 Codex) and personal agents that can make purchases, not yet on DoorDash, but on platforms like Shopify and Etsy. Widespread adoption doesn't seem to be here yet, but AI-2027 expected that. Arguably the authors undersold how much these agents would already be used in software work, but they didn't make any specific claims about that.

The footnotes to this paragraph make a couple of more testable claims.

> Specifically, we forecast that they score 65% on the OSWorld benchmark of basic computer tasks (compared to 38% for Operator and 70% for a typical skilled non-expert human).

Claude Sonnet 4.5 scored 62% on this benchmark as of September 29th, 2025. The target date was August; the score was nearly reached in late September. AI-2027 got agentic capabilities essentially right: one month late and three percentage points short is remarkably accurate.

SWEBench-Verified was another benchmark with a specific projection for August 2025.

> For example, we think coding agents will move towards functioning like Devin. We forecast that mid-2025 agents will score 85% on SWEBench-Verified.

Claude Sonnet 4.5 scored 82% on this benchmark as of September 29th, 2025. That is three percentage points below the 85% target and one month late, which is again remarkably close, particularly given that in August, Opus 4.1 was already scoring 80% on this benchmark.
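To put the two benchmark comparisons side by side, here is a minimal sketch in Python. The numbers are the ones cited above; the `Forecast` class and its field names are my own illustrative choices, not anything from AI-2027.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Forecast:
    """One AI-2027 benchmark forecast next to the score cited in this post."""
    benchmark: str
    predicted_pct: int  # AI-2027 forecast for mid/August 2025
    observed_pct: int   # Claude Sonnet 4.5 score cited above (Sept 29, 2025)

    @property
    def shortfall_points(self) -> int:
        # Gap in percentage points between forecast and observed score.
        return self.predicted_pct - self.observed_pct

    @property
    def fraction_of_target(self) -> float:
        # Observed score as a fraction of the forecast score.
        return self.observed_pct / self.predicted_pct


FORECASTS = [
    Forecast("OSWorld", predicted_pct=65, observed_pct=62),
    Forecast("SWEBench-Verified", predicted_pct=85, observed_pct=82),
]

for f in FORECASTS:
    print(
        f"{f.benchmark}: {f.shortfall_points} points short, "
        f"{f.fraction_of_target:.0%} of the forecast score"
    )
```

Both benchmarks land three points short, which works out to roughly 95% and 96% of the forecast scores respectively.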

The August predictions are the only ones we can fully evaluate, but we can make preliminary assessments of the December 2025 predictions.

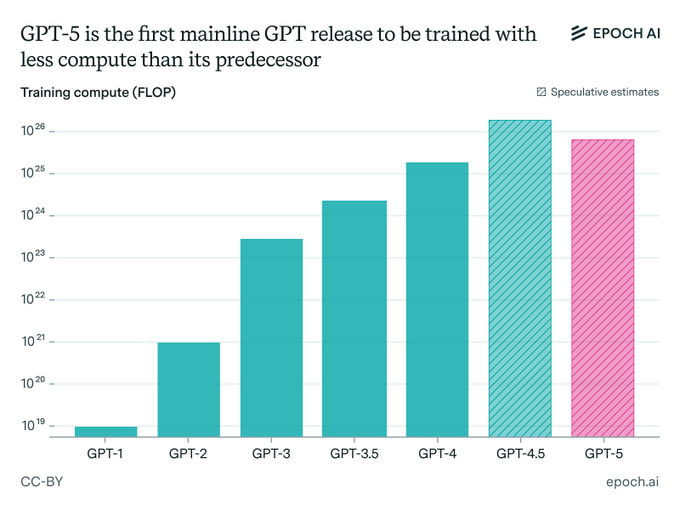

> GPT-4 required 2⋅10^25 FLOP of compute to train. OpenBrain’s latest public model—Agent-0—was trained with 10^27 FLOP. Once the new datacenters are up and running, they’ll be able to train a model with 10^28 FLOP—a thousand times more than GPT-4. Other companies pour money into their own giant datacenters, hoping to keep pace.

The Agent-0 scenario looks increasingly plausible. We now know that GPT-5 was trained with less compute than GPT-4.5. While training compute increased reasonably from GPT-4 to GPT-5, evidence suggests OpenAI has an even more capable model in development. Some version of that model will presumably be released eventually, especially given the pressure the very impressive Sonnet 4.5 release has put on OpenAI.
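As a rough check on the scale of the compute figures in the AI-2027 passage quoted above, the ratios can be worked out directly. This is a sketch using only the three FLOP numbers from that passage; the constant and function names are mine.

```python
# Training-compute figures from the AI-2027 passage quoted above (in FLOP).
# Integers are used so the ratios are exact.
GPT4_FLOP = 2 * 10**25
AGENT0_FLOP = 10**27
NEXT_MODEL_FLOP = 10**28  # the model the new datacenters could train


def multiple_of_gpt4(flop: int) -> int:
    """Return how many times GPT-4's training compute `flop` represents."""
    return flop // GPT4_FLOP


print("Agent-0 vs GPT-4:", multiple_of_gpt4(AGENT0_FLOP))        # 50
print("Next model vs GPT-4:", multiple_of_gpt4(NEXT_MODEL_FLOP))  # 500
```

Taken literally, the stated figures give 50x and 500x GPT-4's compute, so I read the scenario's "a thousand times more" as an order-of-magnitude figure rather than an exact multiple.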

The evidence: OpenAI entered an 'experimental reasoning model' into the ICPC, which is a prestigious college-level coding contest. This experimental reasoning model performed better than all human contestants, achieving a perfect 12/12 score. GPT-5 solved 11 problems on the first attempt; the experimental reasoning model solved the hardest problem after nine submissions.

The capabilities this model demonstrated may not be Agent-0 level, and it may have used less than 10^27 FLOP of training compute. But we should watch for the next OpenAI release, which could come as soon as Monday, October 6, at DevDay.

This is speculation, but it is grounded in recent announcements. Sam Altman indicated less than two weeks ago that several compute-intensive products would be released over the coming weeks, and we've already seen two such releases. The first is Pulse, OpenAI's proactive daily briefing feature, which launched on September 25 but hasn't generated much discussion yet. I'm curious what people think of it. The second is Sora 2, a significant leap forward for OpenAI in video generation and impressive enough to have drawn substantial attention: the Sora app reached #3 on the App Store within 48 hours of its September 30 release.

I suspect something bigger is planned for DevDay, though there are no guarantees, especially given Altman's track record of generating hype. It's also worth noting that last year's DevDay announcements were more practical than transformative, with o1's release coming a couple of weeks before the event. Nonetheless, it is difficult to rule out a near-term release of this improved reasoning model.

AI-2027's predictions for mid-2025 have been substantially vindicated. Progress is roughly one month behind the scenario: a lag of weeks, not months. Every prediction timed for August 2025 had essentially been realized by the end of September 2025. While I remain uncertain about fast timelines, dismissing scenarios like AI-2027 seems unwarranted given how well these early predictions have held up. These were the easiest predictions to verify, but they set a high bar, and reality met it.
