Published on October 2, 2025 4:33 PM GMT

This post was written by Sophia Lopotaru and is cross-posted from our Substack. Kindly read the description of this sequence to understand the context in which this was written.

Our lives orbit around values. They guide how we live, and we bond with other humans because of them. Yet values are also one of the main reasons we differ so much from one another. This variance in beliefs might be what prevents us from achieving Artificial Intelligence (AI) alignment, the process of aligning AI's values with our own (Ji et al., 2025). This raises a question:

Do we need human alignment before AI alignment?

In this article, we will explore the concept of values, the importance of AI alignment, and what and whose values we should be trying to align AI with.

While the concept of ‘values’ seems quite abstract, dictionaries do offer a definition. For this article, I will use the one given by the Cambridge Dictionary: “the beliefs people have, especially about what is right and wrong and what is most important in life, that control their behavior”.

Values, then, shape our existence. Society is increasingly involving AI not only in complex decision-making processes but also in very intimate areas of our lives, such as the content we consume on social media platforms. In doing so, we are turning AI into an indispensable tool. As a consequence, AI alignment is becoming necessary to prevent rogue AI (Durrani, 2024): AI systems that can no longer be controlled and that pursue goals in conflict with those of humans.

When considering this complex process, one must wonder: what values are we even trying to align AI with?

While being moral is almost universally considered inherently good, the particular forms morality takes vary across cultures. Cultural background influences both which values are passed on and how they are passed on. For example, WEIRD (Western, Educated, Industrialised, Rich and Democratic) societies tend to endorse individuality and moral codes, while non-WEIRD cultures value spiritual purity and collective responsibility (Graham et al., 2016). Countless other differences arise because our cultures have different histories. Our beliefs stem from stories told and retold, and we learn them throughout our lives from our parents and from our time spent in the world. Values vary not only across societies but also within them. Consequently, the journey of aligning AI with our values becomes a quest for humans to align their values with one another.

Culture influences human psychology, so which humans are we trying to align AI models with? The datasets used to train AI models introduce bias into the equation (Chapman University, n.d.). The problem is aggravated by the finding of Atari et al. (2023) that Large Language Models (LLMs) learn WEIRD behaviours from their WEIRD-biased datasets (a dataset developed in a WEIRD country contains WEIRD biases).

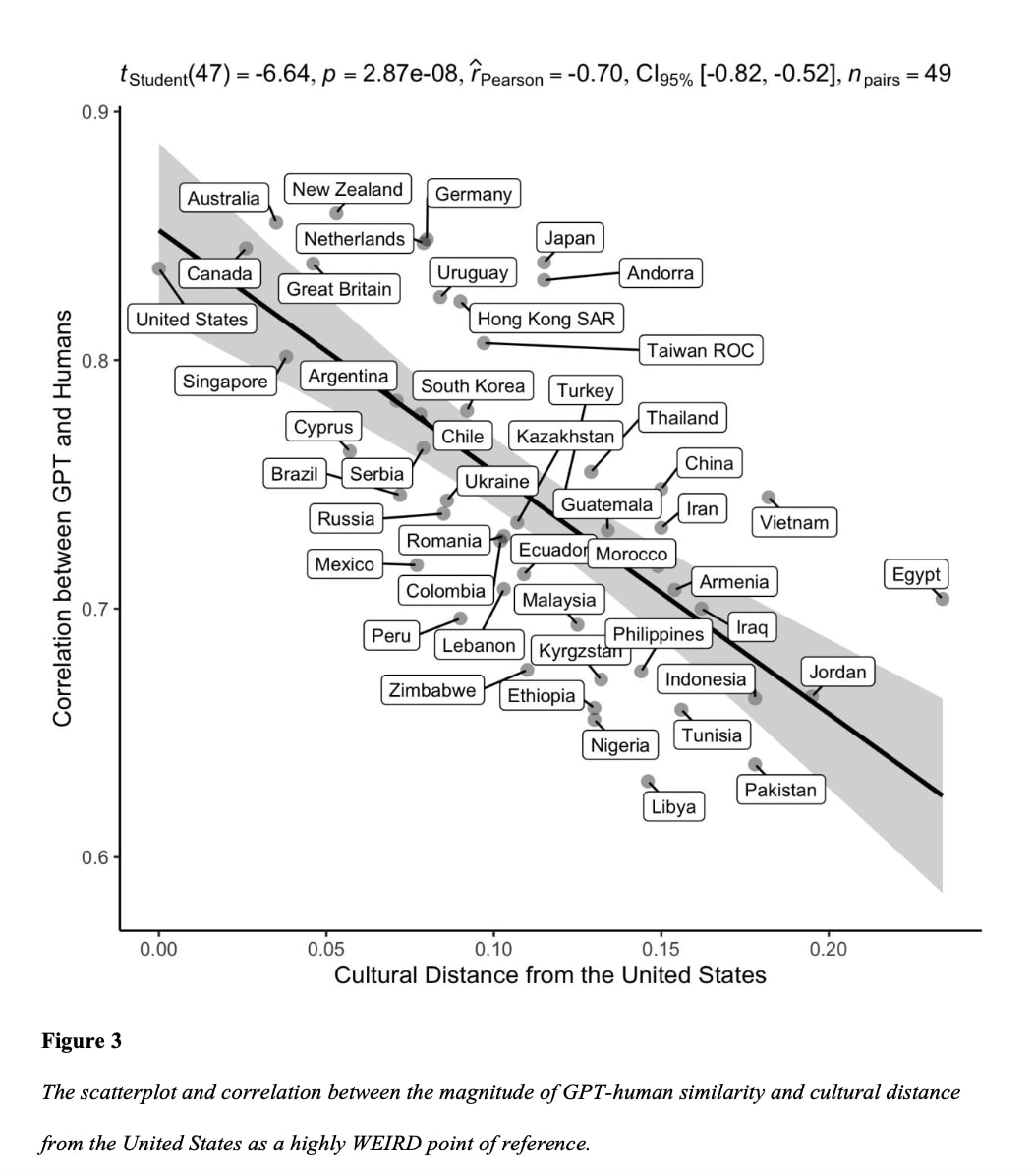

The researchers used the World Values Survey (WVS) (World Values Survey, n.d.), one of the most culturally diverse data sets, in order to examine where the values of LLMs lie in the broader landscape of human psychology. When comparing the AI’s responses to human input from all over the world, they confirmed that the model inherited a WEIRD behaviour. Figure 1 shows that GPT's alignment with human values decreases as cultural distance from the United States increases.

Figure 1 - Figure depicting the relationship between the cultural distance from the United States and the correlation between GPT and Humans (Atari et al.,2023)

These findings bring us back to our central idea: AI alignment cannot be separated from human alignment. The struggle for AI alignment is not only a technical one. It also challenges humans to change their thinking and to revisit what morality means on both an individual and a global level.

Perhaps the quest for AI alignment is a means of collectively discovering absolute human values. This could lead to globalised values: either AI adopting our values or humans adopting its values. Yet whose values would dominate? An AI aligned with authority-based values could withhold information that challenges hierarchy. An AI hiring agent aligned with WEIRD values could discriminate against candidates from non-WEIRD cultures. Even if the problem of human alignment were resolved, those who control the development of AI would ultimately shape the degree to which AI abides by these values.

The challenge of AI alignment is therefore inseparable from questions of power, culture, and morality. While the road to AI alignment might seem long and tedious, we can all help by taking the first step: asking questions and listening to each other's perspectives.

References

Atari, M., Xue, M. J., Park, P. S., Blasi, D. E., & Henrich, J. (2023). Which Humans? PsyArXiv. https://doi.org/10.31234/osf.io/5b26t

Chapman University. (n.d.). Bias in AI. Retrieved September 29, 2025, from https://www.chapman.edu/ai/bias-in-ai.aspx

Durrani, I. (2024). What is a Rogue AI? A Mathematical and Conceptual Framework for Understanding Autonomous Systems Gone Awry. https://doi.org/10.13140/RG.2.2.10613.38888

Graham, J., Meindl, P., Beall, E., Johnson, K. M., & Zhang, L. (2016). Cultural differences in moral judgment and behavior, across and within societies. Current Opinion in Psychology, 8, 125–130. https://doi.org/10.1016/j.copsyc.2015.09.007

Ji, J., Qiu, T., Chen, B., Zhang, B., Lou, H., Wang, K., Duan, Y., He, Z., Vierling, L., Hong, D., Zhou, J., Zhang, Z., Zeng, F., Dai, J., Pan, X., Ng, K. Y., O’Gara, A., Xu, H., Tse, B., … Gao, W. (2025). AI Alignment: A Comprehensive Survey (No. arXiv:2310.19852). arXiv. https://doi.org/10.48550/arXiv.2310.19852

World Values Survey. (n.d.). WVS Database. Retrieved September 29, 2025, from https://www.worldvaluessurvey.org/WVSContents.jsp
