An AI-native software engineer is one who deeply integrates AI into their daily workflow, treating it as a partner to amplify their abilities.

This requires a fundamental mindset shift. Instead of thinking “AI might replace me” an AI-native engineer asks for every task: “Could AI help me do this faster, better, or differently?”.

The mindset is optimistic and proactive - you see AI as a multiplier of your productivity and creativity, not a threat. With the right approach AI could 2x, 5x or perhaps 10x your output as an engineer. Experienced developers especially find that their expertise lets them prompt AI in ways that yield high-level results; a senior engineer can get answers akin to what a peer might deliver by asking AI the right questions with appropriate context-engineering.

Being AI-native means embracing continuous learning and adaptation - engineers build software with AI-based assistance and automation baked in from the beginning. This mindset leads to excitement about the possibilities rather than fear.

Yes, there may be uncertainty and a learning curve - many of us have ridden the emotional rollercoaster of excitement, fear, and back again - but ultimately the goal is to land on excitement and opportunity. The AI-native engineer views AI as a way to delegate the repetitive or time-consuming parts of development (like boilerplate coding, documentation drafting, or test generation) and free themselves to focus on higher-level problem solving and innovation.

Key principle - AI as collaborator, not replacement: An AI-native engineer treats AI like a knowledgeable, if junior, pair-programmer who is available 24/7.

You still drive the development process, but you constantly leverage the AI for ideas, solutions, and even warnings. For example, you might use an AI assistant to brainstorm architectural approaches, then refine those ideas with your own expertise. This collaboration can dramatically speed up development while also enhancing quality - if you maintain oversight.

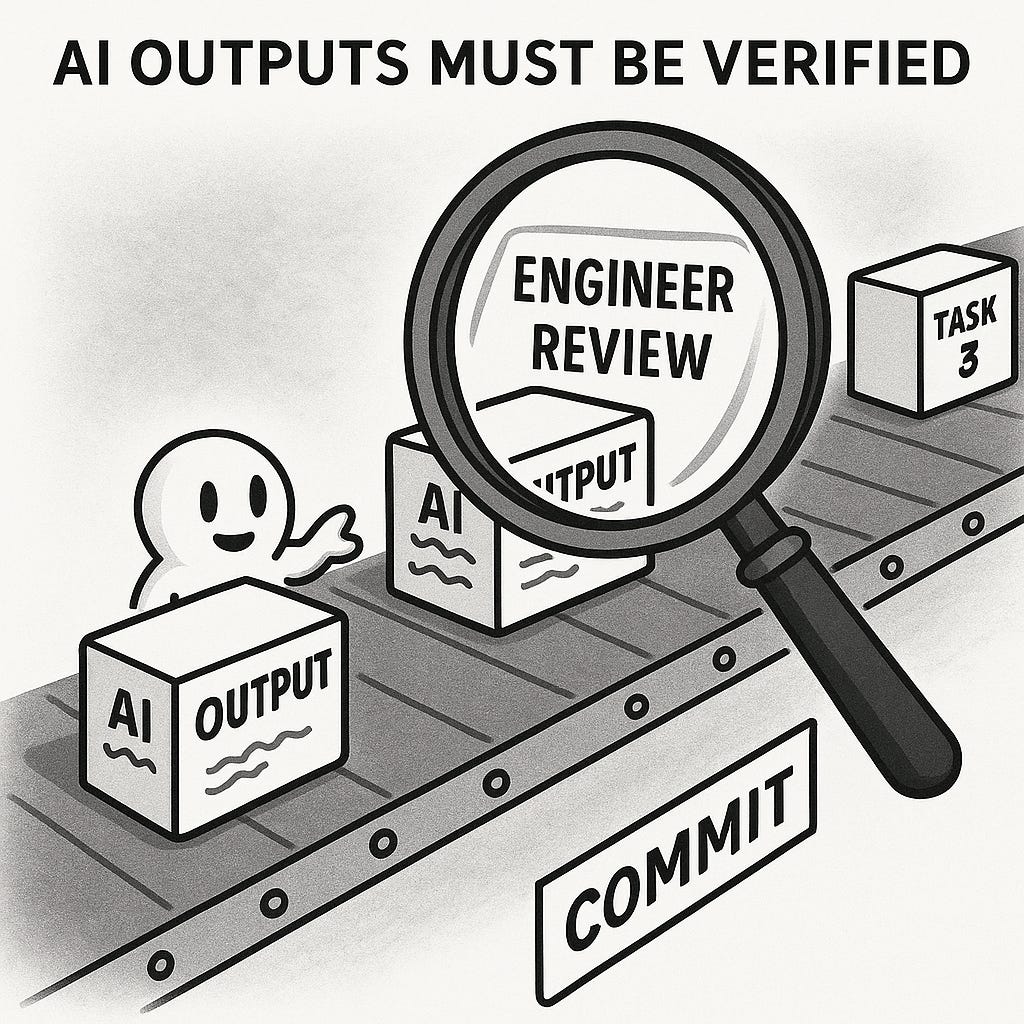

Importantly, you don’t abdicate responsibility to the AI. Think of it as working with a junior developer who has read every StackOverflow post and API doc: they have a ton of information and can produce code quickly, but you are responsible for guiding them and verifying the output. This “trust, but verify” mindset is crucial and we’ll revisit it later.

Let's be blunt: AI-generated slop is real and is not an excuse for low-quality work. A persistent risk in using these tools is a combination of rubber-stamped suggestions, subtle hallucinations, and simple laziness that falls far below professional engineering standards. This is why the "verify" part of the mantra is non-negotiable. As the engineer, you are not just a user of the tool; you are the ultimate guarantor. You remain fully and directly responsible for the quality, readability, security, and correctness of every line of code you commit.

Key principle - Every engineer is a manager now: The role of the engineer is fundamentally changing. With AI agents, you orchestrate the work rather than executing all of it yourself.

You remain responsible for every commit into main, but you focus more on defining and “assigning” the work to get there. In the not-distant future we may increasingly say “Every engineer is a manager now.” Legitimate work can be directed to background agents like Jules or Codex, or you can task Claude Code/ Gemini CLI/OpenCode with chewing through an analysis or code migration project. The engineer needs to intentionally shape the codebase so that it’s easier for the AI to work with, using rule files (e.g. GEMINI.md), good READMEs, and well-structured code. This puts the engineer into the role of supervisor, mentor, and validator. AI-first teams are smaller, able to accomplish more, and capable of compressing steps of the SDLC to deliver better quality, faster.

High-level benefits: By fully embracing AI in your workflow, you can achieve some serious productivity leaps, potentially shipping more features faster without sacrificing quality (this of course has nuance such as keeping task complexity in mind).

Routine tasks (from formatting code to writing unit tests) can be handled in seconds. Perhaps more importantly, AI can augment your understanding: it’s like having an expert on call to explain code or propose solutions in areas outside your normal expertise. The result is that an AI-native engineer can take on more ambitious projects or handle the same workload with a smaller team. In essence, AI extends what you’re capable of, allowing you to work at a higher level of abstraction. The caveat is that it requires skill to use effectively - that’s where the right mindset and practices come in.

Example - Mindset in action: Imagine you’re debugging a tricky issue or evaluating a new tech stack. A traditional approach might involve lots of Googling or reading documentation. An AI-native approach is to engage an AI assistant that supports Search grounding or deep research: describe the bug or ask for pros/cons of the tech stack, and let the AI provide insights or even code examples.

You remain in charge of interpretation and implementation, but the AI accelerates gathering information and possible solutions. This collaborative problem-solving becomes second nature once you get used to it. Make it a habit to ask, “How can AI help with this task?” until it’s reflex. Over time you’ll develop instincts for what AI is good at and how to prompt it effectively.

In summary, being AI-native means internalizing AI as a core part of how you think about solving problems and building software. It’s a mindset of partnership with machines: using their strengths (speed, knowledge, pattern recognition) to complement your own (creativity, judgment, context). With this foundation in mind, we can move on to practical steps for integrating AI into your daily work.

Getting Started - Integrating AI into your daily workflow

Adopting an AI-native workflow can feel daunting if you’re completely new to it. The key is to start small and build up your AI fluency over time. In this section, we’ll provide concrete guidance to go from zero to productive with AI in your day-to-day engineering tasks.

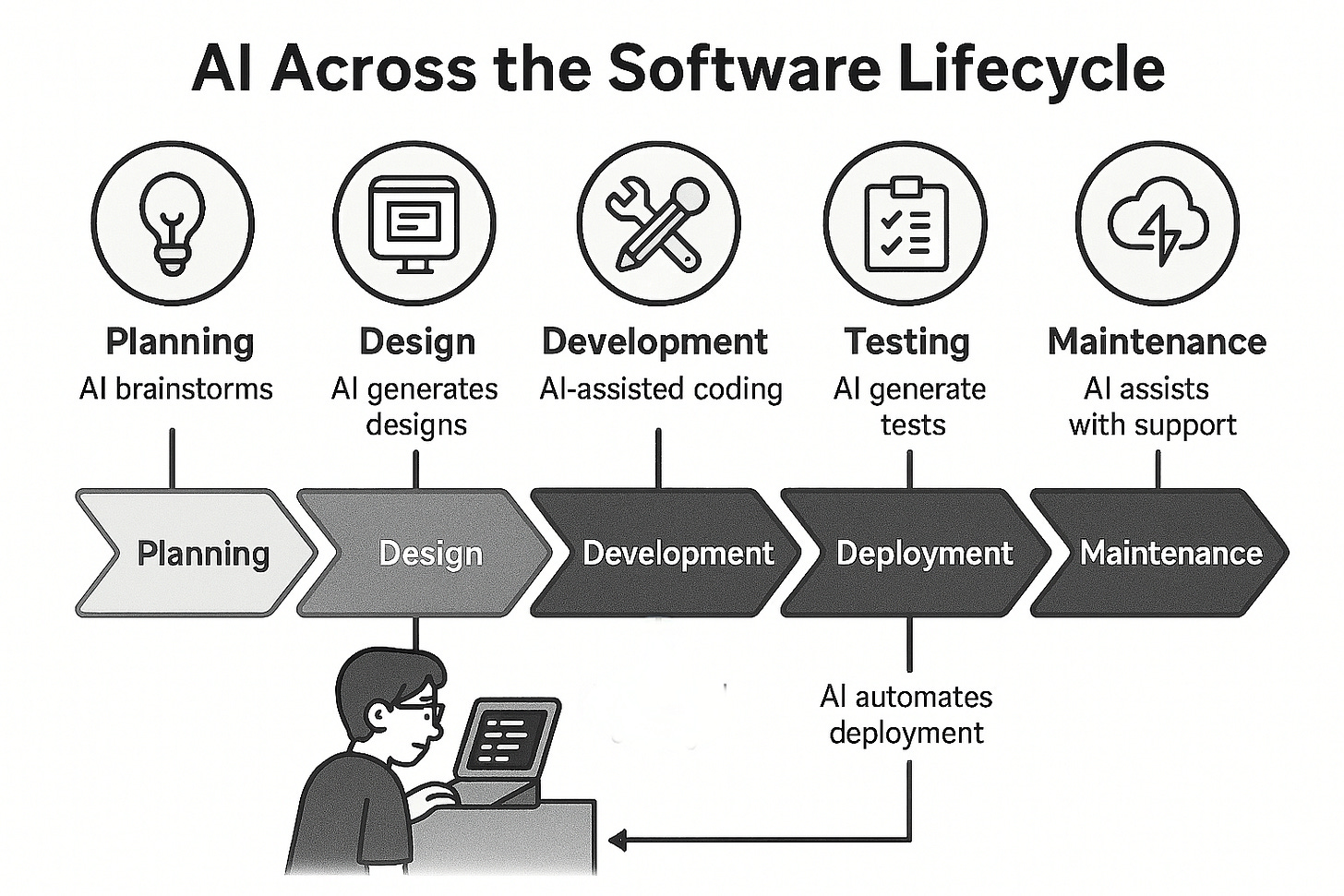

The above is a speculative look at where we may end up with AI in the software lifecycle. I continue to strongly believe human-in-the-loop (engineering, design, product, UX etc) will be needed to ensure that quality doesn’t suffer.

Step 1: The first change? You often start with AI.

An AI-native workflow isn’t about occasionally looking for tasks AI can help with; it's often about giving the task to an AI model first to see how it performs. One team noted:

The typical workflow involves giving the task to an AI model first (via Cursor or a CLI program)... with the understanding that plenty of tasks are still hit or miss.

Are you studying a domain or a competitor? Start with Gemini Deep Research. Find yourself stuck in an endless debate over some aspect of design? While your team argued, you could have built three prototypes with AI to prove out the idea. Googlers are already using it to build slides, debug production incidents, and much more.

When you hear “But LLMs hallucinate and chatbots give lousy answers” it's time to update your toolchain. Anybody seriously coding with AI today is using agents. Hallucinations can be significantly mitigated and managed with proper context engineering and agentic feedback loops. The mindset shift is foundational: all of us should be AI-first right now.

Step 2: Get the right AI tools in place.

To integrate AI smoothly, you’ll want to set up at least one coding assistant in your environment. Many engineers start with GitHub Copilot in VS Code which has code autocomplete and code generation capabilities. If you use an IDE like VS Code, consider installing an AI extension (for example, Cursor is a dedicated AI-enhanced code editor, and Cline is a VS Code plugin for an AI agent - more on these later). These tools are great for beginners because they work in the background, suggesting code in real-time for whatever file you’re editing. Outside your editor, you might also explore ChatGPT, Gemini or Claude in a separate window for question-answer style assistance. Starting with tooling is important because it lowers the friction to use AI. Once installed, the AI is only a keystroke away whenever you think “maybe the AI can help with this.”

Step 3: Learn prompt basics - be specific and provide context.

Using AI effectively is a skill, and the core of that skill is prompt engineering. A common mistake new users make is giving the AI an overly vague instruction and then being disappointed with the result. Remember, the AI isn’t a mind reader; it reacts to the prompt you give. A little extra context or clarity goes a long way. For instance, if you have a piece of code and you want an explanation or unit tests for it, don’t just say “Write tests for this.” Instead, describe the code’s intended behavior and requirements in your prompt. Compare these two prompts for writing tests for a React login form component:

Poor prompt: “Can you write tests for my React component?”

Better prompt: “I have a LoginForm React component with an email field, password field, and submit button. It displays a success message on successful submit and an error message on failure, via an onSubmit callback. Please write a Jest test file that: (1) renders the form, (2) fills in valid and invalid inputs, (3) submits the form, (4) asserts that onSubmit is called with the right data, and (5) checks that success and error states render appropriately.”

The second prompt is longer, but it gives the AI exactly what we need. The result will be far more accurate and useful because the AI isn’t guessing at our intentions - we spelled them out. In practice, spending an extra minute to clarify your prompt can save you hours of fixing AI-generated code later.

Effective prompting is such an important skill that Google has published entire guides on it (see Google’s Prompting Guide 101 for a great starting point). As you practice, you’ll get a feel for how to phrase requests. A couple of quick tips: be clear about the format you want (e.g., “return the output as JSON”), break complex tasks into ordered steps or bullet points in your prompt, and provide examples when possible. These techniques help the AI understand your request better.

Step 4: Use AI for code generation and completion.

With tools set up and a grasp of how to prompt, start applying AI to actual coding tasks. A good first use-case is generating boilerplate or repetitive code. For instance, if you need a function to parse a date string in multiple formats, ask the AI to draft it. You might say: “Write a Python function that takes a date string which could be in formats X, Y, or Z, and returns a datetime object. Include error handling for invalid formats.”

The AI will produce an initial implementation. Don’t accept it blindly - read through it and run tests. This hands-on practice builds your trust in when the AI is reliable. Many developers are pleasantly surprised at how the AI produces a decent solution in seconds, which they can then tweak. Over time, you can move to more significant code generation tasks, like scaffolding entire classes or modules. As an example, Cursor even offers features to generate entire files or refactor code based on a description. Early on, lean on the AI for helper code - things you understand but would take time to write - rather than core algorithmic logic that’s critical. This way, you build confidence in the AI’s capabilities on low-risk tasks.

Step 5: Integrate AI into non-coding tasks.

Being AI-native isn’t just about writing code faster; it’s about improving all facets of your work. A great way to start is using AI for writing or analysis tasks that surround coding. For example, try using AI to write a commit message or a Pull Request description after you make code changes. You can paste a git diff and ask, “Summarize these changes in a professional PR description.” The AI will draft something that you can refine.

This is a key differentiator between casual users and true AI-native engineers. The best engineers have always known that their primary value isn't just typing code, but in the thinking, planning, research, and communication that surrounds it. Applying AI to these areas - to accelerate research, clarify documentation, or structure a project plan - is a massive force multiplier. Seeing AI as an assistant for the entire engineering process, not just the coding part, is critical to unlocking its full potential for velocity and innovation.

Along these lines, use AI to document code: have it generate docstrings or even entire sections of technical documentation based on your codebase. Another idea is to use AI for planning - if you’re not sure how to implement a feature, describe the requirement and ask the AI to outline a possible approach. This can give you a starting blueprint which you then adjust. Don’t forget about everyday communications: many engineers use AI to draft emails or Slack messages, especially when communicating complex ideas.

For instance, if you need to explain to a product manager why a certain bug is tricky, you can ask the AI to help articulate the explanation clearly. This might sound trivial, but it’s a real productivity boost and helps ensure you communicate effectively. Remember, “it’s not always all about the code” - AI can assist in meetings, brainstorming, and articulating ideas too. An AI-native engineer leverages these opportunities.

Step 6: Iterate and refine through feedback.

As you begin using AI day-to-day, treat it as a learning process for yourself. Pay attention to where the AI’s output needed fixing and try to deduce why. Was the prompt incomplete? Did the AI assume the wrong context? Use that feedback to craft better prompts next time. Most AI coding assistants allow an iterative process: you can say “Oops, that function is not handling empty inputs correctly, please fix that” and the AI will refine its answer. Take advantage of this interactivity - it’s often faster to correct an AI’s draft by telling it what to change than writing from scratch.

Over time, you’ll develop a library of prompt patterns that work well. For example, you might discover that “Explain X like I’m a new team member” yields a very good high-level explanation of a piece of code for documentation purposes. Or that providing a short example input and output in your prompt dramatically improves an AI’s answer for data transformation tasks. Build these discoveries into your workflow.

Step 7: Always verify and test AI outputs.

This cannot be stressed enough: never assume the AI is 100% correct. Even if the code compiles or the answer looks reasonable, do your due diligence. Run the code, write additional tests, or sanity-check the reasoning. Many AI-generated solutions work on the surface but fail on edge cases or have subtle bugs.

You are the engineer; the AI is an assistant. Use all your normal best practices (code reviews, testing, static analysis) on AI-written code just as you would on human-written code. In practice, this means budgeting some time to go through what the AI produced. The good news is that reading and understanding code is usually faster than writing it from scratch, so even with verification, you come out ahead productivity-wise.

As you gain experience, you’ll also learn which kinds of tasks the AI is weak at - for example, many LLMs struggle with precise arithmetic or highly domain-specific logic - and you’ll know to double-check those parts extra carefully or perhaps avoid using AI for those. Building this intuition ensures that by the time you trust an AI-generated change enough to commit or deploy, you’ve mitigated risks. A useful mental model is to treat AI like a highly efficient but not infallible teammate: you value its contributions but always perform the final review yourself.

Step 8: Expand to more complex uses gradually.

Once you’re comfortable with AI handling small tasks, you can explore more advanced integrations. For example, move from using AI in a reactive way (asking for help when you think of it) to a proactive way: let the AI monitor as you code. Tools like Cursor or Windsurf can run in agent mode where they watch for errors or TODO comments and suggest fixes automatically. Or you might try an autonomous agent mode like what Cline offers, where the AI can plan out a multi-step task (create a file, write code in it, run tests, etc.) with your approval at each step.

These advanced uses can unlock even greater productivity, but they also require more vigilance (imagine giving a junior dev more autonomy - you’d still check in regularly).

A powerful intermediate step is to use AI for end-to-end prototyping. For instance, challenge yourself on a weekend to build a simple app using mostly AI assistance: describe the app you want and see how far a tool like Replit’s AI or Bolt can get you, then use your skills to fill the gaps. This kind of exercise is fantastic for understanding the current limits of AI and learning how to direct it better. And it’s fun - you’ll feel like you have a superpower when, in a couple of hours, you have a working prototype that might have taken days or weeks to code by hand.

By following these steps and ramping up gradually, you’ll go from an AI novice to someone who instinctively weaves AI into their development workflow. The next section will dive deeper into the landscape of tools and platforms available - knowing what tool to use for which job is an important part of being productive with AI.

AI Tools and Platforms - from prototyping to production

One of the reasons it’s an exciting time to be an engineer is the sheer variety of AI-powered tools now available. As an AI-native software engineer, part of your skillset is knowing which tools to leverage for which tasks. In this section, we’ll survey the landscape of AI coding tools and platforms, and offer guidance on choosing and using them effectively. We’ll broadly categorize them into two groups - AI coding assistants (which integrate into your development environment to help with code you write) and AI-driven prototyping tools (which can generate entire project scaffolds or applications from a prompt). Both are valuable, but they serve different needs.

Before diving into specific tools, it's crucial for any professional to adopt a "data privacy firewall" as a core part of their mindset. Always ask yourself: "Would I be comfortable with this prompt and its context being logged on a third-party server?" This discipline is fundamental to using these tools responsibly. An AI-native engineer learns to distinguish between tasks safe for a public cloud AI and tasks that demand an enterprise-grade, privacy-focused, or even a self-hosted, local model.

AI Coding Assistants in the IDE

These tools act like an “AI pair programmer” integrated with your editor or IDE. They are invaluable when you’re working on an existing codebase or building a project in a traditional way (writing code, file by file). Here are some notable examples and their nuances:

GitHub Copilot has transformed from an autocomplete tool into a true coding agent: once you assign it an issue or task it can autonomously analyze your codebase, spin up environments (like via GitHub Actions), propose multi‑file edits, run commands/tests, fix errors, and submit draft pull requests complete with its reasoning in the logs. Built on state‑of‑the‑art models, it supports multi‑model selection and leverages Model Context Protocol (MCP) to integrate external tools and workspace context, enabling it to navigate complex repo structures including monorepos, CI pipelines, image assets, API dependencies, and more .Despite these advances, it’s optimized for low‑ to medium‑complexity tasks and still requires human oversight - especially for security, deep architecture, and multi‑agent coordination purpose

Cursor - AI-native code editor: Cursor is a modified VS Code editor with AI deeply integrated. Unlike Copilot which is an add-on, Cursor is built around AI from the ground up. It can do things like AI-aware navigation (ask it to find where a function is used, etc.) and smart refactorings. Notably, Cursor has features to generate tests, explain code, and even an “Agent” mode where it will attempt larger tasks on command. Cursor’s philosophy is to “supercharge” a developer especially in large codebases. If you’re working in a monorepo or enterprise-scale project, Cursor’s ability to understand project-wide context (and even customize it with project-specific rules using something like a .cursorrules file) can be a game changer. Many developers use Cursor in “Ask” mode to begin with - you ask for what you want, get confirmation, then let it apply changes - which helps ensure it does the right thing. The trade-off with Cursor is that it’s a standalone editor (though familiar to VS Code users) and currently it’s a paid product. It’s very popular, with millions of developers using it, including in enterprises, which speaks to its effectiveness.

Windsurf - AI agent for coding with large context: Windsurf is another AI-augmented development environment. Windsurf emphasizes enterprise needs: it has strong data privacy (no data retention, self-hosting options) and even compliance certifications like HIPAA and FedRAMP, making it attractive for companies concerned about code security. Functionally, Windsurf can do many of the same assistive tasks (code completion, suggesting changes, etc.), but anecdotally it’s especially useful in scenarios where you might feed entire files or lots of documentation to the AI. If you are working on a codebase with tens of thousands of lines and need the AI to be aware of most of it (for instance, a sweeping refactor across many files), a tool like Windsurf is worth considering.

Cline - autonomous AI coding agent for VS Code: Cline takes a unique approach by acting as an autonomous agent within your editor. It’s an open-source VS Code extension that not only suggests code, but can create files, execute commands, and perform multi-step tasks with your permission. Cline operates in dual modes: Plan (where it outlines what it intends to do) and Act (where it executes those steps) under human supervision. The idea is to let the AI handle more complex chores, like setting up a whole feature: it could plan “Add a new API endpoint, including route, controller, and database migration” and then implement each part, asking for confirmation. This aligns AI assistance with professional engineering workflows by giving the developer control and visibility into each step. I’ve noted that Cline “treats AI not just as a code generator but as a systems-level engineering tool” meaning it can reason about the project structure and coordinate multiple changes coherently. The downsides: because it can run code or modify many files, you have to be careful and review its plans. There’s also cost if you connect it to powerful models (some users note it can use a lot of tokens, hence $$, when running very autonomously). But for serious use - say you want to quickly prototype a new module in your app with tests and docs - Cline can be incredibly powerful. It’s like having an eager junior engineer that asks “Should I proceed with doing X?” at each step. Many developers appreciate this more collaborative style (Cline “asks more questions” by design) because it reduces the chance of the AI going off-track.

Use AI coding assistants when you’re iteratively building or maintaining a codebase - these tools fit naturally into your cycle of edit‑compile‑test. They’re ideal for tasks like writing new functions (just type a signature and they’ll often co‑complete the body), refactoring (“refactor this function to be more readable”), or understanding unfamiliar code (“explain this code” - and you get a concise summary). They’re not meant to build an entire app in one pass; instead, they augment your day‑to‑day workflow. For seasoned engineers, invoking an AI assistant becomes second nature - like an on‑demand search engine - used dozens of times daily for quick help or insights.

Under the hood, modern asynchronous coding agents like OpenAI Codex and Google’s Jules go a step further. Codex operates as an autonomous cloud agent - handling parallel tasks in isolated sandboxes: writing features, fixing bugs, running tests, generating full PRs - then presents logs and diffs for review.

Google’s Jules, powered by Gemini 2.5 Pro, brings asynchronous autonomy to your GitHub workflow: you assign an issue (such as upgrading Next.js), it clones your repo in a VM, plans its multi‑file edits, executes them, summarizes the changes (including audio recap), and issues a pull request - all while you continue working . These agents differ from inline autocomplete: they’re autonomous collaborators that tackle defined tasks in the background and return completed work for your review, letting you stay focused on higher‑level challenges.

AI-Driven prototyping and MVP builders

Separate from the in-IDE assistants, a new class of tools can generate entire working applications or substantial chunks of them from high-level prompts. These are great when you want to bootstrap a new project or feature quickly - essentially to get from zero to a first version (the “v0”) with minimal manual coding. They won’t usually produce final production-quality code without further iteration, but they create a remarkable starting point.

Bolt (bolt.new) - one-prompt full-stack app generator: Bolt is built on the premise that you can type a natural language description of an app and get a deployable full-stack MVP in minutes. For example, you might say “A job board with user login and an admin dashboard” and Bolt will generate a React frontend (using Tailwind CSS for styling) and a Node.js/Prisma backend with a database, complete with the basic models for jobs and users. In testing, Bolt has proven to be extremely fast - often assembling a project in 15 seconds or so. The output code is generally clean and follows modern practices (React components, REST/GraphQL API, etc.), so you can open it in your IDE and continue development. Bolt excels at rapid iteration: you can tweak your prompt and regenerate, or use its UI to adjust what it built. It even has an “export to GitHub” feature for convenience. This makes it ideal for founders, hackathon participants, or any developer who wants to shortcut the initial setup of an app. The trade-off is that Bolt’s creativity is bounded by its training - it might use certain styling by default and might not handle very unique requirements without guidance. But as a starting point, it’s often impressive. In comparisons, users noted Bolt produces great-looking UIs very consistently and was a top pick for quickly getting a prototype UI that “wows” users or stakeholders.

v0 (v0.dev by Vercel) - text to Next.js app generator: v0 is a tool from Vercel that similarly generates apps, especially focusing on Next.js (since Vercel is behind Next.js). You give it a prompt for what you want, and it creates a project. One thing to note about v0: it has a distinct design aesthetic. Testers observed that v0 tends to style everything in the popular ShadCN UI style - basically a trendy minimalist component library - whether you asked for it or not. This can be good if you like that style out of the box, but it means if you wanted a very custom design, v0 might not match it precisely. In one comparison, v0 was found to “re-theme designs” towards its default look instead of faithfully matching a given spec. So, v0 might be best if your goal is a quick functional prototype and you’re flexible on appearance. The code output is usually Next.js React code with whatever backend you specify (it might set up a simple API or use Vercel’s Edge Functions, etc.). As part of Vercel’s ecosystem, it’s also oriented toward deployability - the idea is you could take what it gives you and deploy on Vercel immediately. If you’re a fan of Next.js or building a web product that you plan to host on Vercel, v0 is a natural choice. Just keep in mind you might need to do some re-theming if you have your own design, since v0 has “opinions” about how things should look.

Lovable - prompt-to-UI mockups (with some code): Lovable is aimed more at beginners or non-engineers who want to build apps through a simpler interface. It lets you describe an app and provides a visual editor as well. Users have noted that Lovable’s strength is ease of use - it’s quite guided and has a nice UI for assembling your app - but its weakness is when you need to dive into code, it can be cumbersome. It tends to hide complexity (which is good if you want no-code), but if you are an engineer who wants to tweak what it built, you might find the experience frustrating. In terms of output, Lovable can create both UI and some logic, but perhaps not as completely as Bolt or v0. In one test, Lovable interestingly did better when given a screenshot to imitate than when given a Figma design - a bit inconsistent. It’s targeted at quick prototyping and maybe building simple apps with minimal coding. If you’re a tech lead working with a designer or PM who can’t code, Lovable might be something to let them play with to visualize ideas, which you then refine in code. However, for a seasoned engineer, Lovable might feel a bit limiting.

Replit: Replit’s online IDE has an AI mode where you can type a prompt like “Create a 2D Zelda-like game” or “Build a habit tracker app” and it will generate a project in their cloud environment. Replit’s strength is that it can run and host the result immediately, and it often takes care of both frontend and backend seamlessly since it’s all in one environment. A standout example: when asked to make a simple game, Replit’s AI agent not only wrote the code, but ran it and iteratively improved it by checking its own work with screenshots. In comparisons, Replit sometimes produced the most functionally complete result (for instance, a working game with enemies and collision when others barely produced a moving character). However, it might take longer to run and use more computational resources in doing so. Replit is great if you want a one-shot outcome that is actually runnable and possibly closer to production. It’s like having an AI that not only writes code, but also tests it live and fixes it. For full-stack apps, Replit likewise can wire up client and server and even set up a database if asked. The output might not be the cleanest or most idiomatic code in every case, but it’s often a very workable starting point. One consideration: because Replit’s agent runs in the cloud and can execute code, you might hit some limits for very big apps (and you need to be careful if you prompt it to do something that could run malicious code - though it’s sandboxed). Overall, if your goal is “I want an app that I can run immediately and play with, and I don’t mind if the code needs refactoring later” Replit is a top choice.

Firebase Studio is Google’s cloud-based, agentic IDE built powered by Gemini, which lets you rapidly prototype and ship full‑stack, AI‑infused apps entirely in your browser. You can import existing codebases - or start from scratch using natural‑language, image, or sketch prompts via the App Prototyping agent - to generate a working Next.js prototype (frontend, backend, Firestore, Auth, hosting, etc.) and immediately preview it live, then seamlessly switch into full‑coding mode in a Code‑OSS (VS Code) workspace powered by Nix and integrated Firebase emulators. Gemini in Firebase offers inline code suggestions, debugging, test generation, documentation, migrations, even running terminal commands and interpreting outputs, so you can prompt “Build a photo‑gallery app with uploads and authentication” see the app spun up end to end, tweak it, deploy it to Hosting or Cloud Run, and monitor usage - all without switching tools

When to use prototyping tools: These shine when you are starting a new project or feature and want to eliminate the grunt work of initial setup. For instance, if you’re a tech lead needing a quick proof-of-concept to show stakeholders, using Bolt or v0 to spin up the base and then deploying it can save days of effort. They are also useful for exploring ideas - you can generate multiple variations of an app to see different approaches. However, expect to iterate. Think of what these tools produce as a first draft.

After generating, you’ll likely bring the code into your own IDE (perhaps with an AI assistant there to help) and refine it. In many cases, the best workflow is hybrid: prototype with a generation tool, then refine with an in-IDE assistant. For example, you might use Bolt to create the MVP of an app, then open that project in Cursor to continue development with AI pair-programming on the finer details. These approaches aren’t mutually exclusive at all - they complement each other. Use the right tool for each phase: prototypers for initial scaffolding and high-level layout, assistants for deep code work and integration.

Another consideration is limitations and learning: by examining what these prototyping tools generate, you can learn common patterns. It’s almost like reading the output of a dozen framework tutorials in one go. But also note what they don’t do - often they won’t get the last 20-30% of an app done (things like polish, performance tuning, handling edge-case business logic), which will fall to you.

This is akin to the “70% problem” observed in AI-assisted coding: AI gets you a big chunk of the way, but the final mile requires human insight. Knowing this, you can budget time accordingly. The good news is that initial 70% (spinning up UI components, setting up routes, hooking up basic CRUD) is usually the boring part - and if AI does that, you can focus your energy on the interesting parts (custom logic, UX finesse, etc.). Just don’t be lulled into a false sense of security; always review the generated code for things like security (e.g., did it hardcode an API key?) or correctness.

Summary of tools vs use-cases: It’s helpful to recap and simplify how these tools differ. In a nutshell: Use an IDE assistant when you’re evolving or maintaining a codebase; use a generative prototype tool when you need a new codebase or module quickly. If you already have a large project, something like Cursor or Cline plugged into VS Code will be your day-to-day ally, helping you write and modify code intelligently.

If you’re starting a project from scratch, tools like Bolt or v0 can do the heavy lifting of setup so you aren’t spending a day configuring build tools or creating boilerplate files. And if your work involves both (which is common: starting new services and maintaining old ones), you might very well use both types regularly. Many teams report success in combining them: for instance, generate a prototype to kickstart development, then manage and grow that code with an AI-augmented IDE.

Lastly, be aware of the “not invented here” stigma some might have with AI-gen code. It’s important to communicate within your team about using these tools. Some traditionalists may be skeptical of code they didn’t write themselves. The best way to overcome that is by demonstrating the benefits (speed, and after your review, the code quality can be made good) and making AI use collaborative. For example, share the prompt and output in a PR description (“This controller was generated using v0.dev based on the following description...”). This demystifies the AI’s contribution and can invite constructive review just like human-generated code.

Now that we’ve looked at tools, in the next section we’ll zoom out and walk through how to apply AI across the entire software development lifecycle, from design to deployment. AI’s role isn’t limited to coding; it can assist in requirements, testing, and more.

AI across the Software Development Lifecycle

An AI-native software engineer doesn’t only use AI for writing code - they leverage it at every stage of the software development lifecycle (SDLC). This section explores how AI can be applied pragmatically in each phase of engineering work, making the whole process more efficient and innovative. We’ll keep things domain-agnostic, with a slight bias to common web development scenarios for examples, but these ideas apply to many domains of software (from cloud services to mobile apps).

1. Requirements & ideation

The first step in any project is figuring out what to build. AI can act as a brainstorming partner and a requirements analyst.

For example, if you have a high-level product idea (“We need an app for X”), you can ask an AI to help brainstorm features or user stories. A prompt like: “I need to design a mobile app for a personal finance tracker. What features should it have for a great user experience?” can yield a list of features (e.g., budgeting, expense categorization, charts, reminders) that you might not have initially considered.

The AI can aggregate ideas from countless apps and articles it has ingested. Similarly, you can task the AI with writing preliminary user stories or use cases: “List five user stories for a ride-sharing service’s MVP.” This can jumpstart your planning with well-structured stories that you can refine. AI can also help clarify requirements: if a requirement is vague, you can ask “What questions should I ask about this requirement to clarify it?” - and the AI will propose the key points that need definition (e.g., for “add security to login”, AI might suggest asking about 2FA, password complexity, etc.). This ensures you don’t overlook things early on.

Another ideation use: competitive analysis. You could prompt: “What are the common features and pitfalls of task management web apps? Provide a summary.” The AI will list what such apps usually do and common complaints or challenges (e.g., data sync, offline support). This information can shape your requirements to either include best-in-class features or avoid known issues. Essentially, AI can serve as a research assistant, scanning the collective knowledge base so you don’t have to read 10 blog posts manually.

Of course, all AI output needs critical evaluation - use your judgment to filter which suggestions make sense in context. But at the early stage, quantity of ideas can be more useful than quality, because it gives you options to discuss with your team or stakeholders. Engineers with an AI-native mindset often walk into planning meetings with an AI-generated list of ideas, which they then augment with their own insights. This accelerates the discussion and shows initiative.

AI can also help non-technical stakeholders at this stage. If you’re a tech lead working with, say, a business analyst, you might generate a draft product requirements document (PRD) with AI’s help and then share it for review. It’s faster to edit a draft than to write from scratch. Google’s prompt guide suggests even role-specific prompts for such cases - e.g., “Act as a business analyst and outline the requirements for a payroll system upgrade”. The result gives everyone something concrete to react to. In sum, in requirements and ideation, AI is about casting a wide net of possibilities and organizing thoughts, which provides a strong starting foundation.

2. System design & architecture

Once requirements are in place, designing the system is next. Here, AI can function as a sounding board for architecture. For instance, you might describe the high-level architecture you’re considering - “We plan to use a microservice for the user service, an API gateway, and a React frontend” - and ask the AI for its opinion: “What are the pros and cons of this approach? Any potential scalability issues?” An AI well-versed in tech will enumerate points perhaps similar to what an experienced colleague might say (e.g., microservices allow independent deployment but add complexity in devops, etc.). This is useful to validate your thinking or uncover angles you missed.

AI can also help with specific design questions: “Should we choose SQL or NoSQL for this feature store?” or “What’s a robust architecture for real-time notifications in a chat app?” It will provide a rationale for different choices. While you shouldn’t take its answer as gospel, it can surface considerations (latency, consistency, cost) that guide your decision. Sometimes hearing the reasoning spelled out helps you make a case to others or solidify your own understanding. Think of it as rubber-ducking your architecture to an AI - except the duck talks back with fairly reasonable points!

Another use is generating diagrams or mappings via text. There are tools where if you describe an architecture, the AI can output a pseudo-diagram (in Mermaid markdown, for example) that you can visualize. For example: “Draw a component diagram: clients -> load balancer -> 3 backend services -> database.” The AI could produce a Mermaid code block that renders to a diagram. This is a quick way to go from concept to documentation. Or you can ask for API design suggestions: “Design a REST API for a library system with endpoints for books, authors, and loans.” The AI might list endpoints (GET /books, POST /loans, etc.) along with example payloads, which can be a helpful starting point that you then adjust.

A particularly powerful use of AI at this stage is validating assumptions by asking it to think of failure cases. For example: “We plan to use an in-memory cache for session data in one data center. What could go wrong?” The AI might remind you of scenarios like cache crashes, data center outage, or scaling issues. It’s a bit like a risk checklist generator. This doesn’t replace doing a proper design review, but it’s a nice supplement to catch obvious pitfalls early.

On the flip side, if you encounter pushback on a design and need to articulate your reasoning, AI can help you frame arguments clearly. You can feed the context to AI and have it help articulate the concerns and explore alternatives. The AI will enumerate issues and you can use that to formulate a respectful, well-structured response. In essence, AI can bolster your communication around design, which is as important as the design itself in team settings.

A more profound shift is that we’re moving to spec-driven development. It’s not about code-first; in fact, we’re practically hiding the code! Modern software engineers are creating (or asking AI for) implementation plans first. Some start projects by asking the tool to create a technical design (saved to a markdown file) and an implementation plan (similarly saved locally and fed in later).”

Some note that they find themselves “thinking less about writing code and more about writing specifications - translating the ideas in my head into clear, repeatable instructions for the AI.” These design specs have massive follow-on value; they can be used to generate the PRD, the first round of product documentation, deployment manifests, marketing messages, and even training decks for the sales field. Today’s best engineers are great at documenting intent that in-turn spawns the technical solution.

This strategic application of AI has profound implications for what defines a senior engineer today. It marks a shift from being a superior problem-solver to becoming a forward-thinking solution-shaper. A senior AI-native engineer doesn't just use AI to write code faster; they use it to see around corners - to model future states, analyze industry trends, and shape technical roadmaps that anticipate the next wave of innovation. Leveraging AI for this kind of architectural foresight is no longer just a nice-to-have; it's rapidly becoming a core competency for technical leadership.

3. Implementation (Coding)

This is the phase most people immediately think of for AI assistance, and indeed it’s one of the most transformative. We covered in earlier sections how to use coding assistants in your IDE, so here let’s structure it around typical coding sub-tasks:

Scaffolding and setup: Setting up new modules, libraries, or configuration files can be tedious. AI can generate boilerplate configs (Dockerfiles, CI pipelines, ESLint configs, etc.) based on descriptions. For example, “Provide a minimal Vite and TypeScript config for a React app” may yield decent config files that you might only need to tweak slightly. Similarly, if you need to use a new library (say authentication or logging), you can ask AI, “Show an example of integrating Library X into an Express.js server.” It often can produce a minimal working example, saving you from combing through docs for the basics.

Feature implementation: When coding a feature, use AI as a partner. You might start writing a function and hit a moment of doubt - you can simply ask, “What’s the best way to implement X?” Perhaps you need to parse a complex data format - the AI might even recall the specific API you need to use. It’s like having Stack Overflow threads summarized for you on the fly. Many AI-native devs actually use a rhythm: they outline a function in comments (steps it should take), then prompt the AI to fill it in code. This often yields a nearly complete function which you then adjust. It’s a different way of coding: you focus on logic and intent, the AI fleshes out syntax and repetitive parts.

Code reuse and referencing: Another everyday scenario - you vaguely remember writing similar code before or know there’s an algorithm for this. You can describe it and ask the AI. For instance, “I need to remove duplicates from a list of objects in Python, treating objects with same id as duplicates. How to do that efficiently?” And if the first answer isn’t what you need, you can refine or just say “that’s not quite it, I need to consider X” and it will try again. This interactive Q&A for coding is a huge quality-of-life improvement.

Maintaining consistency and patterns: In a large project, you often follow patterns (say a certain way to handle errors or logging). AI can be taught these if you provide context (some tools let you add a style guide or have it read parts of your repo). Even without explicit training, if you point the AI to an existing file as an example, you can prompt “Create a new module similar to this one but for [some new entity]”. It will mimic the style and structure, which means the new code fits in naturally. It’s like having an assistant who read your entire codebase and documentation and always writes code following those conventions (one day, AI might truly do this seamlessly with features like the Model Context Protocol to plug into different environments).

Generating tests alongside code: A highly effective habit is to have AI generate unit tests immediately after writing a piece of code. Many tools (Cursor, Copilot, etc.) can suggest tests either on demand or even automatically. For example, after writing a function, you could prompt: “Generate a unit test for the above function, covering edge cases.” The AI will create a test method or test case code. This serves two purposes: it gives you quick tests, and it also serves as a quasi-review of your code (if the AI’s expected behavior in tests differs from your code, maybe your code has an issue or the requirements were misunderstood). It’s like doing TDD where the AI writes the test and you verify it matches intent. Even if you prefer writing tests yourself, AI can suggest additional cases you might miss (like large input, weird characters, etc.), acting as a safety net.

Debugging assistance: When you hit a bug or an error message, AI can help diagnose it. For instance, you can copy an error stack trace or exception and ask, “What might be causing this error?” Often, it will explain in plain terms what the error means and common causes. If it’s a runtime bug without obvious errors, you can describe the behavior: “My function returns null for input X when it shouldn’t. Here’s the code snippet… Any idea why?” The AI might spot a logic flaw. It’s not guaranteed, but even just explaining your code in writing (to the AI) sometimes makes the solution apparent to you - and the AI’s suggestions can confirm it. Some AI tools integrated into runtime (like tools in Replit) can even execute code and check intermediate values, acting like an interactive debugger. You could say, “Run the above code with X input and show me variable Y at each step” and it will simulate that. This is still early, but it’s another dimension of debugging that will grow.

Performance tuning & refactoring: If you suspect a piece of code is slow or could be cleaner, you can ask the AI to refactor it for performance or readability. For instance: “Refactor this function to reduce its time complexity” or “This code is doing a triple nested loop, can you make it more efficient?” The AI might recognize a chance to use a dictionary lookup or a better algorithm (e.g., going from O(n^2) to O(n log n)). Or for readability: “Refactor this 50-line function into smaller functions and add comments.” It will attempt to do so. Always double-check the changes (especially for subtle bugs), but it’s a great way to see alternative implementations quickly. It’s like having a second pair of eyes that isn’t tired and can rewrite code in seconds for comparison.

In all these coding scenarios, the theme is AI accelerates the mechanical parts of coding and provides just-in-time knowledge, while you remain the decision-maker and quality control. It’s important to interject a note on version control and code reviews: treat AI contributions like you would a junior developer’s pull request. Use git diligently, diff the changes the AI made, run your test suite after major edits, and do code reviews (even if you’re reviewing code the AI wrote for you!). This ensures robustness in your implementation phase.

4. Testing & quality assurance

Testing is an area where AI can shine by reducing the toil. We already touched on unit test generation, but let’s dive deeper:

Unit tests generation: You can systematically use AI to generate unit tests for existing code. One approach: take each public function or class in your module, and prompt AI with a short description of what it should do (if there isn’t clear documentation, you might have to infer or write a one-liner spec) and ask for a test. For example, “Function normalizeName(name) should trim whitespace and capitalize the first letter. Write a few PyTest cases for it.” The AI will output tests including typical and edge cases like empty string, all caps input, etc. This is extremely helpful for legacy code where tests are missing - it’s like AI-driven test retrofitting. Keep in mind the AI doesn’t know your exact business logic beyond what you describe, so verify that the asserted expectations match the intended behavior. But even if they don’t, it’s informative: an AI might make an assumption about the function that’s wrong, which highlights that the function’s purpose wasn’t obvious or could be misused. You then improve either the code or clarify the test.

Property-based and fuzz testing: You can use AI to suggest properties for property-based tests. For instance, “What properties should hold true for a sorting function?” might yield answers like “the output list is sorted, has same elements as input, idempotent if run twice” etc. You can turn those into property tests with frameworks like Hypothesis or fast-check. The AI can even help write the property test code. Similarly, for fuzzing or generating lots of input combinations, you could ask AI to generate a variety of inputs in a format. “Give me 10 JSON objects representing edge-case user profiles (some missing fields, some with extra fields, etc.)” - use those as test fixtures to see if your parser breaks.

Integration and end-to-end tests: For more complex tests like API endpoints or UI flows, AI can assist by outlining test scenarios. “List some end-to-end test scenarios for an e-commerce checkout process.” It will likely enumerate scenarios: normal purchase, invalid payment, out-of-stock item, etc. You can then script those. If you’re using a test framework like Cypress for web UI, you could ask AI to write a test script given a scenario description. It might produce a pseudo-code that you tweak to real code (Cypress or Selenium commands). This again saves time on boilerplate and ensures you consider various paths.

Test data generation: Creating realistic test data (like a valid JSON of a complex object) is mundane. AI can generate fake data that looks real. For example, “Generate an example JSON for a university with departments, professors, and students.” It will fabricate names and arrays etc. This data can then be used in tests or to manually try out an API. It’s like having an infinite supply of realistic dummy data without writing it yourself. Just be mindful of any privacy - if you prompt with real data, ensure you anonymize it first.

Exploratory testing via agents: A frontier area: using AI agents to simulate users or adversarial inputs. There are experimental tools where an AI can crawl your web app like a user, testing different inputs to see if it can break something. Anthropic’s Claude Code best practices talk about multi-turn debugging, where the AI iteratively finds and fixes issues. You might be able to say, “Here’s my function, try different inputs to make it fail” and the AI will do a mini fuzz test mentally. This isn’t foolproof, but as a concept it points to AI helping in QA beyond static test cases - by actively trying to find bugs like a QA engineer would.

Reviewing test coverage: If you have tests and want to ensure they cover logic, you can ask AI to analyze if certain scenarios are missing. For example, provide a function or feature description and the current tests, and ask “Are there any important test cases not covered here?”. The AI might notice, e.g., “the tests didn’t cover when input is null or empty” or “no test for negative numbers”, etc. It’s like a second opinion on your test suite. It won’t know if something is truly missing unless obvious, but it can spot some gaps.

The end goal is higher quality with less manual effort. Testing is typically something engineers know they should do more of, but time pressure often limits it. AI helps remove some friction by automating the creation of tests or at least the scaffolding of them. This makes it likelier you’ll have a more robust test suite, which pays off in fewer regressions and easier maintenance.

5. Debugging & maintenance

Bugs and maintenance tasks consume a large portion of engineering time. AI can reduce that burden too:

Explaining legacy code: When you inherit a legacy codebase or revisit code you wrote long ago, understanding it is step one. You can use AI to summarize or document code that lacks clarity. For instance, copy a 100-line function and ask, “Explain in simple terms what this function does step by step.” The AI will produce a narrative of the code’s logic. This often accelerates your comprehension, especially if the code is dense or not well-commented. It might also identify what the code is supposed to do versus what it actually does (catching subtle bugs). Some tools integrate this - you can click a function and get an AI-generated docstring or summary. This is invaluable when you maintain systems with scarce documentation.

Identifying the root cause: When facing a bug report like “Feature X is crashing under condition Y” you can involve AI as a rubber duck to reason through the possible causes. Describe the situation and the code path as you know it, and ask for theories: “Given this code snippet and the error observed, what could be causing the null pointer exception?” The AI might point out, “if data can be null then data.length would throw that exception, check if that can happen in condition Y.” It’s akin to having a knowledgeable colleague to bounce ideas off of, even if they can’t see your whole system, they often generalize from known patterns. This can save time compared to going down the wrong path in debugging.

Fixing code with AI suggestions: If you localize a bug in a piece of code, you can simply tell the AI to fix it. “Fix the bug where this function fails on empty input.” The AI will provide a patch (like adding a check for empty input). You still have to ensure that’s the correct fix and doesn’t break other things, but it’s quicker than writing it yourself, especially for trivial fixes. Some IDEs do this automatically: for example, if a test fails, an AI could suggest a code change to make the test pass. One must be careful here - always run tests after accepting such changes to ensure no side effects. But for maintenance tasks like upgrading a library version and fixing deprecated calls, AI can be a huge help (e.g., “We upgraded to React Router v7, update this v6 code to v7 syntax” - it will rewrite the code using the new API, a big time saver).

Refactoring and improving old code: Maintenance often involves refactoring for clarity or performance. You can employ AI to do large-scale refactors semi-automatically. For instance, “Our code uses a lot of callback-based async. Convert these examples to async/await syntax.” It can show you how to update a representative snippet, which you can then apply across code (perhaps with a search/replace or with the AI’s help file by file). Or at a smaller scale, “Refactor this class to use dependency injection instead of hardcoding the database connection.” The AI will outline or even implement a cleaner pattern. This is how AI helps you keep the codebase modern and clean without spending excessive time on rote transformations.

Documentation and knowledge management: Maintaining software also means keeping docs up to date. AI can make documenting changes easier. After implementing a feature or fix, you can ask AI to draft a short summary or update documentation. For example, “Generate a changelog entry: Fixed the payment module to handle expired credit cards by adding a retry mechanism.” It will produce a nicely worded entry. If you need to update an API doc, you can feed it the new function signature and ask for a description. The AI may not know your entire system’s context, but it can create a good first draft of docs which you then tweak to be perfectly accurate. This lowers the activation energy to write documentation.

Communication with team/users: Maintenance involves communication - explaining to others what changed, what the impact is, etc. AI can help write release notes or migration guides. E.g., “Write a short guide for developers migrating from API v1 to v2 of our service, highlighting changed endpoints.” If you give it a list of changes, it can format it into a coherent guide. For user-facing notes, “Summarize these bug fixes in non-technical terms for our monthly update.” Once again, you’ll refine it, but the heavy lifting of prose is handled. This ensures important information actually gets communicated (since writing these can often fall by the wayside when engineers are busy).

In essence, AI can be thought of as an ever-present helper throughout maintenance. It can search through code faster than you (if integrated), recall how something should work, and even keep an eye out for potential issues. For example, if you let an AI agent scan your repository, it might flag suspicious patterns (like an API call made without error handling in many places).

Anthropic’s approach with a CLAUDE.md to give the AI context about your repo is one technique to enable more of this. In time, we may see AI tools that proactively create tickets or PRs for certain classes of issues (security or style). As an AI-native engineer, you will welcome these assists - they handle the drudgery, you handle the final judgment and creative problem-solving.

6. Deployment & operations

Even after code is written and tested, deploying and operating software is a big part of the lifecycle. AI can help here, too:

Infrastructure as code: Tools like Terraform or Kubernetes manifests are essentially code - and AI can generate them. If you need a quick Terraform script for an AWS EC2 with certain settings, you can prompt, “Write a Terraform configuration for an AWS EC2 instance with Ubuntu, t2.micro, in us-west-2.” It’ll give a reasonable config that you adjust. Similarly, “Create a Kubernetes Deployment and Service for a Node.js app called myapp, image from ECR, 3 replicas.” The YAML it produces will be a good starting point. This saves a lot of time trawling through documentation for syntax. One caution: verify all credentials and security groups etc., but the structure will be there.

CI/CD pipelines: If you’re setting up a continuous integration (CI) workflow (like a GitHub Actions YAML or a Jenkins pipeline), ask AI to draft it. For example: “Write a GitHub Actions workflow YAML that lints, tests, and deploys a Python Flask app to Heroku on push to main.” The AI will outline the jobs and steps pretty well. It might not get every key exactly right (since these syntaxes update), but it’s far easier to correct a minor key name than to write the whole file yourself. As CI pipelines can be finnicky, having the AI handle the boilerplate and you just fix small errors is a huge time saver.

Monitoring and alert queries: If you use monitoring tools (like writing a Datadog query or a Grafana alert rule), you can describe what you want and let the AI propose the config. E.g., “In PromQL, how do I write an alert for if error_rate > 5% over 5 minutes on service X?” It will craft a query that you can plug in. This is particularly handy because these domain-specific languages (like PromQL, Splunk query language, etc.) can be obscure - AI has likely seen examples and can adapt them for you.

Incident analysis: When something goes wrong in production, you often have logs, metrics, traces to look at. AI can assist in analyzing those. For instance, paste a block of log around the time of failure and ask “What stands out as a possible issue in these logs?”. It might pinpoint an exception stack trace in the noise or a suspicious delay. Or describe the symptom and ask “What are possible root causes of high CPU usage on the database at midnight?” It could list scenarios (backup running, batch job, etc.), helping your investigation. OpenAI’s enterprise guide emphasizes using AI to surface insights from data and logs - this is becoming an emerging use-case: AI ops or AIOps.

ChatOps and automation: Some teams integrate AI into their ops chat. For example, a Slack bot backed by an LLM that you can ask, “Hey, what’s the status of the latest deploy? Any errors?” and it could fetch data and summarize. While this requires some setup (wiring your CI or monitoring into an AI-friendly format), it’s an interesting direction. Even without that, you can manually do it: copy some output (like test results or deployment logs) and have AI summarize it or highlight failures. It’s a bit like a personal assistant that reads long scrollbacks of text for you and says “here’s the gist: 2 tests failed, looks like a database connection issue.” You then know where to focus.

Scaling and capacity planning: If you need to reason about scaling (e.g., “If each user does X requests and we have Y users, how many instances do we need?”), AI can help do the math and even account for factors you mention. This isn’t magic - it’s just calculation and estimation, but phrasing it to AI can sometimes yield a formatted plan or table, saving you some mental load. Additionally, AI might recall known benchmarks (like “Usually a t2.micro can handle ~100 req/s for a simple app”) which can aid rough capacity planning. Always validate such numbers from official sources, but it’s a quick first estimate.

Documentation & runbooks: Finally, operations teams rely on runbooks - documents outlining what to do in certain scenarios. AI can assist by drafting these from incident post-mortems or instructions. If you solved a production issue, you can feed the steps to AI and ask for a well-structured procedure write-up. It will give a neat sequence of steps in markdown that you can put in your runbook repository. This lowers the friction to document operational knowledge, which is often a big win for teams (tribal knowledge gets documented in accessible form). Anthropic’s enterprise trust guide emphasizes process and people - having clear AI-assisted docs is one way to spread knowledge responsibly.

By integrating AI throughout deployment and ops, you essentially have a co-pilot not just in coding but in DevOps. It reduces the lookup time (how often do we google for a particular YAML snippet or AWS CLI command?), providing directly usable answers. However, always remember to double-check anything AI suggests when it comes to infrastructure - a small mistake in a Terraform script could be costly. Validate in a safe environment when possible. Over time, as you fine-tune prompts or use certain verified AI “recipes”, you’ll gain confidence in which suggestions are solid.

As we’ve seen, across the entire lifecycle from conception to maintenance, there are opportunities to inject AI assistance.

The pattern is: AI takes on the grunt work and provides knowledge, while you provide direction, oversight, and final judgment.

This elevates your role - you spend more time on creative design, critical thinking, and decision-making, and less on boilerplate and hunting for information. The result is often a faster development cycle and, if managed well, improved quality and developer happiness. In the next section, we’ll discuss some best practices to ensure you’re using AI effectively and responsibly, and how to continuously improve your AI-augmented workflow.

Best Practices for effective and responsible AI-augmented engineering

Using AI in software development can be transformative, but to truly reap the benefits, one must follow best practices and avoid common pitfalls. In this section, we distill key principles and guidelines for being highly effective with AI in your engineering workflow. These practices ensure that AI remains a powerful ally rather than a source of errors or false confidence.

1. Craft Clear, contextual prompts

We’ve said it multiple times: effective prompting is critical. Think of writing prompts as a new core skill in your toolkit - much like writing good code or good commit messages. A well-crafted prompt can mean the difference between an AI answer that is spot-on and one that is useless or misleading. As a best practice, always provide the AI with sufficient context. If you’re asking about code, include the relevant code snippet or a description of the function’s purpose. Instead of: “How do I optimize this?” say “Given this code [include snippet], how can I optimize it for speed, especially the sorting part?” This helps the AI focus on what you care about.

Be specific about the desired output format too. If you want a JSON, say so; if you expect a step-by-step explanation, mention that. For example, “Explain why this test is failing, step by step” or “Return the result as a JSON object with keys X, Y”. Such instructions yield more predictable, useful results. A great technique from prompt engineering is to break the task into steps or provide an example. You might prompt: “First, analyze the input. Then propose a solution. Finally, give the solution code.” This structure can guide the AI through complex tasks. Google’s advanced prompt engineering guide covers methods like chain-of-thought prompting and providing examples to reduce guesswork. If you ever get a completely off-base answer, don’t just sigh - refine the prompt and try again. Sometimes iterating on the prompt (“Actually ignore the previous instruction about X and focus only on Y…”) will correct the course.

It’s also worthwhile to maintain a library of successful prompts. If you find a way of asking that consistently yields good results (say, a certain format for writing test cases or explaining code), save it. Over time, you build a personal playbook. Some engineers even have a text snippet manager for prompts. Given that companies like Google have published extensive prompt guides, you can see how valued this skill is becoming. In short: invest in learning to speak AI’s language effectively, because it pays dividends in quality of output.

2. Always review and verify AI outputs

No matter how impressive the AI’s answer is, never blindly trust it. This mantra cannot be overstated. Treat AI output as you would a human junior developer’s work: likely useful, but in need of review and testing. There are countless anecdotes of bugs slipping in because someone accepted AI code without understanding it. Make it a habit to inspect the changes the AI suggests. If it wrote a piece of code, walk through it mentally or with a debugger. Add tests to validate it (which AI can help write, as we discussed). If it gave you an explanation or analysis, cross-check key points. For instance, if AI says “This API is O(N^2) and that’s causing slowdowns” go verify the complexity from official docs or by reasoning it out yourself.

Be particularly wary of factually precise-looking statements. AI has a tendency to hallucinate details - like function names or syntaxes that look plausible but don’t actually exist. If an AI answer cites an API or a config key, confirm it in official documentation. In an enterprise context, never trust AI with company-specific facts (like “according to our internal policy…”) unless you fed those to it and it’s just rephrasing them.

For code, a good practice is to run whatever quick checks you have: linters, type-checkers, test suites. AI code might not adhere to your style guidelines or could use deprecated methods. Running a linter/formatter not only fixes style but can catch certain errors (e.g., unused variables, etc.). Some AI tools integrate this - for example, an AI might run the code in a sandbox and adjust if it sees exceptions, but that’s not foolproof. So you as the engineer must be the safety net.

In security-sensitive or critical systems, apply extra caution. Don’t use AI to generate secrets or credentials. If AI provides a code snippet that handles authentication or encryption, double-check it against known secure practices. There have been cases of AI coming up with insecure algorithms because it optimized for passing tests rather than actual security. The responsibility lies with you to ensure all outputs are safe and correct.

One helpful tip: use AI to verify AI. For example, after getting a piece of code from the AI, you can ask the same (or another) AI, “Is there any bug or security issue in this code?” It might point out something you missed (like, “It doesn’t sanitize input here” or “This could overflow if X happens”). While this second opinion from AI isn’t a guarantee either, it can be a quick sanity check. OpenAI and Anthropic’s guides on coding even suggest this approach of iterative prompting and review - essentially debugging with the AI’s help.

Finally, maintain a healthy skepticism. If something in the output strikes you as odd or too good to be true, investigate further. AI is great at sounding confident. Part of becoming AI-native is learning where the AI is strong and where it tends to falter. Over time, you’ll gain an intuition (e.g., “I know LLMs tends to mess up date math, I’ll double-check that part”). This intuition, combined with thorough review, keeps you in the driver’s seat.

3. Manage Scope: Use AI to amplify, not to autopilot entire projects

While the idea of clicking a button and having AI build an entire system is alluring, in practice it’s rarely that straightforward or desirable. A best practice is to use AI to amplify your productivity, not to completely automate what you don’t oversee. In other words, keep a human in the loop for any non-trivial outcome. If you use an autonomous agent to generate an app (as we saw with prototyping tools), treat the output as a prototype or draft, not a finished product. Plan to iterate on it yourself or with your team.

Break big tasks into smaller AI-assisted chunks. For instance, instead of saying “Build me a full e-commerce website” you might break it down: use AI to generate the frontend pages first (and you review them), then use AI to create a basic backend (review it), then integrate and refine. This modular approach ensures you maintain understanding and control. It also leverages AI’s strengths on focused tasks, rather than expecting it to juggle very complex interdependent tasks (which is often where it may drop something important). Remember that AI doesn’t truly “understand” your project’s higher objectives; that’s your job as the engineer or tech lead. You decide the architecture and constraints, and then use AI as a powerful assistant to implement parts of that vision.

Resist the temptation of over-reliance. It can be tempting to just ask the AI every little thing, even stuff you know, out of convenience. While it’s fine to use it for rote tasks, make sure you’re still learning and understanding. An AI-native engineer doesn’t turn off their brain - quite the opposite, they use AI to free their brain for more important thinking. For example, if AI writes a complex algorithm for you, take the time to understand that algorithm (or at least verify its correctness) before deploying. Otherwise, you might accumulate “AI technical debt” - code that works but no one truly groks, which can bite you later.

One way to manage scope is to set clear boundaries for AI agents. If you use something like Cline or Devin (autonomous coding agents), configure them with your rules (e.g., don’t install new dependencies without asking, don’t make network calls, etc.). And use features like dry-run or plan mode. For instance, have the agent show you its plan (like Cline does) and approve it step by step. This ensures the AI doesn’t go on a tangent or take actions you wouldn’t. Essentially, you act as a project manager for the AI worker - you wouldn’t let a junior dev just commit straight to main without code review; likewise, don’t let an AI do that.

By keeping AI’s role scoped and supervised, you avoid situations where something goes off the rails unnoticed. You also maintain your own engagement with the project, which is critical for quality and for your own growth. The flip side is also true: do use AI for all those small things that eat time but don’t need creative heavy lifting. Let it write the 10th variant of a CRUD endpoint or the boilerplate form validation code while you focus on the tricky integration logic or the performance tuning that requires human insight. This division of labor - AI for grunt work, human for oversight and creative problem solving - is a sweet spot in current AI integration.

4. Continue learning and stay updated

The field of AI and the tools available are evolving incredibly fast. Being “AI-native” today is different from what it will be a year from now. So a key principle is: never stop learning. Keep an eye on new tools, new model capabilities, and new best practices. Subscribe to newsletters or communities (there are developer newsletters dedicated to AI tools for coding). Share experiences with peers: what prompt strategies worked for them, what new agent framework they tried, etc. The community is figuring this out together, and being engaged will keep you ahead.

One practical way to learn is to integrate AI into side projects or hackathons. The stakes are lower, and you can freely explore capabilities. Try building something purely with AI assistance as an experiment - you’ll discover both its superpowers and its pain points, which you can then apply back to your day job carefully. Perhaps in doing so, you’ll figure out a neat workflow (like chaining a prompt from GPT to Copilot in the editor) that you can teach your team. In fact, mentoring others in your team on AI usage will also solidify your own knowledge. Run a brown bag session on prompt engineering, or share a success story of how AI helped solve a hairy problem. This not only helps colleagues but often they will share their own tips, leveling up everyone.

Finally, invest in your fundamental skills as well. AI can automate a lot, but the better your foundation in computer science, system design, and problem-solving, the better questions you’ll ask the AI and the better you’ll assess its answers. The human creativity and deep understanding of systems are not being replaced - in fact, they’re more important, because now you’re guiding a powerful tool. As one of my articles suggests, focus on maximizing the “human 30%” - the portion of the work where human insight is irreplaceable. That’s things like defining the problem, making judgment calls, and critical debugging. Strengthen those muscles through continuous learning, and let AI handle the rote 70%.

5. Collaborate and establish team practices

If you’re working in a team setting (most of us are), it’s important to collaborate on AI usage practices. Share what you learn with teammates and also listen to their experiences. Maybe you found that using a certain AI tool improved your commit velocity; propose it to the team to see if everyone wants to adopt it. Conversely, be open to guidelines - for example, some teams decide “We will not commit AI-generated code without at least one human review and testing” (a sensible rule). Consistency helps; if everyone follows similar approaches, the codebase stays coherent and people trust each other’s AI-augmented contributions.

You might even formalize this into team conventions. For instance, if using AI for code generation, some teams annotate the PR or code comments like // Generated with Gemini, needs review. This transparency helps code reviewers focus attention. It’s similar to how we treated code from automated tools (like “this file was scaffolded by Rails generator”). Knowing something was AI-generated might change how you review - perhaps more thoroughly in certain aspects.

Encourage pair programming with AI. A neat practice is AI-driven code review: when someone opens a pull request, they might run an AI on the diff to get an initial review comments list, and then use that to refine the PR before a human even sees it. As a team, you could adopt this as a step (with caution that AI might not catch all issues nor understand business context). Another collaborative angle is documentation: maybe maintain an internal FAQ of “How do I ask AI to do X for our codebase?” - e.g., how to prompt it with your specific stack. This could be part of onboarding new team members to AI usage in your project.