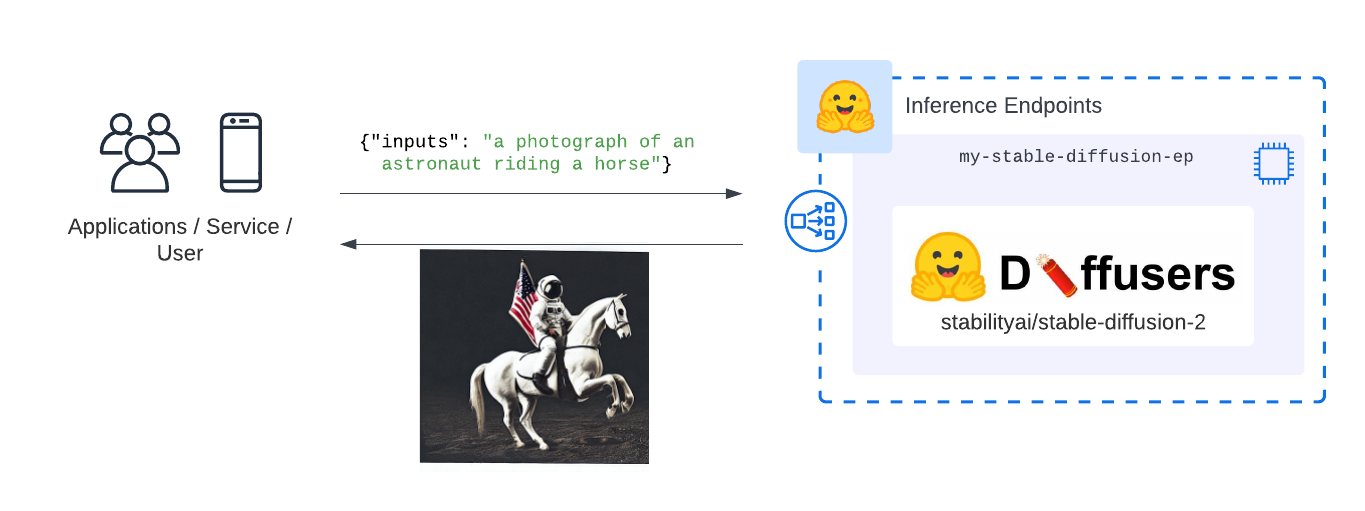

Welcome to this Hugging Face Inference Endpoints guide on how to deploy Stable Diffusion to generate images for a given input prompt. We will deploy stabilityai/stable-diffusion-2 to Inference Endpoints for real-time inference using Hugging Face's 🧨 Diffusers library.

Before we can get started, make sure you meet all of the following requirements:

- An Organization/User with an active credit card. (Add billing here)
- You can access the UI at: https://ui.endpoints.huggingface.co

The tutorial will cover how to:

- Deploy Stable Diffusion as an Inference Endpoint
- Test & Generate Images with Stable Diffusion 2.0
- Integrate Stable Diffusion as API and send HTTP requests using Python

What is Stable Diffusion?

Stable Diffusion is a text-to-image latent diffusion model created by researchers and engineers from CompVis, Stability AI, and LAION. It is trained on 512x512 images from a subset of the LAION-5B database. LAION-5B is the largest, freely accessible multi-modal dataset that currently exists.

This guide will not explain how the model works. If you are interested, you should check out the Stable Diffusion with 🧨 Diffusers blog post or The Annotated Diffusion Model.

![stable-diffusion-architecture](https://www.philschmid.de/static/blog/stable-diffusion-inference-endpoints/stable-diffusion.png)

What are Hugging Face Inference Endpoints?

🤗 Inference Endpoints offers a secure production solution to easily deploy Machine Learning models on dedicated and autoscaling infrastructure managed by Hugging Face.

A Hugging Face Inference Endpoint is built from a Hugging Face Model Repository. It supports all the Transformers and Sentence-Transformers tasks as well as Diffusers tasks and any arbitrary ML framework through easy customization by adding a custom inference handler. This custom inference handler can be used to implement simple inference pipelines for ML frameworks like Keras, TensorFlow, and scikit-learn or to add custom business logic to your existing transformers pipeline.
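To make the idea of a custom inference handler more concrete, here is a minimal sketch. It assumes the handler convention of a handler.py file in the model repository that exposes an EndpointHandler class with `__init__(path)` and `__call__(data)`. The `pipeline` argument and the default stand-in function are hypothetical additions so the sketch runs without model weights; a real handler would load the model from `path` instead.

```python
from typing import Any, Callable, Dict, Optional


class EndpointHandler:
    """Sketch of a custom inference handler (assumed to live in handler.py)."""

    def __init__(self, path: str = "", pipeline: Optional[Callable[..., Any]] = None):
        # `path` is the local directory of the model repository.
        # Hypothetical: `pipeline` is injected here so the sketch runs without
        # model weights. A real handler would build its pipeline from `path`.
        self.path = path
        self.pipeline = pipeline or (lambda prompt, **kwargs: f"image for: {prompt}")

    def __call__(self, data: Dict[str, Any]) -> Any:
        # The request body is expected to carry the prompt under "inputs"
        # and optional generation settings under "parameters".
        prompt = data.get("inputs", "")
        parameters = data.get("parameters") or {}

        # Example of custom business logic: reject empty prompts early.
        if not prompt:
            raise ValueError("The request must contain a non-empty 'inputs' field.")

        return self.pipeline(prompt, **parameters)


# Usage with the stand-in pipeline
handler = EndpointHandler()
print(handler({"inputs": "a blue whale", "parameters": {"width": 768}}))
```

Keeping the request parsing in `__call__` and the model loading in `__init__` means the model is loaded once when the endpoint starts, not on every request.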

1. Deploy Stable Diffusion as an Inference Endpoint

In this tutorial, you will learn how to deploy any Stable-Diffusion model from the Hugging Face Hub to Hugging Face Inference Endpoints and how to integrate it via an API into your products.

You can access the UI of Inference Endpoints directly at: https://ui.endpoints.huggingface.co/ or through the landing page.

The first step is to deploy our model as an Inference Endpoint. To do so, we add the Hugging Face repository ID of the Stable Diffusion model we want to deploy. In our case, it is stabilityai/stable-diffusion-2.

![repository](https://www.philschmid.de/static/blog/stable-diffusion-inference-endpoints/repository.png)

Note: If the repository is not showing up in the search it might be gated, e.g. runwayml/stable-diffusion-v1-5. To deploy gated models you need to accept the terms on the model page. Additionally, it is currently only possible to deploy gated repositories from user accounts and not within organizations.

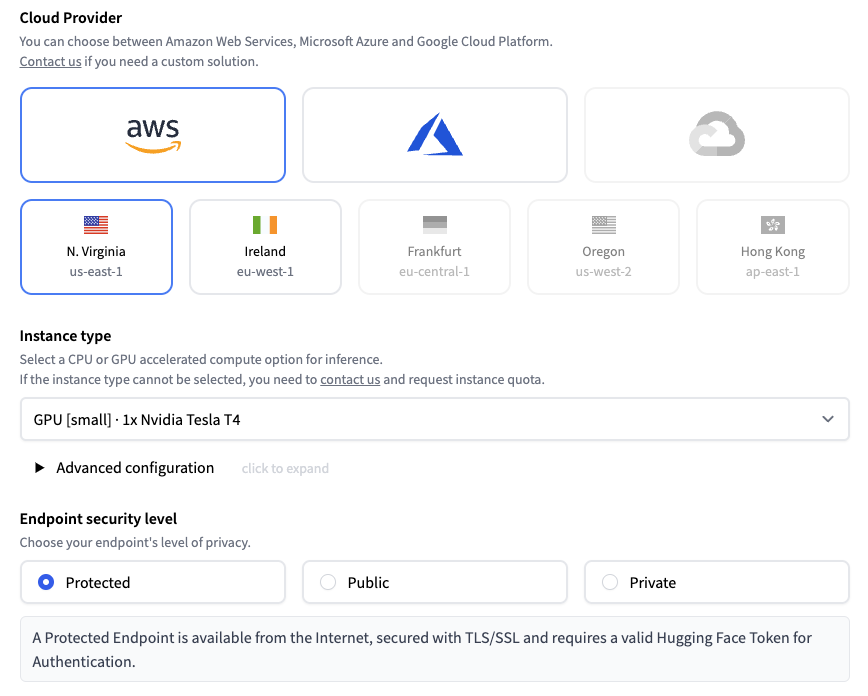

Now, we can make changes to the provider, region, or instance we want to use as well as configure the security level of our endpoint.

![settings](https://www.philschmid.de/static/blog/stable-diffusion-inference-endpoints/settings.png)

We can deploy our model by clicking on the “Create Endpoint” button. Inference Endpoints will then create a dedicated container with the model and start our resources. After a few minutes, our endpoint is up and running.

2. Test & Generate Images with Stable Diffusion 2.0

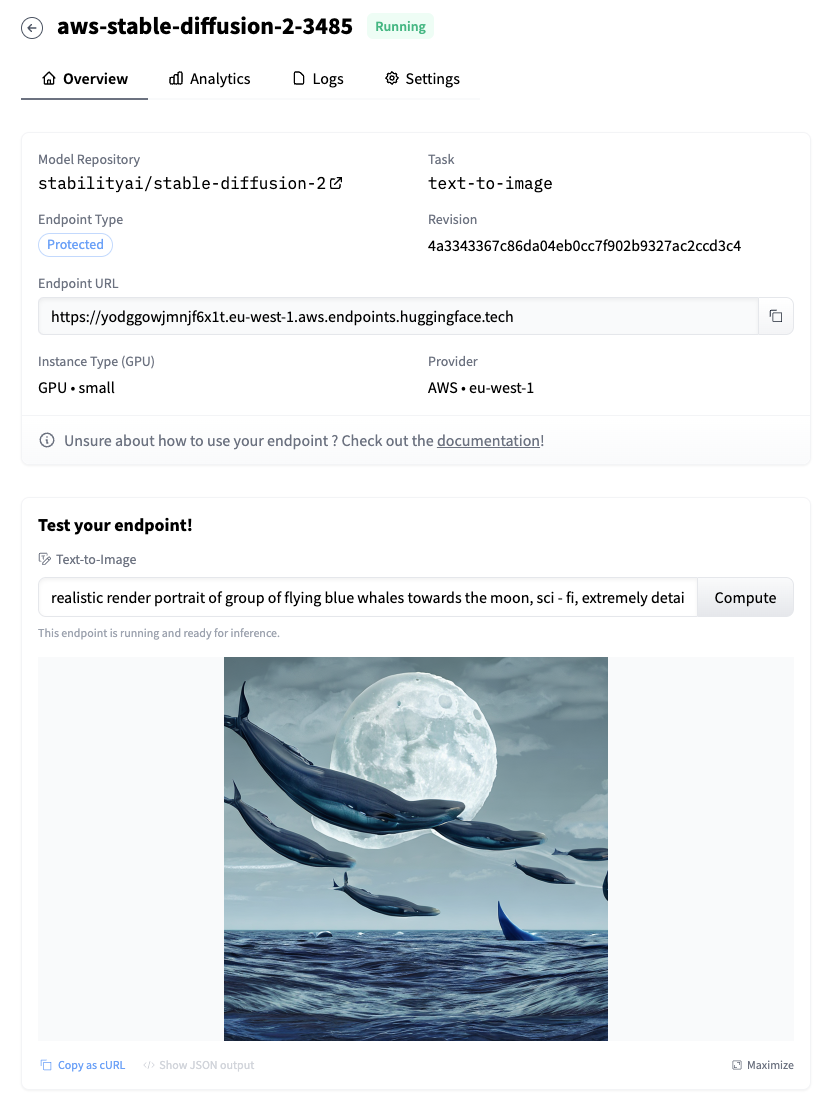

Before integrating the endpoint and model into our applications, we can demo and test the model directly in the UI. Each Inference Endpoint comes with an inference widget similar to the ones you know from the Hugging Face Hub.

We can provide a prompt for the image to be generated. Let's try realistic render portrait of group of flying blue whales towards the moon, intricate, toy, sci - fi, extremely detailed, digital painting.

![detail-page](https://www.philschmid.de/static/blog/stable-diffusion-inference-endpoints/detail-page.png)

3. Integrate Stable Diffusion as API and send HTTP requests using Python

Hugging Face Inference Endpoints can work directly with binary data, meaning we can send our prompt and get an image in return. We are going to use requests to send our requests and PIL to save the generated images to disk (make sure you have both installed: pip install requests Pillow).

```python
import requests as r
from io import BytesIO
from PIL import Image

ENDPOINT_URL = ""  # url of your endpoint
HF_TOKEN = ""  # token of the account where you deployed your endpoint


def generate_image(prompt: str):
    payload = {"inputs": prompt}
    headers = {
        "Authorization": f"Bearer {HF_TOKEN}",
        "Content-Type": "application/json",
        "Accept": "image/png",  # important to get an image back
    }
    response = r.post(ENDPOINT_URL, headers=headers, json=payload)
    # the response body is the raw PNG, so we can open it directly with PIL
    img = Image.open(BytesIO(response.content))
    return img


# define your prompt
prompt = "realistic render portrait realistic render portrait of group of flying blue whales towards the moon, intricate, toy, sci - fi, extremely detailed, digital painting, sculpted in zbrush, artstation, concept art, smooth, sharp focus, illustration, chiaroscuro lighting, golden ratio, incredible art by artgerm and greg rutkowski and alphonse mucha and simon stalenhag"

# generate image
image = generate_image(prompt)

# save to disk
image.save("generated_image.png")  # file name is an assumption; use any path you like
```
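The function above hands response.content straight to PIL, so a failed request would surface as a confusing image decoding error. The sketch below shows one way to check the response first. The helper name extract_image_bytes is hypothetical, and it assumes that failures come back with a non-200 status or a non-image Content-Type.

```python
def extract_image_bytes(status_code: int, content_type: str, body: bytes) -> bytes:
    """Return the raw image bytes, or raise if the response is not a successful image."""
    if status_code != 200:
        # Include a short preview of the body, which may carry an error message.
        raise RuntimeError(f"Endpoint returned HTTP {status_code}: {body[:200]!r}")
    if not content_type.lower().startswith("image/"):
        raise RuntimeError(f"Expected an image, got Content-Type {content_type!r}")
    return body
```

Inside generate_image, this could be used as `Image.open(BytesIO(extract_image_bytes(response.status_code, response.headers.get("Content-Type", ""), response.content)))`.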

We can also change the hyperparameters of the Stable Diffusion pipeline by providing them in the parameters attribute when sending requests. Below is an example JSON payload for generating a 768x768 image.

```json
{
  "inputs": "realistic render portrait realistic render portrait of group of flying blue whales towards the moon, intricate, toy, sci - fi, extremely detailed, digital painting, sculpted in zbrush, artstation, concept art, smooth, sharp focus, illustration, chiaroscuro lighting, golden ratio, incredible art by artgerm and greg rutkowski and alphonse mucha and simon stalenhag",
  "parameters": {
    "width": 768,
    "height": 768,
    "guidance_scale": 9
  }
}
```

Now, it's your time to be creative and generate some amazing images with Stable Diffusion 2.0 on Inference Endpoints.
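As a starting point for experimenting, the JSON payload above could be assembled in Python like this. It is a minimal sketch: build_payload is a hypothetical helper, and it only covers the three parameters shown in the example (width, height, guidance_scale).

```python
import json
from typing import Any, Dict, Optional


def build_payload(
    prompt: str,
    width: Optional[int] = None,
    height: Optional[int] = None,
    guidance_scale: Optional[float] = None,
) -> Dict[str, Any]:
    """Build the request body, including only the parameters that were set."""
    candidates = {"width": width, "height": height, "guidance_scale": guidance_scale}
    parameters = {name: value for name, value in candidates.items() if value is not None}

    payload: Dict[str, Any] = {"inputs": prompt}
    if parameters:
        # Omit the "parameters" key entirely when nothing was overridden.
        payload["parameters"] = parameters
    return payload


# Build a payload for a 768x768 image and show the JSON that would be sent
payload = build_payload("flying blue whales towards the moon", width=768, height=768, guidance_scale=9)
print(json.dumps(payload, indent=2))
```

The resulting dictionary can be passed as the json argument of the r.post call used earlier, in place of the payload that only contains the prompt.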

Thanks for reading! If you have any questions, feel free to contact me on Twitter or LinkedIn.