This week, I had the opportunity to give a behind-the-scenes tour of building a machine learning system for Southeast Asia’s largest hospital group, at the DataTalks.Club conference. The video is available below. We’ll discuss a few key points in this post.

• • •

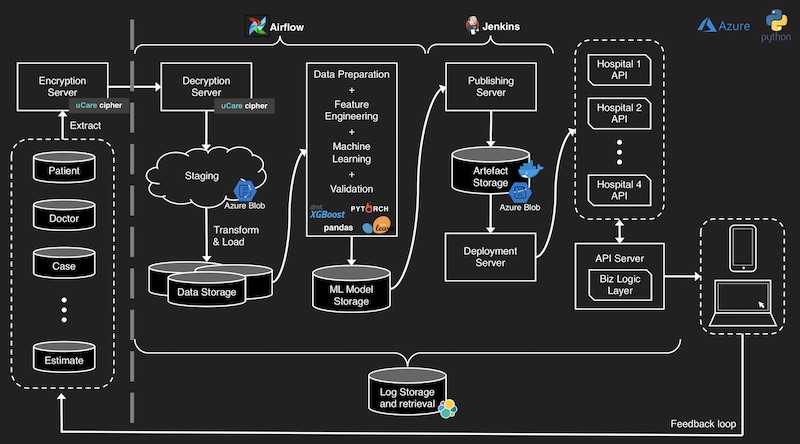

Overall design: From data to endpoint, and back to data

Design of the machine learning system, from data to model endpoint.

The grey dashed line (on the left) separates the hospitals’ environment from ours. Hospitals store the data, including patient, doctor, and case information. This data is periodically extracted, encrypted, and transported to our SFTP server. We then decrypt, validate, and store the raw, unstructured data (e.g., CSVs, Parquet files) in our staging area. The raw data is then parsed and stored in our RDBMS.
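As a rough illustration of the validation step (not the system's actual code), the sketch below checks a decrypted CSV extract before it is staged. The column names and the specific checks are assumptions made up for this example.

```python
import csv
import io
from datetime import date

# Hypothetical schema: these column names are illustrative only.
REQUIRED_COLUMNS = {"case_id", "patient_id", "doctor_id", "admission_date"}


def validate_rows(csv_text):
    """Split parsed rows into (valid, rejected) using simple schema checks."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = REQUIRED_COLUMNS - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"missing columns: {sorted(missing)}")

    valid, rejected = [], []
    for row in reader:
        try:
            # Identifiers must be non-empty and the date must parse as ISO (YYYY-MM-DD).
            if not all(row[col] for col in ("case_id", "patient_id", "doctor_id")):
                raise ValueError("empty identifier")
            row["admission_date"] = date.fromisoformat(row["admission_date"])
            valid.append(row)
        except (TypeError, ValueError):
            # Keep bad rows aside for inspection instead of silently dropping them.
            rejected.append(row)
    return valid, rejected
```

Rejected rows are returned rather than discarded so they can be reviewed, for example with the data's owners.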

The data science workflow reads the data (from the tabular databases) and puts it through the pipeline, including data preparation, feature engineering, machine learning, and model validation. (For more details, please view the talk.)

The trained model is stored in our model store. Models can take various formats, such as pickle files, library-specific formats (e.g., PyTorch), or Docker images that include serving code. These models are then pushed to the publishing server, which versions and deploys the models. Model versioning lets us easily roll back model deployments, providing an insurance policy (in case a deployment goes awry).
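To make the versioning-and-rollback idea concrete, here is a minimal in-memory sketch. `ModelRegistry` and its methods are hypothetical names for illustration; the real publishing server is not described at this level of detail.

```python
class ModelRegistry:
    """Toy in-memory stand-in for a model store plus publishing server."""

    def __init__(self):
        self._versions = []  # published artifacts, oldest first
        self._live = None    # index into _versions of the deployed model

    def publish(self, artifact):
        """Store a new artifact, deploy it, and return its version number."""
        self._versions.append(artifact)
        self._live = len(self._versions) - 1
        return self._live + 1

    def rollback(self):
        """Redeploy the previous version; older artifacts are never deleted."""
        if self._live is None or self._live == 0:
            raise RuntimeError("no earlier version to roll back to")
        self._live -= 1
        return self._live + 1

    @property
    def live_model(self):
        if self._live is None:
            raise RuntimeError("nothing deployed yet")
        return self._versions[self._live]


registry = ModelRegistry()
registry.publish("model-2021-01.pkl")  # artifact names are made up
registry.publish("model-2021-02.pkl")
registry.rollback()
print(registry.live_model)  # model-2021-01.pkl
```

Because every artifact is retained, rolling back only changes which version is live, which is what makes it a cheap insurance policy.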

Each hospital has a unique model endpoint and stores the predictions received (we also log these predictions on our end). This completes the entire cycle and provides a feedback loop to validate and improve model performance.
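A minimal sketch of how logged predictions could close the loop, assuming the model outputs a number and that each prediction is logged under a case ID (both are assumptions; the function name is hypothetical):

```python
def mean_absolute_error_from_logs(predictions, actuals):
    """Compare logged predictions with outcomes observed later.

    Both arguments map a case ID to a number. Cases whose outcome has not
    been observed yet are skipped. Returns None if nothing can be compared.
    """
    errors = [
        abs(predictions[case_id] - actuals[case_id])
        for case_id in predictions
        if case_id in actuals
    ]
    if not errors:
        return None
    return sum(errors) / len(errors)
```

Tracking a metric like this per model version is one way to confirm that a newly deployed model performs in production as it did in validation.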

Logging and monitoring are done via the ELK stack, orchestration via Airflow, and endpoint publishing via Jenkins. Implementation was done in Python and deployed on Azure (a common cloud provider used by healthcare organizations in Southeast Asia).

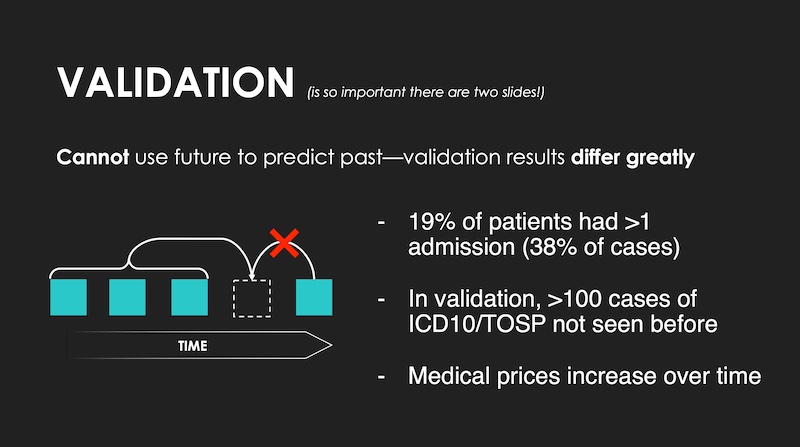

Most prod systems should use time-based validation

The difference between a random-split and a time-based split.

In most production systems, our data will have a strong temporal aspect. Thus, using a random train-test split or cross-validation will give an overly optimistic result. This was especially so in our data, where many patients had more than one visit, and a random split might lead to using future hospital visits to predict past hospital visits. A time-based split should be used instead.
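The difference can be sketched in a few lines of plain Python. The visit log below is toy data invented for illustration, and the helper names are hypothetical; `leaks_future` flags the exact problem described above, where a patient's later visit ends up in training while an earlier visit is in the test set.

```python
import random
from datetime import date

# Toy visit log: (patient_id, visit_date). Values are made up.
visits = [
    ("p1", date(2020, 1, 10)), ("p1", date(2020, 6, 2)), ("p1", date(2020, 11, 20)),
    ("p2", date(2020, 2, 14)), ("p2", date(2020, 9, 30)),
    ("p3", date(2020, 3, 5)), ("p3", date(2020, 12, 1)),
]


def time_based_split(rows, cutoff):
    """Train on visits before the cutoff date, test on visits on or after it."""
    train = [r for r in rows if r[1] < cutoff]
    test = [r for r in rows if r[1] >= cutoff]
    return train, test


def random_split(rows, test_fraction, seed):
    """Shuffle rows and hold out a fraction, ignoring time."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def leaks_future(train, test):
    """True if any training visit is later than a test visit of the same patient."""
    return any(
        tr_pid == te_pid and tr_date > te_date
        for tr_pid, tr_date in train
        for te_pid, te_date in test
    )


train, test = time_based_split(visits, cutoff=date(2020, 9, 1))
print(leaks_future(train, test))  # False: every training visit precedes the cutoff
```

Running `leaks_future` on the output of `random_split` with a few different seeds is a quick way to see how often a random split mixes future visits into training.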

There are exceptions, though. One example is a product classifier that categorizes products based on images or text (e.g., title, description). The relationship between the images or text and the product category is likely stable over time, so there probably isn’t much difference between a random split and a time-based split.

Data leaks happen if we're not using a time-based validation split.

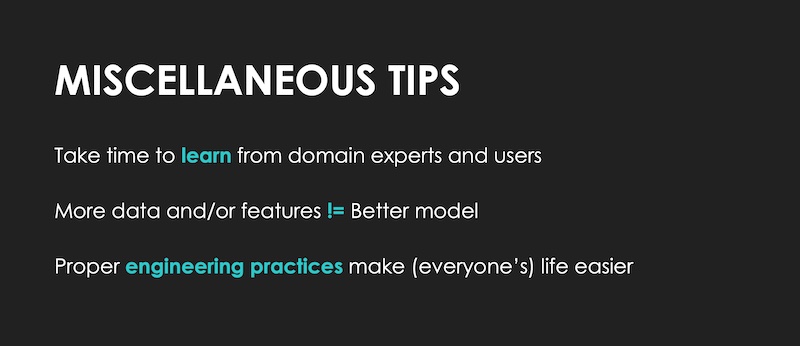

Miscellaneous tips and key takeaways

During the Q&A, the conference organizer, Alexey, chimed in that the slides on miscellaneous tips and key takeaways were probably the most […]. Here’s a brief rundown:

Three simple bullet points that had outsized impact on overall outcomes.

Take time to learn from domain experts and users. While developing and improving our system, I visited the hospitals and observed the nursing and counter staff use their existing system, and eventually, our new system. This helped me learn about the assumptions and heuristics they adopted while using the systems, providing insight into new features (for our ML model) that reduced error. I also consulted hospital administrators for advice on how to better understand and clean the data—this also helped reduce error.

More data and/or features != better model. My initial approach was to use all the data from the various hospitals to build a single model—I assumed the model could learn about the idiosyncrasies of each hospital. This was not the case (or perhaps my models were underpowered). Building a separate model for each hospital worked much better (i.e., significantly lower error). In hindsight, this makes sense as each hospital was different in price point (e.g., 5-star hotel vs. public hospital), surgery and disease specialization, doctors, etc.
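A toy sketch of why pooling can hurt when hospitals differ sharply in price point. The records are invented, and the "model" is just a mean predictor (the extreme case of an underpowered model), so this only illustrates the mechanism and says nothing about the actual results. Error is measured in-sample for brevity.

```python
from collections import defaultdict
from statistics import mean

# Toy records: (hospital, numeric target). Numbers are invented for illustration.
records = [
    ("premium", 900.0), ("premium", 1100.0), ("premium", 1000.0),
    ("public", 90.0), ("public", 110.0), ("public", 100.0),
]


def fit_pooled(rows):
    """One 'model' for all hospitals: the overall mean target."""
    overall = mean(target for _, target in rows)
    return lambda hospital: overall


def fit_per_hospital(rows):
    """One 'model' per hospital: the mean target within that hospital."""
    by_hospital = defaultdict(list)
    for hospital, target in rows:
        by_hospital[hospital].append(target)
    means = {h: mean(targets) for h, targets in by_hospital.items()}
    return lambda hospital: means[hospital]


def mean_abs_error(predict, rows):
    """Average absolute gap between predictions and targets."""
    return mean(abs(predict(h) - t) for h, t in rows)


print(mean_abs_error(fit_pooled(records), records))        # 450.0
print(mean_abs_error(fit_per_hospital(records), records))  # about 36.67
```

A more expressive pooled model with hospital as a feature might close this gap; the sketch only shows what happens when the pooled model cannot capture the differences between hospitals.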

Proper engineering practices make life easier. Beyond engineering practices in our code (e.g., test cases, linting, type checking), adopting version control on model artifacts let us roll back “defective” models if we ever needed to (we never did). Docker helped us encapsulate and horizontally scale our model endpoints, while the ELK stack provided much-needed visibility across our entire pipeline. All this made deploying and operating the ML system much easier.

Machine learning is <20% of the effort; the methodology and engineering process are more important. Many of the improvements to the system came from engaging with stakeholders to understand the problem, defining the right proxy metrics, and grokking the data. Also, as the architecture diagram shows, machine learning is really only a small part of the overall system. And I didn’t even cover the topics of infra, networking, maintenance, monitoring, etc.

I shared a similar sentiment on Vicki Boykis’ question, and some people seemed to agree.

• • •

A common pitfall I see in start-ups and SMEs is focusing too much on applying machine learning to batch data, usually CSVs, and achieving artificially strong results that are overfitted and not replicable in production. I hope this talk provided a better understanding of how to avoid this and develop a production-grade ML system.

If you found this useful, please cite this write-up as:

Yan, Ziyou. (Feb 2021). DataTalksClub - Building an ML System; Behind the Scenes. eugeneyan.com. https://eugeneyan.com/speaking/machine-learning-in-healthcare/.

or

@article{yan2021healthcare, title = {DataTalksClub - Building an ML System; Behind the Scenes}, author = {Yan, Ziyou}, journal = {eugeneyan.com}, year = {2021}, month = {Feb}, url = {https://eugeneyan.com/speaking/machine-learning-in-healthcare/}}