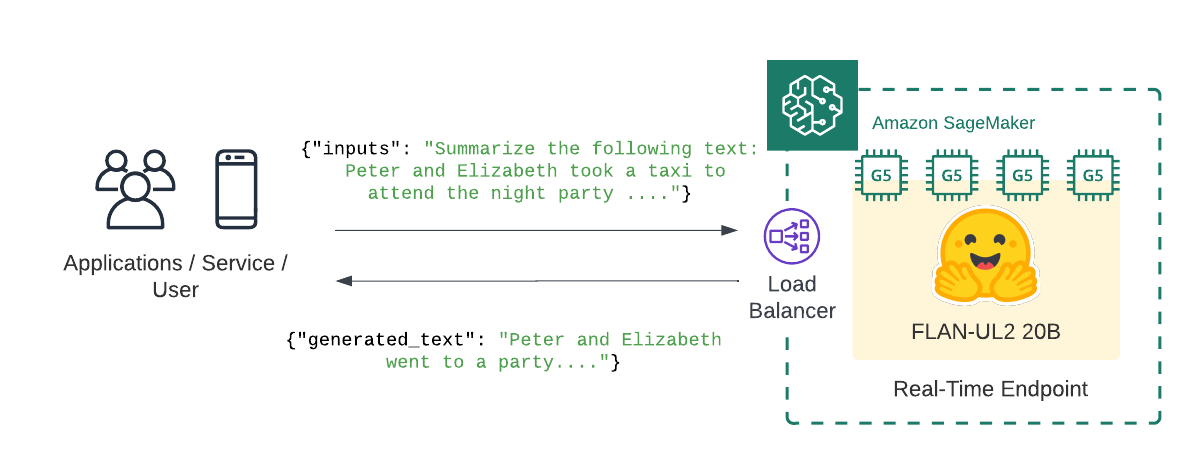

Welcome to this Amazon SageMaker guide on how to deploy FLAN-UL2 20B on Amazon SageMaker for inference. We will deploy google/flan-ul2 to Amazon SageMaker for real-time inference using the Hugging Face Inference Deep Learning Container (DLC).

What we are going to do

- Create FLAN-UL2 20B inference script
- Create SageMaker model.tar.gz artifact
- Deploy the model to Amazon SageMaker
- Run inference using the deployed model

Quick intro: FLAN-UL2, a bigger FLAN-T5

FLAN-UL2 is an encoder-decoder (seq2seq) model based on the T5 architecture. It uses the same configuration as the UL2 model released last year. It was fine-tuned using the "Flan" prompt tuning and dataset collection. FLAN-UL2 was trained as part of the Scaling Instruction-Finetuned Language Models paper. Notable differences from FLAN-T5 XXL are:

- FLAN-UL2 has a context window of 2048 tokens, compared to 512 for FLAN-T5 XXL
- ~3% better performance than FLAN-T5 XXL on benchmarks

![flan-ul2](https://www.philschmid.de/static/blog/deploy-flan-ul2-sagemaker/flan.webp)

Before we can get started, we have to install the missing dependencies needed to create our model.tar.gz artifact and our Amazon SageMaker endpoint. We also have to make sure we have the permissions to create our SageMaker endpoint.

```python
!pip install "sagemaker>=2.140.0" boto3 "huggingface_hub==0.13.0" "hf-transfer" --upgrade
```

If you are going to use SageMaker in a local environment (not SageMaker Studio or Notebook Instances), you need access to an IAM role with the required permissions for SageMaker. You can find more about it here.

```python
import sagemaker
import boto3

sess = sagemaker.Session()
# sagemaker session bucket -> used for uploading data, models and logs
# sagemaker will automatically create this bucket if it does not exist
sagemaker_session_bucket = None
if sagemaker_session_bucket is None and sess is not None:
    # set to default bucket if a bucket name is not given
    sagemaker_session_bucket = sess.default_bucket()

try:
    role = sagemaker.get_execution_role()
except ValueError:
    iam = boto3.client('iam')
    role = iam.get_role(RoleName='sagemaker_execution_role')['Role']['Arn']

sess = sagemaker.Session(default_bucket=sagemaker_session_bucket)

print(f"sagemaker role arn: {role}")
print(f"sagemaker bucket: {sess.default_bucket()}")
print(f"sagemaker session region: {sess.boto_region_name}")
```

Create FLAN-UL2 20B inference script

Amazon SageMaker allows us to customize the inference script by providing an inference.py file. The inference.py file is the entry point to our model: it is responsible for loading the model and handling the inference requests. If you are used to deploying Hugging Face Transformers, this might be new to you. Usually, we just provide the HF_MODEL_ID and HF_TASK, and the Hugging Face DLC takes care of the rest. For FLAN-UL2 that's not yet possible. We have to provide the inference.py file and implement the model_fn and predict_fn functions to efficiently load the 20B-parameter model.
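To make the contract concrete, here is a minimal local sketch of how the inference toolkit uses the two functions: `model_fn` is called once when the worker starts, and whatever it returns is passed to `predict_fn` together with the deserialized request body on every request. `StubModel` and `StubTokenizer` are hypothetical stand-ins for the real Transformers objects, so the sketch runs without a GPU or the 20B weights. It mirrors the payload handling of the inference.py used in this guide, but it is not the toolkit's actual code.

```python
from typing import Any, Dict, List, Optional, Tuple


class StubTokenizer:
    """Hypothetical stand-in for AutoTokenizer: maps words to fake ids and back."""

    def encode(self, text: str) -> List[int]:
        return [len(word) for word in text.split()]

    def decode(self, ids: List[int]) -> str:
        return " ".join(str(i) for i in ids)


class StubModel:
    """Hypothetical stand-in for AutoModelForSeq2SeqLM with a generate() method."""

    def generate(self, input_ids: List[int], max_new_tokens: int = 20, **kwargs: Any) -> List[int]:
        # "generate" by truncating the input; a real model produces new tokens
        return input_ids[:max_new_tokens]


def model_fn(model_dir: str) -> Tuple[StubModel, StubTokenizer]:
    # the real handler loads the weights from model_dir here
    return StubModel(), StubTokenizer()


def predict_fn(data: Dict[str, Any], model_and_tokenizer: Tuple[StubModel, StubTokenizer]) -> List[Dict[str, str]]:
    model, tokenizer = model_and_tokenizer
    inputs = data.pop("inputs", data)
    parameters: Optional[Dict[str, Any]] = data.pop("parameters", None)
    input_ids = tokenizer.encode(inputs)
    # optional "parameters" are forwarded as keyword arguments to generate()
    outputs = model.generate(input_ids, **(parameters or {}))
    return [{"generated_text": tokenizer.decode(outputs)}]


# roughly what happens on the endpoint: load once, then call predict_fn per request
loaded = model_fn("/opt/ml/model")  # SageMaker extracts model.tar.gz to /opt/ml/model
print(predict_fn({"inputs": "hello big world"}, loaded))
# [{'generated_text': '5 3 5'}]
print(predict_fn({"inputs": "hello big world", "parameters": {"max_new_tokens": 2}}, loaded))
# [{'generated_text': '5 3'}]
```

Splitting loading from prediction is what keeps the expensive 20B model load out of the request path.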

If you want to learn more about creating a custom inference script, you can check out Creating document embeddings with Hugging Face's Transformers & Amazon SageMaker.

In addition to the inference.py file, we also have to provide a requirements.txt file, which is used to install the dependencies for our inference.py file.

The first step is to create a code/ directory.
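A minimal way to do this from Python, sketched with pathlib (a `!mkdir code` shell escape in the notebook would do the same). `exist_ok=True` makes the cell safe to re-run:

```python
from pathlib import Path

# directory that will later be copied into the model archive as code/
code_dir = Path("code")
code_dir.mkdir(exist_ok=True)
print(code_dir.resolve())
```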

Next, we create a requirements.txt file and add accelerate (plus a pinned transformers version) to it. The accelerate library is used to load the model efficiently across multiple GPUs.

```python
%%writefile code/requirements.txt
accelerate==0.18.0
transformers==4.27.2
```

The last step for our inference handler is to create the inference.py file. The inference.py file is responsible for loading the model and handling the inference request. The model_fn function is called when the model is loaded. The predict_fn function is called when we want to do inference.

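In the model_fn, we use the AutoModelForSeq2SeqLM class from transformers to load the model from the local directory (model_dir). Passing device_map="auto" lets accelerate spread the weights across all available GPUs, and torch_dtype=torch.bfloat16 loads them in 16-bit precision. In the predict_fn function, we use the generate function from transformers to generate the text for a given input prompt.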

```python
%%writefile code/inference.py
from typing import Dict, List, Any
from transformers import AutoModelForSeq2SeqLM, AutoTokenizer
import torch


def model_fn(model_dir):
    # load model and processor from model_dir
    model = AutoModelForSeq2SeqLM.from_pretrained(
        model_dir, device_map="auto", torch_dtype=torch.bfloat16, low_cpu_mem_usage=True
    )
    tokenizer = AutoTokenizer.from_pretrained(model_dir)

    return model, tokenizer


def predict_fn(data, model_and_tokenizer):
    # unpack model and tokenizer
    model, tokenizer = model_and_tokenizer

    # process input
    inputs = data.pop("inputs", data)
    parameters = data.pop("parameters", None)

    # preprocess
    input_ids = tokenizer(inputs, return_tensors="pt").input_ids

    # pass inputs with all kwargs in data
    if parameters is not None:
        outputs = model.generate(input_ids, **parameters)
    else:
        outputs = model.generate(input_ids)

    # postprocess the prediction
    prediction = tokenizer.decode(outputs[0], skip_special_tokens=True)

    return [{"generated_text": prediction}]
```

Create SageMaker model.tar.gz artifact

To use our inference.py, we need to bundle it together with our model weights into a model.tar.gz. The archive includes all the model artifacts needed to run inference. The inference.py script will be placed into a code/ folder. We will use the huggingface_hub SDK to download google/flan-ul2 from Hugging Face and then upload it to Amazon S3 with the sagemaker SDK.

Make sure the environment has enough disk space to store the model; ~35GB should be enough.
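A quick way to check this before starting the download is `shutil.disk_usage` from the standard library. The sketch below compares the free space of the volume holding the current directory with the ~35GB figure above; `REQUIRED_GB` and `has_enough_disk` are hypothetical names, and you may want extra margin because the model.tar.gz archive is written next to the downloaded weights.

```python
import shutil

# rough requirement taken from the text above; raise it to leave room for the archive
REQUIRED_GB = 35


def has_enough_disk(path: str = ".", required_gb: float = REQUIRED_GB) -> bool:
    """Return True if the filesystem holding `path` has at least `required_gb` GB free."""
    free_gb = shutil.disk_usage(path).free / 1e9
    print(f"free disk space: {free_gb:.1f} GB (need ~{required_gb} GB)")
    return free_gb >= required_gb


if not has_enough_disk():
    print("warning: the FLAN-UL2 download may not fit on this volume")
```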

```python
import os
import shutil
from pathlib import Path

# set HF_HUB_ENABLE_HF_TRANSFER env var to enable hf-transfer for faster downloads
# (must be set before huggingface_hub is imported)
os.environ["HF_HUB_ENABLE_HF_TRANSFER"] = "1"
from huggingface_hub import snapshot_download

HF_MODEL_ID = "google/flan-ul2"
# create model dir
model_tar_dir = Path(HF_MODEL_ID.split("/")[-1])
model_tar_dir.mkdir(exist_ok=True)

# Download model from Hugging Face into model_dir
snapshot_download(HF_MODEL_ID, local_dir=str(model_tar_dir), local_dir_use_symlinks=False)

# copy code/ to model dir (shutil replaces the deprecated distutils.dir_util.copy_tree)
shutil.copytree("code", model_tar_dir / "code", dirs_exist_ok=True)
```

Before we can upload the model to Amazon S3, we have to create a model.tar.gz archive. It is important that the archive directly contains all files and not a folder with the files. For example, your file should look like this:

```
model.tar.gz/
|- config.json
|- pytorch_model-00001-of-00012.bin
|- tokenizer.json
|- ...
```

```python
parent_dir = os.getcwd()
# change to model dir
os.chdir(str(model_tar_dir))
# use pigz for faster and parallel compression
!tar -cf model.tar.gz --use-compress-program=pigz *
# change back to parent dir
os.chdir(parent_dir)
```

After we have created the model.tar.gz archive, we can upload it to Amazon S3. We will use the sagemaker SDK to upload the model to our SageMaker session bucket.
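Before uploading, it can be worth confirming that the files really sit at the root of the archive, since a wrapping folder is an easy mistake to make. A minimal sketch using Python's tarfile module; `missing_root_files` and the expected file list are hypothetical choices based on the layout above and the code/ folder described earlier. For an archive of this size, listing the members means reading through the whole compressed file, so expect this to take a while.

```python
import tarfile
from pathlib import Path
from typing import Iterable, List

# files we expect at the archive root (hypothetical list based on the layout above)
EXPECTED_AT_ROOT = ("config.json", "code/inference.py", "code/requirements.txt")


def missing_root_files(archive_path: str, expected: Iterable[str] = EXPECTED_AT_ROOT) -> List[str]:
    """Return the expected paths that are NOT found at the root of the archive."""
    with tarfile.open(archive_path, "r:*") as tar:  # "r:*" auto-detects gzip compression
        # normalise "./config.json" (as written by `tar -cf ... .`) to "config.json"
        names = {n[2:] if n.startswith("./") else n for n in tar.getnames()}
    return [path for path in expected if path not in names]


archive = Path("flan-ul2") / "model.tar.gz"  # model_tar_dir / "model.tar.gz" from the steps above
if archive.exists():
    missing = missing_root_files(str(archive))
    print("layout looks good" if not missing else f"not at archive root: {missing}")
```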

```python
from sagemaker.s3 import S3Uploader

# upload model.tar.gz to s3
s3_model_uri = S3Uploader.upload(
    local_path=str(model_tar_dir.joinpath("model.tar.gz")),
    desired_s3_uri=f"s3://{sess.default_bucket()}/flan-ul2",
)

print(f"model uploaded to: {s3_model_uri}")
```

Deploy the model to Amazon SageMaker

After we have uploaded our model archive, we can deploy our model to Amazon SageMaker. We will use HuggingFaceModel to create our real-time inference endpoint.

We are going to deploy the model to a g5.12xlarge instance. The g5.12xlarge instance is a GPU instance with 4x NVIDIA A10G GPUs. If you are interested in how you could add autoscaling to your endpoint, you can check out Going Production: Auto-scaling Hugging Face Transformers with Amazon SageMaker.
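A back-of-the-envelope calculation shows why a multi-GPU instance is needed: in bfloat16 each parameter takes 2 bytes, so ~20B parameters need about 40 GB for the weights alone. That is more than a single 24 GB A10G, while the four GPUs of a g5.12xlarge offer 96 GB in total. The sketch only counts weights; activations and generation overhead come on top, so treat the numbers as a lower bound.

```python
# Rough GPU memory estimate for the FLAN-UL2 weights (weights only, no activations).
PARAMS = 20e9            # ~20B parameters
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2}
A10G_MEMORY_GB = 24      # memory of one NVIDIA A10G
GPUS_PER_INSTANCE = 4    # ml.g5.12xlarge


def weights_gb(dtype: str, params: float = PARAMS) -> float:
    """Memory needed to hold the weights in the given dtype, in GB."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in BYTES_PER_PARAM:
    need = weights_gb(dtype)
    print(
        f"{dtype}: ~{need:.0f} GB | fits one A10G: {need <= A10G_MEMORY_GB} "
        f"| fits g5.12xlarge: {need <= A10G_MEMORY_GB * GPUS_PER_INSTANCE}"
    )
```

This is also why inference.py loads the model with torch_dtype=torch.bfloat16 and device_map="auto": half the memory of float32, sharded across all four GPUs.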

```python
from sagemaker.huggingface.model import HuggingFaceModel


# create Hugging Face Model Class
huggingface_model = HuggingFaceModel(
    model_data=s3_model_uri,      # path to your model and script
    role=role,                    # iam role with permissions to create an Endpoint
    transformers_version="4.26",  # transformers version used
    pytorch_version="1.13",       # pytorch version used
    py_version='py39',            # python version used
    model_server_workers=1,       # one worker process, so the 20B model is loaded only once
)

# deploy the endpoint
predictor = huggingface_model.deploy(
    initial_instance_count=1,
    instance_type="ml.g5.12xlarge",
    # container_startup_health_check_timeout=600, # increase timeout for large models
    # model_data_download_timeout=600, # increase timeout for large models
)
```

Run inference using the deployed model

The .deploy() method returns a HuggingFacePredictor object, which can be used to request inference using the .predict() method. Our endpoint expects a JSON payload with at least an inputs key.
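By default, the predictor serializes the dictionary you pass to `.predict()` as JSON, and `predict_fn` receives the decoded dictionary. The sketch below builds such a request body with the standard library; `build_payload` is a hypothetical helper, not part of the sagemaker SDK, and the `parameters` key is only added when generation arguments are given.

```python
import json
from typing import Any, Dict


def build_payload(inputs: str, **parameters: Any) -> Dict[str, Any]:
    """Build the request dict expected by predict_fn: "inputs" plus optional "parameters"."""
    payload: Dict[str, Any] = {"inputs": inputs}
    if parameters:
        payload["parameters"] = parameters
    return payload


request = build_payload("What is the capital of France?", max_new_tokens=20)
body = json.dumps(request)  # roughly the JSON body sent to the endpoint
print(body)
# {"inputs": "What is the capital of France?", "parameters": {"max_new_tokens": 20}}
```

With a deployed endpoint you would pass the dictionary itself, e.g. `predictor.predict(request)`.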

When using generative models, you usually want to configure your prediction to fit your needs, for example by using beam search, setting the maximum or minimum length of the generated sequence, or adjusting the sampling temperature. The Transformers library provides different decoding strategies and keyword arguments for this, and our predict_fn forwards the parameters attribute of the request payload to model.generate() as keyword arguments. Below you can find two examples with custom generation parameters. If you want to learn about different decoding strategies, check out this blog post.
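For reference, here is how the strategies mentioned above map onto `parameters` dictionaries. The keys are standard keyword arguments of Transformers' `generate()`; the concrete values are illustrative assumptions, not tuned settings. An empty dictionary leaves everything to the model's default generation config.

```python
from typing import Any, Dict

# Illustrative generation settings; values are examples, not tuned defaults.
GENERATION_PRESETS: Dict[str, Dict[str, Any]] = {
    # no parameters: generate() falls back to the model's default generation config
    "default": {},
    # beam search: track 4 candidate sequences, stop once 4 finished candidates exist
    "beam_search": {"num_beams": 4, "early_stopping": True, "max_new_tokens": 50},
    # nucleus sampling: sample from the top 95% probability mass; temperature < 1 sharpens it
    "sampling": {"do_sample": True, "top_p": 0.95, "temperature": 0.7, "max_new_tokens": 50},
}

for name, params in GENERATION_PRESETS.items():
    print(name, params)

# with a deployed endpoint, for example:
# predictor.predict({"inputs": prompt, "parameters": GENERATION_PRESETS["beam_search"]})
```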

payload = """Summarize the following text:Peter and Elizabeth took a taxi to attend the night party in the city. While in the party, Elizabeth collapsed and was rushed to the hospital.Since she was diagnosed with a brain injury, the doctor told Peter to stay besides her until she gets well.Therefore, Peter stayed with her at the hospital for 3 days without leaving.""" parameters = { "do_sample": True, "max_new_tokens": 50, "top_p": 0.95,} # Run predictionpredictor.predict({ "inputs": payload, "parameters" :parameters})# [{'generated_text': 'Peter stayed with Elizabeth at the hospital for 3 days.'}]Lets try another examples! This time we focus ond questions answering with a step by step approach including some simple math.

payload = """Answer the following question step by step:Roger has 5 tennis balls. He buys 2 more cans of tennis balls.Each can has 3 tennis balls. How many tennis balls does he have now?""" parameters = { "early_stopping": True, "length_penalty": 2.0, "max_new_tokens": 50, "temperature": 0,} # Run predictionpredictor.predict({ "inputs": payload, "parameters" :parameters})# [{'generated_text': 'He buys 2 cans of tennis balls, so he has 2 * 3 = 6 tennis balls. He has 5 + 6 = 11 tennis balls now.'}]Delete model and endpoint

To clean up, we can delete the model and endpoint.

```python
predictor.delete_model()
predictor.delete_endpoint()
```

Thanks for reading! If you have any questions, feel free to contact me on Twitter or LinkedIn.