This tutorial will help you get started with AWS Trainium and Hugging Face Transformers. It covers how to set up a Trainium instance on AWS and how to load and fine-tune a Transformers model for text classification.

You will learn how to:

- Set up the AWS environment
- Load and process the dataset
- Fine-tune BERT using Hugging Face Transformers and Optimum Neuron

Before we can start, make sure you have a Hugging Face Account to save artifacts and experiments.

Quick intro: AWS Trainium

AWS Trainium (Trn1) is a purpose-built EC2 instance type for deep learning (DL) training workloads. Trainium is the successor of AWS Inferentia and focuses on high-performance training; AWS claims up to 50% cost-to-train savings over comparable GPU-based instances.

Trainium has been optimized for training natural language processing, computer vision, and recommender models. The accelerator supports a wide range of data types, including FP32, TF32, BF16, FP16, UINT8, and configurable FP8.

The biggest Trainium instance, the trn1.32xlarge, comes with over 500 GB of memory, making it easy to fine-tune ~10B parameter models on a single instance. Below you will find an overview of the available instance types. More details are available on the AWS Trn1 instance page.

| Instance size | Accelerators | Accelerator memory (GB) | vCPUs | CPU memory (GB) | Price per hour |
|---|---|---|---|---|---|
| trn1.2xlarge | 1 | 32 | 8 | 32 | $1.34 |
| trn1.32xlarge | 16 | 512 | 128 | 512 | $21.50 |
| trn1n.32xlarge (2x bandwidth) | 16 | 512 | 128 | 512 | $24.78 |
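The statement that a trn1.32xlarge can fine-tune ~10B parameter models can be sanity-checked with a rough estimate. The sketch below assumes mixed-precision training with an Adam-style optimizer, for which a common rule of thumb is about 16 bytes per parameter (2 for BF16 weights, 2 for gradients, 12 for FP32 master weights and the two optimizer moments). The function name and the 16-byte figure are illustrative assumptions, and activation memory is ignored, so real requirements will be higher.

```python
# Rough memory estimate for full fine-tuning.
# Assumption: mixed precision + Adam, roughly 16 bytes per parameter.
BYTES_PER_PARAM = 2 + 2 + 12  # BF16 weights + BF16 gradients + FP32 master weights and Adam moments


def training_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Approximate memory for weights, gradients and optimizer state in GB."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, params in [("bert-base-uncased (~110M)", 110e6), ("10B parameter model", 10e9)]:
        print(f"{name}: ~{training_memory_gb(params):.1f} GB before activations")
```

Under these assumptions a 10B parameter model needs about 160 GB for weights, gradients, and optimizer state, which fits within the 512 GB of accelerator memory listed for the trn1.32xlarge but not within the 32 GB of the trn1.2xlarge.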

Now that we know what Trainium offers, let's get started. 🚀

Note: This tutorial was created on a trn1.2xlarge AWS EC2 Instance.

1. Set up the AWS environment

In this example, we will use a trn1.2xlarge instance on AWS, which has one Trainium accelerator with two Neuron cores, together with the Hugging Face Neuron Deep Learning AMI.

This blog post doesn't cover how to create the instance in detail. You can check out my previous blog about “Setting up AWS Trainium for Hugging Face Transformers”, which includes a step-by-step guide on setting up the environment.

Once the instance is up and running, we can ssh into it. But instead of developing inside a terminal we want to use a Jupyter environment, which we can use for preparing our dataset and launching the training. For this, we need to add a port for forwarding in the ssh command, which will tunnel our localhost traffic to the Trainium instance.

```bash
PUBLIC_DNS="" # public DNS or IP address, e.g. ec2-3-80-....
KEY_PATH="" # local path to key, e.g. ssh/trn.pem

ssh -L 8080:localhost:8080 -i "${KEY_PATH}" "ubuntu@${PUBLIC_DNS}"
```

We can now start our Jupyter server.

```bash
python -m notebook --allow-root --port=8080
```

You should see the familiar Jupyter output with a URL to the notebook.

http://localhost:8080/?token=8c1739aff1755bd7958c4cfccc8d08cb5da5234f61f129a9

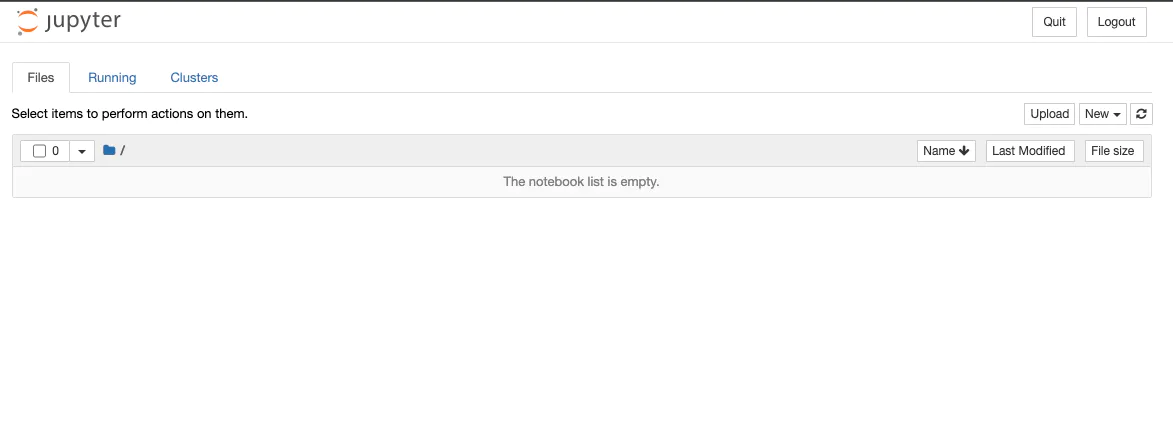

We can click on it, and a jupyter environment opens in our local browser.

We are going to use the Jupyter environment only for preparing the dataset, and then use torchrun to launch our training script on both Neuron cores for distributed training. Let's create a new notebook and get started.

2. Load and process the dataset

We are training a text classification model on the emotion dataset to keep the example straightforward. The emotion dataset consists of English Twitter messages labeled with six basic emotions: anger, fear, joy, love, sadness, and surprise.

We will use the load_dataset() method from the 🤗 Datasets library to load the emotion dataset.

```python
from datasets import load_dataset

# Dataset id from huggingface.co/dataset
dataset_id = "philschmid/emotion"

# Load raw dataset
raw_dataset = load_dataset(dataset_id)

print(f"Train dataset size: {len(raw_dataset['train'])}")
print(f"Test dataset size: {len(raw_dataset['test'])}")

# Train dataset size: 16000
# Test dataset size: 2000
```

Let's check out an example of the dataset.

```python
from random import randrange

random_id = randrange(len(raw_dataset['train']))
raw_dataset['train'][random_id]
# {'text': 'i feel isolated and alone in my trade', 'label': 0}
```

We must convert our "Natural Language" to token IDs to train our model. This is done by a tokenizer, which tokenizes the inputs (including converting the tokens to their corresponding IDs in the pre-trained vocabulary). If you want to learn more about this, check out chapter 6 of the Hugging Face Course.

Our Neuron Accelerator expects a fixed shape of inputs. We need to truncate or pad all samples to the same length.
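To illustrate what "truncate or pad to the same length" means, here is a minimal pure-Python sketch. It is a simplified illustration, not the tokenizer's implementation: the helper names and token ids are hypothetical, the pad id of 0 is an assumption, and a real tokenizer additionally takes care of special tokens when truncating.

```python
from typing import List


def pad_or_truncate(token_ids: List[int], max_length: int, pad_id: int = 0) -> List[int]:
    """Return exactly `max_length` ids: cut long inputs, right-pad short ones."""
    clipped = token_ids[:max_length]
    return clipped + [pad_id] * (max_length - len(clipped))


def attention_mask(token_ids: List[int], max_length: int) -> List[int]:
    """1 marks a real token, 0 marks padding."""
    real = min(len(token_ids), max_length)
    return [1] * real + [0] * (max_length - real)


short_ids = [101, 1045, 2514, 102]  # hypothetical token ids
long_ids = list(range(1, 11))       # ten hypothetical token ids

print(pad_or_truncate(short_ids, 8))  # [101, 1045, 2514, 102, 0, 0, 0, 0]
print(attention_mask(short_ids, 8))   # [1, 1, 1, 1, 0, 0, 0, 0]
print(pad_or_truncate(long_ids, 8))   # [1, 2, 3, 4, 5, 6, 7, 8]
```

Every sample ends up with the same length, so every batch has the same shape. In the tokenizer call, `padding='max_length'` and `truncation=True` request this behavior.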

```python
from transformers import AutoTokenizer
import os

# Model id to load the tokenizer
model_id = "bert-base-uncased"
save_dataset_path = "lm_dataset"

# Load Tokenizer
tokenizer = AutoTokenizer.from_pretrained(model_id)


# Tokenize helper function
def tokenize(batch):
    return tokenizer(batch['text'], padding='max_length', truncation=True, return_tensors="pt")


# Tokenize dataset
raw_dataset = raw_dataset.rename_column("label", "labels")  # to match Trainer
tokenized_dataset = raw_dataset.map(tokenize, batched=True, remove_columns=["text"])
tokenized_dataset = tokenized_dataset.with_format("torch")

# save dataset to disk
tokenized_dataset["train"].save_to_disk(os.path.join(save_dataset_path, "train"))
tokenized_dataset["test"].save_to_disk(os.path.join(save_dataset_path, "eval"))
```

3. Fine-tune BERT using Hugging Face Transformers

Normally you would use the Trainer and TrainingArguments to fine-tune PyTorch-based transformer models.

But together with AWS, we have developed a NeuronTrainer to improve performance, robustness, and safety when training on Trainium instances. The NeuronTrainer also comes with a model cache, which allows us to use precompiled models and configuration from Hugging Face Hub to skip the compilation step, which would be needed at the beginning of training. This can reduce the training time by ~3x.

The NeuronTrainer is part of the optimum-neuron library and can be used as a 1-to-1 replacement for the Trainer. You only have to adjust the import in your training script.

```diff
- from transformers import Trainer, TrainingArguments
+ from optimum.neuron import NeuronTrainer as Trainer
+ from optimum.neuron import NeuronTrainingArguments as TrainingArguments
```

We prepared a simple train.py training script based on the "Getting started with PyTorch 2.0 and Hugging Face Transformers" blog post that uses the NeuronTrainer. Below is an excerpt:

```python
import os

from datasets import load_from_disk
from transformers import AutoModelForSequenceClassification
from optimum.neuron import NeuronTrainer as Trainer
from optimum.neuron import NeuronTrainingArguments as TrainingArguments


def parse_arge():
    ...


def training_function(args):
    # load dataset from disk and tokenizer
    train_dataset = load_from_disk(os.path.join(args.dataset_path, "train"))
    ...
    # Download the model from huggingface.co/models
    model = AutoModelForSequenceClassification.from_pretrained(
        args.model_id, num_labels=num_labels, label2id=label2id, id2label=id2label
    )

    training_args = TrainingArguments(
        ...
    )

    # Create Trainer instance
    trainer = Trainer(
        model=model,
        args=training_args,
        train_dataset=train_dataset,
        eval_dataset=eval_dataset,
        compute_metrics=compute_metrics,
    )

    # Start training
    trainer.train()
```

We can load the training script into our environment using the wget command or manually copy it into the notebook from here.

```python
!wget https://raw.githubusercontent.com/huggingface/optimum-neuron/main/notebooks/text-classification/scripts/train.py
```

We will use torchrun to launch our training script on both Neuron cores for distributed training. torchrun is PyTorch's launcher for distributed jobs: it starts one process per worker and sets up the environment the processes need to communicate. We pass the number of Neuron cores as the nproc_per_node argument alongside our hyperparameters.

We'll use the following command to launch training:

```python
!torchrun --nproc_per_node=2 train.py \
  --model_id bert-base-uncased \
  --dataset_path lm_dataset \
  --lr 5e-5 \
  --per_device_train_batch_size 16 \
  --bf16 True \
  --epochs 3
```

Note: If you see poor accuracy, you might want to deactivate bf16 for now.

After roughly 9 minutes, training completed and achieved an excellent F1 score of 0.914.
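To make the torchrun launch more concrete, the sketch below shows two things: how a process started by torchrun can read its rank from the `RANK`, `LOCAL_RANK`, and `WORLD_SIZE` environment variables, and how many optimizer steps the command implies. The helper names are hypothetical, and the step count assumes plain data-parallel training where each worker processes its own shard of the data, with no gradient accumulation.

```python
import math
import os


def worker_info() -> dict:
    """Read the rank information that torchrun exports to every process it starts."""
    return {
        "rank": int(os.environ.get("RANK", 0)),              # global index of this process
        "local_rank": int(os.environ.get("LOCAL_RANK", 0)),  # index on this machine
        "world_size": int(os.environ.get("WORLD_SIZE", 1)),  # total number of processes
    }


def steps_per_epoch(num_samples: int, per_device_batch_size: int, world_size: int) -> int:
    """Optimizer steps per epoch when every worker processes its own shard of the data."""
    global_batch_size = per_device_batch_size * world_size
    return math.ceil(num_samples / global_batch_size)


if __name__ == "__main__":
    print(worker_info())  # falls back to a single worker when not launched by torchrun
    steps = steps_per_epoch(num_samples=16_000, per_device_batch_size=16, world_size=2)
    print(f"{steps} steps per epoch, {steps * 3} steps for 3 epochs")
```

With 16,000 training samples, a per-device batch size of 16, and two workers, this gives 500 steps per epoch and 1,500 steps for three epochs.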

```
***** train metrics *****
  epoch                    = 3.0
  train_runtime            = 0:08:30
  train_samples            = 16000
  train_samples_per_second = 96.337
***** eval metrics *****
  eval_f1                  = 0.914
  eval_runtime             = 0:00:08
```

Last but not least, terminate the EC2 instance to avoid unnecessary charges. Looking at the price-performance, our training cost only about 20 cents ($1.34/h * 0.15 h = $0.20).
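The cost figure can be reproduced with a few lines of Python. This is a minimal sketch with a hypothetical helper name; it uses the on-demand price of the trn1.2xlarge and the reported train_runtime, and it ignores instance startup, idle time, and storage.

```python
def training_cost(price_per_hour: float, runtime_seconds: float) -> float:
    """On-demand cost in USD for a run lasting `runtime_seconds`."""
    return price_per_hour * runtime_seconds / 3600


# Hourly price of the trn1.2xlarge and the train_runtime of 0:08:30.
runtime_seconds = 8 * 60 + 30
print(f"train_runtime only: ${training_cost(1.34, runtime_seconds):.2f}")

# Rounding the run up to 9 minutes (0.15 h) reproduces the figure quoted in the text.
print(f"9 minutes: ${training_cost(1.34, 9 * 60):.2f}")
```

The same function can be used to compare instance types by plugging in a different hourly price and the runtime measured on that instance.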

Conclusion

In this tutorial, we learned how to train a BERT model for text classification on the emotion dataset using AWS Trainium. Hugging Face Transformers and Optimum Neuron allow us to easily convert existing GPU training scripts into Neuron-compatible ones.

The Trainium instance delivers impressive performance for deep learning training workloads, especially natural language processing, computer vision, and recommender models.

Moving your training to Trainium instances can help your data science teams iterate on models faster.

Thanks for reading! If you have any questions, feel free to contact me on Twitter or LinkedIn.