Yes! We all know vSphere, but do you really? Do you know where virtualization meets artificial intelligence, and where scalability meets efficiency? Let’s dive deep into the world of AI and ML with vSphere and GPUs, plus VMware’s new partnerships with Hugging Face and Ray.

Imagine harnessing vSphere’s virtualization expertise together with cutting-edge GPU cards. Add to that a seamlessly integrated coding assistant powered by Hugging Face’s AI, plus the dynamic scaling abilities of Ray. Best of all, you can get hands-on and test these absolutely amazing products and integrations with VMware Hands-on Labs! All the labs described here focus on vSphere for AI/ML work, so let’s dive in!

Accelerate AI/ML in vSphere Using GPUs

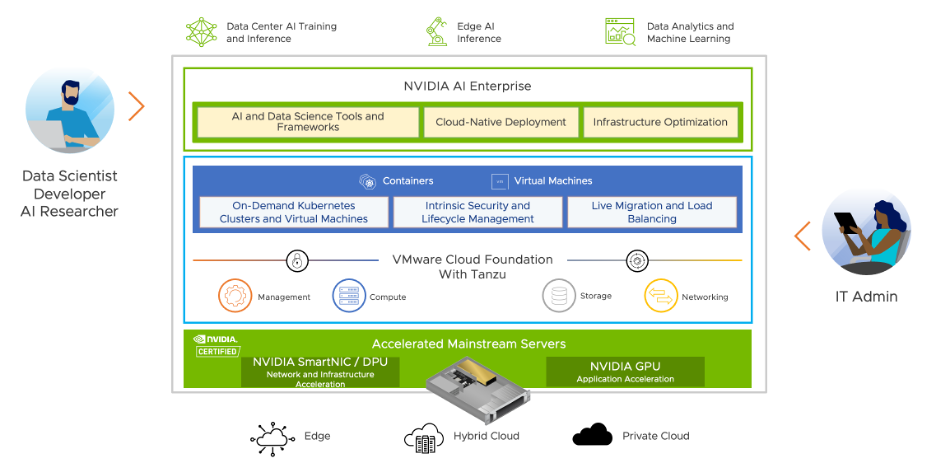

Let’s start with the core infrastructure: vSphere with GPUs. A GPU inside a vSphere virtual machine can deliver near bare-metal performance, so you gain GPU acceleration while keeping the resource-management and storage benefits of virtualization and the scalability of VMs.

This lab is particularly useful for data scientists, ML practitioners, and anyone else who wants to deliver virtual machines to end users with GPU acceleration already in place.

In this lab, you will learn how to accelerate machine learning workloads on vSphere using GPUs. The acceleration comes from combining the management benefits of VMware vSphere with the power of NVIDIA GPUs.

| Lab Modules |
|---|
| Module 1 – Introduction to AI/ML in vSphere Using GPUs |
| Module 2 – Run Machine Learning Workloads Using NVIDIA vGPU |
| Module 3 – Using GPUs in Pass-through Mode |
| Module 4 – Configure a container using NVIDIA vGPU in vSphere with Tanzu |
| Module 5 – Choosing the Right Profile for your Workload (vGPU vs MIG vGPU) |
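Once a vGPU profile or a pass-through GPU is attached to a VM, a quick sanity check is to ask the guest which GPUs it can see. The sketch below assumes the NVIDIA driver (and therefore `nvidia-smi`) is installed in the guest OS; the helper names `parse_gpu_list` and `visible_gpus` are hypothetical and not part of the lab. It uses the real `nvidia-smi --query-gpu` CSV output mode and turns it into `(name, memory in MiB)` pairs.

```python
import shutil
import subprocess
from typing import List, Tuple

# nvidia-smi query: one CSV line per GPU, "name, total memory", no header, no units.
QUERY = ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"]


def parse_gpu_list(csv_text: str) -> List[Tuple[str, int]]:
    """Hypothetical helper: turn "name, memory_MiB" lines into (name, MiB) tuples."""
    gpus = []
    for line in csv_text.strip().splitlines():
        # Split on the last comma so GPU names containing commas stay intact.
        name, mem = (field.strip() for field in line.rsplit(",", 1))
        gpus.append((name, int(mem)))
    return gpus


def visible_gpus() -> List[Tuple[str, int]]:
    """Hypothetical helper: list GPUs the guest sees; [] if nvidia-smi is not installed.

    Raises subprocess.CalledProcessError if nvidia-smi exists but fails
    (for example, when the driver is not loaded).
    """
    if shutil.which("nvidia-smi") is None:
        return []
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_gpu_list(out)


if __name__ == "__main__":
    for name, mem_mib in visible_gpus():
        print(f"{name}: {mem_mib} MiB")
```

An empty list inside a VM that should have a GPU usually means the device or driver setup from the lab modules still needs attention.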

Dive into the lab: https://userui.learningplatform.vmware.com/HOL/catalogs/lab/14433

Redefining Coding Dynamics: Hugging Face’s Plugin for Visual Studio IDE

Let’s now dive deeper into one powerful AI/ML use case that demonstrates how Generative AI can help with the coding/application development process – and how that can be incorporated into a developer tool like an Integrated Development Environment (IDE).

Imagine a tool tailored specifically to your company’s coding style, offering real-time code suggestions in a matter of seconds. Introducing the Hugging Face plugin for Visual Studio IDE. The plug-in sends requests to a GPU-equipped server, which generates the code completions. It uses machine learning models to predict the next line of code, giving developers a new way to accelerate and streamline their coding.

Many data scientists are, in fact, developers in Python or other languages, so the tools on their desktop for daily use are an IDE or a Jupyter Notebook. This lab shows how their work can be made easier and more effective.
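The client side of that exchange can be sketched as follows: the plug-in sends the code before the cursor to the completion server and keeps the first line of what comes back. The post does not describe the lab server’s API, so this assumes a Hugging Face Text Generation Inference style `/generate` endpoint (`inputs` plus `parameters`, answered with `generated_text`); the URL and the helper names are hypothetical. The sketch only builds the request and parses a hand-written response, so it runs without a server.

```python
import json
import urllib.request

# Hypothetical endpoint: the lab's actual completion server URL is not given in the post.
COMPLETION_URL = "http://gpu-server.example:8080/generate"


def build_completion_request(code_before_cursor: str, max_new_tokens: int = 60) -> urllib.request.Request:
    """Build a POST request asking the model to continue the code before the cursor."""
    payload = {
        "inputs": code_before_cursor,
        "parameters": {
            "max_new_tokens": max_new_tokens,
            # Assumed TGI-style stop sequence: end generation at a newline.
            "stop": ["\n"],
        },
    }
    return urllib.request.Request(
        COMPLETION_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_next_line(response_body: bytes) -> str:
    """Read generated_text from the JSON response and keep only its first line."""
    generated = json.loads(response_body)["generated_text"]
    return generated.split("\n", 1)[0]


if __name__ == "__main__":
    req = build_completion_request("def add(a, b):\n    ")
    print(req.get_method(), req.full_url)
    # Hand-written example response, used only to demonstrate the parsing step.
    fake_response = b'{"generated_text": "return a + b\\nprint(add(1, 2))"}'
    print(extract_next_line(fake_response))
```

Sending the request would be a single `urllib.request.urlopen(req)` call against a real server; trimming to the first line on the client keeps the suggestion short even if the server ignores the stop sequence.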

| Lab Modules |
|---|
| Module 1 – Introduction to AI Autocomplete with Visual Studio Plugin |

Take the lab: https://userui.learningplatform.vmware.com/HOL/catalogs/lab/14355

Scale AI Workloads with Ray on VMware

Now, if you already have a coding assistant and your virtualized environment scores 10 out of 10 in performance, let’s take it to the next level by distributing these AI/ML workloads across several servers.

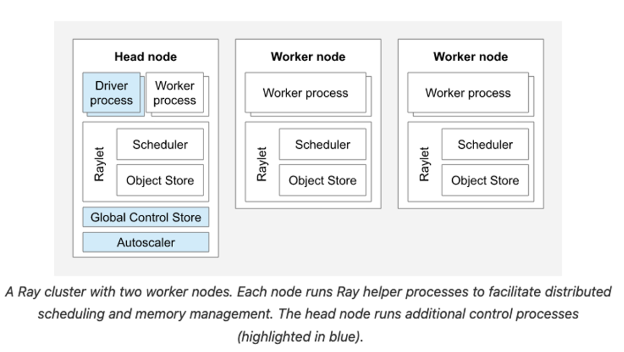

VMware has partnered with Anyscale, the creators of Ray, on an open-source plug-in for running Ray on vSphere virtual machines. Ray is an optimized scheduler for ML workloads that brings serverless-style scaling to training and inference. It can split an AI/ML model training job across multiple physical and virtual machines and control it from a central point called the “head node”. VMware has contributed to upstream Ray to make this possible on vSphere 8.

The head node manages the cluster and scales the number of worker nodes within it. These distributed worker nodes are responsible for training, fine-tuning, and serving models.
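To make the head/worker split concrete, here is a toy data-parallel training loop written with the Python standard library. It is an analogy, not Ray’s API: a thread pool stands in for worker nodes, the `train` loop plays the head node, and the model is a one-parameter linear regression (`y ≈ w * x`). In Ray itself, the worker function would typically be decorated with `@ray.remote`, invoked with `.remote(...)`, and its results collected with `ray.get`, with workers running as separate processes on separate VMs.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair


def partial_gradient(w: float, shard: List[Sample]) -> Tuple[float, int]:
    """Worker: summed gradient of squared error for y ~ w * x over one data shard."""
    grad = sum(2 * (w * x - y) * x for x, y in shard)
    return grad, len(shard)


def split(data: List[Sample], n: int) -> List[List[Sample]]:
    """Head: split the dataset into n roughly equal shards, one per worker."""
    return [data[i::n] for i in range(n)]


def train(data: List[Sample], num_workers: int = 4, lr: float = 0.1, steps: int = 100) -> float:
    """Head: send the current weight to all workers, average their gradients, update."""
    shards = split(data, num_workers)
    w = 0.0
    # The pool is created once and reused, like a long-lived set of worker nodes.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        for _ in range(steps):
            results = list(pool.map(partial_gradient, [w] * len(shards), shards))
            total_grad = sum(g for g, _ in results)
            total_n = sum(n for _, n in results)
            w -= lr * total_grad / total_n  # gradient of the mean squared error
    return w


if __name__ == "__main__":
    data = [(i / 10, 3 * i / 10) for i in range(1, 21)]  # points on y = 3x
    print(round(train(data), 4))
```

Because each worker returns both its gradient sum and its shard size, the head computes the same average gradient it would get on the full dataset, which is the property that lets data-parallel training scale out without changing the result.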

Take the lab and test the solution for yourself!

| Lab Modules |
|---|
| Module 1 – Provision a Ray Cluster on vSphere and Execute “Hello World” |
| Module 2 – Train an XGBoost model on Ray and Destroy Ray Cluster |

Test the solution now: https://userui.learningplatform.vmware.com/HOL/catalogs/lab/14435

For more information on our partnerships and our GenAI offerings, go to https://news.vmware.com/releases/vmware-explore-2023-private-ai-foundation

If you have a comment or request, contact us at discovery-request@vmware.com


The post Integrating AI & ML in vSphere appeared first on VMware Hands-on Labs Blog.