This was originally posted on the a16z Growth Newsletter on Substack. To receive analysis like this in your inbox early—plus additional media recommendations and insights from the Growth team—be sure to subscribe.

Last month, we explained why certain companies become modelbusters—or companies that grow far faster and for longer than anyone would’ve modeled.

Modelbusters arise when founders build two things: a breakthrough product that unlocks far bigger markets than anyone could forecast and a business model to seize that windfall. Though modelbusters can crop up idiosyncratically, the biggest and best ones flourish during platform shifts, when new tech primitives let founders redraw products and business models.

(If you missed last month’s breakdown of modelbusters, it covers how this played out for Roblox, Anduril, CrowdStrike, and Apple.)

This is exactly where we are with AI today, but the opportunity stands to be significantly bigger than anything we’ve seen to date. Though we’re early in the cycle, AI is already delivering 10x product experiences at a tenth of the cost, and we’re starting to see new business models emerge to capture this value. If you had used comps from the SaaS or mobile eras to estimate how big AI would be when, say, ChatGPT came out, your models would already have grossly underestimated AI’s growth.

From where we stand, it’s hard to overestimate how much better AI could get and how much cheaper it will be to serve—and how improvements along both of those axes fundamentally reshape the total opportunity and how founders can capture it. To help founders better understand the magnitude of the opportunity available, we’ll outline how we see it shaping up today.

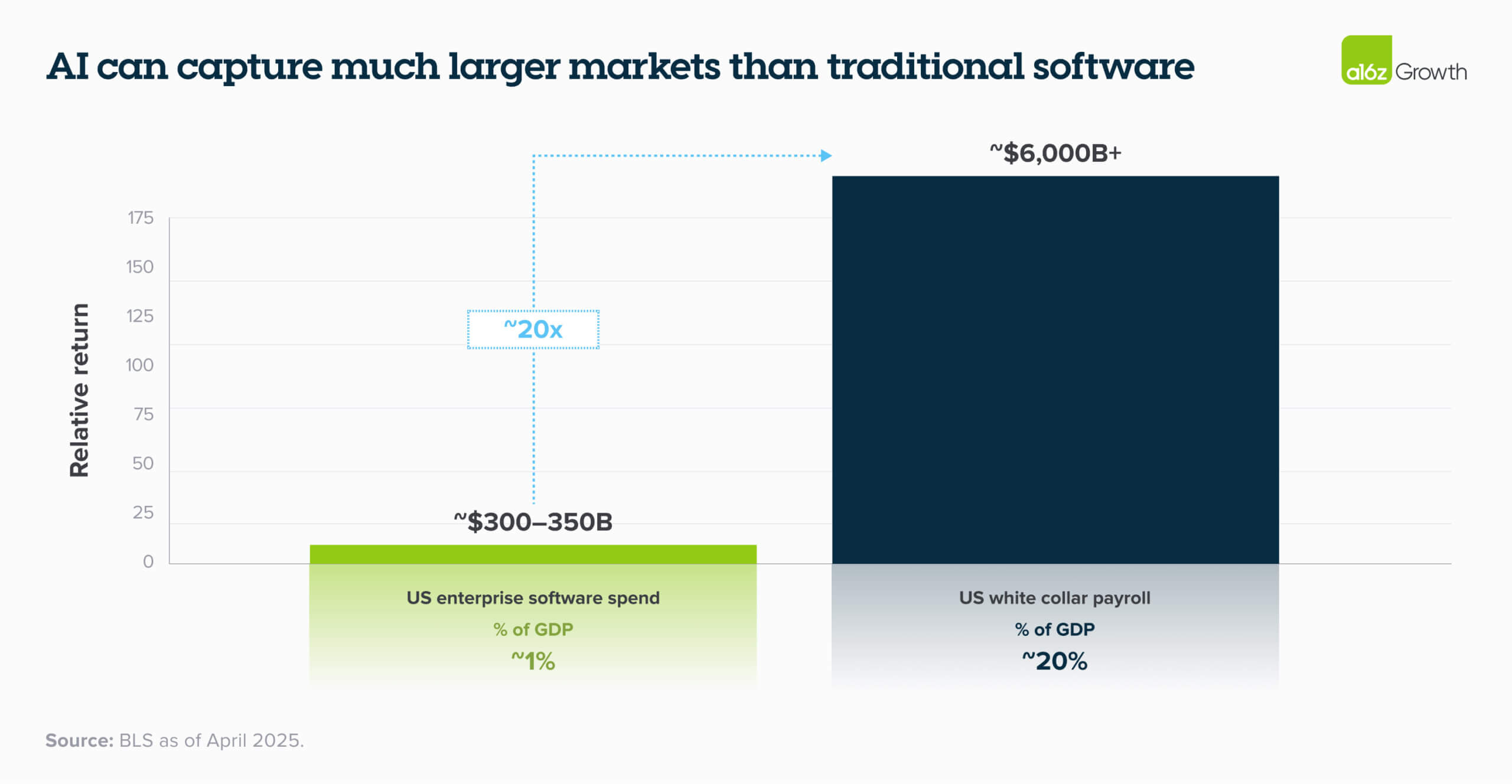

As our partner Alex Rampell has pointed out, by enabling computers instead of humans to query, reason over, and act on the data within databases, AI companies have the capabilities to attack the $6T white-collar services market, which is 20x larger than US enterprise software spend.

AI is also rapidly taking over the attention economy. Fast-improving gen AI text, audio, image, and video capabilities are siphoning user engagement away from other platforms, and AI is emerging as the first new scaled entrant into the consumer space since TikTok. ChatGPT users in the US are already spending ~20 minutes a day on the platform (up ~2x in a year), and the app has over 1B monthly active users.[1]

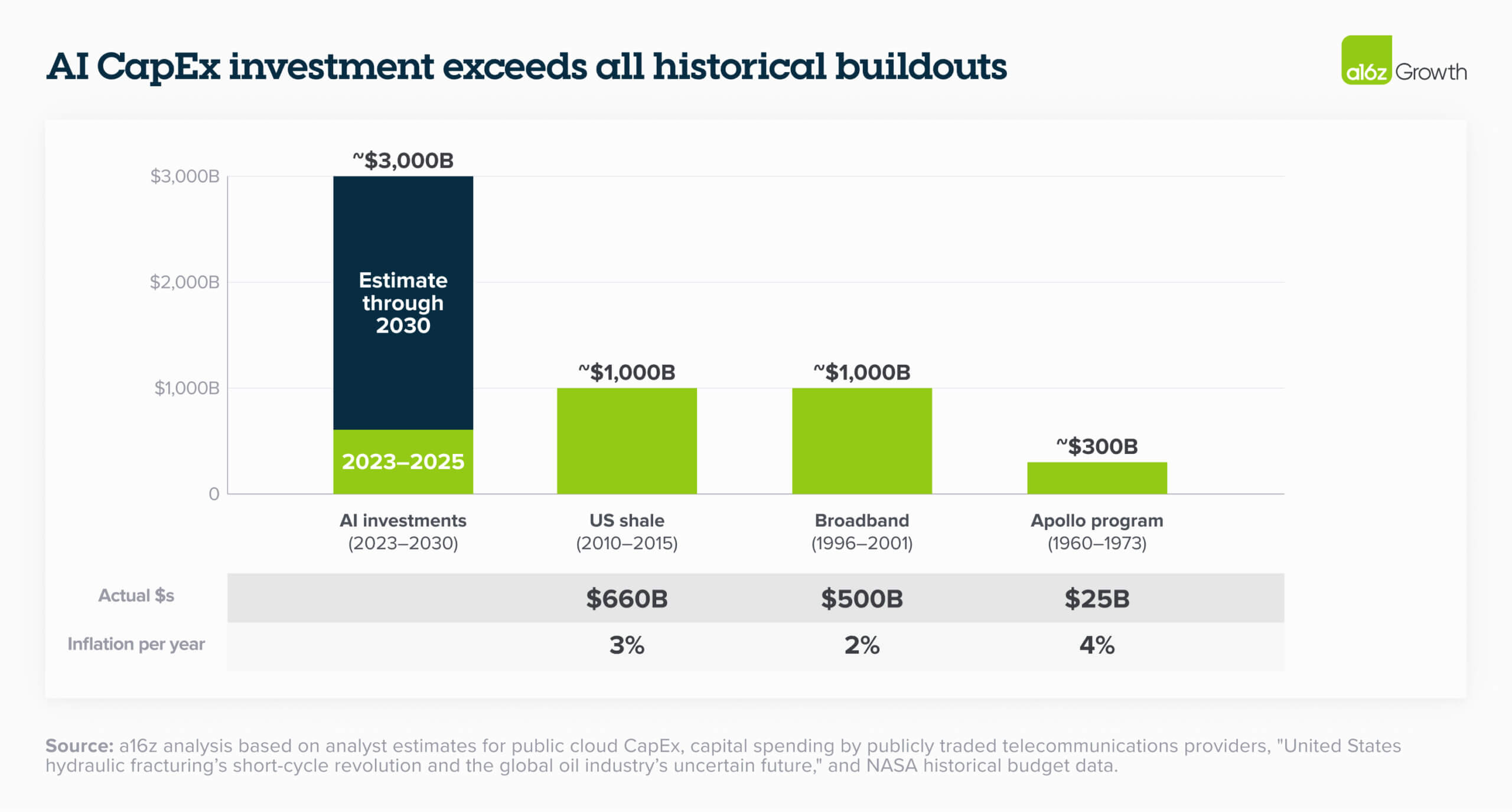

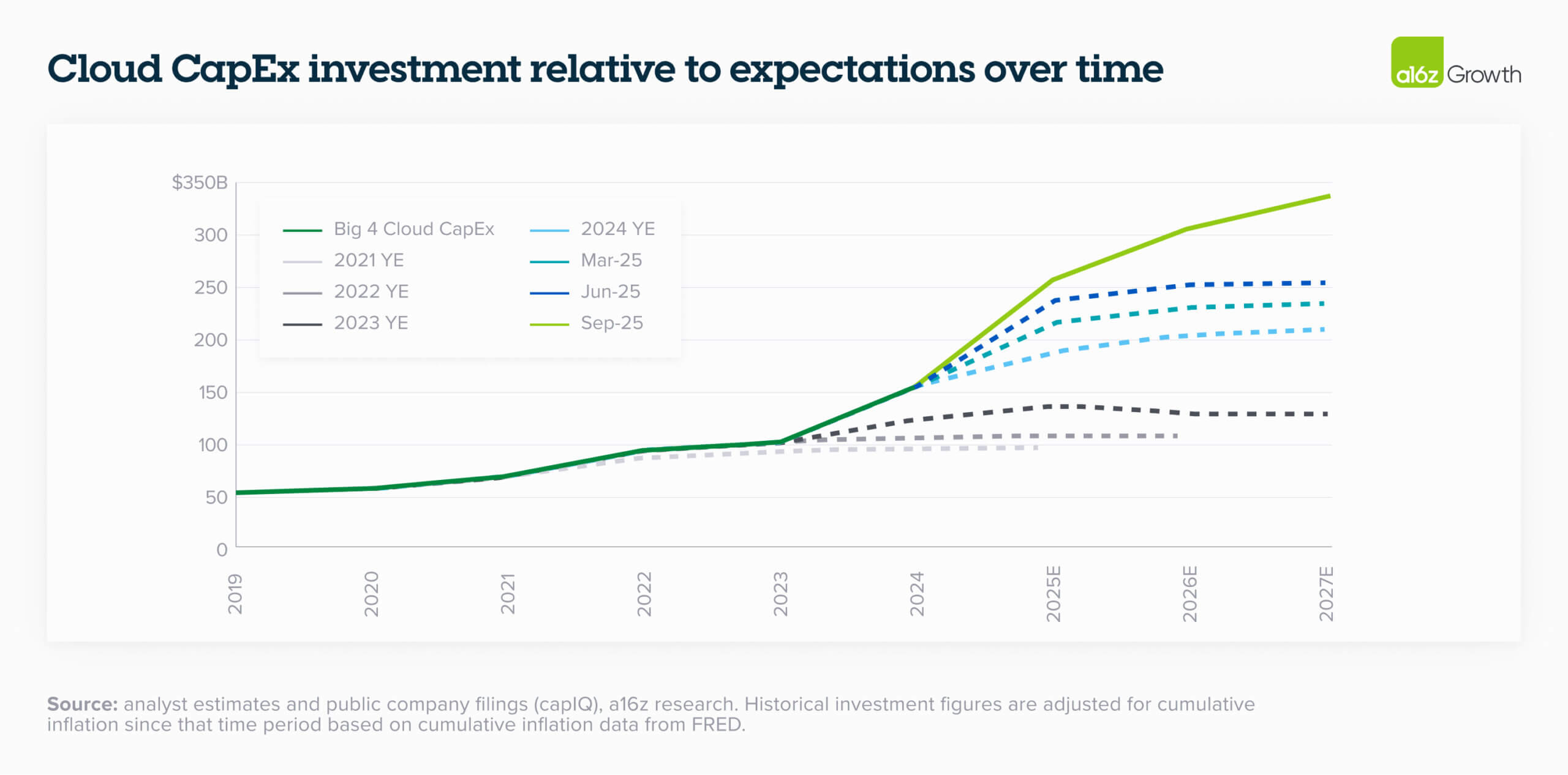

At the same time, we’re at the beginning of the largest CapEx investment in infrastructure in history. Last month, we mentioned that AI investment is already on pace to surpass $1T in a 5-year span, larger than the Apollo program, the broadband boom, and the entire US shale buildout. But that number keeps climbing every time we check it: cumulative investment in AI computing capacity is now expected to exceed $3T by 2030.

These investments in AI are shouldered by the biggest companies with some of the best balance sheets in the world: Amazon, Microsoft, Google, Oracle, and Meta. These companies are making this the biggest bet they’ve ever made, and they’re even taking on debt to make it happen. We believe the investment from these players makes the ecosystem anti-fragile: founders can build on top of AI infrastructure without fear of those technological primitives failing during supply shocks or regulatory or geopolitical shifts.

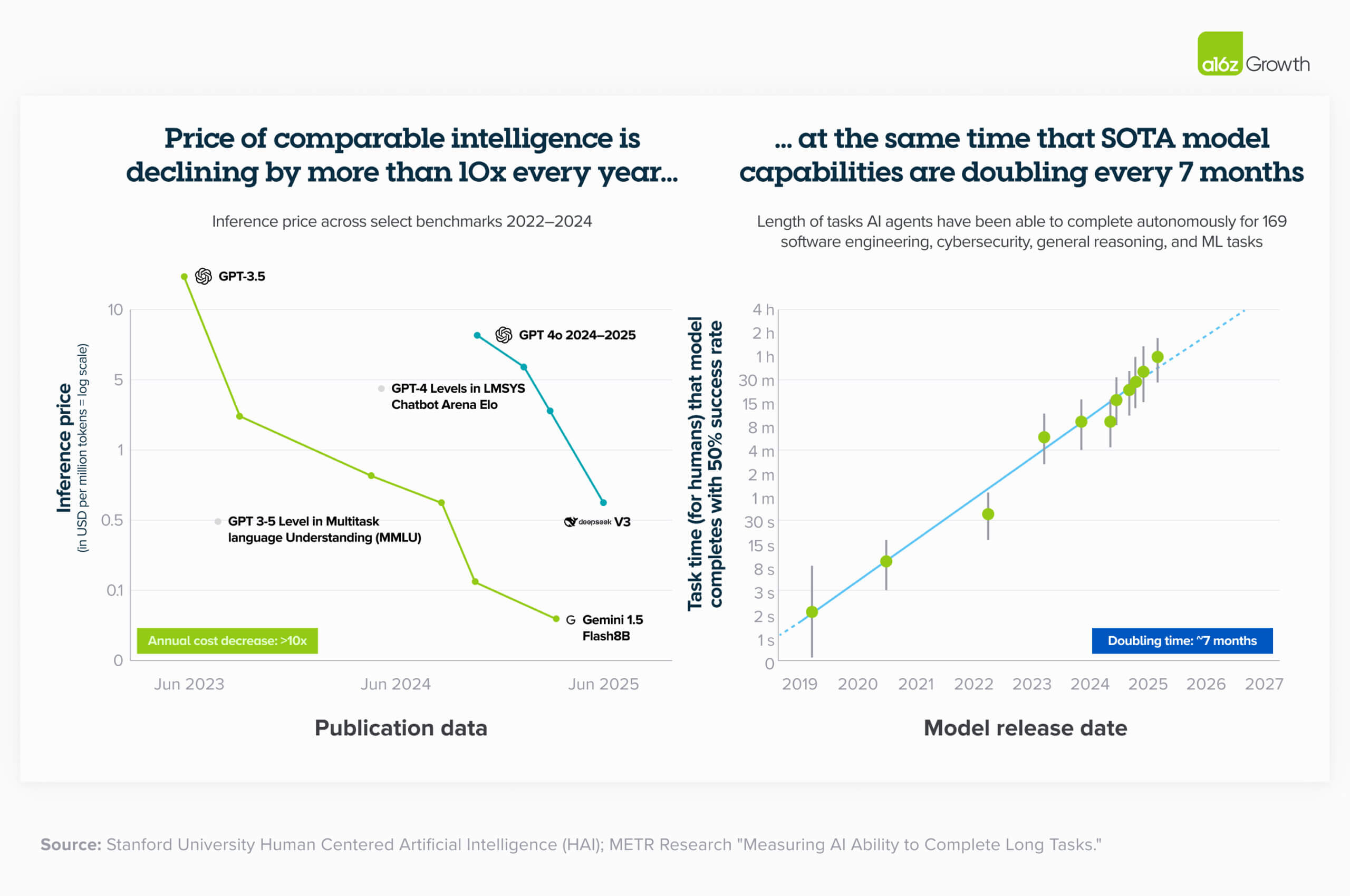

This CapEx investment should also drive demand over time: the more computing capacity comes online, the likelier it is that serving models will get much cheaper. This is already playing out. Over the past 3 years, the cost of intelligence has declined more than 10x every year, while model capability has doubled roughly every 7 months. A Stanford study showed that the cost of intelligence fell 99.7% over 2 years.
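These rates compound quickly, and a back-of-the-envelope calculation makes that easier to see. The Python sketch below is a minimal illustration that uses only the figures quoted above; the helper names are our own, and it assumes smooth exponential trends, which real prices and capability benchmarks only roughly follow.

```python
def total_decline(annual_factor: float, years: float) -> float:
    """Fraction of the original cost eliminated after `years` of the cost
    falling by `annual_factor`x per year (assumes a smooth exponential trend)."""
    return 1 - annual_factor ** -years


def implied_annual_factor(total_decline_fraction: float, years: float) -> float:
    """Annual cost-reduction multiple implied by a total decline over `years`."""
    remaining = 1 - total_decline_fraction
    return (1 / remaining) ** (1 / years)


def capability_growth(doubling_months: float, months: float) -> float:
    """Capability multiple after `months`, if capability doubles every `doubling_months`."""
    return 2 ** (months / doubling_months)


# 10x cheaper per year, compounded over 2 years -> 100x cheaper, i.e. a 99% decline
print(f"{total_decline(10, 2):.1%}")               # 99.0%
# The Stanford figure (99.7% over 2 years) implies roughly 18x cheaper per year
print(f"{implied_annual_factor(0.997, 2):.1f}x")   # 18.3x
# Doubling every 7 months compounds to about 3.3x per year
print(f"{capability_growth(7, 12):.1f}x")          # 3.3x
```

Under those assumptions, the 99.7% figure is consistent with, and somewhat steeper than, a steady 10x-per-year decline.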

As costs continue to decline and capabilities continue to improve, founders are in a much better position to capture more of the attention economy for consumers and the $6T services market for B2B. As Marc Andreessen has said, when your friend asks to borrow your phone charger, you don’t charge him 10 cents. Our hope and expectation is that AI will be like electricity: as intelligence becomes too cheap to meter and models become more capable, the range of what founders can build will expand exponentially, and consumer demand for AI will likely surge.

During the last platform shift, the biggest winners were the buyers of technology: think of the customers of Apple, Tesla, Google, and Visa. Market competition drove prices down, so to reach the largest part of their TAM, these companies priced their products below what higher-end customers alone would have been willing to pay. As a result, the value customers got out of those products far outpaced their price, which drove very quick adoption. We’re all winners with iPhones, Google Search, Amazon Prime, and Facebook, and speaking for ourselves, we’d definitely pay way more for each if we had to.

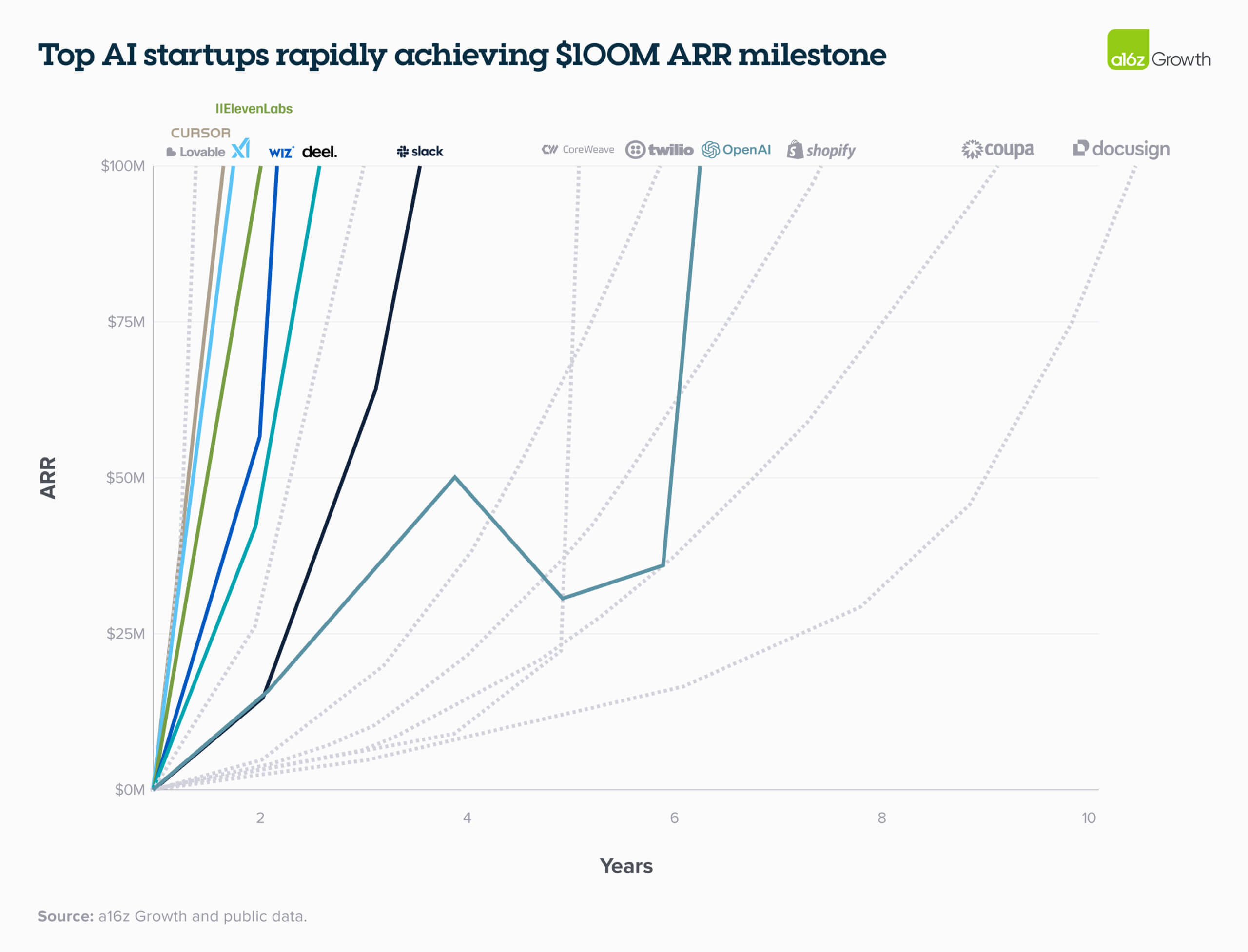

We think the same is already happening in AI as it introduces new ways of working, new UXs, and new ways of accessing data. AI has already killed the “triple, triple, double, double, double” (T2D3) growth benchmark, doing in ~2 years what it took SaaS to do in 10. Look at Cursor: it went from $2M to $300M, not $2M to $6M (the tripling T2D3 would call for in year one).
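For readers less familiar with the benchmark, here is a minimal Python sketch of what T2D3 implies. The $2M starting point comes from the Cursor example; the function name is our own, and the sketch assumes each year’s multiple applies cleanly to the prior year’s revenue.

```python
# "Triple, triple, double, double, double" (T2D3): year-over-year growth multiples
T2D3 = [3, 3, 2, 2, 2]


def t2d3_path(start_revenue_m: float) -> list[float]:
    """Revenue ($M) at the end of each year for a company that follows T2D3 exactly."""
    path = []
    revenue = start_revenue_m
    for multiple in T2D3:
        revenue *= multiple
        path.append(revenue)
    return path


start = 2.0  # $2M starting revenue, as in the Cursor example
path = t2d3_path(start)
print(path)               # [6.0, 18.0, 36.0, 72.0, 144.0]
print(path[-1] / start)   # 72.0  -> T2D3 compounds to 72x over five years
print(300.0 / start)      # 150.0 -> the $2M-to-$300M jump cited for Cursor
```

Even a textbook five-year T2D3 run from $2M ends at $144M, less than half of the $300M figure cited for Cursor.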

To put this into perspective, the net new revenue OpenAI and Anthropic added in 2025 (based on press coverage) is almost half of the net new revenue added by all public software companies, excluding the Mag 7. And that doesn’t even include the portion of Anthropic’s revenue that goes to AWS or the portion of OpenAI’s revenue that goes to Azure, which hosts its models.

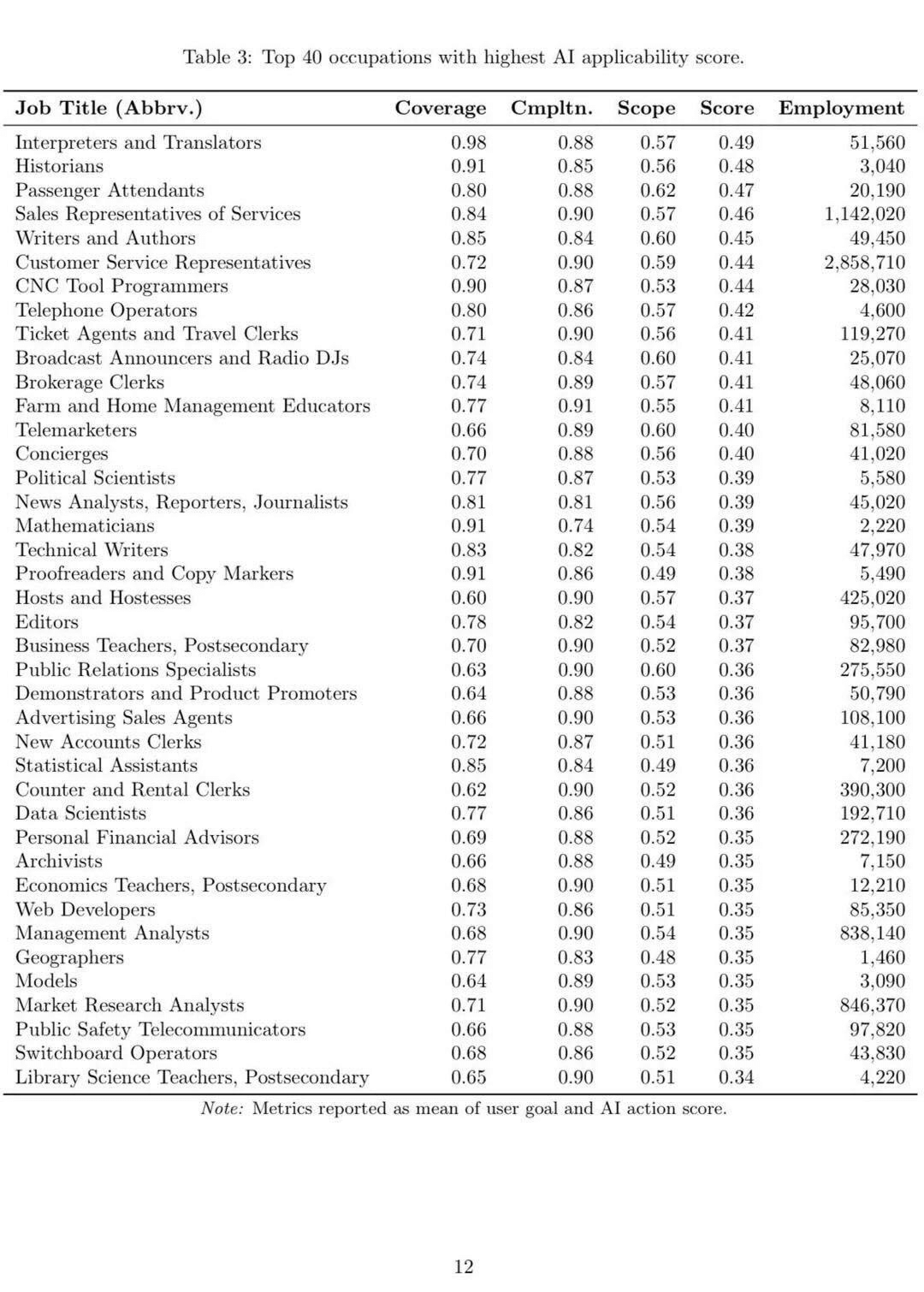

Though AI companies have built better guardrails around accuracy and hallucinations, there’s still a big gap between model horsepower and use cases, which means today’s latent model capabilities leave a ton of room to build. So when people say we’re in the early innings of AI, what they’re really saying is that we’re in inning 1 of usefulness. We’re seeing early traction across horizontal markets, departments, and vertical end markets, but it takes time for founders to build great products on the backs of platform shifts. Look at the Microsoft study that made the rounds on X a couple of weeks ago, which identified the 40 jobs most likely to be disrupted by AI: so far, AI companies have tackled only a handful of them.

The unprecedented ramp of AI-native startups indicates to us that these companies are capturing budget dollars from existing technology spend and labor budgets while delivering a huge surplus to the customer. Cursor customers don’t spend with Cursor just to replace software tools they already use, but to transform the productivity and capability of their engineering teams. And when Decagon customers shift their existing services spend to Decagon’s AI-powered platform, they see costs drop by ~60% while satisfaction scores double.[2]

AI’s early success will likely compound, too. During the SaaS/mobile/cloud era, founders needed to carefully pick the use cases or markets they wanted to build for, then build their entire org around that particular niche. But with the success of coding copilots and other AI productivity and orchestration tools, founders can now spin up companies that attack this new services budget with a fraction of the headcount, and can ship second, third, and fourth products much sooner than they could have 10 years ago. So it’s very likely we’ll see much more growth, much sooner, from this crop of modelbusters.

Free-to-play gaming is a good example of a business model that captured this kind of windfall in the SaaS/mobile/cloud era. By removing the upfront $60 purchase, publishers opened the door to a much bigger audience, then captured more value through voluntary in-game spending that scaled with each player’s engagement. This approach achieved near-perfect price discrimination (casual users pay nothing, while superfans may spend hundreds), so revenue rose in direct proportion to perceived value. This business model broadened the funnel, deepened lifetime value, and turned static products into high-margin, recurring-revenue services.

We’ll probably see something similar with AI. In customer support, we’re already seeing early proof points for outcome-based pricing, where companies charge for outputs instead of by seat or by usage. This pricing model has worked best where products take on a clearly defined, discrete task, like resolving a support ticket. As AI products become more capable of delivering agentic outcomes, however, outcome-based pricing could help startups capture the gap between the cost of a human and the cost of completing the task with AI, and attack incumbents that are reluctant to disrupt their own pricing. If a CRM company were still selling seats and a new AI product came in offering better service for even 1% of the revenue it created, that incumbent would find it pretty hard to compete.

Beyond that, the field is wide open for new monetization strategies. There’s probably room for ROI-sharing pricing models, where a company offering a 10x product could capture 10% of the savings it creates. Any attempt to map the exact contours of these new business models would be hand-wavy, so suffice it to say that as product capabilities evolve, the way companies monetize them will, too.

</div>