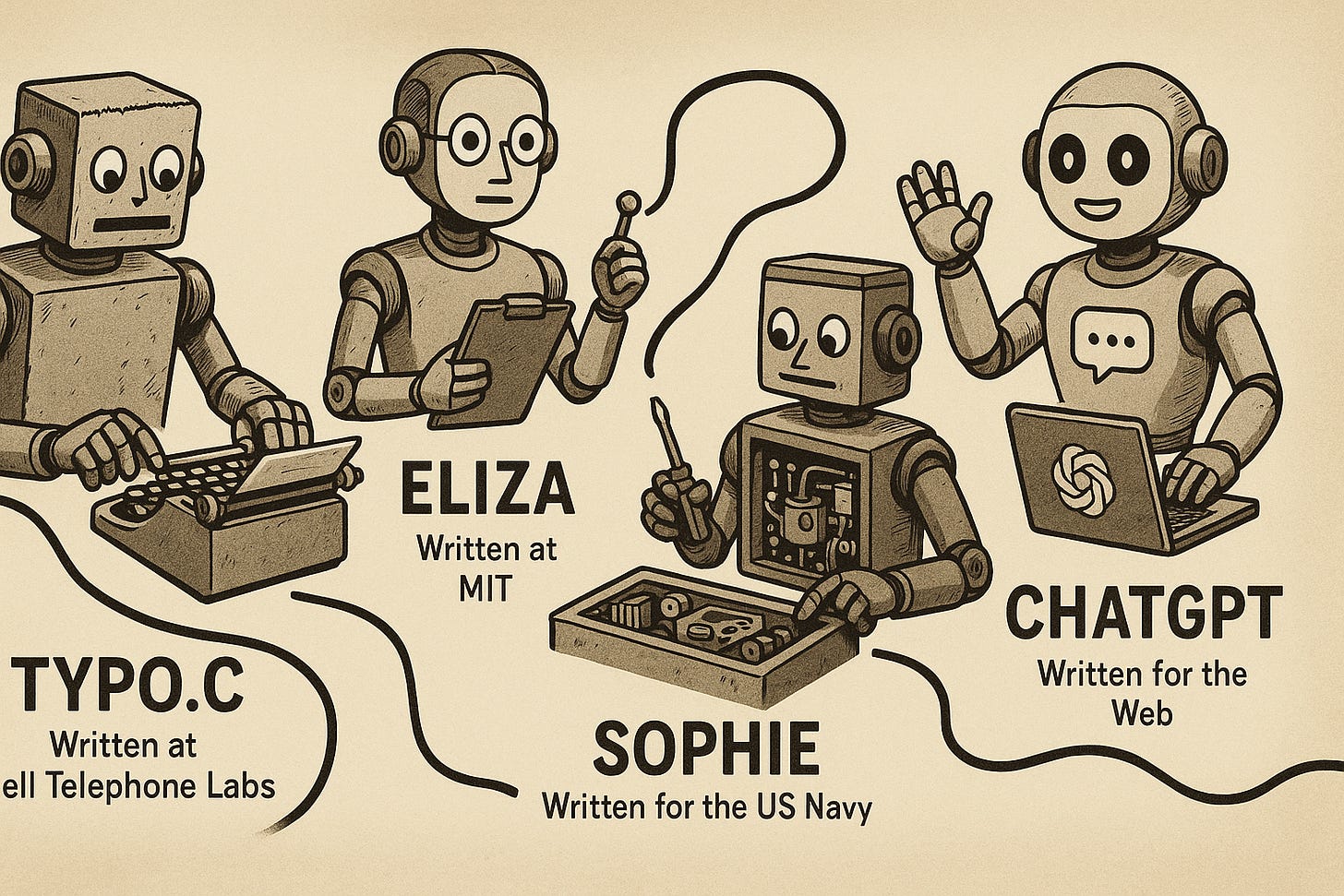

This is my second post on the history of language models. The first one discussed typo.c, created by Prof. Robert Morris, Sr.

My first encounter with a Language Model

I was a teaching assistant in college when I was given a serious clue about my future career: I was asked to plan and conduct an AI lab session analyzing SOPHIE, the most advanced language model of its day.

It was created by Bobrow, Brown, Burton, and de Kleer at Bolt, Beranek, and Newman, under a contract with the US Navy.

The idea was to use a natural language front end to converse with Navy recruits learning how to troubleshoot electronic equipment. Students were given a schematic and access to a simulated power supply implemented in SPICE, the most popular electronics simulator of the day.

It could handle user input sentences such as “Insert a hard fault”, “What is the voltage across resistor R4”, and “I believe the problem is a base to emitter short in transistor Q5”.

It would reply with measurements it retrieved from the SPICE model it was running in an inferior thread, for example: “The voltage at P12 is +0.4 V.”

If you voiced a hypothesis, it would say something like:

“You claim the failure is a base-emitter short in Q5. Let’s see if that was reasonable…

You measured the voltage at point P12 to be 0.4 volts. If you had been correct, that voltage would have been 14.1 volts.

[… and it proceeds to completely destroy my methodology … :-D ]

The actual error is…”
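The reasoning behind that critique, comparing what the student measured with what the circuit would show if the student's hypothesis were true, can be sketched as below. This is a minimal Python illustration under stated assumptions, not SOPHIE's Interlisp code: the `PREDICTED` table is a hypothetical stand-in for re-simulating the circuit in SPICE with the fault inserted, and `check_hypothesis`, `explain`, and the tolerance value are made up for the example.

```python
# Hypothetical stand-in for re-running SPICE with the hypothesized fault inserted:
# maps (fault, test point) -> voltage the circuit would show if that fault were real.
# The single entry reuses the 14.1 V figure from the dialog above; it is illustrative only.
PREDICTED = {
    ("base-emitter short in Q5", "P12"): 14.1,
}


def check_hypothesis(fault, measurements, tolerance=0.5):
    """Return (point, measured, predicted) for every measurement the fault cannot explain.

    `tolerance` (in volts) is an assumed threshold, not a value taken from SOPHIE.
    """
    contradictions = []
    for point, measured in measurements.items():
        predicted = PREDICTED.get((fault, point))
        if predicted is None:
            continue  # no prediction for this point, so it cannot refute the hypothesis
        if abs(predicted - measured) > tolerance:
            contradictions.append((point, measured, predicted))
    return contradictions


def explain(fault, measurements):
    """Build a SOPHIE-style critique from the contradicting measurements."""
    lines = [f"You claim the failure is a {fault}. Let's see if that was reasonable..."]
    for point, measured, predicted in check_hypothesis(fault, measurements):
        lines.append(
            f"You measured the voltage at point {point} to be {measured} volts. "
            f"If you had been correct, that voltage would have been {predicted} volts."
        )
    return "\n".join(lines)


print(explain("base-emitter short in Q5", {"P12": 0.4}))
```

Note that everything the program "knows" about the circuit and the fault lives in hand-built structures; that is exactly the Reductionist style discussed next.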

SOPHIE was still 20th Century AI

Before 2001, with some exceptions noted in my other posts, all language models were True Models. We used word lists and grammars, templates for generation, etc. The Language Model was created by human programmers such as myself. We would attempt to simplify the problem of understanding language to rules we could program into our systems. If we had known how hard that problem really was, we might have given up earlier.

We need to remember that the computers available at the time were small, far too small for anyone to even imagine Holistic (Model-Free) approaches, which are what got us to 21st Century LLMs.

I claim, speaking as an Epistemologist, that current Large Language Models are in fact not Models. They are collections of prior experience stored as patterns and patterns of patterns. They are therefore Holistic, not Reductionist or Scientific. If this is news to you, welcome; please read more of my posts.

SOPHIE was true to the 20th Century Reductionist, Model-based paradigm. In fact, it did a pretty good job of it. Systems like ELIZA had been around for a few years, but they used trivial language models in which most of the conversation was handcrafted, generic prompting to keep the user talking, a strategy whose traces we can still see in current LLMs.
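For contrast, the kind of handcrafted ELIZA-style pattern matching described above can be sketched in a few lines. The rules below are made-up illustrations in the spirit of ELIZA, not Weizenbaum's original script, and `respond` and `reflect` are hypothetical names. Every bit of "language competence" is a human-written regular expression and template, and anything unmatched falls through to a generic prompt whose only job is to keep the user talking.

```python
import re

# Hypothetical ELIZA-style rules: (pattern, response template). Written by hand,
# in the spirit of ELIZA but not taken from Weizenbaum's original script.
RULES = [
    (re.compile(r"\bI am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bI feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bmy (\w+)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Generic prompts used when no rule matches: they only keep the conversation going.
FALLBACKS = ["Please go on.", "I see.", "Can you elaborate on that?"]


def reflect(fragment):
    """Swap first-person words for second-person ones so echoed text reads naturally."""
    swaps = {"i": "you", "my": "your", "me": "you", "am": "are"}
    return " ".join(swaps.get(w.lower(), w) for w in fragment.split())


def respond(sentence, turn=0):
    """Return the first matching rule's response, else a rotating generic prompt."""
    for pattern, template in RULES:
        match = pattern.search(sentence)
        if match:
            # Strip trailing punctuation, reflect pronouns, and fill the template.
            return template.format(*(reflect(g.rstrip(".!?")) for g in match.groups()))
    return FALLBACKS[turn % len(FALLBACKS)]
```

For example, `respond("I am worried about my exam.")` returns "How long have you been worried about your exam?", while `respond("The weather is nice")` matches nothing and returns "Please go on."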

In SOPHIE, the vehicle was an Interlisp program for debugging a power supply, but the researchers' main interest was in raising the conversation, and the number of sentences it recognized and parsed correctly, to the next level. Note that the language domain they chose to handle was extremely narrow: how to troubleshoot a power supply. These were not Large Language Models. Without Machine Learning, that was all the language complexity we could handle at the time, because the entire language competence had to be designed and implemented by humans, in considerable detail.

ATNC

They developed ATNC, the Augmented Transition Network Compiler, which could take a high-level (BNF-like) description of a dialog state machine in which the current “meaning” is a node in the state diagram, and you move to other states when you receive new input, such as an answer to a question the system asked. At the time, this was advanced compiler technology, and they applied it to natural language.
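The core idea of meaning as a position in a network of states, with transitions triggered by input words, can be sketched as a tiny hand-written augmented transition network. This is an illustrative Python interpreter, not ATNC itself (which compiled such networks) and not SOPHIE's actual grammar; the state names, word lists, and the `parse` function are all hypothetical. The "augmented" part is the registers that arcs fill in as they consume words.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Arc:
    """One transition in the network.

    If `test` accepts the current word, `action` may record it in a register and
    control moves to `target`. A `test` of None marks a JUMP arc, which moves to
    `target` without consuming any input.
    """
    target: str
    test: Optional[Callable[[str], bool]] = None
    action: Optional[Callable[[Dict[str, str], str], None]] = None


def word(*choices: str) -> Callable[[str], bool]:
    """Test that accepts any one of the given (lower-case) words."""
    return lambda w: w in choices


def store(name: str, transform=lambda w: w):
    """Action that saves the (optionally transformed) current word in register `name`."""
    def act(registers: Dict[str, str], w: str) -> None:
        registers[name] = transform(w)
    return act


# Hypothetical network for questions like "What is the voltage across resistor R4".
NETWORK: Dict[str, List[Arc]] = {
    "S0": [Arc("S1", word("what"))],
    "S1": [Arc("S2", word("is"))],
    "S2": [Arc("S3", word("the"))],
    "S3": [Arc("S4", word("voltage", "current"), store("quantity"))],
    "S4": [Arc("S5", word("across", "at", "through"))],
    # The component noun is optional: either consume it, or JUMP straight past it.
    "S5": [Arc("S6", word("resistor", "transistor", "point")), Arc("S6")],
    "S6": [Arc("END", lambda w: re.fullmatch(r"[a-z]+\d+", w) is not None,
               store("target", str.upper))],
}


def _walk(words, i, state, registers):
    """Depth-first search over arcs, backtracking when a branch fails."""
    if state == "END":
        # Succeed only if the whole sentence has been consumed.
        return registers if i == len(words) else None
    for arc in NETWORK[state]:
        regs = dict(registers)  # private copy, so a failed branch leaves no trace
        if arc.test is None:
            result = _walk(words, i, arc.target, regs)
        elif i < len(words) and arc.test(words[i]):
            if arc.action:
                arc.action(regs, words[i])
            result = _walk(words, i + 1, arc.target, regs)
        else:
            continue
        if result is not None:
            return result
    return None


def parse(sentence: str) -> Optional[Dict[str, str]]:
    """Return the filled registers (the sentence's 'meaning'), or None if not recognized."""
    words = sentence.lower().rstrip("?.").split()
    return _walk(words, 0, "S0", {})
```

Here `parse("What is the voltage across resistor R4")` returns `{'quantity': 'voltage', 'target': 'R4'}`, and `parse("Insert a hard fault")` returns `None` because no arc out of `S0` accepts "insert". Real ATNs also allowed conditions on registers and PUSH arcs into sub-networks, which this sketch omits.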

Decades later, I made that a career theme. In several engagements, I wrote natural language understanding systems for tasks such as parsing corporate quarterly reports on the web. For these I used ANTLR, a popular compiler-compiler that generates Java code, which I had subverted to serve my natural language applications rather than compiling programming languages. This was an amazingly powerful recipe. I started a company that sold this technology wrapped in a federated cloud search webapp.

But Is It Holistic?

How can we tell if a system, such as an LLM, is Reductionist or Holistic?

Holistic Systems

They learn, which means they get better with practice.

They guess. They cannot guarantee correct results.

They are robust within their problem domain.

The domain knowledge itself is not part of the programming effort.

Reductionist Systems

They get it right on the first try.

They are brittle in the face of change or in other domains.

They require external understanding of the problem domain.

Changing anything requires reprogramming.

Example: An LLM can learn English or Chinese without reprogramming, but if we wanted a French version of ELIZA or SOPHIE (both of which used Reductionist NLP), we programmers would have to make extensive changes to their programs, dictionaries, and grammars.

And unless you understand your problem domain (such as rocketry) well, and understand exactly what F = ma (a Model in Physics) means, you would not know how to use that Model. Reductionism requires external Understanding of the problem domain, because the Epistemic Reduction is performed by humans.

LLMs are not Models because they contain nothing that we can extract in a meaningful way, not even grammars. They are opaque to Reductionist inspection, because Models would be all we could detect with such tools, and I claim LLMs can get by without any Models whatsoever. Their difficulty with Reasoning is a clue; it follows directly from their lack of Models to reason about.

Getting it right on the first try is discussed in the castle siege example at https://vimeo.com/showcase/5329344/video/5012093