Published on September 16, 2025 5:06 PM GMT

Back in May, we announced that Eliezer Yudkowsky and Nate Soares’s new book If Anyone Builds It, Everyone Dies was coming out in September. At long last, the book is here![1]

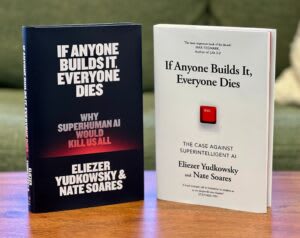

US and UK books, respectively. IfAnyoneBuildsIt.com

Read on for info about reading groups, ways to help, and updates on coverage the book has received so far.

Discussion Questions & Reading Group Support

We want people to read and engage with the contents of the book. To that end, we’ve published a list of discussion questions. Find it here: Discussion Questions for Reading Groups

We’re also interested in offering support to reading groups, including potentially providing copies of the book and helping coordinate facilitation. If you’re interested, fill out this Airtable form.

How to Help

Now that the book is out in the world, there are lots of ways you can help it succeed.

For starters, read the book! And encourage others to buy and read it. Pre-orders and first-week sales are counted together when determining whether a book is a bestseller, so this week matters a lot. Consider buying a few copies as Christmas gifts, if you agree with us that “the book does well” is a useful tool for shifting the conversation at the national and international level.

Next: write reviews on Amazon (US, UK) and Goodreads and similar platforms. Share and discuss the book on social media. If you make video content, consider making videos about the book’s ideas. Write essays about parts of it, or about ideas it sparked in you. Spark conversation and engage in whatever way feels exciting to you, without worrying about whether you’re following a Perfectly Polished PR Strategy or anything like that.

One of the primary goals of this book is to get people talking about the situation. Not just policymakers and technical folks, but everyone. We don’t expect everyone to agree with us on every claim, but we honestly believe that if more people understood the actual state of affairs, the world could take serious action here and address the danger.

Blurbs

Back in June we shared an initial set of book blurbs that we were thrilled about. We continue to be super jazzed by the book’s reception. Some new blurbs we’ve gotten for the book include:

This book outlines a thought-provoking scenario of how the emerging risks of AI could drastically transform the world. Exploring these possibilities helps surface critical risks and questions we cannot collectively afford to overlook.

—Yoshua Bengio, Turing Award winner and scientific director of MILA

The authors present in clear and simple terms the dangers inherent in ‘superintelligent’ artificial brains that are ‘grown, not crafted’ by computer scientists. A quick and worthwhile read for anyone who wants to understand and participate in the ongoing debate about whether and how to regulate AI.

—Joan Feigenbaum, Grace Murray Hopper Professor of Computer Science, Yale University

A serious book in every respect. In Yudkowsky and Soares’s chilling analysis, a super-empowered AI will have no need for humanity and ample capacity to eliminate us. If Anyone Builds It, Everyone Dies is an eloquent and urgent plea for us to step back from the brink of self-annihilation.

—Fiona Hill, former Senior Director, White House, National Security Council

A good book, worth reading to understand the basic case for why many people, even those who are generally very enthusiastic about speeding up technological progress, consider superintelligent AI uniquely risky.

—Vitalik Buterin, co-founder of Ethereum

The definitive book about how to take on “humanity’s final boss” — the hard-to-resist urge to develop superintelligent machines — and live to tell the tale.

—Jaan Tallinn, co-founder of Skype

In If Anyone Builds It, Everyone Dies, Eliezer Yudkowsky and Nate Soares deliver a stark and urgent warning: humanity is racing toward the creation of superintelligent AI without the safeguards to survive it. With credibility, clarity and conviction, they argue that advanced AI systems, if misaligned even slightly, could spell the end of human civilization. This provocative book challenges technologists, policymakers, and citizens alike to confront the existential risks of artificial intelligence before it’s too late. An appeal for awareness and a call for caution, this is essential reading for anyone who cares about the future.

—Emma Sky, Senior Fellow, Yale Jackson School of Global Affairs

Media

It’s still very early, but we’ve already seen some great coverage associated with the book launch. Kevin Roose wrote a New York Times profile of Eliezer, and also interviewed Eliezer on his Hard Fork podcast. Reed Albergotti at Semafor wrote about the book and interviewed Nate and Eliezer. Nate also appeared on Steve Bannon’s podcast, War Room.

Two of my favorite takes have been in The Times and SF Chronicle.

The book is also listed in various “must read” lists, including AARP’s The Big Fall 2025 Book Preview: 32 Hot New Reads, Our Culture’s Most Anticipated Books of Fall 2025, and The Guardian’s biggest books of the autumn. A large (edited) excerpt of Chapter 2 of the book was recently published in The Atlantic.

There will be a large number of media appearances over the coming weeks, so stay tuned! You can get regular updates via the MIRI X (Twitter) account.[2]

If you have contacts at media outlets, popular podcasts, YouTube channels, etc. that you can put us in touch with, please email us at media@intelligence.org.

In Closing

We’ve never done this before! A major book launch turns out to be made up of equal parts excitement, nervousness, and exhaustion. We’re about to find out if the past 18 months of mostly-behind-the-scenes effort will pay off in the way we hope it will.

We’re excited for the book to finally be out, and available for all of you to read and engage with. We’d like to see these ideas spread far and wide in the world, and for this to become a real, live, and serious topic of conversation.

It’s been a massive project, and we’re grateful to everyone who’s helped. As a woefully incomplete start, we are thankful to our publishing team at Little, Brown; our publicist DEY; the Lightcone team for tremendous web development help; all of our colleagues within MIRI and outside of it; and (of course) Eliezer and Nate, for the hard work they’ve done over the years.

This project has consumed approximately all of my life force over the past six months, and now I’m going to go collapse for a bit before poking my head back out to see how big the wave has actually become. In the best of worlds, this is really just the beginning. LFG.

[1] In the US. Two more days for the UK; apologies to the folks across the pond. Also, translations are in the works, but those take time.

- ^
