Security Operations Centers (SOCs) within cybersecurity teams are at a crossroads. They face well-documented challenges, ranging from increasing alert volumes to talent shortages and the limitations of legacy tools. At the same time, they must grapple with the capabilities of large language models (LLMs) and the practical challenges of leveraging them effectively.

This report examines how AI and LLMs are reshaping SOC workflows, including automating alert management, enriching threat data, and speeding up incident response and investigations. Drawing on my discussions with CISOs and security leaders, as well as case studies of emerging technologies, it provides a roadmap for adopting AI-augmented SOC strategies that enhance productivity, reduce costs, and address trust and transparency concerns.

This report builds on my previous collaboration with Josh Trup on the Security Automation report. It highlights how LLMs are specifically enhancing SOC operations. I hope you find it valuable.

Collaboration:

This report was done in collaboration with Filip Stojkovski, a SOC and Security Engineering leader who has worked at companies such as Snyk, Adobe, CrowdStrike, and Deutsche Bank. He brings over a decade of SOC experience and writes a blog called Cybersecurity Automation and Orchestration.

Market Ecosystem for Security Operations Center (SOC)

Key Takeaways

The term ‘AI for SOC (Security Operations Center)’ is a flawed label for this category. A better name would be AI-Assisted SOC Analyst or Augmented AI Analyst. Almost none of the security leaders we’ve spoken to can envision a SOC that is completely “AI-driven”.

The challenges within the SOC are well-documented and affect nearly every SOC. Even the best-performing SOCs with strong MTTD (Mean Time to Detect) and MTTR (Mean Time to Respond) still face challenges with monitoring and gaining visibility over all alerts. These challenges can be categorized into:

Alert Fatigue: Ineffective detection rules generate a high daily volume of alerts. The resulting repetitive work leads to low morale and burnout, contributing to attrition.

High costs: Many companies still face high costs in managing the SOC infrastructure and monitoring tools to keep up with emerging threats.

Pressure to improve MTTD (Mean Time to Detect) & MTTR (Mean Time to Respond): The longer a threat goes undetected, the more damage it causes. As a result, SOC leaders are under significant pressure to reduce MTTD/MTTR to minimize the impact of any threat.

Missed detections: Teams struggle to build and manage detections for newly adopted solutions. In some cases, alerts go ignored, which keeps many leaders awake at night.

Limitation of past automation solutions: The first generation of automation for security has disappointed many leaders due to the large upfront investment and ongoing maintenance required.

The market for AI-SOC technologies faces an uphill battle on both the demand and supply sides. Demand refers to buyers and users; supply refers to vendors.

The demand-side challenge comes from CISOs and SecOps leaders who have “trust issues” with AI solutions and doubt their efficacy. Leaders are not yet confident enough to place AI on the frontlines of the SOC, and messaging such as “replacing an analyst” has turned some analysts against these emerging solutions. CISOs want greater transparency into how security solutions arrive at their results. Many companies are still comfortable with the status quo, where analysts comb through alerts and occasionally use SOAR playbooks for repetitive tasks.

The supply side challenge comes from vendors having to convince leaders that their solutions are more effective, transparent, and accurate than existing processes.

The key measure of success will be whether companies believe an AI analyst can improve MTTD/MTTR and augment productivity almost as well as a human analyst. Other key factors include managing costs and saving analyst time.

In the near future, we believe the market is ready for next-gen, AI-powered SOAR solutions. These solutions build on existing incident-response playbooks and integrate LLMs to make those playbooks easier to use. This combination offers the best of both worlds, helping SOC teams address existing market challenges.

Breaking Down Security Operations Center (SOC)

Before diving into automation and the challenges security teams face, it is important to clearly define Security Operations Centers (SOCs). SOCs are centralized units within organizations dedicated to monitoring, detecting, analyzing, and responding to cybersecurity incidents. They play a pivotal role in safeguarding an organization’s digital assets, infrastructure, and sensitive data against a variety of cyber threats.

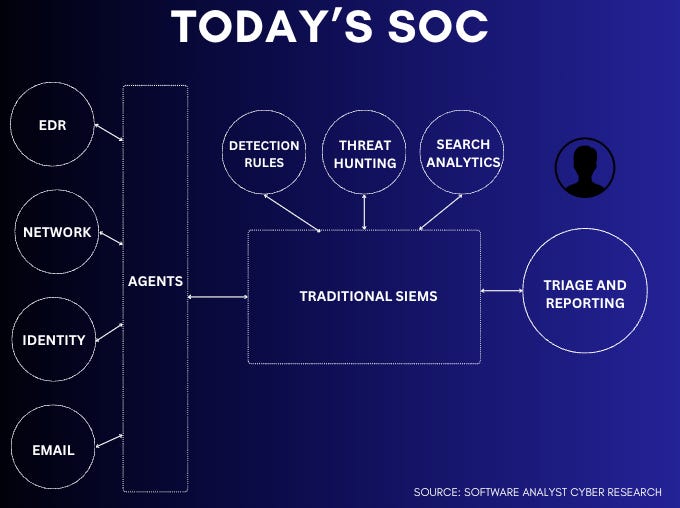

How Most SOCs Operate

Security Operations Centers (SOCs) typically provide monitoring and detection services by collecting and analyzing MELT data. MELT is an acronym for the essential types of telemetry required to detect, investigate, and respond to security incidents. In the early days of SOC operations, companies used agents to collect the following MELT data points:

Metrics

Events

Logs

Traces

MELT data forms the backbone of the SOC. By collecting and analyzing MELT data, SOC teams gain full visibility into their environment, enabling them to detect anomalies, policy violations, and indicators of compromise (IOCs). Agents (or sensors or APIs) are installed on endpoints or servers to gather this data. Once collected, Tier 2 or Tier 3 security analysts focus on analyzing alerts, writing detection rules, and performing threat hunting in the SIEM. The objective here is to highlight actual threats from the raw alerts, logs and telemetry while storing this information for future investigations and queries.

Differences between Tier 1, Tier 2 and Tier 3 Analysts:

Tier 1 Analyst: Primarily responsible for the initial triage of security alerts and incidents using a SIEM. They identify and categorize potential threats and escalate higher-risk cases to senior analysts.

Tier 2 Analyst: Focuses on investigating, containing, and responding to escalated incidents. They also fine-tune detection rules to improve detection quality.

Tier 3 Analyst: Proactively identifies advanced threats and enhances SOC processes. They conduct threat hunting and malware analysis, leveraging threat intelligence.

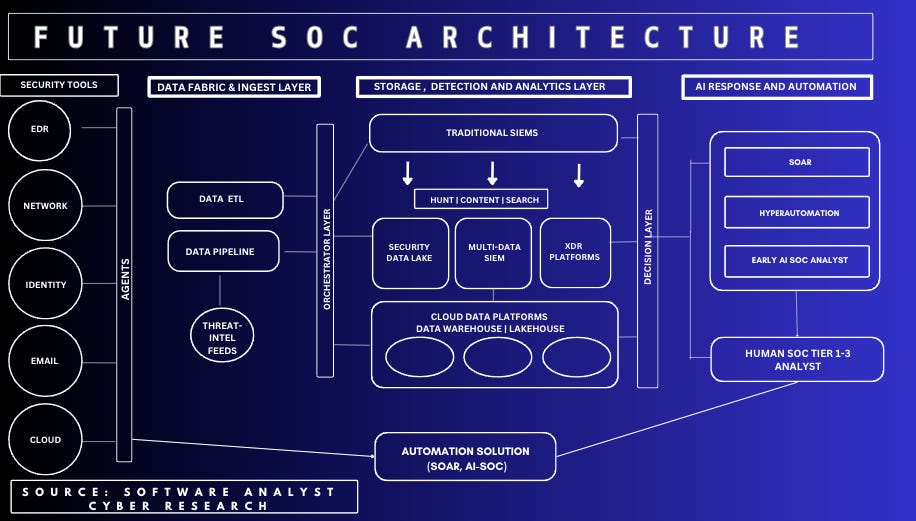

Future SOC Architecture

This report isn’t focused on SOC architecture, but we believe a foundational understanding of it is essential for the rest of the discussion. Below is a breakdown of this architecture:

Data Fabric Layer

In a SOC, raw security data from diverse sources must be standardized and processed before being sent to a SIEM (or automation solution) for analysis. This category has two distinct types of vendors: 1) those focused on building and managing the data pipeline, and 2) those prioritizing data filtering and enrichment to improve detection quality.

The key task is formatting data into a consistent structure to enable seamless integration and adding contextual information, such as IP geolocation or threat intelligence feeds, to improve data quality. We’ll uncover how this layer increasingly intersects with automation efforts.
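To make the normalization and enrichment step concrete, here is a minimal Python sketch. The field mappings, the two source names, and the in-memory `GEOIP` and `THREAT_INTEL` tables are hypothetical stand-ins; a real data pipeline would load schemas from configuration and query a GeoIP database or threat intelligence feed instead.

```python
from dataclasses import dataclass, field
from typing import Any

# Hypothetical mappings from source-specific keys to one common schema.
FIELD_MAPS: dict[str, dict[str, str]] = {
    "firewall": {"src": "source_ip", "dst": "dest_ip", "ts": "timestamp"},
    "cloudtrail": {"sourceIPAddress": "source_ip", "eventTime": "timestamp",
                   "eventName": "action"},
}

# Stand-in context tables; a real pipeline would query a GeoIP DB or TI feed.
GEOIP = {"203.0.113.7": "NL"}
THREAT_INTEL = {"203.0.113.7": "known-scanner"}


@dataclass
class NormalizedEvent:
    source: str
    fields: dict[str, Any]
    context: dict[str, Any] = field(default_factory=dict)


def normalize(source: str, raw: dict[str, Any]) -> NormalizedEvent:
    """Rename known keys to the common schema; unmapped keys are dropped."""
    mapping = FIELD_MAPS[source]
    fields = {common: raw[key] for key, common in mapping.items() if key in raw}
    return NormalizedEvent(source=source, fields=fields)


def enrich(event: NormalizedEvent) -> NormalizedEvent:
    """Attach geolocation and threat-intel context for the source IP, if known."""
    ip = event.fields.get("source_ip")
    if ip in GEOIP:
        event.context["geo"] = GEOIP[ip]
    if ip in THREAT_INTEL:
        event.context["threat_intel"] = THREAT_INTEL[ip]
    return event


raw = {"sourceIPAddress": "203.0.113.7", "eventTime": "2024-05-01T10:00:00Z",
       "eventName": "RunInstances", "userAgent": "aws-cli"}
event = enrich(normalize("cloudtrail", raw))
print(event.fields, event.context)
```

Keeping normalization and enrichment as separate steps mirrors the two vendor types described above: one owns the pipeline shape, the other the added context.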

Storage and Detection Layer

Companies leverage threat detection rules to define the logic for identifying malicious activity in their data. Once processed, data is routed to a centralized repository for storage and analysis. This may involve a SIEM (Security Information and Event Management) offering real-time monitoring and alerting or a cloud-based data lake designed to reduce costs.
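A detection rule is ultimately logic applied to normalized data. The sketch below expresses one hypothetical rule in Python (a successful login preceded by repeated failures from the same IP) to show the shape of that logic. The `LoginEvent` type, the threshold of five failures, and the ten-minute window are illustrative assumptions rather than recommended values, and production rules are usually written in a SIEM's own query language.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class LoginEvent:
    user: str
    source_ip: str
    success: bool
    timestamp: datetime


def detect_bruteforce(events, threshold=5, window=timedelta(minutes=10)):
    """Alert on a successful login preceded, within `window`, by at least
    `threshold` failed logins from the same source IP."""
    alerts = []
    failures: dict[str, list[datetime]] = {}
    for e in sorted(events, key=lambda ev: ev.timestamp):
        if not e.success:
            failures.setdefault(e.source_ip, []).append(e.timestamp)
            continue
        recent = [t for t in failures.get(e.source_ip, [])
                  if e.timestamp - t <= window]
        if len(recent) >= threshold:
            alerts.append({"rule": "possible_bruteforce", "user": e.user,
                           "source_ip": e.source_ip, "failures": len(recent),
                           "timestamp": e.timestamp})
    return alerts


base = datetime(2024, 5, 1, 9, 0)
events = [LoginEvent("alice", "198.51.100.4", False, base + timedelta(minutes=i))
          for i in range(6)]
events.append(LoginEvent("alice", "198.51.100.4", True, base + timedelta(minutes=7)))
print(detect_bruteforce(events))
```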

AI Response & Automation

When detection rules are triggered or alerts are generated, SOC analysts investigate these alerts, assess their severity, and implement appropriate remediation. Modern SOC automation solutions are adopting a more proactive approach, integrating directly with security tools rather than relying solely on SIEM alerts. This enables richer alert enrichment and contextual analysis, which makes remediation more efficient: analysts can separate real threats from false positives more quickly and move on to assessment and containment. For incident response, teams can conduct deeper investigations using indicators discovered during triage, an area where legacy SOAR solutions have proven particularly effective. While AI is poised to transform SOC operations in this layer, its adoption remains complicated by SOC leaders' ongoing concerns about SIEM-related costs and implementation challenges.

Full Market Ecosystem for Security Operations (in 2024)

In summary, the SOC is the centralized hub for monitoring, detecting, analyzing, and responding to cybersecurity incidents. Its core processes include data collection, where telemetry (MELT: Metrics, Events, Logs, Traces) is gathered from endpoints, networks, and systems; alert triage, prioritizing and categorizing threats; investigation, conducting root cause analyses and threat hunting; response, mitigating and remediating incidents; and continuous improvement, updating detection rules and workflows based on new threats. By leveraging SIEMs, automation tools, and skilled analysts across Tier 1 (triage), Tier 2 (response), and Tier 3 (proactive hunting), SOCs protect an organization’s assets against evolving cyber threats.

Outsourced & Managed Security Operations

Organizations with large budgets and extensive scale may choose to build a fully internal SOC, purchasing all the necessary technologies. In this report, we outline the processes and technologies involved in creating a modern SOC. However, it is important to note that not every organization will implement all these technologies.

Smaller and mid-sized companies often turn to managed 24/7 SOC services. These include Managed Detection and Response (MDR) providers or Managed Security Service Providers (MSSPs), which use their own SIEM, EDR, or other solutions to monitor client environments around the clock. These services identify alerts, assess potential threats, and notify clients about incidents requiring attention.

By leveraging managed services, organizations reduce their need for in-house expertise while managing costs associated with these technologies. Additionally, outsourcing tasks such as threat intelligence, forensic investigations, and incident response (IR) can help internal SOCs manage reporting and integrate multiple threat feeds more effectively.

The Evolution of Automation In Cybersecurity

At its core, automation has a single purpose: to let machines perform repetitive, time-consuming and monotonous tasks. This frees up scarce human analysts to focus on more important issues, such as threat hunting and proactive investigations. A common concern, both in cybersecurity and other industries, is that automation might replace human workers. While this fear is partly justified, automation primarily enhances human capabilities in security operations and helps bridge the talent gap in cybersecurity.

Different organizations and SOC teams have been approaching automation in different ways, depending on budget, technical talent and team size. Key approaches include:

Bespoke automation scripts: Many organizations initially relied on bespoke, in-house scripts to manage security tasks. These custom scripts, while tailored to specific organizational needs, often required significant software engineering expertise to develop and maintain.

Generalist Automation: Next came RPA and general-purpose automation tools like Zapier, n8n, and UiPath, which focused on automating workflows across applications and systems without extensive coding. While these tools improved operational efficiency, they were generally not designed for security operations beyond basic IT workflows.

History of Automation In Cybersecurity Operations

Traditional SOAR Platforms

Security Orchestration, Automation, and Response (SOAR) platforms were the first generation of products built to automate security operations tasks. They offered security-specific capabilities, including integrations with threat intelligence platforms and SIEMs, incident response playbooks, and automated workflows tailored to security incidents. These solutions were designed to act on SIEM outputs by automating responses and providing tools for managing, planning, and coordinating incident response activities.

These systems were built to understand and prioritize alerts based on their security relevance, enabling SOC teams to quickly identify and respond to critical threats. By 2018, advanced SOAR platforms began integrating artificial intelligence (AI) and machine learning (ML) to enhance threat detection and response, making these systems not only reactive but also proactive by predicting and mitigating potential security threats.

Sample vendors include Phantom Cyber, acquired by Splunk in 2018, which was one of the earliest and most prominent names in the SOAR space. Phantom’s platform allowed security teams to automate tasks, orchestrate workflows, and integrate various security tools. Later, Google built Google SecOps around its acquisition of Siemplify, whose security operations platform was often categorized as a SOAR solution.

Key Challenges with legacy SOAR platforms

Despite their promise, traditional SOAR solutions often fell short of expectations, creating significant challenges for security teams:

Complex Implementation: Lengthy deployment cycles and extensive configuration requirements delayed time-to-value

Resource-Intensive Management: High total cost of ownership (TCO) due to the need for specialized expertise and ongoing maintenance

Integration Limitations: Restricted connectivity with existing security tools and difficulties adapting to new technologies

Alert Management Issues: Inconsistent alert handling and missed critical alerts due to rigid playbook structures

Limited Adaptability: Inflexible frameworks that struggled to accommodate evolving security needs and emerging threats

Hyperautomation

The 2020s brought a range of challenges for Security Operations Centers (SOCs) that existing SOAR tools struggled to address. Cybersecurity threats became increasingly sophisticated as businesses adopted more cloud-native data solutions and contended with numerous security tools. At the same time, demand for experienced cybersecurity professionals grew while supply remained limited.

As legacy SOAR tools grew in size and complexity, they often compelled organizations to hire specialized experts or invest heavily in professional services, costs that frequently exceeded the initial price of the SOAR tool itself.

Defining AI-SOC

AI for SOC (Security Operations Center) is a flawed label for this category, one often surrounded by buzzwords. It primarily describes an advanced cybersecurity framework that incorporates LLMs (post-ChatGPT) into traditional SOC processes.

This next generation of solutions aims to use machines to replicate the role of security analysts. As discussed earlier, enterprises traditionally routed alerts through a SOAR workflow, or human analysts manually assessed and responded to alerts and security incidents. The goal of an AI SOC analyst is to immediately process low-level alerts, automatically triage them, and investigate incidents.

AI for SOC signifies a shift from reactive, manual security processes to a proactive, intelligence-driven paradigm. Its main components include:

Triage

Investigation

Response

Workflow automation

Together, these components create an ecosystem that enhances efficiency. These systems are not intended to replace analysts but to empower them to focus on larger security challenges while continuously adapting to an ever-changing threat landscape.

As of today, most AI functionalities excel at tasks typically performed by Tier 1 analysts. AI for SOC can be split into a few core categories, primarily aimed at automating the following:

Alerting and Triage: AI handles the flood of alerts, highlights critical ones, and enriches indicators of compromise (IOCs) with actionable threat intelligence.

Investigation and Threat Hunting: AI delves deeper into incidents, correlates data, and uncovers hidden threats. It checks past user behavior to understand threat patterns.

Response Execution: AI automates remediation while keeping humans in the loop for oversight. It may refer back to business systems such as directory information, emails, and tickets for context before taking action.

Proactive Process Engineering: AI evolves workflows and integrations to meet the dynamic demands of cybersecurity.

Evidence Analysis: AI analyzes PDFs, shell scripts, packet captures, and other evidence for malicious intent.
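As a rough illustration of the alerting-and-triage step, the sketch below ranks alerts and flags those that should reach a human first. It deliberately uses a simple, hypothetical scoring formula (severity times asset criticality, plus a bonus per threat-intel match) as a stand-in for the model-driven judgment an AI-SOC product would apply; the weights, the `Alert` fields, and the review threshold are all assumptions.

```python
from dataclasses import dataclass

SEVERITY = {"low": 1, "medium": 2, "high": 3, "critical": 4}


@dataclass
class Alert:
    alert_id: str
    severity: str           # one of the SEVERITY keys
    asset_criticality: int  # hypothetical scale: 1 (lab host) to 3 (crown jewel)
    ioc_matches: int        # indicators that matched threat intelligence


def triage_score(alert: Alert) -> int:
    # Illustrative weighting only; a real system would tune or learn this.
    return SEVERITY[alert.severity] * alert.asset_criticality + 2 * alert.ioc_matches


def triage(alerts, review_threshold=8):
    """Rank alerts by score and mark those that should go to an analyst first."""
    ranked = sorted(alerts, key=triage_score, reverse=True)
    return [(a.alert_id, triage_score(a), triage_score(a) >= review_threshold)
            for a in ranked]


queue = [Alert("A-1", "low", 1, 0), Alert("A-2", "high", 3, 1),
         Alert("A-3", "medium", 2, 0)]
for alert_id, score, needs_review in triage(queue):
    print(alert_id, score, needs_review)
```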

AI-SOC Assistant Vendors (Post-2022 ChatGPT)

Based on our research, this is our framework for categorizing both newly emerged vendors and established vendors that rebuilt their solutions around recent advances in LLMs and language understanding:

AI-XDR & Security co-pilot platforms

AI-SOC Tier 1 Analyst for alert triage & Investigation

AI-SOC Tier 2/3 Analyst for advanced incident response and threat hunting

AI Automation Engineer for creating custom advanced security workflows

1) AI-Powered XDR & Co-Pilot Platforms

The largest EDR/XDR vendors, such as Microsoft, Palo Alto Networks, and CrowdStrike, now provide AI-powered XDR platforms and co-pilot solutions. These vendors have evolved from focusing solely on endpoint telemetry to ingesting diverse third-party data through XDR platforms with basic response capabilities.

With advancements in AI/LLM, these vendors have incorporated generative AI, significantly enhancing their solutions’ ability to triage alerts automatically and provide robust AI-driven summaries and log data analysis. Many began with co-pilot releases, and leading vendors in this category include:

CrowdStrike's Charlotte AI

Microsoft Security Copilot

SentinelOne Purple AI

Palo Alto Networks (Cortex XSIAM)

Splunk ES, Google SecOps, Radware, etc.

One of the major capabilities of these solutions is their security co-pilot feature, which enhances SOC operations through various intelligent functionalities. These co-pilots help analysts navigate data sources more efficiently, enabling them to establish ground truth faster and expedite their investigations, research, and discovery. They provide analysts with guided responses for incident handling, automatically generate detailed incident reports, and analyze scripts and code for potential threats.

These co-pilots are particularly helpful for summarizing incidents, giving analysts quick insights, and accelerating investigations, which reduces response times and lightens analysts’ workload. What makes them powerful is the vast data behind them; Microsoft, for example, says its security-specific model draws on more than 65 trillion security signals.

Another key advantage these vendors possess is their extensive datasets, drawn from threat intelligence and endpoint telemetry, which enable advanced AI model training. These massive telemetry datasets, accumulated over the years, provide a significant competitive edge in developing robust AI models and comprehensive threat intelligence capabilities. Additionally, they benefit from strong ecosystem integration by seamlessly combining proprietary tools (e.g., Cortex, Falcon) with third-party platforms.

For example,

Charlotte AI can correlate seemingly unrelated alerts to identify complex attack patterns and provide real-time threat hunting capabilities, leveraging CrowdStrike’s strengths at the endpoint. These platforms now offer integrated AI capabilities that combine alert analysis with automated response orchestration, creating a more streamlined security workflow.

Enhanced Analysis, Human-Guided Decisions: AI serves as an intelligent assistant, processing vast amounts of data and providing insights, but critical security decisions still require human expertise. Modern AI-powered alert analysis systems act as intelligent collaborators which enhance the capabilities of security teams by automating complex analysis tasks.

2) AI-SOC Tier 1 Analyst Solutions

These solutions are taking things a step further with the goal of emulating a human Tier 1 analyst’s actions when an alert is generated. By automating routine investigation tasks typically performed by human analysts, they aim to reduce response times and lighten the workload for security teams. These systems go beyond simple alert summaries, delivering enriched, actionable insights. Their core functionalities can be categorized into the following key roles:

Alert Grouping: They leverage AI tools to efficiently organize and consolidate alerts to minimize noise and provide a clearer picture of potential threats:

Alert deduplication and grouping per asset: They identify and consolidate duplicate events that may have bypassed initial SIEM filtering. They also aggregate alerts related to the same asset, creating a holistic view of potential threats.

False-positive alerts: These solutions sift through extensive datasets to separate genuine threats from false positives and give analysts detailed explanations for closing cases.

Alert Enrichment (True Positives): AI enhances raw alerts by adding essential context, empowering analysts with the information needed for thorough investigations and decision-making. Key enrichment areas include:

Indicator of Compromise (IOC) Enrichment: This is a key component. The system checks indicators against both paid and open-source threat intelligence feeds, or consults a Threat Intelligence Platform (TIP) if the enterprise has centralized its intelligence data.

Machine (Endpoint/Server) Enrichment: Systems gather detailed information about affected machines, such as configurations, vulnerabilities, and activity patterns.

Account (Identity and Access Management) Enrichment: Solutions retrieve user account details, access patterns, and recent activity to identify potential misuse or compromise.
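Deduplication and per-asset grouping can be sketched in a few lines of Python. This assumes a hypothetical `Alert` record and treats two alerts as duplicates when they share the same rule, asset, and indicator; real products use richer similarity logic, but keeping the earliest alert and then grouping by asset illustrates the idea.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    rule: str
    asset: str       # host, account, or cloud resource the alert is about
    indicator: str   # e.g. an IP address or file hash
    timestamp: int   # epoch seconds


def dedupe_and_group(alerts):
    """Keep the earliest alert per (rule, asset, indicator), then group by asset."""
    seen = set()
    grouped = defaultdict(list)
    for a in sorted(alerts, key=lambda a: a.timestamp):
        key = (a.rule, a.asset, a.indicator)
        if key in seen:
            continue  # duplicate that slipped past SIEM filtering
        seen.add(key)
        grouped[a.asset].append(a)
    return dict(grouped)


alerts = [
    Alert("suspicious_login", "laptop-42", "198.51.100.4", 100),
    Alert("suspicious_login", "laptop-42", "198.51.100.4", 160),  # duplicate
    Alert("malware_hash", "laptop-42", "e3b0c442", 200),
    Alert("suspicious_login", "srv-db-1", "198.51.100.4", 150),
]
for asset, group in dedupe_and_group(alerts).items():
    print(asset, [a.rule for a in group])
```

Grouping by asset gives the analyst one holistic view of `laptop-42` instead of three separate tickets.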

Through strong, alert grouping and enrichment capabilities, many of the AI-SOC Analysts aim to enhance the human analyst workflow and, subsequently, over time, replace repetitive tasks with an AI agent. However, there are some limitations:

Predefined Detections: AI Tier 1 systems primarily operate within the scope of predefined detection rules and require regular fine-tuning to adapt to new threats.

Onboarding Challenges: Configuring these tools during initial deployment and for new detection scenarios can be resource-intensive.

Additional time spent on false positives (FPs): Some leaders worry that managing these solutions and validating their verdicts could take as much time as having an analyst handle the alerts directly.

Don't expect these tools to eliminate false positives (FPs) entirely. A human must still make the final call on alerts: AI Tier 1 tools guide you toward the answer but won't make the decision for you. They're primarily designed to automate routine tasks, freeing analysts to focus on more critical issues.

Sample vendors: Some of the leading vendors include Dropzone, Prophet AI and Hunters AI.

Dropzone: I was impressed by Dropzone’s visualization and its user-friendly interface, designed for seamless navigation by analysts.

Prophet AI: I was impressed by its rapid deployment. Prophet AI integrates into environments faster than competitors, providing visibility into investigative questions and evidence.

Hunters AI: Hunters released AI-Assisted Investigation, which uses AI to provide tailored context, explanations, and actionable next steps. Designed to empower analysts of all skill levels, it offers contextual summaries, relevant explanations, and guided remediation, along with a unified view of all related information. By automating context gathering and surfacing actionable insights, it saves analysts significant time and helps them cope with high alert volumes. Hunters has published a full analysis of AI-Assisted Investigation.

Many other solutions on the market are worth noting, including Intezer’s AI SOC offering.

3) AI-SOC Tier 2 Analyst - Reactive Threat Hunter

The first tier of analysts handles basic responsibilities within security operations centers. A second group of analysts has more advanced skills and is usually responsible for in-depth incident analysis and threat hunting, using threat intelligence, packet analyzers, and forensic tools in their investigation workflow.

AI solutions that emulate these analysts can be referred to as AI Responders and Threat Hunters. They take automation a step further: rather than only analyzing the alert, they also execute a response. For instance, they can isolate a host, quarantine a file, or adjust your block/allow lists automatically.

So, imagine you’ve received an alert from your Tier 1 AI tool, and it’s flagged as a suspicious file. The AI Tier 2 platform can jump in and take actions such as:

Implementing containment, eradication, and recovery steps for confirmed incidents.

Performing host isolation.

Managing file quarantine.

Automatically updating block/allow lists.

Correlating data from multiple sources to build a detailed picture of an attack and evaluating the blast radius of an incident.

Engaging in reactive threat-hunting once an incident has been triggered.

While these automated actions follow predefined playbooks and work efficiently for routine tasks, they have limitations with complex or custom workflows. The systems perform best on familiar, established processes but become less reliable when handling intricate scenarios—such as compliance alerts, which we'll discuss later. In general, some solutions are evolving to address the highly dynamic, undefined, and technical scenarios in the SOC.
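The playbook-driven behavior described above, including the fallback when a scenario is unfamiliar, can be sketched as a lookup table of predefined actions. The category names, action names, and the `execute` callback are hypothetical; in practice `execute` would call EDR, firewall, or identity-provider APIs.

```python
from typing import Callable

# Hypothetical playbooks: alert category -> ordered, predefined actions.
PLAYBOOKS: dict[str, list[str]] = {
    "malicious_file": ["quarantine_file", "isolate_host", "add_hash_to_blocklist"],
    "malicious_ip": ["add_ip_to_blocklist"],
}


def run_playbook(alert: dict, execute: Callable[[str, dict], str]) -> dict:
    """Run the predefined actions for a known category; escalate anything else."""
    actions = PLAYBOOKS.get(alert["category"])
    if actions is None:
        # Unfamiliar scenario (for example a compliance alert): hand off to a human.
        return {"status": "escalated",
                "reason": f"no playbook for {alert['category']}"}
    results = [execute(action, alert) for action in actions]
    return {"status": "contained", "actions": list(actions), "results": results}


executed = []


def fake_execute(action: str, alert: dict) -> str:
    # Stand-in for real EDR/firewall API calls; only records what would run.
    executed.append((action, alert.get("host")))
    return "ok"


print(run_playbook({"category": "malicious_file", "host": "laptop-42"}, fake_execute))
print(run_playbook({"category": "compliance_violation"}, fake_execute))
```

The explicit escalation branch is the point: the playbook handles familiar cases well and hands everything else to a person rather than guessing.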

4) AI Automation Engineer: The Future of Custom Workflows

AI Automation Engineers combine next-generation SOAR/Hyperautomation with large language model (LLM) capabilities into a unified platform. This emerging market segment shows tremendous potential. The key innovation lies in using AI to create automation workflows through simple natural language commands. They enable Security Operations Center (SOC) teams to create sophisticated workflows with minimal coding expertise.

Some of the reasons we favour this category include:

Strong workflow capabilities: These solutions already have strong playbooks and predefined automated workflows for triaging alerts, gathering data, and performing initial investigations, across a vast number of enterprise use cases.

Moving past static workflows: Traditional SOAR solutions relied on playbooks that executed static workflows, which often could not adapt to dynamic scenarios or nuanced contexts. With AI, workflows can be enriched dynamically by interpreting the context of each incident and adapting responses accordingly. For example, AI can determine whether a flagged IP address is truly malicious based on updated threat intelligence, or tailor remediation steps to the criticality of the affected asset.

Continuous Learning & Adaptation: SOAR relied on preconfigured rules and manual playbook updates, which can become outdated as threats evolve. AI systems can instead learn from every incident and continuously improve their detection and response capabilities.

Custom automation: Build and modify workflows specific to your environment. Teams can use NLP to create automation flows using conversational commands instead of code. This technology is key for teams needing to rapidly implement new detection logic or optimize response strategies.
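The difference between a static playbook and a context-aware one can be sketched as follows. A confidence-weighted vote across threat-intel feeds decides whether a flagged IP is malicious, and the remediation steps depend on the affected asset's criticality. This is a deterministic stand-in for the AI-driven judgment the vendors describe; the feed names, the 0.7 threshold, and the action names are assumptions.

```python
from dataclasses import dataclass


@dataclass
class IntelHit:
    feed: str
    malicious: bool
    confidence: float  # 0.0 to 1.0, as reported by the feed


def ip_verdict(hits: list[IntelHit], threshold: float = 0.7) -> str:
    """Confidence-weighted vote across threat-intel feeds."""
    total = sum(h.confidence for h in hits)
    if total == 0:
        return "unknown"  # no usable intelligence
    malicious = sum(h.confidence for h in hits if h.malicious)
    return "malicious" if malicious / total >= threshold else "benign"


def choose_remediation(verdict: str, asset_criticality: str) -> list[str]:
    """Pick steps based on the verdict and the affected asset, not a fixed sequence."""
    if verdict == "unknown":
        return ["escalate_to_analyst"]
    if verdict == "benign":
        return ["close_with_explanation"]
    if asset_criticality == "high":
        # Avoid automatically disrupting a critical system: block at the edge, page a human.
        return ["block_ip_at_firewall", "page_on_call"]
    return ["block_ip_at_firewall", "isolate_host"]


hits = [IntelHit("feed_a", True, 0.9), IntelHit("feed_b", True, 0.6),
        IntelHit("feed_c", False, 0.3)]
verdict = ip_verdict(hits)
print(verdict, choose_remediation(verdict, "high"), choose_remediation(verdict, "low"))
```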

Leading platforms like Torq, Tines, Mindflow, and Blink Ops are pioneering this space. The main limitation of these solutions is that they typically require more resources and expertise to manage effectively.

Real-World Case Study of an AI-Powered Unified SOC Workflow

This breakdown explains the core processes involved:

Identification: We start with a SIEM alert. Take the example of an admin account spinning up a new resource in a region it does not usually use. We then walk through the usual triage process to determine which steps can be fully automated, rather than spending over 30 minutes on them manually. (All of these steps assume we have the data points needed to enrich the alert and run the analysis.)

Alert Reception and Triage Agent Activation - Deduplication and Grouping: One of the first things an AI for SOC or Hyperautomation platform does is identify similar alerts triggered for the same cloud account or user. This reduces noise and helps focus on the actual issue at hand.

Alert Enrichment:

Internal Enrichment: The AI platform enriches the alert with details about the admin account, historical provisioning patterns, and resource types. It checks whether this activity aligns with previously authorized changes or expected admin behaviour. In this case, the automation—whether it’s a whitebox or blackbox solution—would connect with your IAM or ITDR systems to gather this context.

External Enrichment: The AI pulls in threat intelligence to check if the admin’s IP or related activity matches known bad actors or tactics. For instance, are these resources linked to cryptomining campaigns or flagged IPs? The quality of this enrichment depends heavily on the threat intelligence feeds you’re using and your confidence in their reliability. Here, the automation consolidates all relevant information into one place, making it easier for an analyst—or the AI agent itself—to determine if the alert is a true positive (TP), a false positive (FP), or requires escalation.

The key questions addressed include:

Who is involved (the admin account)? Where is the activity originating from? When did it occur?
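The identification and enrichment steps of this case study can be sketched as a single function that answers who, where, and when and produces a preliminary verdict. The in-memory tables stand in for the IAM/ITDR, change-management, and threat-intelligence integrations described above; every name and value in them is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProvisioningAlert:
    principal: str      # who: the admin account
    source_ip: str      # where: network origin
    region: str         # where: cloud region
    event_time: str     # when: ISO 8601 timestamp
    resource_type: str


# Hypothetical context; in practice these come from IAM/ITDR, change
# management, and threat-intelligence integrations.
USUAL_REGIONS = {"admin-ops": {"us-east-1", "eu-west-1"}}
APPROVED_CHANGES = {("admin-ops", "ap-southeast-2")}
KNOWN_BAD_IPS = {"192.0.2.50": "cryptomining campaign infrastructure"}


def enrich_and_assess(alert: ProvisioningAlert) -> dict:
    usual = alert.region in USUAL_REGIONS.get(alert.principal, set())  # internal
    approved = (alert.principal, alert.region) in APPROVED_CHANGES      # internal
    intel = KNOWN_BAD_IPS.get(alert.source_ip)                          # external
    if intel:
        verdict = "escalate"        # matches known-bad infrastructure
    elif usual or approved:
        verdict = "likely_fp"       # consistent with expected admin behavior
    else:
        verdict = "needs_review"    # unusual, but no external signal
    return {"who": alert.principal, "where": (alert.source_ip, alert.region),
            "when": alert.event_time, "usual_region": usual,
            "approved_change": approved, "threat_intel": intel,
            "verdict": verdict}


suspicious = ProvisioningAlert("admin-ops", "192.0.2.50", "sa-east-1",
                               "2024-05-01T03:12:00Z", "p3.16xlarge")
print(enrich_and_assess(suspicious))
```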

Containment (Tier 2 - Investigation)

Digging through logs and connecting the dots has always been one of those tedious, time-consuming tasks. With today’s AI for SOC and hyperautomation platforms, we can significantly speed this up—or, in some cases, even let the platform handle it autonomously.

Timeline Analysis: With AI assistance, the platform can reconstruct the sequence of events to pinpoint when the provisioning began. This involves accessing logs, searching for key indicators, and automatically building a timeline that shows what happened and when.

Forensic Evidence: Collecting forensic evidence—another labor-intensive step—can be fully automated. The platform captures snapshots of affected cloud instances, lists all running processes and active connections, and prepares saved searches for relevant CloudTrail logs or API activity. This ensures that analysts (or even the AI itself) have everything they need for further analysis.
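Timeline reconstruction largely comes down to filtering log records to the principal of interest, ordering them, and locating the first provisioning event. The records below are loosely modeled on CloudTrail's `eventTime` and `eventName` fields, but the flat `user` field is a simplification (CloudTrail nests identity under `userIdentity`), and the data is invented for illustration.

```python
from datetime import datetime

# Invented sample records, loosely modeled on CloudTrail's eventTime/eventName.
logs = [
    {"eventTime": "2024-05-01T03:15:40Z", "eventName": "RunInstances", "user": "admin-ops"},
    {"eventTime": "2024-05-01T03:02:11Z", "eventName": "ConsoleLogin", "user": "admin-ops"},
    {"eventTime": "2024-05-01T03:09:05Z", "eventName": "CreateKeyPair", "user": "admin-ops"},
    {"eventTime": "2024-05-01T03:20:00Z", "eventName": "DescribeRegions", "user": "dev-ci"},
]


def parse(ts: str) -> datetime:
    return datetime.strptime(ts, "%Y-%m-%dT%H:%M:%SZ")


def build_timeline(records, principal, provisioning_events=("RunInstances",)):
    """Order the principal's events and find when provisioning began."""
    own = sorted((r for r in records if r["user"] == principal),
                 key=lambda r: parse(r["eventTime"]))
    timeline = [f'{r["eventTime"]}  {r["eventName"]}' for r in own]
    first = next((r["eventTime"] for r in own
                  if r["eventName"] in provisioning_events), None)
    return timeline, first


timeline, first_provisioning = build_timeline(logs, "admin-ops")
print("\n".join(timeline))
print("provisioning began:", first_provisioning)
```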

The key questions we aim to address:

What is happening (e.g., resource misuse)?

Why is it occurring (e.g., compromised credentials, malicious intent)?

Eradication (Tier 3 - Remediation) - Automated Remediation:

Automated remediation has been around since the early days of SOAR, and with hyperautomation platforms, you can take it to the next level. For AI-driven SOCs, though, this is still a mixed bag because every organization has its own way of handling remediation.

In cases where the system is allowed to auto-remediate, it’s straightforward: isolate the affected host, rotate compromised credentials, or block IOCs. These actions can be executed seamlessly by the automation platform without human intervention.
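One way to express "allowed to auto-remediate" is an explicit allow-list: pre-approved action kinds run immediately through an integration handler, and everything else is queued for change management. This is a minimal sketch; the `Action` type, the `AUTO_APPROVED` set, and the `handlers` mapping are hypothetical stand-ins for whatever the automation platform actually exposes.

```python
from dataclasses import dataclass


@dataclass
class Action:
    kind: str    # e.g. "isolate_host", "rotate_credentials", "block_ioc"
    target: str  # host name, account, or indicator the action applies to


# Hypothetical policy: action kinds this organization lets run without a human.
AUTO_APPROVED = frozenset({"isolate_host", "rotate_credentials", "block_ioc"})


def dispatch(actions, handlers, auto_approved=AUTO_APPROVED):
    """Run pre-approved actions immediately; queue the rest for change management.

    handlers: action kind -> callable(target) that performs it via an integration
    """
    executed, queued = [], []
    for action in actions:
        handler = handlers.get(action.kind)
        if action.kind in auto_approved and handler is not None:
            handler(action.target)
            executed.append(action)
        else:
            queued.append(action)  # needs a change request / human approval
    return executed, queued
```

An approved kind with no registered handler is also queued, so a missing integration fails safe instead of silently dropping the action.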

However, if the remediation steps need to follow a change management process, things get a bit more structured. Here, the platform could generate the required change request or even prepare CloudFormation/Terraform templates for review and approval before applying fixes. This ensures compliance with organizational policies while still leveraging automation to save time.
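As an example of preparing a template for review instead of applying a fix directly, the sketch below renders a Terraform `aws_network_acl_rule` that denies outbound traffic to a single IOC address. Blocking via a network ACL (rather than a security group, which has no deny rules) is an assumption for illustration, as are the NACL ID and rule number the caller supplies.

```python
import ipaddress


def nacl_block_rule(ioc_ip, nacl_id, rule_number):
    """Render a Terraform aws_network_acl_rule denying all egress to one IPv4 address.

    NACL rules are evaluated in ascending rule_number order, so the deny must use a
    lower number than any allow rule that would otherwise match the traffic.
    """
    ip = ipaddress.ip_address(ioc_ip)  # raises ValueError on malformed input
    if ip.version != 4:
        raise ValueError("cidr_block takes IPv4; IPv6 needs ipv6_cidr_block")
    name = "block_" + str(ip).replace(".", "_")
    return f'''resource "aws_network_acl_rule" "{name}" {{
  network_acl_id = "{nacl_id}"
  rule_number    = {rule_number}
  egress         = true
  protocol       = "-1"
  rule_action    = "deny"
  cidr_block     = "{ip}/32"
}}
'''
```

The rendered snippet would be attached to the change request for approval before `terraform apply`, keeping the automation inside the organization's change process.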

Lessons Learned: Adaptive Learning: AI shines in this phase by automating what used to be painful manual work. It can generate detailed root cause analyses and incident summaries tailored for management and stakeholders, ensuring everyone gets the insights they need. But the real value lies in automating the feedback loop.

With adaptive learning, any newly discovered IOCs or TTPs are automatically added to your Threat Intelligence repository, enriching your defenses for the future. At the same time, any detection gaps identified during the incident are pushed directly into the backlog for your detection engineering team to address. This ensures continuous improvement and keeps your defenses sharp without adding extra overhead.
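The feedback loop can be sketched as two idempotent updates: add only genuinely new indicators to the threat intelligence store, and file each detection gap once in the detection engineering backlog. The in-memory `set` and `list` below are hypothetical stand-ins for a TIP and a ticketing system.

```python
def close_feedback_loop(findings, ti_repo, backlog):
    """Push new IOCs/TTPs into the TI store and detection gaps into the backlog.

    findings: {"iocs": [(kind, value), ...], "detection_gaps": [str, ...]}
    ti_repo:  set of (kind, value) tuples already known
    backlog:  list of ticket dicts owned by detection engineering
    Returns the indicators that were actually new.
    """
    new_iocs = []
    for ioc in findings.get("iocs", []):
        if ioc not in ti_repo:          # skip duplicates already in the repository
            ti_repo.add(ioc)
            new_iocs.append(ioc)
    open_titles = {ticket["title"] for ticket in backlog}
    for gap in findings.get("detection_gaps", []):
        title = f"Detection gap: {gap}"
        if title not in open_titles:    # avoid filing the same gap twice
            backlog.append({"title": title, "team": "detection-engineering"})
            open_titles.add(title)
    return new_iocs
```

For instance, a cryptomining incident might contribute a flagged IP and the MITRE ATT&CK technique T1496 (Resource Hijacking) as new entries.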

Torq has released its security AI Workflow Builder and Torq Socrates. We’ll use a case study to see how Torq would handle suspicious IP behaviour.

Incident Case Study: How AI-Powered SOAR and LLMs Converge

A SOC receives an alert about suspicious outbound traffic from a corporate device to an unknown IP address. This IP has been flagged by a threat intelligence feed as associated with malware command-and-control servers.

How Torq Socrates Solved the Incident

Detection and Workflow Creation: A junior analyst notices the alert and uses the AI Workflow Builder to create an automation rule from a prompt: “Check IP address 203.0.113.100 with VirusTotal and other integrated threat intelligence feeds. If flagged as malicious, initiate containment actions and alert the SOC.” The workflow is automatically generated and deployed, enabling Torq Socrates to take over the investigation.
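The logic that prompt describes can be sketched in a few lines. This is not Torq's generated workflow format; it is a generic illustration in which `lookups`, `contain`, and `alert_soc` are hypothetical callables standing in for the VirusTotal, threat intelligence, EDR, and messaging integrations.

```python
def run_ip_workflow(ip, lookups, contain, alert_soc):
    """Check an IP against every configured feed; contain and alert on any hit.

    lookups:   feed name -> callable(ip) returning True if that feed flags the IP
    contain:   callable(ip) that starts containment (e.g. an EDR or firewall action)
    alert_soc: callable(message) that notifies the SOC
    """
    hits = sorted(name for name, is_flagged in lookups.items() if is_flagged(ip))
    if hits:
        contain(ip)
        alert_soc(f"{ip} flagged by {', '.join(hits)}; containment started")
    return hits
```

Injecting the integrations as callables keeps the decision logic testable without live feeds.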

Enrichment and Validation: Socrates pulls additional context from SIEM logs (recent activity from the device) and EDR tools (evidence of malware or suspicious processes), and checks threat intelligence feeds to confirm whether the IP is flagged as malicious across multiple sources.
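How "flagged across multiple sources" might turn into a confidence level is sketched below. The thresholds (at least two feeds agreeing, plus corroboration from SIEM or EDR, for "high") are an invented rule for illustration, not how Socrates actually scores evidence.

```python
def confidence(ti_verdicts, siem_connections, edr_findings, min_feeds=2):
    """Hypothetical scoring rule: count independent signals that the IP is malicious.

    ti_verdicts:      feed name -> True if that feed flags the IP
    siem_connections: number of connections from the device to the IP seen in SIEM logs
    edr_findings:     suspicious processes or malware detections reported by EDR
    """
    feeds_flagging = sum(1 for flagged in ti_verdicts.values() if flagged)
    corroborated = siem_connections > 0 or bool(edr_findings)
    if feeds_flagging >= min_feeds and corroborated:
        return "high"
    if feeds_flagging >= 1:
        return "medium"
    return "low"
```

Requiring both multi-feed agreement and local corroboration is one way to keep a single noisy feed from triggering autonomous containment.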

Autonomous Decision-Making: Socrates identifies that the IP is flagged as malicious with high confidence. It autonomously isolates the affected device from the corporate network using integration with the EDR platform. Furthermore, it alerts the security team through Slack with a summary of actions taken.
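The Slack notification step can be sketched with a Slack incoming webhook, which accepts a JSON body with a `text` field rendered as Slack mrkdwn. The message layout is an assumption, and the EDR isolation call itself is vendor-specific, so it is not shown.

```python
import json
import urllib.request


def slack_summary(device, ip, actions):
    """Build an incoming-webhook payload; Slack renders *text* as bold (mrkdwn)."""
    lines = [f"*Device isolated:* {device}", f"*Flagged IP:* {ip}", "*Actions taken:*"]
    lines += [f"• {action}" for action in actions]
    return {"text": "\n".join(lines)}


def post_to_slack(webhook_url, payload, timeout=10):
    """POST the JSON payload to a Slack incoming webhook URL; returns the HTTP status."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Keeping message construction separate from delivery makes the summary easy to test and to reuse for other channels.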

Human Oversight and Summary Report: Torq generates a detailed incident report describing what happened: a device attempted to communicate with a flagged IP. The report includes timestamped activity logs and confirms that the device was isolated and that no data exfiltration was detected. Analysts review the summary to confirm the actions taken and decide on further remediation.

Proactive Follow-Up: If required, automated mitigation is initiated. Password resets are triggered for the user associated with the device, and a forensic investigation begins with a deep scan of the isolated device to identify potential malware and its origin.

Communication and Documentation: Torq notifies stakeholders (e.g., IT and management) with a tailored report explaining the incident and resolutions in non-technical terms. Immutable documentation is auto-generated for compliance records, ensuring full traceability of the actions taken.
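One common technique for tamper-evident audit records is a hash chain, where each entry's SHA-256 hash covers its content plus the previous entry's hash. This sketch is an illustration of that technique, not Torq's implementation. A hash chain makes edits detectable rather than impossible; catching truncation of the newest entries also requires anchoring the latest hash elsewhere or storing the log in write-once storage (for example, S3 Object Lock).

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    # sort_keys makes the serialization, and therefore the hash, deterministic
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log, action, detail):
    """Append an entry whose hash covers its content and the previous entry's hash."""
    prev = log[-1]["hash"] if log else GENESIS
    body = {"action": action, "detail": detail, "prev": prev}
    log.append({**body, "hash": _digest(body)})


def verify(log):
    """Recompute the chain; an edited or reordered entry, or one removed mid-chain, breaks it."""
    prev = GENESIS
    for entry in log:
        body = {"action": entry["action"], "detail": entry["detail"], "prev": entry["prev"]}
        if entry["prev"] != prev or entry["hash"] != _digest(body):
            return False
        prev = entry["hash"]
    return True
```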

This hyperautomation process combines automation and AI to address the full lifecycle of an incident, from detection to resolution. To read more about the new solution, see the Guide to AI in the SOC manifesto.

The role of AI agents in SecOps

Most of this report has outlined the status quo of AI in the SOC, including the case study above of a unified, AI-powered SOC with a human in the loop. Looking ahead, however, we believe AI agents will play a major role in cybersecurity.

AI Agents - SecOps Implementation

So, what exactly is an AI agent?

While we’re accustomed to interacting with Large Language Models (LLMs) like ChatGPT through simple text prompts and responses, AI agents take this interaction to the next level. Imagine an LLM that doesn’t just provide answers but can plan, make decisions, and carry out tasks until they’re fully completed. In essence, an AI agent is like a chatbot with the added abilities to take actions, access tools, and iteratively work through tasks without needing constant human intervention.

You might wonder why we need agents when advanced LLMs like GPT-4 already exist. The key limitation of non-agentic LLMs is their inability to adapt beyond the initial prompt and response—they can’t perform actions or refine their output without additional input. AI agents shine by offering adaptability and flexibility, allowing them to think, iterate, and improve their responses autonomously.

One common way to build this capability is a pattern called ReAct, short for “Reasoning and Acting.” Think of it as giving the LLM the ability to loop through reasoning and actions until it completes its task satisfactorily. It’s similar to how you might go back and forth with ChatGPT multiple times to refine an answer, except the AI agent does this internally, streamlining the process.
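A minimal sketch of the ReAct loop: the model emits a thought and either an action (a tool name plus input) or a final answer; each tool result is appended to the transcript as an observation and fed back on the next step. The `scripted_model` below is a hypothetical stand-in for an LLM (real ReAct agents parse this structure out of model text or use function calling), and `lookup_ip` is a fake tool.

```python
def react_loop(question, model, tools, max_steps=5):
    """Alternate Thought -> Action -> Observation until the model decides to finish.

    model: callable(transcript) -> {"thought": str, "action": str, "input": str};
           action "finish" means "input" holds the final answer.
    tools: tool name -> callable(input) -> observation string
    """
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        step = model(transcript)
        transcript.append(f"Thought: {step['thought']}")
        if step["action"] == "finish":
            return step["input"], transcript
        observation = tools[step["action"]](step["input"])
        transcript.append(f"Action: {step['action']}[{step['input']}]")
        transcript.append(f"Observation: {observation}")
    return None, transcript  # step budget exhausted without an answer


def scripted_model(transcript):
    # Stand-in for an LLM: look something up once, then answer from the observation.
    prefix = "Observation: "
    observations = [line for line in transcript if line.startswith(prefix)]
    if not observations:
        return {"thought": "I should check the IP's reputation.",
                "action": "lookup_ip", "input": "203.0.113.100"}
    verdict = observations[-1][len(prefix):]
    return {"thought": "The feed returned a verdict.", "action": "finish", "input": verdict}


answer, transcript = react_loop(
    "Is 203.0.113.100 malicious?", scripted_model, {"lookup_ip": lambda ip: "malicious"})
```

The `max_steps` budget matters in practice: without it, an agent that never reaches a conclusion would loop indefinitely.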

Imagine a scenario where you no longer need to manually write automation scripts. Instead, an AI agent or a browser-based plugin monitors your daily tasks, suggests processes to automate, and even builds the automation for you. Need to integrate a new tool or service? The AI agent retrieves the API documentation and constructs the integration with all available actions. This isn’t a distant future—it’s the transformative potential of AI agents in cybersecurity today.
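The "retrieve the API documentation and construct the integration" idea can be illustrated with an OpenAPI 3 document: every operation under `paths` becomes a candidate action. This sketch assumes the spec is already parsed into a dict; the fallback naming scheme for operations without an `operationId` is an invented convention.

```python
# Operation keys allowed in an OpenAPI 3 Path Item Object.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def actions_from_openapi(spec):
    """Turn a parsed OpenAPI 3 document into a flat list of integration actions."""
    actions = []
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters" or "summary"
            fallback = f"{method}_{path.strip('/').replace('/', '_')}"
            actions.append({
                "name": operation.get("operationId") or fallback,
                "method": method.upper(),
                "path": path,
                "summary": operation.get("summary", ""),
            })
    return actions
```

An agent would still need to handle authentication, request bodies, and pagination, which is where most real integration effort goes.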

AI agents hold the promise of revolutionizing cybersecurity by automating repetitive tasks, enhancing threat detection, and enabling more proactive defense strategies. However, trust, explainability, and integration challenges must be addressed to fully realize their potential. By carefully evaluating the benefits and considering the criteria outlined above, organizations can make informed decisions about adopting AI agents to strengthen their cybersecurity posture.

Broader Challenges / Barriers To AI-SOC Assistant Adoption

Trust Issues: The biggest challenge is that security operators don’t trust these solutions enough to adopt them. There is also concern about putting these technologies on the front line for fear of missing something crucial.

Limited Customization and Black Box AI: A significant challenge in the industry is the prevalence of black box solutions, where AI model training occurs behind the scenes. Enterprises only receive final outputs like enriched alerts and remediation recommendations, without visibility into or control over the underlying processes. Many vendors restrict the ability to customize or fine-tune their models, creating a dependency on vendor updates and fixes. This limitation becomes particularly problematic for large enterprises with unique security requirements. While smaller organizations might benefit from quick deployment and standardized solutions, enterprises often need more flexibility to address their specific security contexts and detection scenarios. A lack of transparency in decision-making (common in black box solutions) can conflict with compliance requirements in regulated industries.

Analyst Involvement: AI tools do not fully replace human analysts; they complement them. Analysts are still required to validate findings, handle escalations, and adapt workflows to evolving threats. Teams must also be trained to work effectively with AI-powered systems, and that training can meet resistance or involve a steep learning curve.

Accuracy & Efficacy of Models: These models must prove highly accurate when benchmarked against a human analyst over 30, 60, and 90 days. The key test is whether a model produces results similar to those of a top-tier analyst.
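A simple way to run that 30/60/90-day comparison is to log each alert's model verdict next to the analyst's final verdict and compute the agreement rate over trailing windows. Plain agreement is an assumption chosen for clarity; a stricter evaluation might weight misses on true positives more heavily than extra false positives.

```python
from datetime import date, timedelta


def agreement_by_window(records, as_of, windows=(30, 60, 90)):
    """Share of alerts where the model's verdict matched the analyst's.

    records: iterable of (day, model_verdict, analyst_verdict)
    Returns {window_days: agreement rate, or None if no alerts fell in the window}.
    """
    records = list(records)
    rates = {}
    for days in windows:
        start = as_of - timedelta(days=days)
        in_window = [(m, a) for day, m, a in records if start < day <= as_of]
        matches = sum(1 for m, a in in_window if m == a)
        rates[days] = matches / len(in_window) if in_window else None
    return rates
```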

Conclusion: The Path to Fully AI-Driven SOCs

If we want to take SOC automation to the next level, we need platforms that handle the entire cycle—from incident response to detection engineering, automation, and finally, testing the whole process. Right now, most AI SOC tools only offer pieces of that puzzle, giving you a false sense of security that you’ve automated more than you actually have.

And don’t forget, while these platforms are decent at automating incident response, they tend to struggle with compliance alerts and governance issues. The truth is, a large chunk of the alerts in any given environment are related to vulnerability management or cloud security, and these aren’t handled as effectively by current AI solutions.