Published on September 11, 2025 1:23 PM GMT

Introduction

Vastly transformative AI (TAI) will plausibly be created this decade. Governments need a clear, coherent overview of urgent actions to prioritise and how they relate to each other, with contingencies given success or failure of important projects with uncertain outcomes. For example, how should success or failure in creating robust frontier model evaluations determine subsequent governance actions?

We propose that an AI Governance Standard, analogous to the UN Sustainable Development Goals, can provide this strategic clarity. Drawing from a broad literature review of expert recommendations, we present an early iteration of such a standard: three high-level goals and nine interrelated subgoals that together can constitute a theory of victory for TAI readiness.

National and international approaches to AI governance lack a shared understanding of how different governance objectives may depend on, conflict with or support one another. Regulatory bodies face the huge burden of reconciling a vast body of interdisciplinary AI safety recommendations into a coherent high-level action plan, which increases the risk of leaving out or compromising on high-impact expert research. It is also inefficient: each jurisdiction must develop its plans from scratch and grapple with the same problems independently. This makes national AI regulation more likely to diverge, makes international collaboration more difficult, and creates international compliance challenges for AI developers, thereby hindering innovation.

The AI Governance Standard aims to develop a shared understanding of how to approach the diverse challenges involved, based on the substantial expert consensus that already exists around minimum governance standards. The Standard can provide a structured approach for prioritizing interventions, and offer governments a flexible yet actionable roadmap toward TAI readiness.

This work is especially crucial if we assume that short timelines to transformative AI are plausible, and that current AI governance is not TAI-ready. An AI governance standard gives us a benchmark by which we can assess current progress towards TAI readiness in relevant jurisdictions, and internationally. Poor assessments on this benchmark could motivate the targeted, urgent action required to prevent catastrophe.

I detail the challenges a TAI Governance standard addresses, illustrate what this standard could look like with an early example, discuss some challenges and limitations, and propose next steps.

The Unaddressed Challenges

AI governance entails a vast body of recommendations spanning a variety of often distinct domains, from technical alignment research, to incentivising lab reporting requirements, to creating international treaties on AI safety. There is very little work engaging directly with the problem of how to reconcile these recommendations into a single strategy. As a result, regulatory bodies and AI safety researchers are forced to grapple with this challenge directly on a case-by-case basis. There are at least four major challenges to achieving existential security that an AI Governance Standard would address:

- There is no common understanding of what success looks like for TAI governance. Current approaches to AI governance amount to muddling through rather than following an identified, robust theory of victory. The result is a lack of clarity on whether AI safety work is targeting the right things within the right time frame. A clear standard provides a basis for selecting, planning and prioritising from the variety of expert AI safety recommendations. Currently no such basis exists.

- It is likely that most or all governments are not on track to achieve success in short timelines. As of September 2025, efforts at TAI governance are largely nascent. Steps towards concrete governance in the US, arguably the most important jurisdiction for governing frontier AI, have been blocked (the repeal of the AI executive order, the veto of SB-1047), and the current US AI Action Plan emphasises accelerated AI development. The most robust AI governance legislation to date, the EU AI Act, is still facing delays and challenges. Meanwhile, the UN is establishing platforms for multilateral discussion, and a UK AI Bill is in development. An AI Governance Standard can provide strong motivation for accelerated action around TAI readiness. Without a detailed idea of what TAI readiness entails, it is difficult to say whether, for example, the EU AI Act is sufficient for it. An AI Governance Standard would help identify when a regulatory framework is lagging behind, and detail exactly how it needs to be improved, by what year, and which viable expert recommendations may meet the need.

- The US, EU and China are taking diverging approaches to AI governance. This forces each government to grapple with the same problems individually, increasing the risk of strategic incoherence and of re-covering ground already covered by experts or other nations. It also makes international collaboration more difficult, as regulations are less likely to be interoperable or otherwise compatible. Lastly, it creates international compliance challenges for AI developers, hindering innovation. Building shared understanding between regulatory bodies with an AI Governance Standard increases the likelihood of collaboration and of compatible or complementary approaches to AI safety.

- AI safety experts frequently spend resources to identify and prioritize what to research and advocate for. A successful standard for AI governance will help researchers target areas that are underdeveloped but crucial for successful TAI governance, and help lobbyists identify areas that are well understood but not being acted on by the relevant actors.

Creating a TAI Governance Standard: a goals-based Taxonomy of Risk Mitigations

A standard should ultimately show how a given action relates to achieving the goal of existential security. It should also support analysis of how a given action relates to other prospective or ongoing actions, so that certain actions can be prioritized over others, or combined with others synergistically. One possible approach is to identify the set of broad, interrelated goals for TAI safety, such that achieving these goals would amount to TAI readiness, analogous to the Sustainable Development Goals for achieving peace and prosperity for people and the planet. All mitigations can then be thought of as in service to one or more of the TAI readiness goals and/or subgoals.

These assumptions were the starting point for developing a goals-based taxonomy of AI risk mitigations. The taxonomy aims to identify the broad goals of many different TAI Governance recommendations, to draw out how they relate to and overlap with each other.

Methodology for Developing a goals-based taxonomy of TAI risk mitigations

In order to produce an illustrative first attempt:

- We conducted a literature review of ~50 sources that present overviews of critical AI safety interventions, including sources from labs, governments, academics and civil society.
- We listed every intervention mentioned by these sources.
- We described each intervention on a similar level of abstraction to enable meaningful comparisons. This entailed combining some interventions, and splitting others into two or more.
- Through three iterations, we organized these interventions according to their underlying objectives. This revealed natural groupings around what each intervention was designed to achieve. We also explored how certain groupings may relate to others. For example, developing the means to create Safe AI (1) is prior to establishing safety practices (2). This is reflected by directional arrows in the taxonomy below.
- The taxonomy underwent review with 6 AI governance experts, whose feedback led to ongoing refinement of category definitions and intervention groupings.

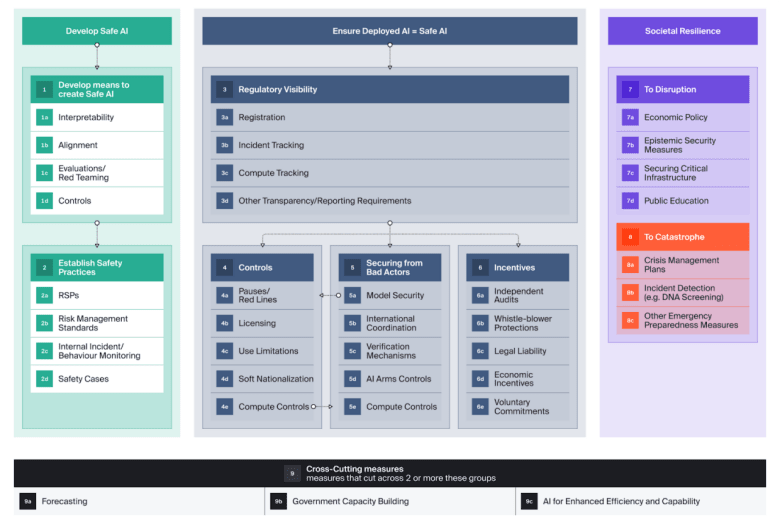

The resulting groupings suggest three high-level goals of TAI risk mitigations:

- Creating safe TAI
- Ensuring that deployed TAIs are safe
- Societal resilience to disruptions from TAI

Within these goals, the taxonomy suggests 9 interrelated subgoals for AI safety.

- Develop the technical means to create AI.
- Establish best practices for creating safe AI.
- Create regulatory visibility.
- Increase Government Control of AI
- Secure AI from Bad Actors
- Incentivise frontier developers to create safe AI
- Build societal resilience to disruption
- Build societal resilience to catastrophe
- Cross-cutting measures, that support a range of goals and subgoals more than any single goal or subgoal.

These goals and the taxonomy could constitute the beginnings of an AI Governance Standard and help address the challenges discussed above. A likely third element would be a more detailed mapping of mitigation interdependencies, which we propose in the next steps.

A Goals-Based taxonomy of TAI Risk Mitigations

Using the Taxonomy:

View the full-sized image and associated sources here.

Arrows represent dependencies, where one element generally has significant implications for the success of the other. Boxes represent sets and subsets of goals.

To find detailed discussions of an intervention, consult the corresponding number in the Sources Table.

This framework allows a user to view most well-discussed interventions on an even plane, and to decide which of the relevant expert-recommended interventions to select in order to meet their goals (e.g. how to create regulatory visibility) in a way that fits their specific needs or context.

Limitations of the Taxonomy, and some replies:

- There are some mitigations that are not covered by this version of the taxonomy; note gaps like (3d) and (8c).
  - Reply: The Standard may be complete enough to accommodate most new recommendations with minor updates.
- There will likely be diverging expert perspectives on the details of implementing any of the mitigations covered by the taxonomy, especially at the frontier of research.
  - Reply: The standard provides a framework for structured disagreements. Groupings help users understand disagreements more clearly and choose between alternative approaches. For instance, if experts propose different methods for regulatory visibility, users can evaluate which works best for their situation.
  - Reply: There likely is already enough expert consensus around some governance standards to motivate further government action. While researchers continue developing and debating what would constitute excellent TAI readiness, governments can already begin implementing basic measures that most experts agree on.

- Reply: Governments and investors currently acknowledge that major disruptions from AI are plausible in at least military and economic domains.
- Reply: The taxonomy describes existing expert positions rather than advocating novel ones. It does prescribe some high-level actions (develop safe AI, prevent misuse), but these may be broadly acceptable regardless of specific threat models.

Proposed Next steps:

We aim to take these steps concurrently.

Confirm the Completeness of the Goals-Based Taxonomy

- MIT AI Risk Repository has released a resource exhaustively Mapping AI Risk Mitigations; this resource could be the basis of testing the goals-based taxonomy for completeness.
- The taxonomy could concurrently be shared with a wider range of experts, through a combination of broad consultations, expert surveys or working groups to further test and confirm its validity, as well as increasing the visibility and expert support for the standard.

Map interdependence of different TAI safety mitigations.

- Continue research on dependencies, redundancies and synergies between specific AI safety mitigations covered by the taxonomy. This would provide important context for selecting different combinations of mitigations.
- Case studies, for example of the EU AI Act, could give practical insights on how different mitigations synergise or depend on each other.
- Complement the goals-based taxonomy with a tool that illustrates these relationships accessibly. (See this mock-up by Claude for a simplified illustrative example of one possible approach.)
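As a rough illustration of what such a mapping tool might hold internally, the sketch below stores the nine subgoals and their dependencies as a small directed graph and derives an order in which prerequisites come before the subgoals that rely on them. This is a minimal sketch under stated assumptions, not the authors' tool: the names `SUBGOALS`, `DEPENDS_ON`, `planning_order` and `prerequisites` are hypothetical, and the only dependency encoded is the one the text states explicitly (subgoal 1 is prior to subgoal 2). Further arrows would need to be read off the taxonomy itself.

```python
from graphlib import TopologicalSorter

# Subgoal labels copied from the list of nine subgoals in this post;
# the integer keys simply follow the order in which they are listed.
SUBGOALS = {
    1: "Develop the technical means to create AI",
    2: "Establish best practices for creating safe AI",
    3: "Create regulatory visibility",
    4: "Increase government control of AI",
    5: "Secure AI from bad actors",
    6: "Incentivise frontier developers to create safe AI",
    7: "Build societal resilience to disruption",
    8: "Build societal resilience to catastrophe",
    9: "Cross-cutting measures",
}

# Maps each subgoal to the subgoals it depends on.
# ASSUMPTION: only "2 depends on 1" is stated in the text; all other
# arrows in the taxonomy are deliberately left out of this sketch.
DEPENDS_ON = {2: {1}}


def planning_order(subgoals, depends_on):
    """Return subgoal ids ordered so prerequisites precede dependents."""
    graph = {sid: depends_on.get(sid, set()) for sid in subgoals}
    return list(TopologicalSorter(graph).static_order())


def prerequisites(sid, depends_on):
    """Return every direct or indirect prerequisite of one subgoal."""
    seen = set()
    stack = list(depends_on.get(sid, ()))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(depends_on.get(current, ()))
    return seen


if __name__ == "__main__":
    for sid in planning_order(SUBGOALS, DEPENDS_ON):
        print(sid, SUBGOALS[sid])
```

A topological order is only one possible reading of the arrows; redundancies and synergies between mitigations would likely need a richer representation, such as typed or weighted edges.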

Applying the standard in the real world.

- Existing legislation can be assessed against an AI Governance Standard in case studies to produce targeted recommendations for TAI readiness. This could easily build on the case studies conducted as research to improve the AI Governance Standard (mentioned above under mapping interdependence).
- As the UK, US and other nations continue to develop their AI legislation, the standard could be the basis of pilot projects to support strategic planning.

Call for feedback!

- Is this useful for you? How so?
- How well can this framework address the challenges described?
- Do you know any well-discussed proposals that aren’t covered by the taxonomy?
  - For example, I think the Societal Resilience block is at a higher level of abstraction than the others and could be expanded on.

Thanks to David Kristoffersson, Elliot Mckernon, Justin Bullock, Deric Cheng, Adam Jones, Iskandar Haykel and others for early discussions and input on this topic.
