Published on September 11, 2025 10:09 AM GMT

Various people are worried about AI causing extreme power concentration of some form, for example via:

I have been talking to some of these people and trying to sense-make about ‘power concentration’.

These are some notes on that, mostly prompted by some comment exchanges with Nora Ammann (in the below I’m riffing on her ideas but not representing her views). Sharing because I found some of the below helpful for thinking with, and maybe others will too.

(I haven’t tried to give lots of context, so it probably makes most sense to people who’ve already thought about this. More the flavour of in progress research notes than ‘here’s a crisp insight everyone should have’.)

AI risk as power concentration

Sometimes when people talk about power concentration it sounds to me like they are talking about most of AI risk, including AI takeover and human powergrabs.

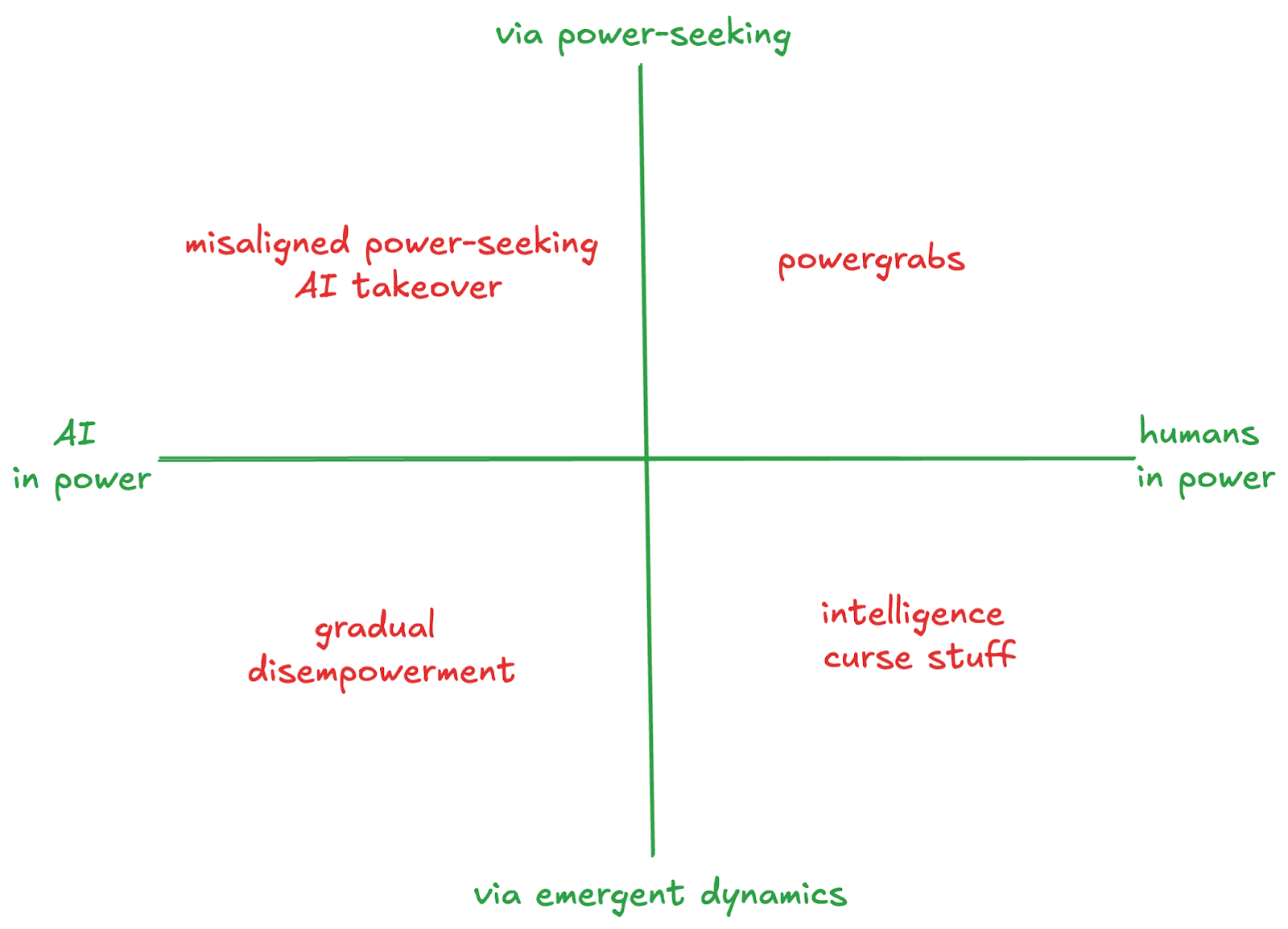

To try to illustrate this, here’s a 2x2, where the x axis is whether AIs or humans end up with concentrated power, and the y axis is whether power gets concentrated emergently or through power-seeking:

Some things I like about this 2x2:

- It suggests a spectrum between AI and human power. You could try to police the line the y axis draws between human and AI, and say ‘this counts as human takeover, this counts as AI takeover’. But I expect it’s usually more useful to hold it as a messy spectrum where things just shade into one another.
- It also suggests a spectrum between power-seeking and emergent. I expect that in most scenarios there will be some of both, and again I expect policing the line won’t be that helpful.
- I don’t think it’s obvious that we should have strong preferences about where we fall on either spectrum. Human power concentration doesn’t seem obviously better than AI power concentration, emergent doesn’t seem obviously less bad than power-seeking. I’d like people with strong opinions that we should prefer places on these spectrums to make that case.
- For me, when I look at the 2x2 I think that power-seeking vs emergent is more where it’s at than sudden vs gradual. You could imagine a powergrab which unfolds gradually over a number of years through a process of backsliding, and it’s very deliberate. You could also imagine most humans being disempowered quite quickly by emergent dynamics like races to the bottom in epistemics - e.g. crazy superpersuasion capabilities get deployed and quickly make everyone kind of insane.

Overall, this isn’t my preferred way of thinking about AI risk or power concentration, for two reasons:

- I think it’s useful to have more granular categories, and don’t love collapsing everything into one container.
- Misaligned AI takeover and gradual disempowerment could result in power concentration (where most power is in the hands of a small number of actors), but could also result in a power shift (where power is still in the hands of lots of actors, just none of them are human). I don’t have much inside view on how much probability mass should go on power shift vs power concentration here, and would be interested in people’s takes.

But since drawing this 2x2 I more get why for some people this makes sense as a frame.

Power concentration as the undermining of checks and balances

Nora’s take is that the most important general factor driving the risk of power concentration is the undermining of legacy checks and balances.

Here’s my attempt to flesh out what this means (Nora would probably say something different):

- The world is unequal today and power is often abused, but there are limits to this.
- Specifically, there are a bunch of checks and balances on power, including:
  - Formal/bureaucratic checks: the law, elections
  - Checks on hard power: capital, military force and the ability to earn money are all somewhat distributed, so there are other powerful actors who can keep you in check
  - Soft checks: access to information, truth often being more convincing than lies

Some things I like about this frame:

- One thing is that it feels more mechanistic to me than abstractions like ‘the economy, culture, states’. When I read the gradual disempowerment paper I felt a bit disorientated, and now I more get why they chose these things to focus on.
- Somehow it stands out as very obvious to me in the above that eroding one check makes it much easier to erode the other ones. You can still think this in other frames, but for me it’s especially salient in this one. I’m a lot more worried about formal checks being undermined if hard power and ability to sense-make is already very concentrated, and similarly for other combinations.
- It feels juicy for thinking about more specific scenarios. E.g.
  - Formal checks could get eroded suddenly through powergrabs, or gradually through backsliding. Possible they could also get eroded emergently rather than deliberately, if the pace of change gets way faster than election cycles and/or companies get way more capable than governments.
  - Concentrating hard power feels very closely tied to automating human labour. I think the more labour automation there is, the more capital and the ability to earn money and wage war will get concentrated. I do think you could get military concentration prior to rolling out the automation of human labour though, if one actor develops much more powerful military tech than everyone else very rapidly.
  - The soft checks could get eroded either by deliberate adversarial interference on the part of powerful actors, or emergently through races to the bottom if the incentives are bad or we’re unlucky about what technologies get developed when (e.g. crazy superpersuasion before epistemic defenses to that). Eroding the soft checks would in itself disempower a lot of people (who’d no longer be able to effectively act in their own interests), and could also make it much easier for people to concentrate power even further.
