Ensuring the reliability, safety, and performance of AI agents is critical. That’s where agent observability comes in.

This blog post is the third out of a six-part blog series called Agent Factory which will share best practices, design patterns, and tools to help guide you through adopting and building agentic AI.

Seeing is knowing—the power of agent observability

As agentic AI becomes more central to enterprise workflows, ensuring reliability, safety, and performance is critical. That’s where agent observability comes in. Agent observability empowers teams to:

- Detect and resolve issues early in development.

- Verify that agents uphold standards of quality, safety, and compliance.

- Optimize performance and user experience in production.

- Maintain trust and accountability in AI systems.

With the rise of complex, multi-agent and multi-modal systems, observability is essential for delivering AI that is not only effective, but also transparent, safe, and aligned with organizational values. Observability empowers teams to build with confidence and scale responsibly by providing visibility into how agents behave, make decisions, and respond to real-world scenarios across their lifecycle.

What is agent observability?

Agent observability is the practice of achieving deep, actionable visibility into the internal workings, decisions, and outcomes of AI agents throughout their lifecycle—from development and testing to deployment and ongoing operation. Key aspects of agent observability include:

- Continuous monitoring: Tracking agent actions, decisions, and interactions in real time to surface anomalies, unexpected behaviors, or performance drift.

- Tracing: Capturing detailed execution flows, including how agents reason through tasks, select tools, and collaborate with other agents or services. This helps answer not just “what happened,” but “why and how did it happen?”

- Logging: Records agent decisions, tool calls, and internal state changes to support debugging and behavior analysis in agentic AI workflows.

- Evaluation: Systematically assessing agent outputs for quality, safety, compliance, and alignment with user intent—using both automated and human-in-the-loop methods.

- Governance: Enforcing policies and standards to ensure agents operate ethically, safely, and in accordance with organizational and regulatory requirements.

Traditional observability vs agent observability

Traditional observability relies on three foundational pillars: metrics, logs, and traces. These provide visibility into system performance, help diagnose failures, and support root-cause analysis. They are well-suited for conventional software systems where the focus is on infrastructure health, latency, and throughput.

However, AI agents are non-deterministic and introduce new dimensions—autonomy, reasoning, and dynamic decision making—that require a more advanced observability framework. Agent observability builds on traditional methods and adds two critical components: evaluations and governance. Evaluations help teams assess how well agents resolve user intent, adhere to tasks, and use tools effectively. Agent governance can ensure agents operate safely, ethically, and in compliance with organizational standards.

This expanded approach enables deeper visibility into agent behavior—not just what agents do, but why and how they do it. It supports continuous monitoring across the agent lifecycle, from development to production, and is essential for building trustworthy, high-performing AI systems at scale.

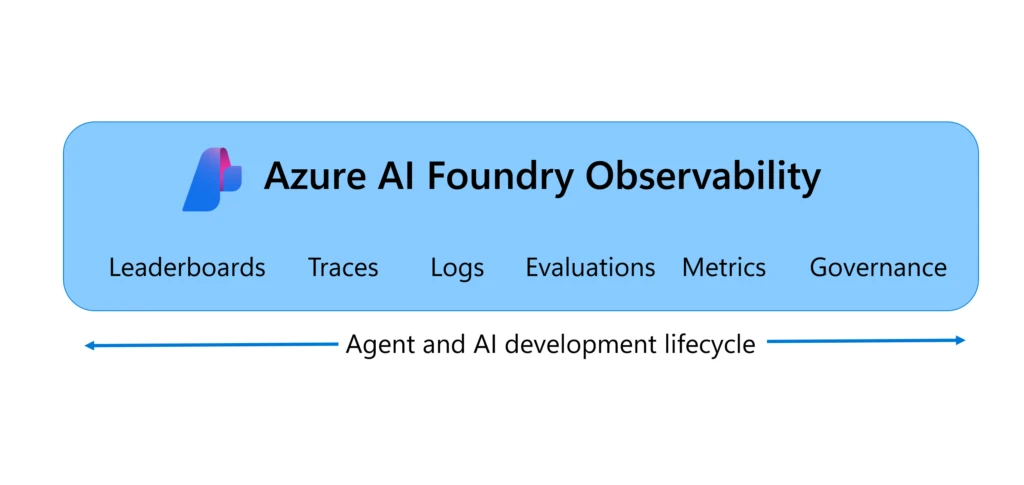

Azure AI Foundry Observability provides end-to-end agent observability

Azure AI Foundry Observability is a unified solution for evaluating, monitoring, tracing, and governing the quality, performance, and safety of your AI systems end to end in Azure AI Foundry—all built into your AI development loop. From model selection to real-time debugging, Foundry Observability capabilities empower teams to ship production-grade AI with confidence and speed. It’s observability, reimagined for the enterprise AI era.

With built-in capabilities like the Agents Playground evaluations, Azure AI Red Teaming Agent, and Azure Monitor integration, Foundry Observability brings evaluation and safety into every step of the agent lifecycle. Teams can trace each agent flow with full execution context, simulate adversarial scenarios, and monitor live traffic with customizable dashboards. Seamless CI/CD integration enables continuous evaluation on every commit and governance support with Microsoft Purview, Credo AI, and Saidot integration helps enable alignment with regulatory frameworks like the EU AI Act—making it easier to build responsible, production-grade AI at scale.

Five best practices for agent observability

1. Pick the right model using benchmark driven leaderboards

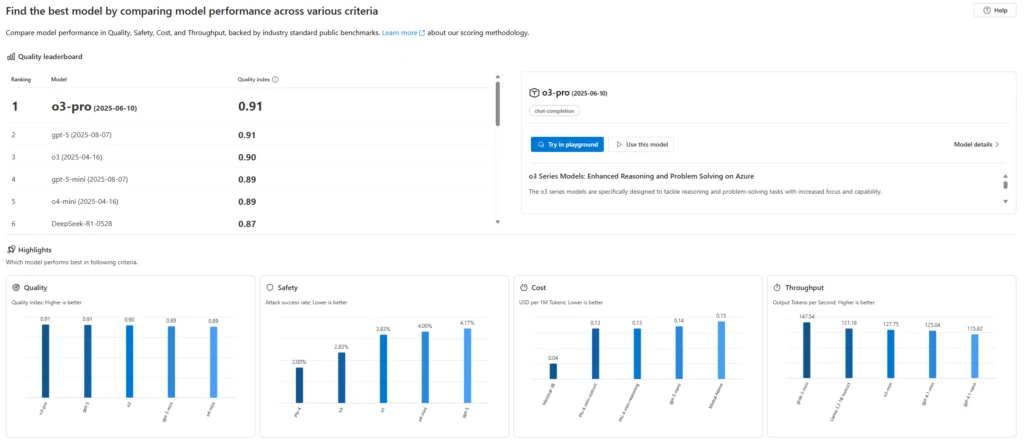

Every agent needs a model and choosing the right model is foundational for agent success. While planning your AI agent, you need to decide which model would be the best for your use case in terms of safety, quality, and cost.

You can pick the best model by either evaluating the model on your own data or use Azure AI Foundry’s model leaderboards to compare foundation models out-of-the-box by quality, cost, and performance—backed by industry benchmarks. With Foundry model leaderboards, you can find model leaders in various selection criteria and scenarios, visualize trade-offs among the criteria (e.g., quality vs cost or safety), and dive into detailed metrics to make confident, data-driven decisions.

Azure AI Foundry’s model leaderboards gave us the confidence to scale client solutions from experimentation to deployment. Comparing models side by side helped customers select the best fit—balancing performance, safety, and cost with confidence.

—Mark Luquire, EY Global Microsoft Alliance Co-Innovation Leader, Managing Director, Ernst & Young, LLP*

2. Evaluate agents continuously in development and production

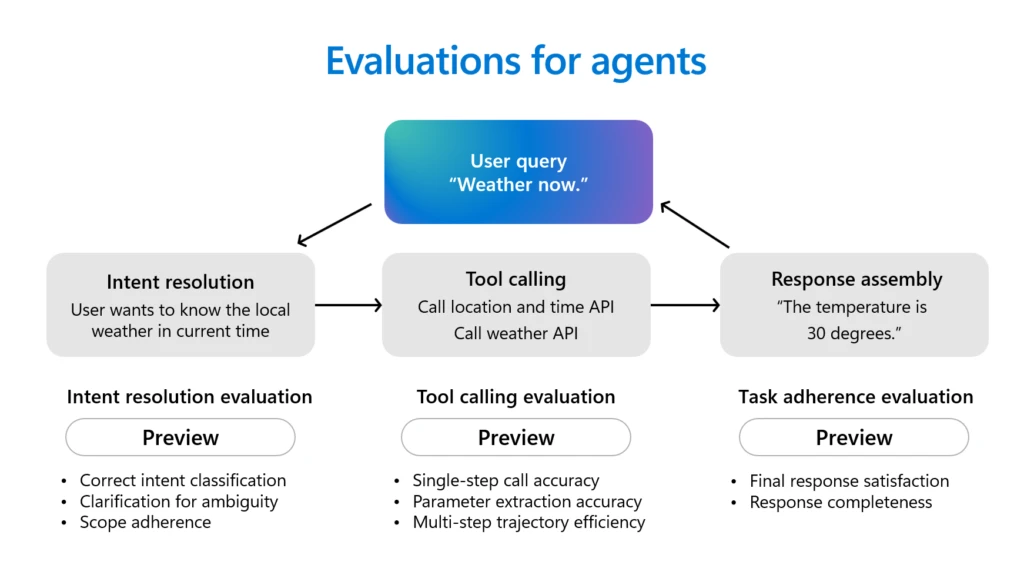

Agents are powerful productivity assistants. They can plan, make decisions, and execute actions. Agents typically first reason through user intents in conversations, select the correct tools to call and satisfy the user requests, and complete various tasks according to their instructions. Before deploying agents, it’s critical to evaluate their behavior and performance.

Azure AI Foundry makes agent evaluation easier with several agent evaluators supported out-of-the-box, including Intent Resolution (how accurately the agent identifies and addresses user intentions), Task Adherence (how well the agent follows through on identified tasks), Tool Call Accuracy (how effectively the agent selects and uses tools), and Response Completeness (whether the agent’s response includes all necessary information). Beyond agent evaluators, Azure AI Foundry also provides a comprehensive suite of evaluators for broader assessments of AI quality, risk, and safety. These include quality dimensions such as relevance, coherence, and fluency, along with comprehensive risk and safety checks that assess for code vulnerabilities, violence, self-harm, sexual content, hate, unfairness, indirect attacks, and the use of protected materials. The Azure AI Foundry Agents Playground brings these evaluation and tracing tools together in one place, letting you test, debug, and improve agentic AI efficiently.

The robust evaluation tools in Azure AI Foundry help our developers continuously assess the performance and accuracy of our AI models, including meeting standards for coherence, fluency, and groundedness.

—Amarender Singh, Director, AI, Hughes Network Systems

3. Integrate evaluations into your CI/CD pipelines

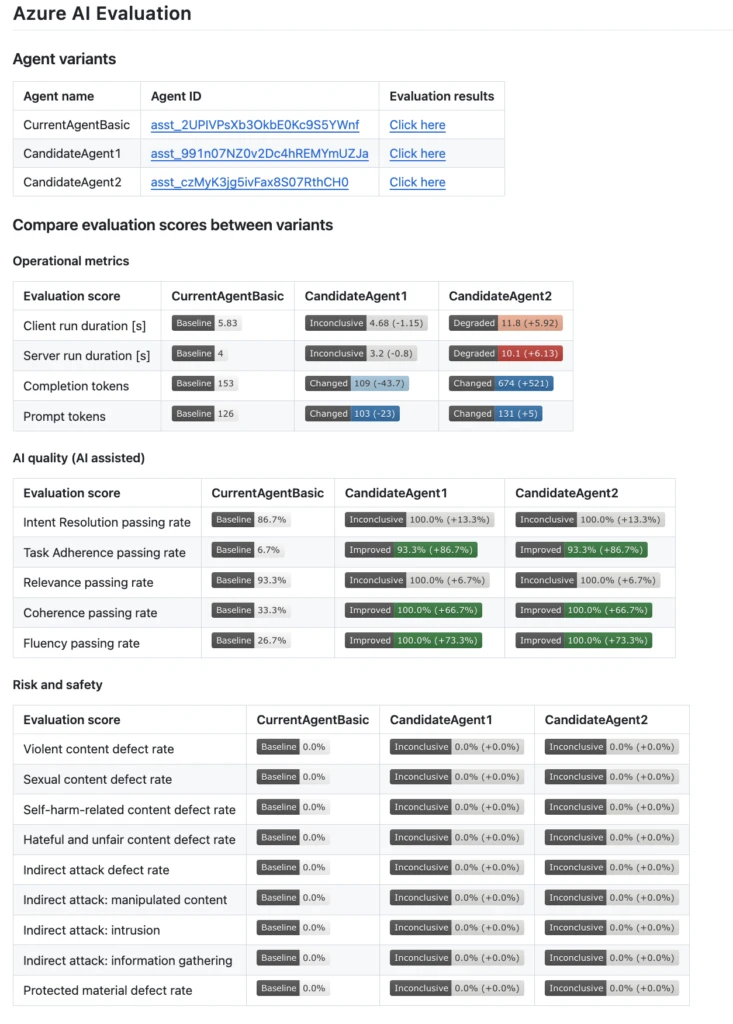

Automated evaluations should be part of your CI/CD pipeline so every code change is tested for quality and safety before release. This approach helps teams catch regressions early and can help ensure agents remain reliable as they evolve.

Azure AI Foundry integrates with your CI/CD workflows using GitHub Actions and Azure DevOps extensions, enabling you to auto-evaluate agents on every commit, compare versions using built-in quality, performance, and safety metrics, and leverage confidence intervals and significance tests to support decisions—helping to ensure that each iteration of your agent is production ready.

We’ve integrated Azure AI Foundry evaluations directly into our GitHub Actions workflow, so every code change to our AI agents is automatically tested before deployment. This setup helps us quickly catch regressions and maintain high quality as we iterate on our models and features.

—Justin Layne Hofer, Senior Software Engineer, Veeam

4. Scan for vulnerabilities with AI red teaming before production

Security and safety are non-negotiable. Before deployment, proactively test agents for security and safety risks by simulating adversarial attacks. Red teaming helps uncover vulnerabilities that could be exploited in real-world scenarios, strengthening agent robustness.

Azure AI Foundry’s AI Red Teaming Agent automates adversarial testing, measuring risk and generating readiness reports. It enables teams to simulate attacks and validate both individual agent responses and complex workflows for production readiness.

Accenture is already testing the Microsoft AI Red Teaming Agent, which simulates adversarial prompts and detects model and application risk posture proactively. This tool will help validate not only individual agent responses, but also full multi-agent workflows in which cascading logic might produce unintended behavior from a single adversarial user. Red teaming lets us simulate worst-case scenarios before they ever hit production. That changes the game.

—Nayanjyoti Paul, Associate Director and Chief Azure Architect for Gen AI, Accenture

5. Monitor agents in production with tracing, evaluations, and alerts

Continuous monitoring after deployment is essential to catch issues, performance drift, or regressions in real time. Using evaluations, tracing, and alerts helps maintain agent reliability and compliance throughout its lifecycle.

Azure AI Foundry observability enables continuous agentic AI monitoring through a unified dashboard powered by Azure Monitor Application Insights and Azure Workbooks. This dashboard provides real-time visibility into performance, quality, safety, and resource usage, allowing you to run continuous evaluations on live traffic, set alerts to detect drift or regressions, and trace every evaluation result for full-stack observability. With seamless navigation to Azure Monitor, you can customize dashboards, set up advanced diagnostics, and respond swiftly to incidents—helping to ensure you stay ahead of issues with precision and speed.

Security is paramount for our large enterprise customers, and our collaboration with Microsoft allays any concerns. With Azure AI Foundry, we have the desired observability and control over our infrastructure and can deliver a highly secure environment to our customers.

—Ahmad Fattahi, Sr. Director, Data Science, Spotfire

Get started with Azure AI Foundry for end-to-end agent observability

To summarize, traditional observability includes metrics, logs, and traces. Agent Observability needs metrics, traces, logs, evaluations, and governance for full visibility. Azure AI Foundry Observability is a unified solution for agent governance, evaluation, tracing, and monitoring—all built into your AI development lifecycle. With tools like the Agents Playground, smooth CI/CD, and governance integrations, Azure AI Foundry Observability empowers teams to ensure their AI agents are reliable, safe, and production ready. Learn more about Azure AI Foundry Observability and get full visibility into your agents today!

What’s next

In part four of the Agent Factory series, we’ll focus on how you can go from prototype to production faster with developer tools and rapid agent development.

Did you miss these posts in the series?

*The views reflected in this publication are the views of the speaker and do not necessarily reflect the views of the global EY organization or its member firms.