It's no longer whether you can build an agent—it’s how fast and seamlessly you can go from idea to enterprise-ready deployment.

This blog post is the fourth out of a six-part blog series called Agent Factory which will share best practices, design patterns, and tools to help guide you through adopting and building agentic AI.

Developer experiences as the key to scale

AI agents are moving quickly from experimentation to real production systems. Across industries, we see developers testing prototypes in their Integrated Development Environment (IDE) one week and deploying production agents to serve thousands of users the next. The key differentiator is no longer whether you can build an agent—it’s how fast and seamlessly you can go from idea to enterprise-ready deployment.

Industry trends reinforce this shift:

- In-repo AI development: Models, prompts, and evaluations are now first-class citizens in GitHub repos—giving developers a unified space to build, test, and iterate on AI features.

- More capable coding agents: GitHub Copilot’s new coding agent can open pull requests after completing tasks like writing tests or fixing bugs, acting as an asynchronous teammate.

- Open frameworks maturing: Communities around LangGraph, LlamaIndex, CrewAI, AutoGen, and Semantic Kernel are rapidly expanding, with “agent templates” on GitHub repos becoming common.

- Open protocols emerging: Standards like the Model Context Protocol (MCP) and Agent-to-Agent (A2A) are creating interoperability across platforms.

Developers increasingly expect to stay in their existing workflow—GitHub, VS Code, and familiar frameworks—while tapping into enterprise-grade runtimes and integrations. The platforms that win will be those that meet developers where they are—with openness, speed, and trust.

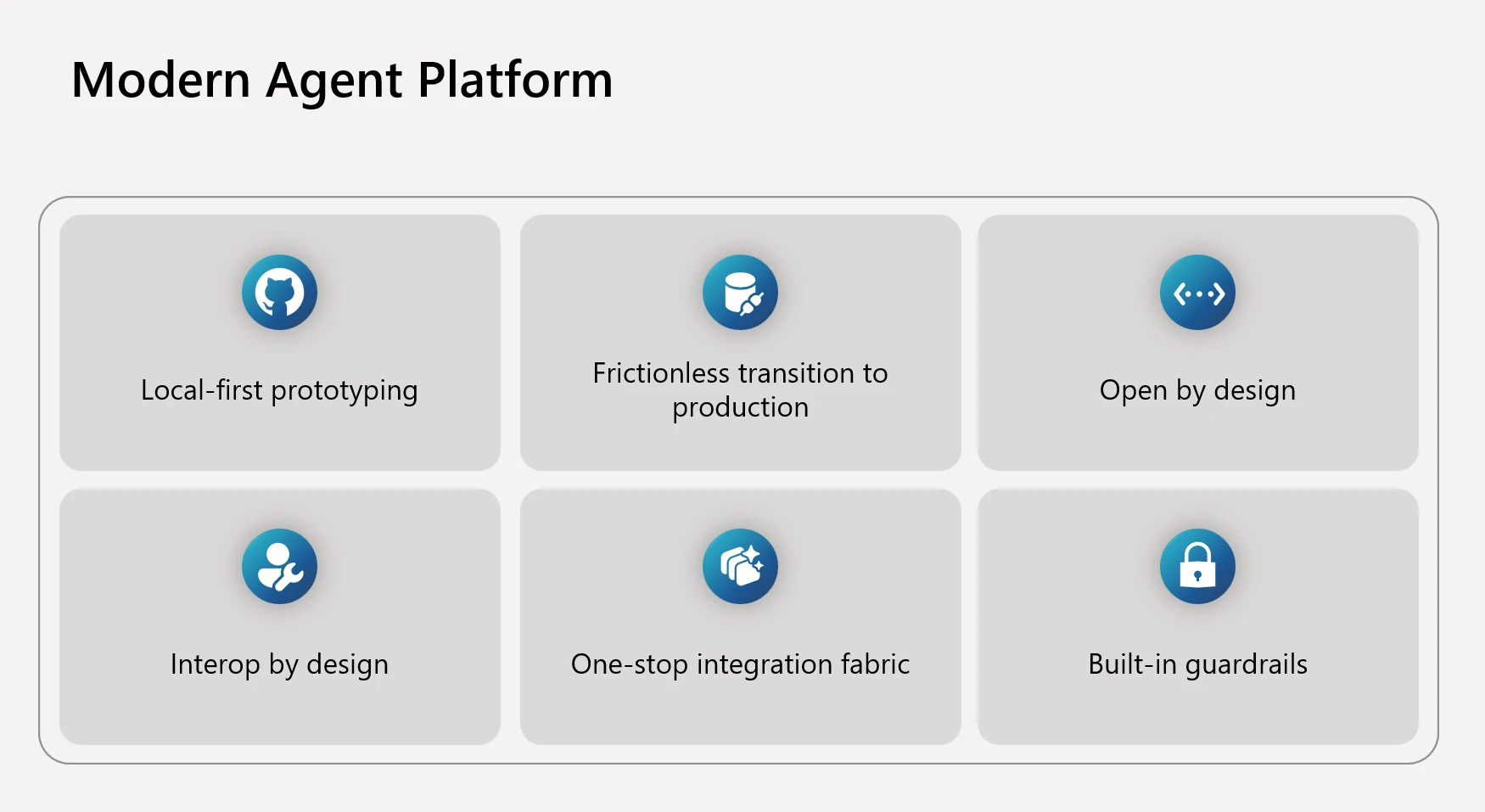

What a modern agent platform should deliver

From our work with customers and the open-source community, we’ve seen a clear picture emerge of what developers really need. A modern agent platform must go beyond offering models or orchestration—it has to empower teams across the entire lifecycle:

- Local-first prototyping: Developers want to stay in their flow. That means designing, tracing, and evaluating AI agents directly in their IDE with the same ease as writing and debugging code. If building an agent requires jumping into a separate UI or unfamiliar environment, iteration slows and adoption drops.

- Frictionless transition to production: A common frustration we hear is that an agent that runs fine locally becomes brittle or requires heavy rewrites in production. The right platform provides a single, consistent API surface from experimentation to deployment, so what works in development works in production—with scale, security, and governance layered in automatically.

- Open by design: No two organizations use the exact same stack. Developers may start with LangGraph for orchestration, LlamaIndex for data retrieval, or CrewAI for coordination. Others prefer Microsoft’s first-party frameworks like Semantic Kernel or AutoGen. A modern platform must support this diversity without forcing lock-in, while still offering enterprise-grade pathways for those who want them.

- Interop by design: Agents are rarely self-contained. They must talk to tools, databases, and even other agents across different ecosystems. Proprietary protocols create silos and fragmentation. Open standards like the Model Context Protocol (MCP) and Agent-to-Agent (A2A) unlock collaboration across platforms, enabling a marketplace of interoperable tools and reusable agent skills.

- One-stop integration fabric: An agent’s real value comes when it can take meaningful action: updating a record in Dynamics 365, triggering a workflow in ServiceNow, querying a SQL database, or posting to Teams. Developers shouldn’t have to rebuild connectors for every integration. A robust agent platform provides a broad library of prebuilt connectors and simple ways to plug into enterprise systems.

- Built-in guardrails: Enterprises cannot afford agents that are opaque, unreliable, or non-compliant. Observability, evaluations, and governance must be woven into the development loop—not added as an afterthought. The ability to trace agent reasoning, run continuous evaluations, and enforce identity, security, and compliance policies is as critical as the models themselves.

How Azure AI Foundry delivers this experience

Azure AI Foundry is designed to meet developers where they are, while giving enterprises the trust, security, and scale they need. It connects the dots across IDEs, frameworks, protocols, and business channels—making the path from prototype to production seamless.

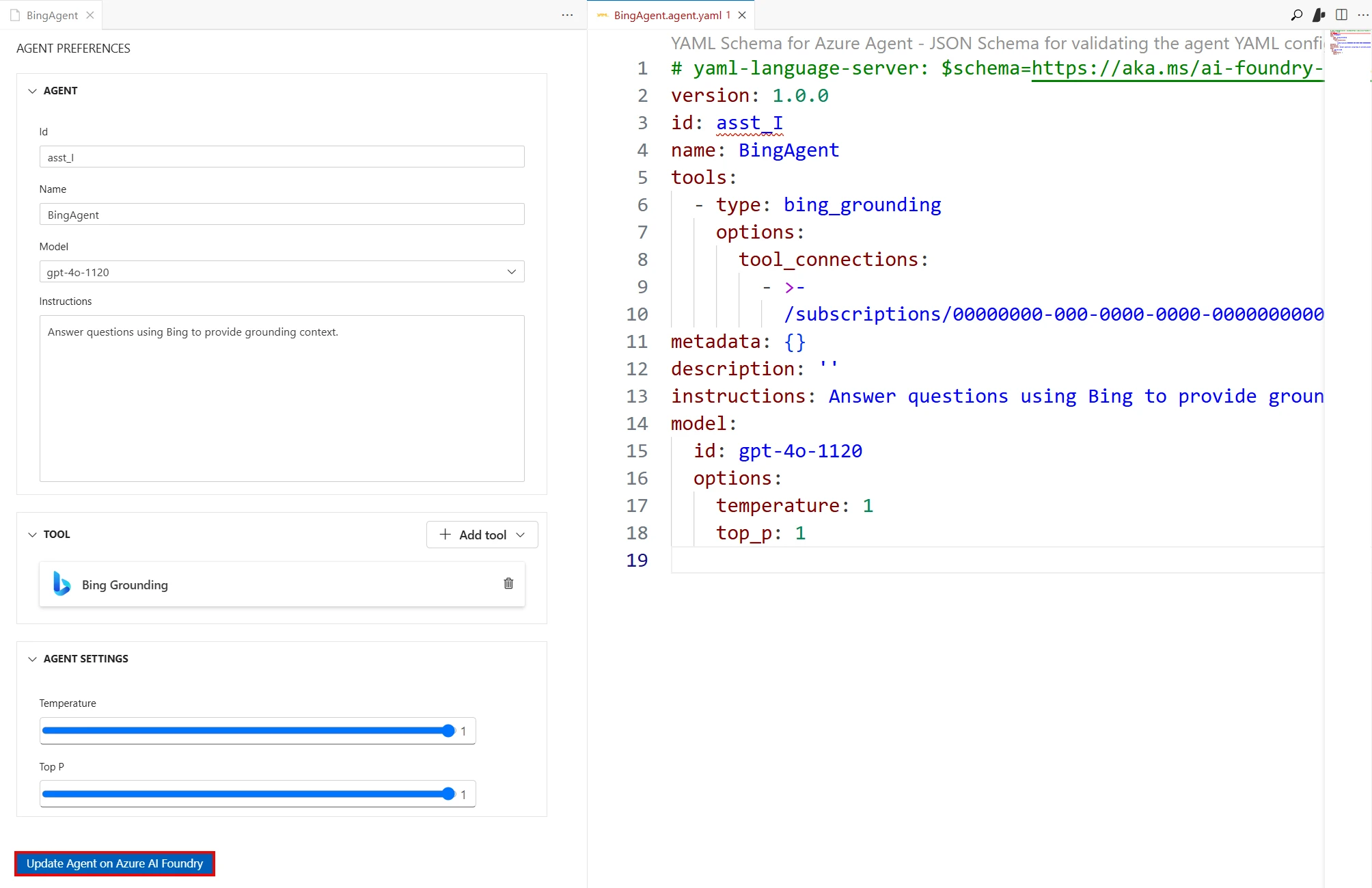

Build where developers live: VS Code, GitHub, and Foundry

Developers expect to design, debug, and iterate AI agents in their daily tools—not switch into unfamiliar environments. Foundry integrates deeply with both VS Code and GitHub to support this flow.

- VS Code extension for Foundry: Developers can create, run, and debug agents locally with direct connection to Foundry resources. The extension scaffolds projects, provides integrated tracing and evaluation, and enables one-click deployment to Foundry Agent Service—all inside the IDE they already use.

- Model Inference API: With a single, unified inference endpoint, developers can evaluate performance across models and swap them without rewriting code. This flexibility accelerates experimentation while future-proofing applications against a fast-moving model ecosystem.

- GitHub Copilot and the coding agent: Copilot has grown beyond autocomplete into an autonomous coding agent that can take on issues, spin up a secure runner, and generate a pull request, signaling how agentic AI development is becoming a normal part of the developer loop. When used alongside Azure AI Foundry, developers can accelerate agent development by having Copilot generate agent code while pulling in the models, agent runtime, and observability tools from Foundry needed to build, deploy, and monitor production-ready agents.

Use your frameworks

Agents are not one-size-fits-all, and developers often start with the frameworks they know best. Foundry embraces this diversity:

- First-party frameworks: Foundry supports both Semantic Kernel and AutoGen, with a convergence into a modern unified framework coming soon. This future-ready framework is designed for modularity, enterprise-grade reliability, and seamless deployment to Foundry Agent Service.

- Third-party frameworks: Foundry Agent Service integrates directly with CrewAI, LangGraph, and LlamaIndex, enabling developers to orchestrate multi-turn, multi-agent conversations across platforms. This ensures you can work with your preferred OSS ecosystem while still benefiting from Foundry’s enterprise runtime.

Interoperability with open protocols

Agents don’t live in isolation—they need to interoperate with tools, systems, and even other agents. Foundry supports open protocols by default:

- MCP: Foundry Agent Service allows agents to call any MCP-compatible tools directly, giving developers a simple way to connect external systems and reuse tools across platforms.

- A2A: Semantic Kernel supports A2A, implementing the protocol to enable agents to collaborate across different runtimes and ecosystems. With A2A, multi-agent workflows can span vendors and frameworks, unlocking scenarios like specialist agents coordinating to solve complex problems.

Ship where the business runs

Building an agent is just the first step—impact comes when users can access it where they work. Foundry makes it easy to publish agents to both Microsoft and custom channels:

- Microsoft 365 and Copilot: Using the Microsoft 365 Agents SDK, developers can publish Foundry agents directly to Teams, Microsoft 365 Copilot, BizChat, and other productivity surfaces.

- Custom apps and APIs: Agents can be exposed as REST APIs, embedded into web apps, or integrated into workflows using Logic Apps and Azure Functions—with thousands of prebuilt connectors to SaaS and enterprise systems.

Observe and harden

Reliability and safety can’t be bolted on later—they must be integrated into the development loop. As we explored in the previous blog, observability is essential for delivering AI that is not only effective, but also trustworthy. Foundry builds these capabilities directly into the developer workflow:

- Tracing and evaluation tools to debug, compare, and validate agent behavior before and after deployment.

- CI/CD integration with GitHub Actions and Azure DevOps, enabling continuous evaluation and governance checks on every commit.

- Enterprise guardrails—from networking and identity to compliance and governance—so that prototypes can scale confidently into production.

Why this matters now

Developer experience is the new productivity moat. Enterprises need to enable their teams to build and deploy AI agents quickly, confidently, and at scale. Azure AI Foundry delivers an open, modular, and enterprise-ready path—meeting developers in GitHub and VS Code, supporting both open-source and first-party frameworks, and ensuring agents can be deployed where users and data already live.

With Foundry, the path from prototype to production is smoother, faster, and more secure—helping organizations innovate at the speed of AI.

What’s next

In Part 5 of the Agent Factory series, we’ll explore how agents connect and collaborate at scale. We’ll demystify the integration landscape—from agent-to-agent collaboration with A2A, to tool interoperability with MCP, to the role of open standards in ensuring agents can work across apps, frameworks, and ecosystems. Expect practical guidance and reference patterns for building truly connected agent systems.

Did you miss these posts in the series?