Lots of people have been talking about how we’ll reach AGI (artificial general intelligence) or ASI (artificial super intelligence) with current technology. Meta has recently announced that they’re building a top-secret “superintelligence” lab with billions of dollars of funding. OpenAI, Anthropic, and Google DeepMind have all said in one way or another that their goal is to build superintelligent machines.

Sam Altman in particular has stated that superintelligence is simply a problem of engineering:

This implies that researchers at OpenAI know how to build superintelligence, and simply have to put in time and effort to build the systems required to get there.

Now, I’m an AI researcher, and it’s not clear to me how to build superintelligence – I’m not even sure it’s possible. So in this post I want to think through the details a little bit and speculate how someone might try to build superintelligence from first principles.

We’re going to assume that the fundamental building blocks of the technology is decided: we’re going to build superintelligence with neural networks and train it with backpropagation and some form of machine learning.

I don’t really believe that architecture (the shape of the neural network) matters much. So we’ll gloss over that architectural detail and make a strong assumption: superintelligence will be built using Transformers, by far the most popular architecture for training these systems on large datasets.

So then we already know a lot: superintelligence will be a transformer neural network, it will be trained via some machine learning learning objective and gradient-based backpropagation. There are still two major open questions here. What learning algorithm do we use, and what data?

Let’s start with the data.

The data: it’s gotta be text

Most of the big breakthroughs that led to chatGPT inevitably came from leveraging the treasure trove of human knowledge inside The Internet to learn things. The true scale of this enterprise is mind-boggling and mostly hidden by modern engineering, but let’s spend a second trying to get to the bottom of it all.

The best systems we have right now all learn from Internet text As of writing this (June 2025) I don’t think there has been any demonstrated overall improvement from integrating non-text data into a model. This includes images, video, audio, and extrasensory data from robotics – we don’t know how to use any of these modalities to make chatGPT smarter.

Why is this, by the way? It’s possible this is just a science or engineering challenge, and we’re not doing things the right way; but it’s certainly also possible that there’s just something special about text. After all, every bit of text on the internet (before LLMs, anyway) is a reflection of a human’s thought process. In a sense, huma-written text is preprocessed to have very high information content.

Take this in contrast to images, for example, which are are raw views of the world around us, captured with no human intervention. It’s certainly possible that the fact text written by actual people carries some intrinsic value that pure sensory inputs from the world around us never will.

So until someone demonstrates otherwise, let’s operate under this assumption that only text data is important.

Ok, so how much text do we have?

A next question is how large this dataset might be.

Lots of people have written about what to do if we’re running out of text data. Dubbed the “data wall” or “token crisis”, people have written about what to do and how to scale our models if we really run out of data.

And it seems that might really be happening. Many engineers at the big AI labs have spend countless hours dutifully scraping every last useful bit of text from the darkest corners of the Web, going so far as to transcribe a million hours of YouTube videos and purchasing large troves of news stories to train on.

Luckily there might be another data source available here (verifiable environments!) but we’ll get to hat later.

The learning algorithm

Above we discovered another important principle: the best path we have toward superintelligence lies in text data. In other words, AGI is probably just LLMs or nothing. Some other promising areas are learning from video and robotics, but neither of those seem nearly far along enough to produce independent intelligent systems by at least 2030. They’re also far more data-hungry; learning from text is naturally extremely efficient.

Now we have to confront the most important question. What’s the learning algorithm for superintelligence?

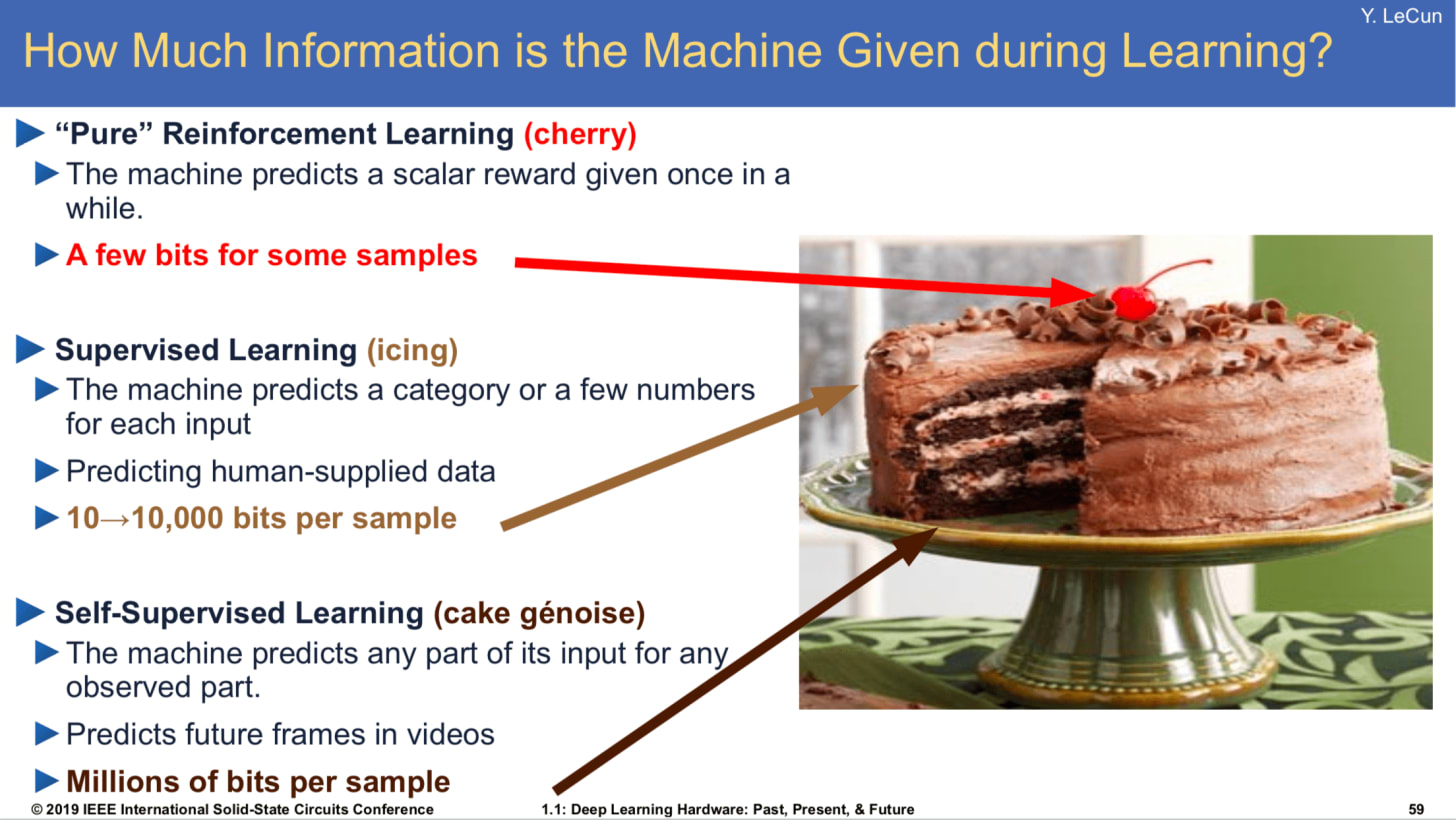

In the field of machine learning, there are basically two tried-and-true ways to learn from large datasets. One is supervised learning, training a model to increase the likelihood of some example data. The other is reinforcement learning, which involves generating from a model and rewarding it for taking “good” actions (for some user-written definition of “good”).

Now that we know this taxonomy, it becomes clear that any potential superintelligent system as written has to be trained using either supervised or reinforcement learning (or both).

Let’s think through both options.

Hypothesis 1: Superintelligence arises from supervised learning

Remember 2023? That was around when people really became excited about scaling laws; GPT-4 came out, and people were worried that if models continued to scale, they would become dangerous.

There was a prevailing opinion for some time that lots of supervised learning, specifically in the form of “next-token prediction”, could lead to superintelligent AI. Notably Ilya Sutskever gave a talk about how next-token prediction is simply learning to compress the Universe, since doing this well requires simulating all possible programs (or something like that).

I think the argument went something like this:

Accurate next-token prediction requires modeling what any human would write in any scenario

The better you can do this for a single person, the more closely you approximate the intelligence of that person

Since the internet contains text written by many people, to this well on e.g. a large text pretraining corpus requires accurately modeling the intelligence of many people

Accurately modeling the intelligence of many people is superintelligence

The vibes argument: can we even reach superintelligence by modeling humans?

Personally I think there are a few flaws in this logic, starting with the fact that we seem to have already created systems that are far above human-level in next-token prediction but still fail to exhibit human-level general intelligence. Somehow we’ve built systems that learned what we told them to learn (to predict the next token) but still can’t do what we want them to do (answer questions without making things up, follow instructions perfectly, etc.).

This might be simply a failure of machine learning. We’re training a model to predict the average human outcome in every situation. The learning objective disincentives giving too-low a probability to any possible outcome. This paradigm often leads to something called mode collapse, where a model gets very good at modeling average outcomes without learning the tails of distribution.

It’s possible that these issues would go away at scale. Billion-parameter models, like LLAMA, hallucinate. But this is only 10^9. What happens when we train models with 10^19 parameters? Perhaps that’s enough capacity for a single LLM to independently model all eight billion humans and provide independent data-driven predictions for each.

The infra argument: we won’t be able to scale up our models and/or our data

But it turns out it’s a moot point, because we may never scale up to 10^19 parameters. This hypothesis basically arose from the 2022-or-so-era deep learning school of thought driven by the wild success of language model scaling laws that continually scaling model and data size would lead to perfect intelligence.

It’s 2025 now. The theoretical argument remains unchallenged and the scaling laws have held faithful. But it turns out that scaling models gets really hard above a certain size (and in 2022 we were already really close to the line of what we could do well). Companies are already far, far beyond what we can do with a single machine – all the latest models are trained on giant networks of hundreds of machines.

The continued effort at scaling model size toward trillions of parameters is causing a hardware shortage (see: NVIDIA stock) and actually an electricity shortage too. Bigger models would draw so much power that they can’t be located in a single place; companies are looking into research to distribute model-training over multiple far-flung datacenters and even buying old nuclear power plants in an effort to resuscitate them and use them to train the next generation of bigger AI models. We’re living in wild times.

In addition to model size, we are potentially running out of data. No one knows how much of the Internet was used to train each model, but it’s safe to say that it’s quite a lot. And great engineering effort over the last few years at big AI labs has gone into scraping the proverbial barrel of Internet text data: OpenAI has apparently transcribed all of Youtube, for example, and high-signal sites like Reddit have been scraped time and time again.

Scaling model size orders of magnitude beyond 100B parameters looks to be hard, as is scaling data size beyond 20T tokens or so. These factors seem to indicate that it will be hard to scale supervised learning much more than 10x further in the next three years or so – so attempts at superintelligence might have to come from somewhere else.

Hypothesis 2: Superintelligence from a combination of supervised and reinforcement learning

So maybe you bought one of the arguments above: either we won’t be able to scale up pre-training by orders of magnitude for a long time, or even if we did, doing really well at predicting human tokens won’t produce systems that are any smarter than humans.

Whatever your issue with supervised learning, there’s another way. The field of reinforcement learning offers a suite of methods to learn from feedback instead of demonstrations.

⚠️ Why do we need supervised learning?

Well, RL is hard. You might be wondering why we can’t just use reinforcement learning all the way down. From a practical perspective, there are a lot of drawbacks to RL. The short explanation is that SL is a lot more stable and efficient than RL. An easy-to-understand reason is that because RL works by letting the model generate “actions” and rating them, a randomly initialized model is basically terrible and all of its actions are useless, and it has to accidentally do something good to get any semblance of a reward. This is called the cold start problem, and it’s just one of many issues with reinforcement learning. Supervised learning from human data turns out to be a great way to get around the cold start problem.

So let’s reiterate the RL paradigm: the model tries stuff, and we tell it whether it did well or not. This can come in two ways: either human raters tell the model it did well (this is more or less how typical RLHF works) or an automated system does that instead.

Hypothesis 2A: RL from human verifiers

Under this first paradigm, we provide the model with human-based rewards. We want our model to be superintelligent, so we want to reward it for producing text that looks closer to superintelligence (as judged by humans).

In practice, this data is really expensive to collect. A typical reinforcement learning from human feedback (RLHF) setting involves training a reward model to emulate the human feedback signal here. Reward models are necessary because they allow us to give much more feedback than we can feasibly collect from actual humans. In other words, they’re a computational crutch. We’re going to treat reward models as an engineering detail and ignore them for now.

So we’re imagining a world where we have infinite humans available to label data for an LLM and provide arbitrary rewards, where higher rewards indicate getting the model closer to superintelligence.

Ignore all the logistic complexity. Assume this approach is possible to run at scale (as it could be some day, even if it’s not today). Would it work? Could a machine learning purely from human reward signals climb the intelligence ladder and pass humans?

Another way of phrasing this question is: can we verify superintelligence when seeing it, even if we can’t generate examples of it ourselves? Remember that humans by definition aren’t superintelligent. But can we recognize superintelligence when shown to us? And can we do so reliably enough to provide useful gradient signal to an LLM which could gather lots of feedback of this type and bootstrap its way to superintelligence?

Some people like to point out that “generation is naturally harder than verification”. you know a good movie when you watch one, but that doesn’t mean you’re able to go out there and produce one yourself. This dichotomy pops up a lot in machine learning. It’s computationally much easier to differentiate cat photos from dog photos than it is to generate entire cats.

Similarly, if humans can verify superintelligence, then it may be possible to train a superintelligent model based using reinforcement learning on human feedback. As a concrete example, you could have an LLM write many novels, reward it based on a human notion of the ones that are good, and repeat this many many times, until you have a superintelligent novel-writing machine.

Do you notice any problems with this logic?

Hypothesis 2B: RL from automated verifiers

More recently people have been excited about using similar approaches to train better language models.

And when we let the computer rate the intermediate performance of our RL algorithms, we can either do this with a model or an automated verifier. For automated verifiers, think of chess, or coding. We can write rules that check if the computer won a chess game and issue a reward if a checkmate is reached. In coding, we can run unit tests that reward the computer for writing code that correctly passes some specifications.

Using a verifier would be much more practical – it would allow us to remove humans from the loop here entirely (besides the fact that they were used to write the entire Internet). A recipe for superintelligence using verifiers would be something like:

Pretrain an LLM using supervised learning on a large amount of Internet text

Plug it into some verification system that can provide rewards for good LLM outputs

Run for a long time

Achieve superintelligence

Is this a good idea? Would it even work?

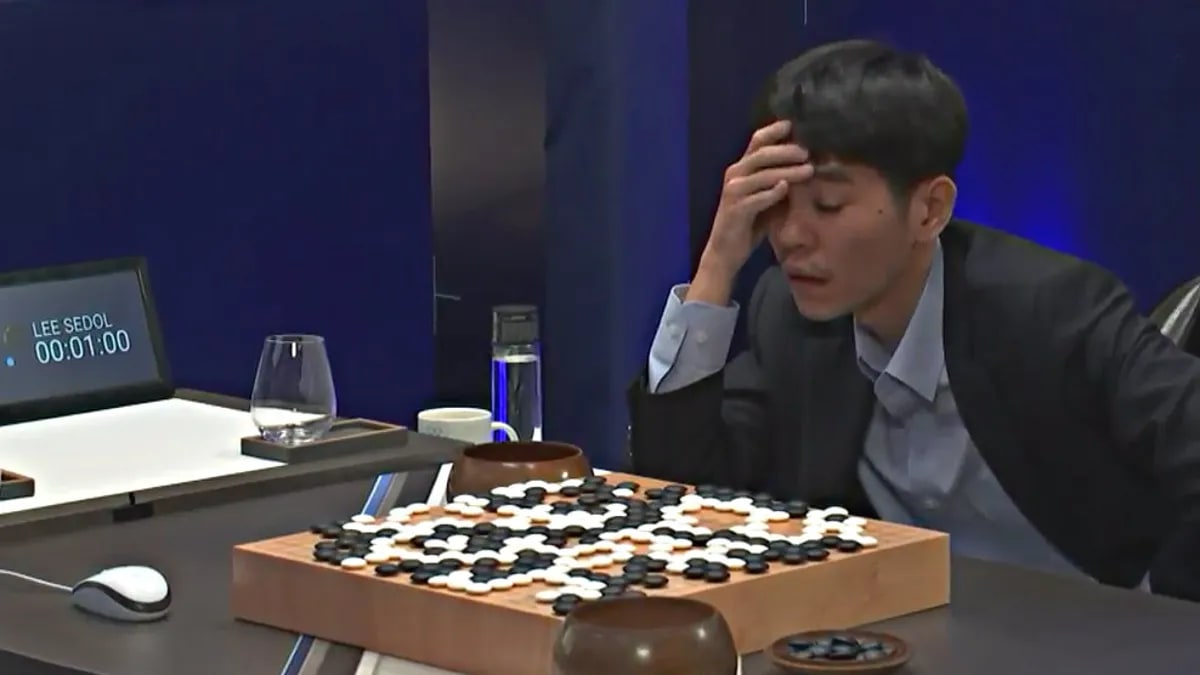

Famously, DeepMind’s AlphaGo achieved “Go supremacy” (read: beat all the humans, even the ones who trained for decades) by a combination of reinforcement and supervised learning. The second version of AlphaGo, known as AlphaGo Zero, learned by playing against itself for forty straight days.

Note that Go has a very important property that many real-world tasks do not. Go is naturally verifiable. By this I mean that we can plug my game of Go into a rule-based computer program and receive a signal indicating whether I won or not. Extrapolating this over a long time horizon, you can tell whether an individual move is “good” or not based on how it affects the probability of the game ending with a win. This is more or less how RL works.

And using this verifiability, AlphaGo was able to achieve something very important, something that the AI labs have aspired to for a while: AlphaGo gets better when it thinks for longer. Language models, by default, cannot do this.

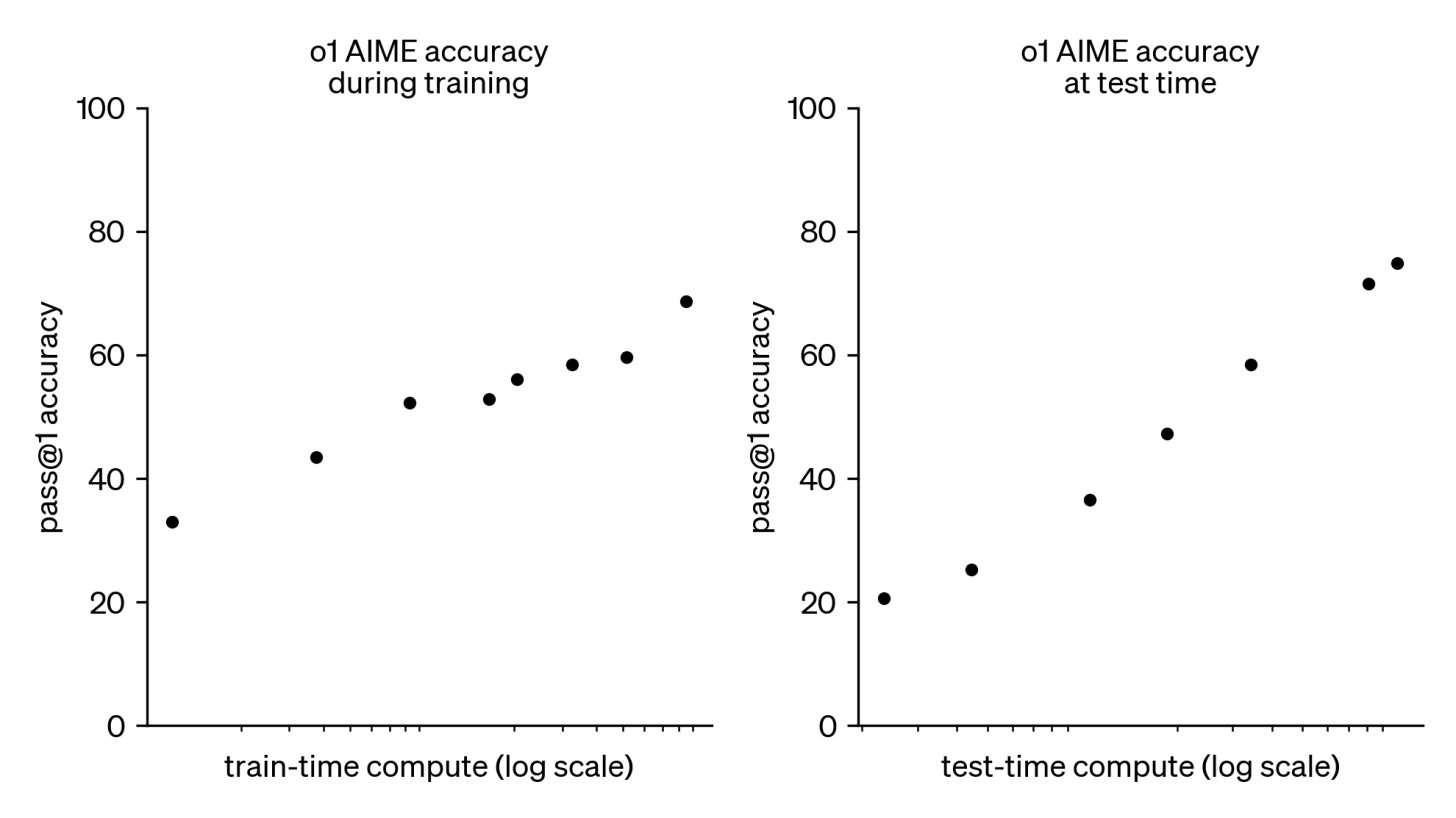

But this was essentially the breakthrough that OpenAI announced last fall. They used this paradigm of reinforcement learning from verifiable rewards to train O1, a model that, like AlphaGo, can think for longer and produce better outputs:

Gazing at the beautiful graphs above (and noting the log x-axis!) we observe that o1 is indeed getting better with more thinking time. But take a look at the title: this is on AIME, a set of extremely difficult math problems with integer solutions. Read: it’s not an open-ended task. It’s a task with verifiable training data, because you can check if an LLM generated the proper answer or not and reward the model accordingly.

It turns out that with current LLMs, pretrained to do arbitrary tasks pretty well, they can make a decent guess at AIME problems, and we can use this to train them via RL to make better and better guesses with time. (And the coolest part, which we won’t talk about here, is that they generate more and more “thinking tokens” to do so, giving us the test-time compute graph from the o1 blog post shown above.)

Is RLVR a path to superintelligence?

It is clear that OpenAI, Google, and the other AI labs are very excited about this type of RL on LLMs and think it might just give them superintelligence. It also seems likely to me that this paradigm is what Sam A. was mentioning in his vague tweet above. The “engineering problem” of superintelligence is to build lots of RL environments for many different types of tasks and train an LLM to do them all at the same time.

Let’s think through the bull case here. The verifiable tasks we know of are coding (you can check if code is correct by running it) as well as math (not proofs, really, but stuff with numeric solutions). If we were able to gather all the verifiable things in the world and train on them all at the same time (or separately, and then do model merging) – would this really produce general superintelligence?

There are a few logical leaps here. The most important is that we don’t know how well RL on verifiable task transfers out-of-domain. Does training a model to do math problems somehow inherently teach it how to book a flight? Or would training a model to code better in verifiable environments even make it a better software engineer overall?

Let’s pretend for a second that this does turn out to be true, and RL transfers neatly to all sorts of tasks. This would be huge. It would put the AI companies in an arms race to produce the most diverse, useful, and well-engineered set of tasks to RL their LLMs on. There would likely be multiple companies producing a “superintelligent LLM” in this fashion.

But this outcome seems unlikely to me. I’d guess that if RL did transfer extremely well to other domains then we’d know about it by now. My humble prediction is that LLMs will continue to get better at things within their training distribution. As we gather more and more types of tasks and train on them, this will produce more and more useful LLMs on a wide array of tasks. But it won’t give us a single superintelligent model.