
When we build software, we’re not just shipping features. We’re shipping experiences that surprise and delight our users — and making sure that we’re providing natural and seamless experiences is a core part of what we do.

In my last post, I wrote about an MCP server that we started building for a turn-based game (like tic-tac-toe or rock, paper, scissors). While it had the core capabilities, like tool calls, resources, and prompts, the experience could still be improved. For example, the player always took the first move, the player could only change the difficulty if they specified it in their initial message, and there was a slew of other papercuts.

So on my most recent Rubber Duck Thursdays stream, I covered a feature that’s helped improve the user experience: elicitation.

Elicitation is kind of like saying, “if we don’t have all the information we need, let’s go and get it.” But it’s more than that. It’s about creating intuitive interactions where the AI (via the MCP server) can pause, ask for what it needs, and then continue with the task. No more default assumptions that provide hard-coded paths of interaction.

👀 Be aware: Elicitation is not supported by all AI application hosts. GitHub Copilot in Visual Studio Code supports it, but you’ll want to check the latest state from the MCP docs for other AI apps. Elicitation is a relative newcomer to the MCP spec, having been added in the June 2025 revision, and so the design may continue to evolve.

Let me walk you through how I implemented elicitation in my turn-based game MCP server and the challenges I encountered along the way.

Enter elicitation: Making AI interactions feel natural

Before the livestream, I had put together a basic implementation of elicitation, which asked for required information when creating a new game, like difficulty and player name. For tic-tac-toe, it asked which player goes first. For rock, paper, scissors, it asked how many rounds to play.
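To make that concrete, here is a minimal sketch of what the questions above might look like as elicitation parameters. In the MCP spec, an elicitation request carries a `message` and a `requestedSchema`, which is a flat object schema with primitive properties. The property names (`difficulty`, `playerName`, `playerGoesFirst`, `rounds`), the difficulty values, the round limits, and the `buildCreateGameSchema` helper are all assumptions for illustration, not necessarily what the sample repository uses.

```typescript
// Hypothetical sketch: field names, enum values, and limits are assumptions.
type GameType = "tic-tac-toe" | "rock-paper-scissors";

// A simplified subset of the primitive property types an elicitation schema allows.
type PrimitiveSchema =
  | { type: "string"; title?: string; description?: string; enum?: string[] }
  | { type: "integer"; title?: string; description?: string; minimum?: number; maximum?: number }
  | { type: "boolean"; title?: string; description?: string; default?: boolean };

interface RequestedSchema {
  type: "object";
  properties: Record<string, PrimitiveSchema>;
  required?: string[];
}

function buildCreateGameSchema(gameType: GameType): RequestedSchema {
  // Questions shared by every game type.
  const properties: Record<string, PrimitiveSchema> = {
    difficulty: { type: "string", title: "Difficulty", enum: ["easy", "medium", "hard"] },
    playerName: { type: "string", title: "Player name" },
  };

  // Game-specific questions.
  if (gameType === "tic-tac-toe") {
    properties["playerGoesFirst"] = { type: "boolean", title: "Do you want to go first?", default: true };
  } else {
    properties["rounds"] = { type: "integer", title: "Number of rounds", minimum: 1, maximum: 10 };
  }

  return { type: "object", properties, required: Object.keys(properties) };
}

// The parameters a server could send with an elicitation request.
const elicitationParams = {
  message: "How would you like to set up your tic-tac-toe game?",
  requestedSchema: buildCreateGameSchema("tic-tac-toe"),
};

console.log(JSON.stringify(elicitationParams, null, 2));
```

Keeping the schema flat and limited to primitives is what lets a client like VS Code render it as a simple form.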

But rather than completely replacing our existing tools, I implemented these as new tools, so we could clearly compare the behavior of the two approaches until we had tested and standardized on one. As a result, we began to see sprawl in the server with some duplicative tools:

- create-tic-tac-toe-game and create-tic-tac-toe-game-interactive
- create-rock-paper-scissors-game and create-rock-paper-scissors-game-interactive
- play-tic-tac-toe and play-rock-paper-scissors

The problem? When you give AI agents like Copilot tools with similar names and descriptions, they don’t know which one to pick. On several occasions, Copilot chose the wrong tool because I had created this confusing landscape of overlapping functionality. This was an unexpected learning experience, but an important one to pick up along the way.

The next logical step was to consolidate our tool calls, and make sure we’re using DRY (don’t repeat yourself) principles throughout the codebase instead of redefining constants and having nearly identical implementations for different game types.
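As a rough illustration of that consolidation, the sketch below defines the shared constants once and routes every game type through a single handler, so only the game-specific starting state differs. All of the names here (`GAME_TYPES`, `DIFFICULTIES`, `initialState`, `createGame`, `isGameType`) and the state shapes are hypothetical. This is one way to structure it, not the sample's actual code.

```typescript
// Hypothetical sketch: names and state shapes are assumptions for illustration.

// Constants defined once and reused for types, validation, and schemas.
const GAME_TYPES = ["tic-tac-toe", "rock-paper-scissors"] as const;
type GameType = (typeof GAME_TYPES)[number];

const DIFFICULTIES = ["easy", "medium", "hard"] as const;
type Difficulty = (typeof DIFFICULTIES)[number];

interface CreateGameArgs {
  gameType: GameType;
  difficulty: Difficulty;
  playerName: string;
}

interface Game {
  id: string;
  gameType: GameType;
  title: string;
  state: unknown;
}

// Only the part that differs per game lives in this lookup table.
const initialState: Record<GameType, () => unknown> = {
  "tic-tac-toe": () => ({ board: Array(9).fill(null) }),
  "rock-paper-scissors": () => ({ rounds: [] }),
};

let nextId = 1;

// One handler backing a single create-game tool, instead of one tool per game.
function createGame(args: CreateGameArgs): Game {
  return {
    id: `game-${nextId++}`,
    gameType: args.gameType,
    title: `${args.playerName} vs AI (${args.difficulty})`,
    state: initialState[args.gameType](),
  };
}

// Type guard for validating a game type that arrives as a plain string.
function isGameType(value: string): value is GameType {
  return (GAME_TYPES as readonly string[]).indexOf(value) !== -1;
}

const game = createGame({ gameType: "tic-tac-toe", difficulty: "hard", playerName: "Chris" });
console.log(game.title);
```

Adding a third game in this shape means adding one entry to `GAME_TYPES` and one to `initialState`, rather than another pair of near-identical tools.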

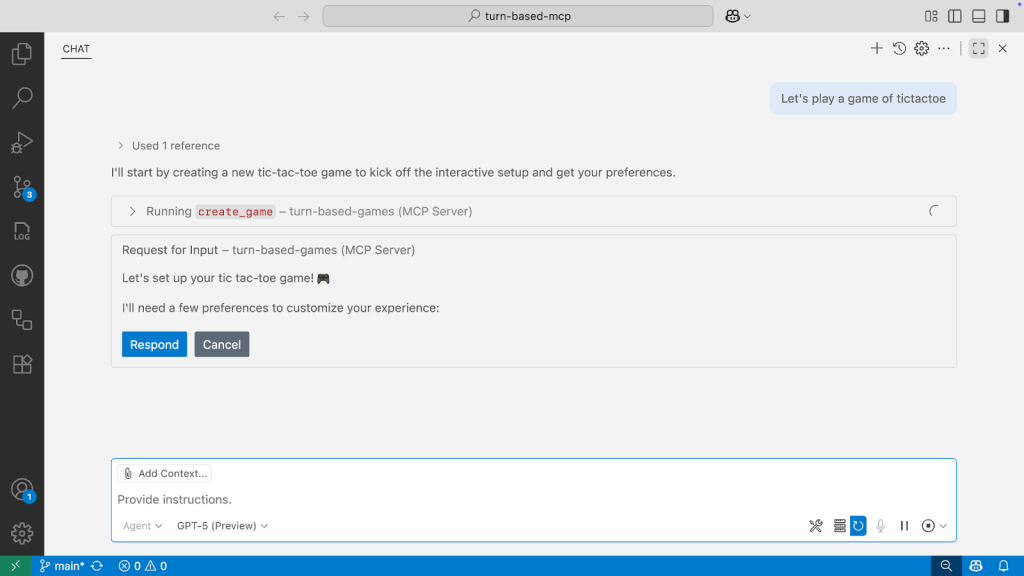

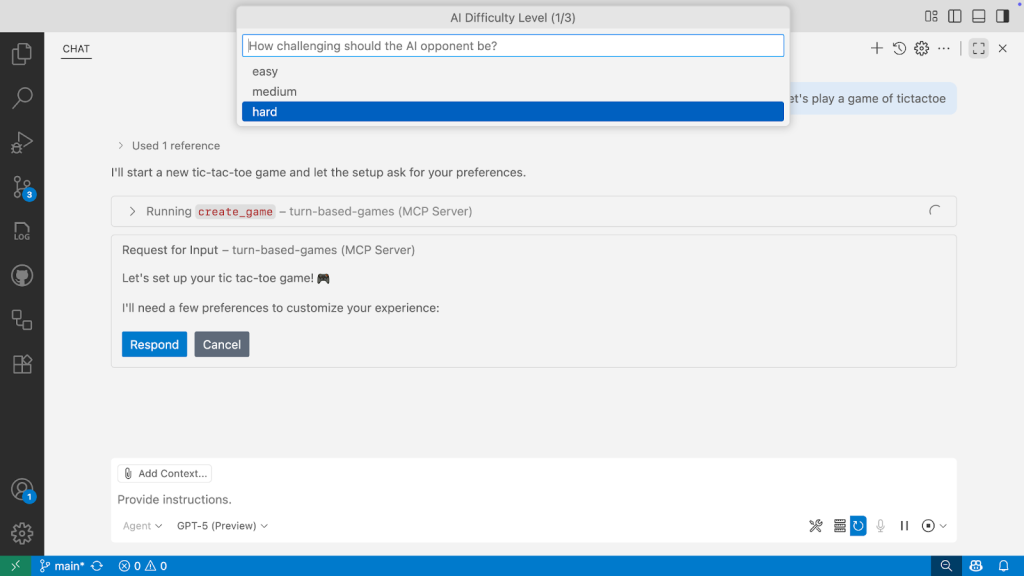

After a lot of refactoring and consolidation, when someone prompts “let’s play a game of tic-tac-toe,” the tool call identifies that more information is needed to ensure the user has made an explicit choice, rather than creating a game with a pre-determined set of defaults.

The user provides their preferences, and the server creates the game based upon those, improving that overall user experience.

It’s worth adding that my code (like I’m sure many of us would admit?) is far from perfect, and I noticed a bug live on the stream. The elicitation step triggered for every invocation of the tool, regardless of whether the user had already provided the needed information.

As part of my rework after the livestream, I added some checks after the tool was invoked to determine what information had already been provided. I also aligned the property names between the tool and elicitation schemas, bringing a bit more clarity. So if you said “Let’s play a game of tic-tac-toe, I’ll go first,” you would be asked to confirm the game difficulty and to provide your name.

How my elicitation implementation now works under the hood

The magic happens in the MCP server implementation. In my up-to-date implementation, when the create_game tool is invoked, the MCP server:

- Checks for required parameters: Do we know which game the user wants to play, or did they specify an ID?
- Passes the optional identified arguments to a separate method: Are we missing difficulty, player name, or turn order?
- Initiates elicitation: If information is missing, it pauses the tool execution and gathers only the missing information from the user. This was an addition that I made after the stream to further improve the user experience.
- Presents schema-driven prompts: The user sees formatted questions for each missing parameter.
- Collects responses: The MCP client (VS Code in this case) handles the UI interaction.
- Completes the original request: Once the server collects all the information, the tool executes the createGame method with the user’s preferences.
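The steps above can be sketched roughly as follows. This is a simplified model rather than the sample's real code: `GamePreferences`, `findMissing`, `resolvePreferences`, and the injected `elicit` function are hypothetical names, and `elicit` stands in for whatever sends the elicitation request to the client. The `accept`, `decline`, and `cancel` actions mirror the three response outcomes the MCP spec defines for elicitation.

```typescript
// Hypothetical sketch: names and shapes are assumptions for illustration.
interface GamePreferences {
  difficulty: string;
  playerName: string;
  playerGoesFirst: boolean;
}
type PreferenceKey = keyof GamePreferences;

// Simplified model of an elicitation response.
type ElicitResult =
  | { action: "accept"; content: Partial<GamePreferences> }
  | { action: "decline" }
  | { action: "cancel" };

// Stand-in for the call that asks the client to prompt the user.
type Elicit = (missing: PreferenceKey[]) => Promise<ElicitResult>;

const ALL_KEYS: PreferenceKey[] = ["difficulty", "playerName", "playerGoesFirst"];

// Which preferences did the tool call not already include?
function findMissing(provided: Partial<GamePreferences>): PreferenceKey[] {
  return ALL_KEYS.filter((key) => provided[key] === undefined);
}

async function resolvePreferences(
  provided: Partial<GamePreferences>,
  elicit: Elicit,
): Promise<GamePreferences | null> {
  const missing = findMissing(provided);
  if (missing.length === 0) {
    return provided as GamePreferences; // Nothing to ask, so skip elicitation.
  }

  const result = await elicit(missing); // Ask only for the missing fields.
  if (result.action !== "accept") {
    return null; // The user declined or cancelled, so no game is created.
  }

  const merged = { ...provided, ...result.content };
  return findMissing(merged).length === 0 ? (merged as GamePreferences) : null;
}

// Usage: "Let's play a game of tic-tac-toe, I'll go first."
const asked: PreferenceKey[][] = [];
const fakeElicit: Elicit = async (missing) => {
  asked.push(missing);
  return { action: "accept", content: { difficulty: "hard", playerName: "Chris" } };
};

resolvePreferences({ playerGoesFirst: true }, fakeElicit).then((prefs) => {
  console.log(asked[0]); // [ 'difficulty', 'playerName' ]
  console.log(prefs);
});
```

Injecting `elicit` as a parameter keeps the decision logic testable without a connected client. Returning `null` on decline or cancel leaves it to the tool handler to decide what to tell the user.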

Here’s what you see in the VS Code interface when elicitation kicks in, and you must provide some preferences:

The result? Instead of “Player vs AI (Medium)”, I get “Chris vs AI (Hard)” with the AI making the opening move because I chose to go second.

What I learned while implementing elicitation

Challenge 1: Tool naming confusion

Problem: Tools with similar names and descriptions confuse the AI about which one to use.

Solution: Where it’s appropriate, merge tools and use clear, distinct names and descriptions. I went from eight tools down to four:

- create-game (handles all game types with elicitation)
- play-game (unified play interface)
- analyze-game (game state analysis)
- wait-for-player-move (turn management)

Challenge 2: Handling partial information

Problem: What if the user provides some information upfront? (“Let’s play tic-tac-toe on hard mode”)

Observation: During the livestream, we saw that the way I built elicitation asked for all of the preferences each time it was invoked, which is not an ideal user experience.

Solution: Parse the initial request and only elicit the missing information. This was fixed after the livestream, and is now in the latest version of the sample.

Key lessons from this development session

1. User experience is still a consideration with MCP servers

How often do users provide all the needed information up front? Elicitation lets the server ask for whatever is missing, but you need to consider how it fits into your tool calls and the overall MCP experience. It can add complexity, but isn’t it better to ask users for their preferences than to force them to work around poor defaults?

2. Tool naming matters more than you think

When building (and even using) tools in MCP servers, naming and descriptions are critical. Ambiguous tool names and similar descriptions can lead to unpredictable behavior, where the “wrong” tool is called.

3. Iterative development wins

Rather than trying to build the perfect implementation upfront, I iterated to:

- Build basic functionality first
- Identify pain points through usage
- Add elicitation to improve the user experience
- Use Copilot coding agent and Copilot agent mode to help clean up

Try it yourself

Want to see how elicitation works in an MCP server? Or seeking inspiration to build your own MCP server?

- Fork the repository: gh.io/rdt-blog/game-mcp
- Set up your dev environment by creating a GitHub Codespace
- Run the sample by building the code, starting the MCP server, and running the web app / API server.

Take this with you

Building better AI tools isn’t all about the underlying models — it’s about creating experiences that can interpret context, ask good questions, and deliver exactly what users need. Elicitation is a step in that direction, and I’m excited to see how the MCP ecosystem continues to evolve and support even richer interactions.

Join us for the next Rubber Duck Thursdays stream where we’ll continue exploring the intersection of AI tools and developer experience.

The post Building smarter interactions with MCP elicitation: From clunky tool calls to seamless user experiences appeared first on The GitHub Blog.