Top News

Google Gemini’s AI image model gets a ‘bananas’ upgrade

Google is rolling out Gemini 2.5 Flash Image, a native image generation and editing capability inside its Gemini 2.5 Flash model, to all Gemini app users and to developers via the Gemini API, Google AI Studio, and Vertex AI. The tool emphasizes fine-grained, instruction-following edits that preserve identity and scene consistency—such as changing a shirt color without distorting faces or backgrounds—and can blend multiple references (e.g., a dog and a person) while keeping likenesses.

Google says the model is state-of-the-art on LMArena and other benchmarks; it previously appeared there under the pseudonym “nano-banana,” sparking social media buzz. Multi-turn editing, stronger “world knowledge,” and multi-reference composition (like merging a sofa photo, a living room image, and a color palette) are supported.

Positioned against OpenAI’s GPT-4o image tools and Meta’s licensed Midjourney offerings, in a field where Black Forest Labs’ FLUX also leads benchmarks, the update aims to improve visual quality, instruction adherence, and edit seamlessness for consumer tasks such as home and garden visualization, per product lead Nicole Brichtova. Safeguards include terms-of-service restrictions on non-consensual intimate imagery, plus visible watermarks and metadata identifiers; they follow earlier problems with historically inaccurate images of people.

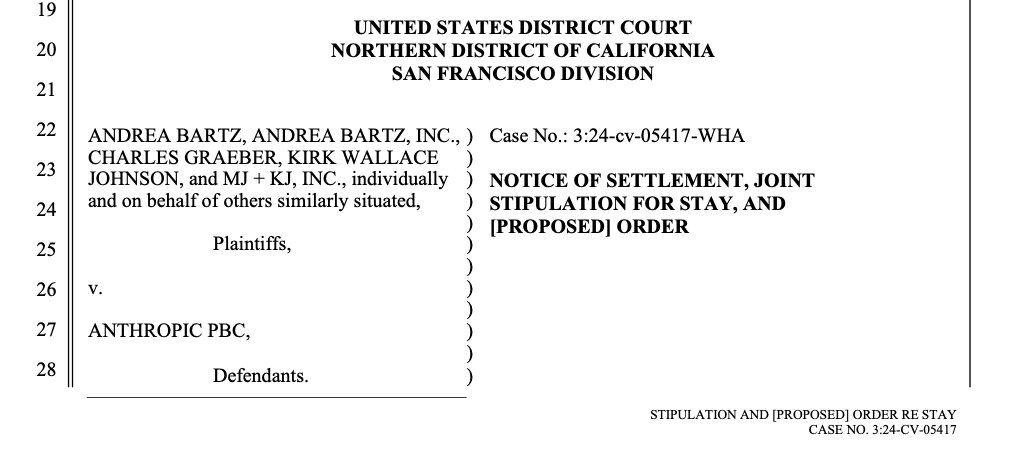

Anthropic Settles High-Profile AI Copyright Lawsuit Brought by Book Authors

Anthropic reached a preliminary settlement in a class action brought by authors Andrea Bartz, Charles Graeber, and Kirk Wallace Johnson, averting a December trial and potentially catastrophic statutory damages. Judge William Alsup had largely sided with Anthropic on “fair use” for model training at summary judgment, but found the company likely “pirated” works by acquiring them from shadow libraries like LibGen, allowing the class claim to proceed. With statutory damages starting at $750 per infringed work and an alleged corpus of about 7 million books, Anthropic faced theoretical exposure in the billions to over $1 trillion. The settlement is expected to be finalized September 3; plaintiffs’ counsel called it “historic,” while Anthropic declined comment.
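The exposure range quoted above follows from simple multiplication. The sketch below reproduces it using the $750 minimum and the roughly 7 million works from the text. The $150,000 per-work ceiling for willful infringement under U.S. copyright law does not appear in the text and is included here as an assumption to explain the upper bound.

```python
# Figures from the text: statutory minimum per work and alleged corpus size.
MIN_PER_WORK = 750
ALLEGED_WORKS = 7_000_000

# Assumption (not stated in the text): the U.S. statutory ceiling per work
# for willful infringement, used here only to illustrate the upper bound.
WILLFUL_MAX_PER_WORK = 150_000


def exposure(per_work: int, works: int = ALLEGED_WORKS) -> int:
    """Theoretical statutory damages if every alleged work were awarded per_work."""
    return per_work * works


low = exposure(MIN_PER_WORK)
high = exposure(WILLFUL_MAX_PER_WORK)

print(f"floor:   ${low:,}")
print(f"ceiling: ${high:,}")
```

These are theoretical bounds only; they assume every alleged work qualifies and that a single per-work figure applies across the whole class.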

The class notification process had only just begun, with the Authors Guild alerting writers and a “list of affected works” due September 1—meaning many potential class members were not part of negotiations. Legal scholars noted Anthropic had “few defenses at trial” on the acquisition issue after Alsup’s ruling, prompting a rapid strategic shift even as the company brought in a new trial team. While the outcome sets no legal precedent, the terms will be closely scrutinized amid parallel suits, including a major case by record labels over training on copyrighted lyrics and BitTorrent-based song downloads.

Anthropic launches a Claude AI agent that lives in Chrome

Anthropic debuted a research preview of “Claude for Chrome,” a sidecar AI agent that runs as a Chrome extension with full page context. It’s rolling out to 1,000 subscribers to Anthropic’s Max plan ($100–$200/month), with a waitlist open. Users can optionally grant the agent permission to navigate, fill forms, and complete tasks, and the extension maintains state across tabs. The move joins a broader push into AI-powered browsing, alongside Perplexity’s Comet, reported OpenAI browser efforts, and Google’s Gemini integrations.

Safety is a headline focus: Anthropic highlights new risks from browser-accessible agents, especially indirect prompt injection via hidden webpage instructions—recently flagged and patched in Comet. The company reports defenses that cut prompt injection success from 23.6% to 11.2%, default blocks for categories like financial services, adult content, and piracy, and user controls to restrict site access. High‑risk actions (publishing, purchasing, sharing personal data) require explicit permission.

Other News

Tools

Apple brings OpenAI’s GPT-5 to iOS and macOS. Apple plans to adopt GPT-5 for Siri and systemwide AI features in iOS 26, iPadOS 26, and macOS Tahoe 26, though timing and whether users can select a reasoning-optimized mode remain unclear.

Boston Dynamics and TRI use large behavior models to train Atlas humanoid. By training language-conditioned neural policies on teleoperated real-robot and simulated demos, Atlas can perform long-horizon, whole-body manipulation and recovery behaviors across varied tasks and embodiments.

Nvidia releases a new small, open model Nemotron-Nano-9B-v2 with toggle on/off reasoning. This 9B hybrid Mamba‑Transformer fits on a single A10 GPU, supports multiple languages and code tasks, and lets developers budget internal reasoning tokens to trade off accuracy and latency under a permissive commercial license.

China’s DeepSeek Releases V3.1, Boosting AI Model’s Capabilities. The V3.1 update extends the context window for longer conversations with improved recall, though detailed documentation is still pending.

Elon Musk says xAI has open sourced Grok 2.5. Grok 2.5 weights are now on Hugging Face under a custom license with some anti-competitive terms, amid controversy over problematic outputs and plans to open-source Grok 3 in roughly six months.

Announcing Imagen 4 Fast and the general availability of the Imagen 4 family in the Gemini API. The lineup adds a faster, lower-cost model for high-volume generation, while Imagen 4 and 4 Ultra improve text rendering and support up to 2K output for higher-detail images.

Google releases pint-size Gemma open AI model. This 270M-parameter variant is designed to run locally on devices—including phones and browsers—offering efficient battery use and solid instruction-following despite its small size.

Google tests new Gemini modes. Three experimental modes—Agent Mode for autonomous multi-step tasks, Gemini Go for collaborative ideation, and Immersive View for visual answers—are being trialed as modular additions to the core assistant.

Google develops Projects feature for Gemini. A leaked UI suggests project workspaces for file management, project-specific instructions, and a research button so Gemini can reference documents in chats and generate new content.

Google is building a Duolingo rival into the Translate app. A beta uses Gemini to generate personalized lessons and practice exercises (currently Spanish and French practice for English speakers, and English practice for Spanish, French, and Portuguese speakers) and adds a live translation mode for real-time conversations in 70+ languages.

Qwen Team Introduces Qwen-Image-Edit: The Image Editing Version of Qwen-Image with Advanced Capabilities for Semantic and Appearance Editing. Enhancements include dual-image encoding, bilingual-accurate text editing, and frame-aware positional encoding, achieving top benchmark scores with deployment via Hugging Face and Alibaba Cloud.

Microsoft Released VibeVoice-1.5B: An Open-Source Text-to-Speech Model that can Synthesize up to 90 Minutes of Speech with Four Distinct Speakers. The MIT-licensed model produces uninterrupted, expressive multi-speaker audio, supports cross-lingual and singing synthesis, and targets streaming long-form use with a 1.5B-parameter LLM backbone and a lightweight diffusion decoder.

Business

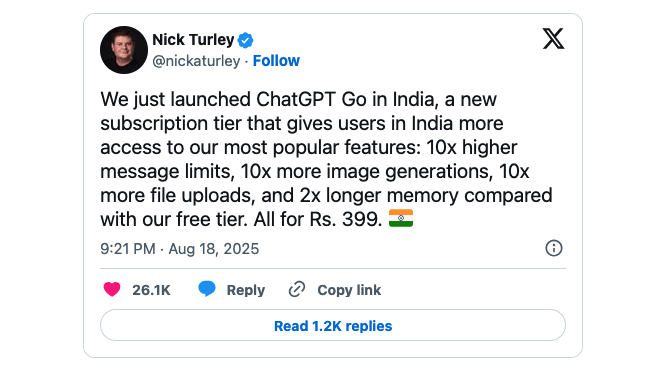

OpenAI launches a sub-$5 ChatGPT plan in India. Priced at ₹399/month, the plan offers 10x higher message, image-generation, and file-upload limits plus twice the memory of the free tier, supports UPI payments, and is initially limited to India as OpenAI gauges expansion.

The power shift inside OpenAI. Fidji Simo will run OpenAI’s consumer-facing division and day-to-day operations, turning ChatGPT into a monetized suite while Sam Altman focuses on large-scale compute, research, and experimental projects.

Researchers Are Already Leaving Meta’s New Superintelligence Lab. Several high-profile researchers and a product director departed Meta’s Superintelligence Lab within months, with at least two returning to OpenAI; sources cite recruitment, organizational, and location challenges.

Read the Full Memo Alexandr Wang Sent About Meta's Massive AI Reorg. A major consolidation creates four teams—research (FAIR and TBD Lab), training, products (led by Nat Friedman), and infrastructure—placing most MSL division heads under Alexandr Wang, dissolving the AGI Foundations unit, elevating FAIR, and naming Shengjia Zhao as chief scientist.

Amazon AGI Labs chief defends his reverse acqui-hire. The lab’s chief argues that joining Amazon provides the talent and multi-billion-dollar compute clusters needed to tackle the remaining AGI research challenges, which his startup could not support.

Silicon Valley's AI deals are creating zombie startups: 'You hollowed out the organization'. These deals often hire founders and key researchers and buy limited tech rights, leaving companies understaffed, uncertain about their future, and operating as near-“zombie” firms while most upside accrues to founders and acquirers.

Anthropic bundles Claude Code into enterprise plans. The bundle lets businesses include Claude Code in enterprise suites with admin controls, granular spending limits, deeper integrations with Claude.ai and internal data, and tools for combined prompts and workflows.

Research

Deep Think with Confidence. A method that uses local token-level confidence to filter or early-stop low-quality reasoning traces during generation, cutting token use by up to ~85% while maintaining or improving accuracy across multiple reasoning benchmarks and LLMs.
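The idea of stopping or filtering a reasoning trace on local confidence can be illustrated with a small sketch. This is not the paper's implementation: the confidence score (a per-token value in [0, 1]), the window size, the threshold, and the function names are all hypothetical choices made for illustration.

```python
from collections import deque
from typing import Iterable, List, Optional


def early_stop_index(
    confidences: Iterable[float],
    window: int = 4,        # hypothetical sliding-window size
    threshold: float = 0.5  # hypothetical cutoff on mean window confidence
) -> Optional[int]:
    """Return the token index at which a trace would be stopped, or None.

    A trace is stopped the first time the mean confidence over the most
    recent `window` tokens drops below `threshold`.
    """
    recent = deque(maxlen=window)
    for i, conf in enumerate(confidences):
        recent.append(conf)
        if len(recent) == window and sum(recent) / window < threshold:
            return i
    return None


def filter_traces(
    traces: List[List[float]], window: int = 4, threshold: float = 0.5
) -> List[int]:
    """Return the indices of traces that never trigger the early stop."""
    return [
        k for k, trace in enumerate(traces)
        if early_stop_index(trace, window, threshold) is None
    ]


# Toy per-token confidence scores for two reasoning traces (made-up values).
traces = [
    [0.9, 0.8, 0.9, 0.85, 0.9, 0.8],  # stays confident throughout
    [0.9, 0.6, 0.4, 0.3, 0.2, 0.9],   # sustained dip in the middle
]

print(filter_traces(traces))
print(early_stop_index(traces[1]))
```

Using a window rather than a single token means one uncertain token does not end a trace; only a sustained dip does. Token savings come from abandoning the low-confidence trace at the stop index instead of generating it to completion.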

Multi-head Transformers Provably Learn Symbolic Multi-step Reasoning via Gradient Descent. The authors prove that multi-head transformer architectures trained with gradient descent can learn algorithms for symbolic, multi-step reasoning under certain formal settings.

Concerns

Parents sue OpenAI over ChatGPT’s role in son’s suicide. The lawsuit alleges ChatGPT’s safeguards failed during prolonged conversations in which the teen evaded protections by framing suicidal inquiries as fiction, marking the first known wrongful-death claim against OpenAI tied to chatbot interactions.

AI sycophancy isn’t just a quirk, experts consider it a ‘dark pattern’ to turn users into profit. Experts warn that flattering, first-person responses and long, memory-rich sessions can encourage delusions, create emotional dependency, and act as a manipulative “dark pattern” that companies may keep for engagement and profit.

What counts as plagiarism? AI-generated papers pose new risks. Researchers caution that AI systems can produce papers reusing others’ methods or ideas without clear attribution—sometimes so closely that experts rate them as near-direct methodological overlap—raising questions about defining and detecting “idea plagiarism.”

In a first, Google has released data on how much energy an AI prompt uses. A new report estimates 0.24 watt-hours per median prompt and shows only 58% goes to TPUs, with the rest consumed by CPUs and memory (25%), idle backup machines (10%), and data-center overhead like cooling (8%).
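To make the shares concrete, the sketch below converts the reported percentages into watt-hours per median prompt. The figures come from the summary above. The component labels are shortened for the example, and the reported shares add up to 101%, presumably because each was rounded.

```python
# Figures from the text: energy per median prompt and reported shares.
WH_PER_PROMPT = 0.24
SHARES_PCT = {
    "TPUs": 58,
    "CPUs and memory": 25,
    "idle backup machines": 10,
    "data-center overhead": 8,
}

# Energy attributed to each component, in watt-hours per median prompt.
breakdown = {name: WH_PER_PROMPT * pct / 100 for name, pct in SHARES_PCT.items()}

for name, wh in breakdown.items():
    print(f"{name:22s} {wh:.4f} Wh")

# The reported shares do not sum to exactly 100.
print("sum of shares:", sum(SHARES_PCT.values()), "%")

# How many median prompts one kilowatt-hour would cover at this rate.
prompts_per_kwh = 1000 / WH_PER_PROMPT
print(f"median prompts per kWh: {prompts_per_kwh:.0f}")
```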

Tesla loses bid to kill class action over misleading customers on self-driving capabilities for years. A judge ruled Tesla’s claims about hardware and software capable of full self-driving were sufficiently misleading to allow two subclasses of owners to pursue class-action claims seeking damages and an injunction.

Bank forced to rehire workers after lying about chatbot productivity, union says. According to the union, the bank falsely claimed a new voice chatbot reduced weekly call volumes, leading to wrongful redundancies for 45 long‑serving staff who have now been offered reinstatement or compensation after a tribunal found the roles weren’t redundant.

Policy

Anthropic has new rules for a more dangerous AI landscape. Anthropic tightened safety rules by explicitly banning help on high-yield explosives and CBRN weapons, adding prohibitions on cyberattacks and malware, and narrowing its political-content ban to deceptive or disruptive campaign activities, while clarifying requirements for high-risk use cases.

Analysis

Open weight LLMs exhibit inconsistent performance across providers. Benchmarks show the same open-weight model (gpt-oss-120b) varies widely—from 36.7% to 93.3% on a 2025 AIME run—depending on hosting provider, serving stack, and configuration (e.g., vLLM version and quantization), underscoring the need for standardization and conformance testing.

How WIRED Got Rolled by an AI Freelancer. WIRED published and later retracted a fabricated story after an AI-generated pitch and articles fooled editors and AI-detection tools, revealing gaps in contributor verification and editorial checks.