Published on August 21, 2025 6:14 PM GMT

Current plans for AI alignment (examples) come from a narrow, implicitly filtered, and often (intellectually, politically, and socially) liberal standpoint. This makes sense, as the vast majority of the community, and hence alignment researchers, have those views. We, the authors of this post, belong to the minority of AI Alignment researchers who have more conservative beliefs and lifestyles, and believe that there is something important to our views that may contribute to the project of figuring out how to make future AGI be a net benefit to humanity. In this post, and hopefully series of posts, we want to lay out an argument we haven’t seen for what a conservative view of AI alignment would look like.

We re-examine the AI Alignment problem through a different, and more politically conservative lens, and we argue that the insights we arrive at could be crucial. We will argue that as usually presented, alignment by default leads to recursive preference engines that eliminate disagreement and conflict, creating modular, adaptable cultures where personal compromise is unnecessary. We worry that this comes at the cost of reducing status to cosmetics and eroding personal growth and human values. Therefore, we argue that it’s good that values inherently conflict, and these tensions give life meaning; AGI should support enduring human institutions by helping communities navigate disputes and maintain norms, channeling conflict rather than erasing it. This ideal, if embraced, means that AI Alignment is essentially a conservative movement.

To get to that argument, the overarching question isn’t just the technical question of “how do we control AI?” It’s “what kind of world are we trying to create?” Eliezer’s fun theory tried to address this, as did Bostrom’s new “Deep Utopia.” But both of these are profoundly liberal viewpoints, and see the future as belonging to “future humans,” a framing that often envisions a time when uploaded minds and superintelligence exist, and humanity as it traditionally existed is no longer the primary mover of history. We want to outline an alternative which we think is more compatible with humanity as it exists today, and not incidentally, one that is less liable to failure.

This won’t be a treatise of political philosophy, but it’s important as background, so that is where we will start. We’ll start by explaining our lens on parts of the conservative-liberal conceptual conflict, and how this relates to the parent-child relationship, and then translate this to why we worry about the transhuman vision of the future.

In the next post, we want to outline what we see as a more workable version of humanity's relationship with AGI moving forward. And we will note that we are not against the creation of AGI, but want to point towards the creation of systems that aren’t liable to destroy what humanity values.

Conservatives and liberals

"What exactly are conservatives conserving?" – An oft-repeated liberal jab

The labels "conservative" and "liberal" don't specify what the contested issues are. Are we talking about immigration, abortion rights or global warming? Apparently it doesn't matter - especially in modern political discourse, there are two sides, and this conflict has persisted. But taking a fresh look, it might be somewhat surprising that there are even such terms as "conservatives" and "liberals" given how disconnected the views are from particular issues.

We propose a new definition of "conservative" and "liberal" which explains much of how we see the split. Conservatives generally want to keep social rules as they are, while liberals want to change them to something new. Similarly, we see a liberal approach as aspiring to remove social barriers, unless (perhaps) a strong-enough case can be made for why a certain barrier should exist. The point isn’t the specific rule or norm. A conservative approach usually celebrates barriers, and rules that create them, and ascribes moral value to keeping as many barriers as possible. For instance, the prohibition on certain dress codes in formal settings seems pointless from a liberal view—but to a conservative, it preserves a shared symbolic structure.

It's as if the people who currently say "No, let's not allow more people into our country" and the people who say "No, let's not allow schools to teach our children sex education" just naturally tend to be good friends. They clump together under the banner "keep things as they are", building a world made of Chesterton’s fences, while the people who say "let's change things" clump in another, different world, regardless of what those things are. Liberals, however, seem to see many rules, norms, or institutions as suspect until justified, and will feel free to bend or break them; conservatives tend to see these as legitimate and worth respecting until proven harmful. That instinct gap shapes not just what people want AI to do—but how they believe human societies work in the first place.

Disagreement as a Virtue

It's possible that the school of thought of agonistic democracy (AD) could shed some light on the above. AD researchers draw the distinction between two kinds of decision-making:

- Deliberative decision-making, in which the multiple parties have a rational discussion about the pros and cons of the various options; and
- Agonistic decision-making, in which the power dynamics and adversity between the multiple parties determine the final decision.

AD researchers claim that while agonistic decision-making may seem like an undesirable side-effect, it is a profoundly important ingredient in a well-functioning democracy. Applying this logic to the conservative-liberal conflict, we claim that the conflict between a person who says "Yes!" and a person who says "No!" is often more important for understanding their views than what the question even was. Similarly, one side aims for convergence of opinions, while the other aims for stability and preserving differences.

Bringing this back to alignment, it seems that many or most proposals to date are aiming for a radical reshaping of the world. They are pushing for transformative change, often in their preferred direction. Their response to disagreement is to try to find win-win solutions, such as the push towards paretotopian ideals where disagreements are pushed aside in the pursuit of radically better worlds. And this is assuming that increasingly radical transformation will grow the pie and provide enough benefits to override the disagreement - because this view assumes the disagreement was never itself valuable.

Along different but complementary lines, the philosopher Carl Schmitt argued that politics begins not with agreement, or deliberation, but with the friend–enemy distinction. Agonistic democracy builds on a similar view: real social life isn’t just cooperation, it’s structured disagreement. Not everyone wants the same thing, and they shouldn't have to. While Schmitt takes the primacy of ineliminable conflict even further, Mouffe and others suggest that the role of institutions is not to erase conflict, but to contain it. They agree, however, that conflict is central to the way society works, not something to overcome.

Parents and children

We can compare the conflict between conservatives and liberals to the relationship between parents and children[1]. In a parent-child relationship, children ask their parents for many things. They then often work to find the boundaries of what their parents will allow, and lead long-term campaigns to inch these boundaries forward.

Can I have an ice cream? Can I stay another 5 minutes before going to bed? Can I get my own phone when I'm 9? When could I get a tattoo? Try one argument, and if it fails, try another until you succeed. If mom says no, ask dad. You can get a hundred nos, but if you get one yes, you have now won the debate permanently, or at least set a precedent that will make it easier to get a yes next time. If you have an older sibling, they may have done much of the work for you. There is already a mass of "case law" that you can lean on. Parents may overturn precedents, but that requires them to spend what little energy they have left after their workday to get angry enough for the child to accept the overturn.

One extreme view of this, which is at least prevalent in the effective altruist / rationalist communities, is that children should be free to set the course of their own lives. I know of one parent who puts three dollars aside each time they violate the bodily sovereignty of their infant - taking something out of their mouth, or restricting where they can go. Eliezer similarly said that the dath-ilani version is that "any time you engage in an interaction that seems net negative from the kid's local perspective you're supposed to make a payment that reflects your guarantee to align the nonlocal perspective." This view is that all rules are “a cost and an [unjustified, by default,] imposition.” Done wisely and carefully, this might be fine - but it is setting the default against having rules.

And if a parent is discouraged from making rules, the dynamic ends up being similar to a ratchet; it can mostly only turn one way. Being on the conservative side of this war is terrifying. You can really only play defense. Any outpost you lose, you have lost permanently. Once smartphones became normal at age 10, no future parent can ‘undo’ that norm without being perceived as abusive or weird. As you gaze across the various ideals that you have for what you want your family life to look like, you're forced to conclude that for most of these, it's a question of when you'll lose them rather than if.

AGI as humanity's children

We would like to expand the previous analogy, and compare the relationship between humanity and AGI to the relationship between parents and their children. We are not the first to make this comparison; however, we argue that this comparison deserves to be treated as more than just a witty, informal metaphor.

We argue that the AI alignment problem, desperately complicated as it is, is a beefed-up version of a problem that's barely tractable in its own right: The child alignment problem. Parents want their children to follow their values: moral, religious, national, interpersonal, and even culinary and sports preferences, or team allegiances. However, children don't always conform to their parents' plans for them. Of course, as we get to the later categories, this is less worrisome - but there are real problems with failures.

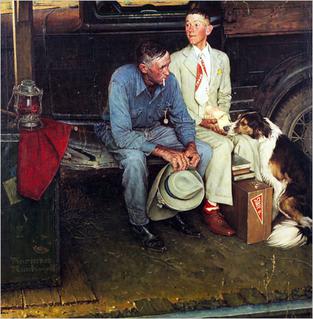

The child alignment problem has tormented human parents throughout known history, and has thus become an evergreen motif in art, from King Lear to Breaking Home Ties to The Godfather to Pixar's Up. Children's divergence is not just an inconvenience; it's an integral, profound component of the human condition. This statement might even be too human-centric, as animal parents reprimand their offspring, repeatedly, with the same kind of visible frustration and fatigue that we observe in human parents. Self-replication is an essential property of life; thus the desire to mold another being in one's image, and in turn to resist being molded by another, are not merely challenges, but likely contenders for an answer to the question, "what is the meaning of life?"

Let's descend from the height of the previous paragraph, and ask ourselves these questions: "How does this connect with AI alignment? And what useful lessons can we take from this comparison?"

The answer to the first question is straightforward: The AI alignment problem is a supercharged version of the child alignment problem; it has all of the complexities of the latter, compounded with our uncertainty about the capabilities and stability of this new technology. We struggle to mold AGI in our image, not knowing how insistent or resourceful it will be in resisting our molding.

We offer the following answer to the second question: The child alignment problem is decidedly not an optimization problem. One does not win parenthood by producing a perfect clone of oneself, but rather by judiciously allowing the gradual transformation of one's own ideals from subject to object. A cynical way to put the above in plain words is "you can't always get what you want"; more profoundly, that judicious transformation process becomes the subject. The journey becomes the destination.

People ask what an AI-controlled autonomous vehicle will do when faced with a trolley problem-like situation; stay on course and kill multiple people, or veer and kill one person. We care so much about that, but we're complacent with humans killing thousands of other humans on the road every day. We care intensely about what choice the AI will make, though we'll get angry at it regardless of which choice it'll make. There isn't really a right choice, but we have an intense desire to pass our pain forward to the AI. We want to know that the AI hurts by making that choice.

In this framing, we aren’t just interested in the safety of AI systems. We want to know that it values things we care about. Maybe we hope that seeing bad outcomes will “hurt” even when we're not there to give it a negative reward signal. Perhaps we want the AI to have an internal conflict that mirrors the pain that it causes in the world. Per agonistic democracy, we don't want the AI to merely list the pros and cons of each possible choice; we want the conflict to really matter, and failure to tear its insides to shreds, in the same way it tears ours. Only then will we consider AI to have graduated from our course in humanity.

Holism and reductionism

Another related distinction is between holism ("the whole is greater than the sum of its parts") and reductionism ("the whole is the sum of its parts"). When children argue for activities to be allowed, they take a reductionist approach, while parents tend to take a holistic approach. Children want to talk about this specific thing, as separate from any other things. Why can't I stay up late? Because you have school tomorrow. Okay, but why can't I stay up late on the weekend? Because… It's just too similar to staying up late on a weekday, and it belongs to a vague collection of things that children shouldn't do. If you argue for each of these items separately, they will eventually get sniped one-by-one, until the collection dissolves to nothing. The only way they can stay safe is by clumping together. That is a holistic approach.

In the struggle between a conservative and a liberal, the conservative finds that many of the items they're defending are defined by pain. If we align an AI along what we call the liberal vision, to avoid or eliminate pain, in the extreme we have an AI that avoids moral discomfort, and will avoid telling hard truths—just like a parent who only ever says yes produces spoiled kids. And in a world of AI aligned based on avoiding anything bad, where it optimizes for that goal, a teenager might be offered tailored VR challenges that feel deeply meaningful without ever being told they can’t do anything.

This means that the conservative involuntarily becomes the spokesperson for pain, and in the liberal view, their face becomes the face of a person who inflicts pain on others. This point connects to the previously-mentioned agonistic democracy, as "agonistic" derives from the Greek "agōn", which also evolved into "agony". And if we add the utilitarian frame where pleasure is the goal, this pain is almost definitionally bad - but it’s also what preserves value in the face of optimization of (inevitably) incompletely specified human values.

Optimizers destroy value, conservation preserves pain

Optimizers find something simple enough to maximize, and do that thing. This is fundamentally opposed to any previously existing values, and it’s most concerning when we have a strong optimizer with weak values. But combining the idea of alignment as removing pain with optimizing for this goal means that “avoidance of pain” will erase even necessary friction, because many valuable experiences (discipline, growth, loyalty) involve discomfort. And in this view, the pain discussed above has a purpose.

Not suffering for its own sake, or trauma, but the kind of tension that arises from limits, from saying no, from sustaining hard-won compromises. In a reductionist frame, every source of pain looks like a bug to fix, any imposed limit is oppression, and any disappointment is something to try to avoid. But from a holistic view, pain often marks the edge of a meaningful boundary. The child who’s told they can’t stay out late may feel hurt. But the boundary says: someone cares enough to enforce limits. Someone thinks you are part of a system worth preserving. To be unfairly simplistic, values can’t be preserved without allowing this pain, because limits are painful.

And at this point, we are pointing to a failure that we think is uncontroversial. If you build AI to remove all the pain, it starts by removing imposed pain - surely a laudable goal. But as with all unconstrained optimizers, this will eventually run into problems, in this case, the pain imposed by limitations[2] - and one obvious way to fix this is by removing boundaries that restrict, and then removing any painful consequences that would result. That’s not what we think alignment should be. That’s cultural anesthesia.

But we think this goes further than asking not to make a machine that agrees with everyone. We need a machine that disagrees in human ways: through argument, ritual, deference, even symbolic conflict. What we’re building is very much not that - it’s sycophantic by design. And the problem AI developers admitted to in recent sycophantic models wasn’t that they were mistaken about the underlying goal; it was that they were pursuing it ham-handedly.

Again, most AI alignment proposals want to reduce or avoid conflict, and the default way for this to happen is finding what to maximize - which is inevitable in systems with specific goals - and optimizing for making people happy. The vision of “humanity’s children” unencumbered by the past is almost definitionally a utopian view. But that means any vision of a transhuman future built along these lines is definitionally arguing for the children’s liberalism instead of the parents’ conservatism. If you build AI to help us transcend all our messy human baggage, it’s unclear what is left of human values and goals.

Concretely, proposals like Eliezer’s Coherent Extrapolated Volition, or Bostrom’s ideas about Deep Utopia, assume or require value convergence, and see idealization as desirable and tractable. Deep Utopia proposes a world where we’ve “solved” politics, suffering, and scarcity, effectively maximizing each person's ability to flourish according to their own ideals. Eliezer’s Fun Theory similarly imagines a future where everyone is free to pursue deep, personal novelty and challenge. Disagreement and coordination problems are abstracted away via recursive preference alignment engines and “a form of one-size-fits-all ‘alignment’”. No one tells you no. But this is a world of perfect accommodation and fluid boundaries. You never need to compromise because everything adapts to you. Culture becomes modular and optional. Reductionism succeeds. Status becomes cosmetic. Conflict is something that was eliminated, neither experienced nor resolved. And personal growth from perseverance, and the preservation of now-vestigial human values, are effectively eliminated.

In contrast, a meta-conservative perspective might say: values don’t converge—they conflict, and the conflicts themselves structure meaning. Instead of personalized utopias, AGI helps steward enduring human institutions. AI systems help communities maintain norms, resolve disputes, and manage internal tensions, but without flattening them. This isn’t about eliminating conflict. Instead, it supposes that the wiser course is to channel it. This isn’t about pessimism. It’s about humility and the recognition that the very roughness we want to smooth over might be essential to the things holding everything together[3].

And the critical question isn’t which of these is preferable - it’s which is achievable without destroying what humans value. It seems at least plausible, especially in light of the stagnation of any real robust version of AI alignment, that the liberal version of alignment has set itself unachievable goals. If so, the question we should be asking now is where the other view leads, and how it could be achieved.

That is going to include working towards understanding what it means to align AI after embracing this conservative view, and seeing status and power as a feature, not a bug. But we don’t claim to have “the” answer to the question, just thoughts in that direction - so we’d very much appreciate contributions, criticisms, and suggestions on what we should be thinking about, or what you think we are getting wrong.

Thanks to Joel Leibo, Seb Krier, Cecilia Elena Tilli, and Cam Allen for thoughts and feedback on a draft version of this post.

[1] In an ideal world, this conflict isn’t acrimonious or destructive, but the world isn’t always ideal - as should be obvious from examples on both sides of the analogy.

[2] Eliezer’s fun theory - and the name is telling - admits that values are complex, but then tries to find what should be optimized for, rather than what constraints to put in place.

[3] Bostrom argues (in Deep Utopia, Monday - Disequilibria) that global externalities require a single global solution. This seems to go much too far, and depends on, in his words, postulating utopia.
