In the domain of multimodal AI, instruction-based image editing models are transforming how users interact with visual content. Just released in August 2025 by Alibaba’s Qwen Team, Qwen-Image-Edit builds on the 20B-parameter Qwen-Image foundation to deliver advanced editing capabilities. This model excels in semantic editing (e.g., style transfer and novel view synthesis) and appearance editing (e.g., precise object modifications), while preserving Qwen-Image’s strength in complex text rendering for both English and Chinese. Integrated with Qwen Chat and available via Hugging Face, it lowers barriers for professional content creation, from IP design to error correction in generated artwork.

Architecture and Key Innovations

Qwen-Image-Edit extends the Multimodal Diffusion Transformer (MMDiT) architecture of Qwen-Image, which comprises a Qwen2.5-VL multimodal large language model (MLLM) for text conditioning, a Variational AutoEncoder (VAE) for image tokenization, and the MMDiT backbone for joint modeling. For editing, it introduces dual encoding: the input image is processed by Qwen2.5-VL for high-level semantic features and the VAE for low-level reconstructive details, concatenated in the MMDiT’s image stream. This enables balanced semantic coherence (e.g., maintaining object identity during pose changes) and visual fidelity (e.g., preserving unmodified regions).

The Multimodal Scalable RoPE (MSRoPE) positional encoding is augmented with a frame dimension to differentiate pre- and post-edit images, supporting tasks like text-image-to-image (TI2I) editing. The VAE, fine-tuned on text-rich data, achieves superior reconstruction with 33.42 PSNR on general images and 36.63 on text-heavy ones, outperforming FLUX-VAE and SD-3.5-VAE. These enhancements allow Qwen-Image-Edit to handle bilingual text edits while retaining original font, size, and style.

Key Features of Qwen-Image-Edit

- Semantic and Appearance Editing: Supports low-level visual appearance editing (e.g., adding, removing, or modifying elements while keeping other regions unchanged) and high-level visual semantic editing (e.g., IP creation, object rotation, and style transfer, allowing pixel changes with semantic consistency).Precise Text Editing: Enables bilingual (Chinese and English) text editing, including direct addition, deletion, and modification of text in images, while preserving the original font, size, and style.Strong Benchmark Performance: Achieves state-of-the-art results on multiple public benchmarks for image editing tasks, positioning it as a robust foundation model for generation and manipulation.

Training and Data Pipeline

Leveraging Qwen-Image’s curated dataset of billions of image-text pairs across Nature (55%), Design (27%), People (13%), and Synthetic (5%) domains, Qwen-Image-Edit employs a multi-task training paradigm unifying T2I, I2I, and TI2I objectives. A seven-stage filtering pipeline refines data for quality and balance, incorporating synthetic text rendering strategies (Pure, Compositional, Complex) to address long-tail issues in Chinese characters.

Training uses flow matching with a Producer-Consumer framework for scalability, followed by supervised fine-tuning and reinforcement learning (DPO and GRPO) for preference alignment. For editing-specific tasks, it integrates novel view synthesis and depth estimation, using DepthPro as a teacher model. This results in robust performance, such as correcting calligraphy errors through chained edits.

Advanced Editing Capabilities

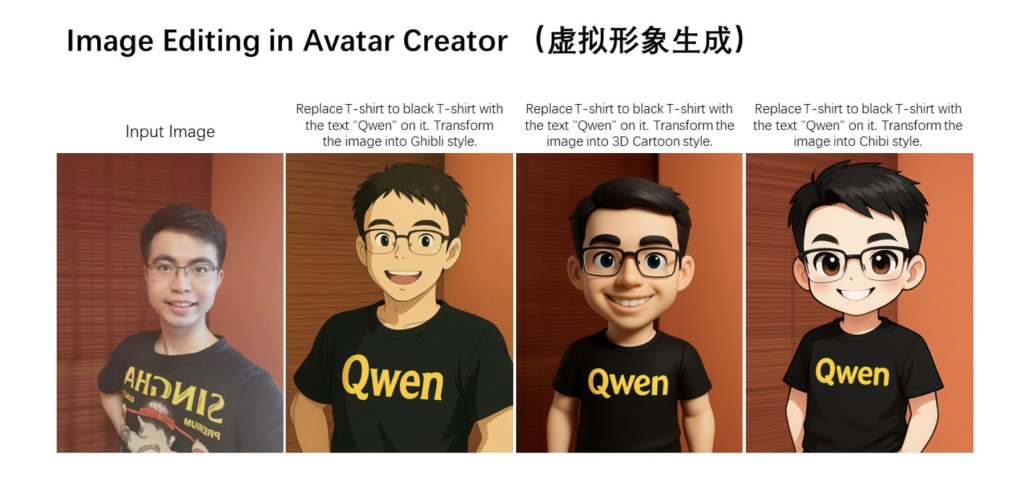

Qwen-Image-Edit shines in semantic editing, enabling IP creation like generating MBTI-themed emojis from a mascot (e.g., Capybara) while preserving character consistency. It supports 180-degree novel view synthesis, rotating objects or scenes with high fidelity, achieving 15.11 PSNR on GSO—surpassing specialized models like CRM. Style transfer transforms portraits into artistic forms, such as Studio Ghibli, maintaining semantic integrity.

For appearance editing, it adds elements like signboards with realistic reflections or removes fine details like hair strands without altering surroundings. Bilingual text editing is precise: changing “Hope” to “Qwen” on posters or correcting Chinese characters in calligraphy via bounding boxes. Chained editing allows iterative corrections, e.g., fixing “稽” step-by-step until accurate.

Benchmark Results and Evaluations

Qwen-Image-Edit leads editing benchmarks, scoring 7.56 overall on GEdit-Bench-EN and 7.52 on CN, outperforming GPT Image 1 (7.53 EN, 7.30 CN) and FLUX.1 Kontext [Pro] (6.56 EN, 1.23 CN). On ImgEdit, it achieves 4.27 overall, excelling in tasks like object replacement (4.66) and style changes (4.81). Depth estimation yields 0.078 AbsRel on KITTI, competitive with DepthAnything v2.

Human evaluations on AI Arena position its base model third among APIs, with strong text rendering advantages. These metrics highlight its superiority in instruction-following and multilingual fidelity.

Deployment and Practical Usage

Qwen-Image-Edit is deployable via Hugging Face Diffusers:

from diffusers import QwenImageEditPipelineimport torchfrom PIL import Imagepipeline = QwenImageEditPipeline.from_pretrained("Qwen/Qwen-Image-Edit")pipeline.to(torch.bfloat16).to("cuda")image = Image.open("input.png").convert("RGB")prompt = "Change the rabbit's color to purple, with a flash light background."output = pipeline(image=image, prompt=prompt, num_inference_steps=50, true_cfg_scale=4.0).imagesoutput.save("output.png")Alibaba Cloud’s Model Studio offers API access for scalable inference. Licensed under Apache 2.0, the GitHub repository provides training code.

Future Implications

Qwen-Image-Edit advances vision-language interfaces, enabling seamless content manipulation for creators. Its unified approach to understanding and generation suggests potential extensions to video and 3D, fostering innovative applications in AI-driven design.

Check out the Technical Details, Models on Hugging Face and Try the Chat here. Feel free to check out our GitHub Page for Tutorials, Codes and Notebooks. Also, feel free to follow us on Twitter and don’t forget to join our 100k+ ML SubReddit and Subscribe to our Newsletter.

The post Qwen Team Introduces Qwen-Image-Edit: The Image Editing Version of Qwen-Image with Advanced Capabilities for Semantic and Appearance Editing appeared first on MarkTechPost.