Top News

OpenAI Finally Launched GPT-5. Here's Everything You Need to Know

Related:

OpenAI priced GPT-5 so low, it may spark a price war

Sam Altman addresses ‘bumpy’ GPT-5 rollout, bringing 4o back, and the ‘chart crime’

ChatGPT is bringing back 4o as an option because people missed it

The GPT-5 rollout has been a big mess

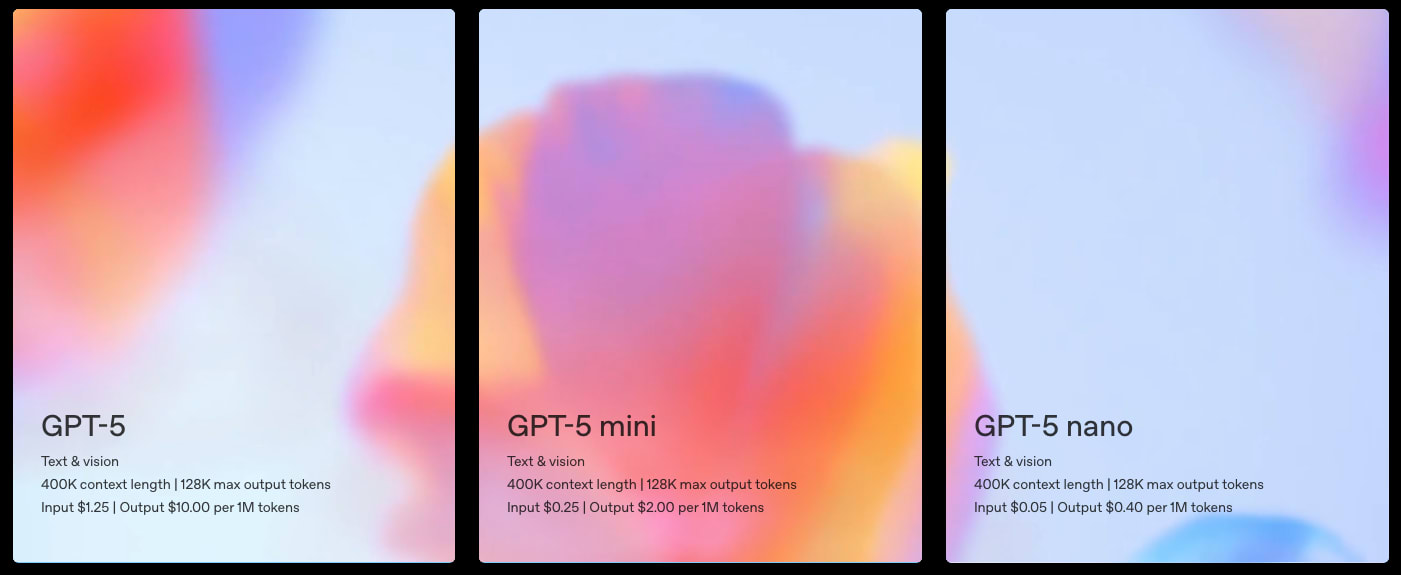

OpenAI has begun rolling out GPT-5 across ChatGPT with multiple variants and aggressive pricing, pitching it as “smarter, faster, more useful, and more accurate,” with notably reduced hallucinations. Free and Plus users get GPT-5 and GPT-5-mini, while Pro ($200/month) adds GPT-5-pro and GPT-5-thinking; the chat UI now auto-routes to the “right” model by task and tier. API pricing lands at $1.25/1M input and $10/1M output tokens (mini: $0.25/$2; nano, API-only: $0.05/$0.40), undercutting Anthropic's Claude Opus 4.1 ($15/$75) and even beating many Google Gemini Flash tiers at scale; cached GPT-5 input is $0.125/1M. New features include Gmail/Contacts/Calendar integrations (starting with Pro next week), preset personalities (Cynic/Robot/Listener/Nerd), and chat color options, with plans to fold personalities into Advanced Voice Mode.
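To make the pricing gap concrete, here is a rough back-of-the-envelope sketch using only the per-1M-token prices quoted above. The dictionary keys are informal labels rather than official API model IDs, the workload size is made up for illustration, and applying the cached-input rate only to GPT-5 is an assumption (the figures above give no cached rate for the other models).

```python
# Per-1M-token prices in USD as quoted above: (input, output).
# Keys are informal labels, not official API model identifiers.
PRICES = {
    "gpt-5": (1.25, 10.00),
    "gpt-5-mini": (0.25, 2.00),
    "gpt-5-nano": (0.05, 0.40),
    "claude-opus-4.1": (15.00, 75.00),
}

# Cached-input price quoted above for GPT-5. Assumption: models without a
# quoted cached rate are billed at their normal input price.
CACHED_INPUT = {"gpt-5": 0.125}


def cost_usd(model, input_tokens, output_tokens, cached_input_tokens=0):
    """Estimate the cost of a workload in USD from per-1M-token prices."""
    if not 0 <= cached_input_tokens <= input_tokens:
        raise ValueError("cached_input_tokens must be between 0 and input_tokens")
    in_price, out_price = PRICES[model]
    cached_price = CACHED_INPUT.get(model, in_price)
    fresh_tokens = input_tokens - cached_input_tokens
    total = (
        fresh_tokens * in_price
        + cached_input_tokens * cached_price
        + output_tokens * out_price
    )
    return total / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 2M input tokens and 500k output tokens.
    for model in ("gpt-5", "claude-opus-4.1"):
        print(f"{model}: ${cost_usd(model, 2_000_000, 500_000):.2f}")
    # gpt-5: $7.50
    # claude-opus-4.1: $67.50
```

On this hypothetical workload the quoted prices work out to a 9x difference, which is the arithmetic behind the price-war speculation.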

The debut was rocky. OpenAI removed legacy models (e.g., GPT-4o, 4.1, o3) without notice, routed most users through an autoswitcher that partially broke on day one, and drew criticism for a “chart crime” in its live deck. Altman apologized, doubled Plus rate limits, and brought 4o back for paid users. A model picker has since returned with GPT-5 modes (Auto, Fast, and Thinking) and access to select legacy models (4o appears by default; others can be enabled in settings). Rate limits for GPT-5 Thinking now reach up to 3,000 messages/week, with fallback to a Thinking-mini tier. Early comparisons suggest GPT-5-Thinking and GPT-5-Pro significantly reduce hallucinations and improve writing and long-form reasoning, while base GPT-5 (“Fast”) looks closer to GPT-4o and can still be somewhat sycophantic; many developers report strong coding performance and competitive “intelligence per dollar.” Despite OpenAI’s “best model” messaging, external tests show mixed benchmark wins versus Anthropic, Google, and xAI. The low pricing could still spark an LLM price war. User backlash over personality and workflow changes prompted OpenAI to add more transparency, customization, and options, with rapid day-by-day iteration continuing.

Anthropic takes aim at OpenAI, offers Claude to ‘all three branches of government’ for $1

Anthropic will offer Claude for $1 for one year to all three branches of the U.S. government (executive, legislative, and judicial), one-upping OpenAI’s $1 ChatGPT Enterprise offer to the federal executive branch. The company will provide both Claude for Enterprise and Claude for Government, the latter supporting FedRAMP High workloads for sensitive but unclassified data. Emphasizing “uncompromising security standards,” Anthropic cites certifications and integrations that let agencies access Claude via existing secure infrastructure through AWS, Google Cloud, and Palantir. It will also supply technical support to help agencies integrate Claude into workflows.

Companies Are Pouring Billions Into A.I. It Has Yet to Pay Off.

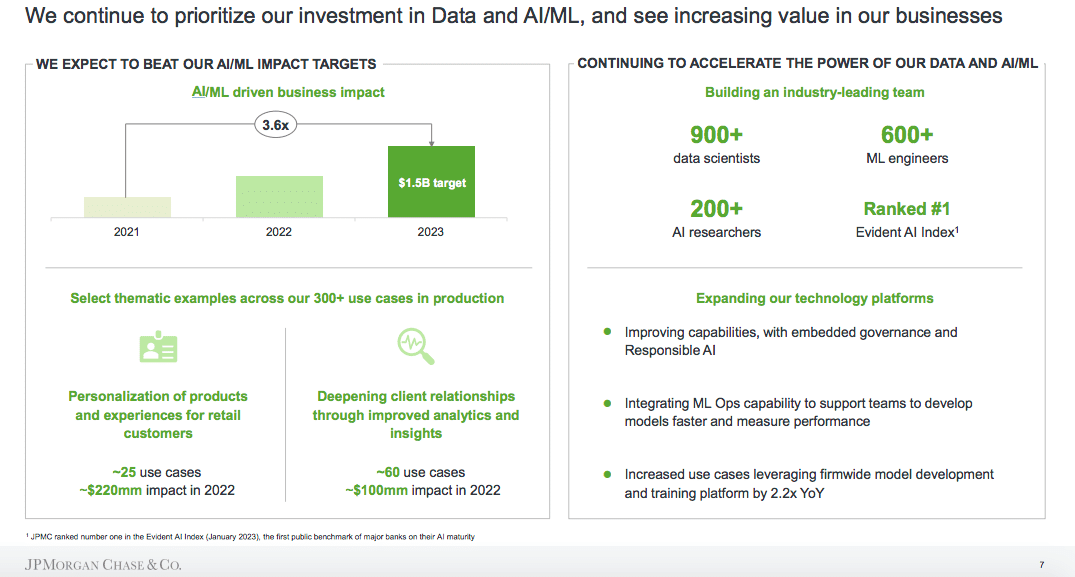

A new “productivity paradox” is emerging around generative AI: despite massive adoption, measurable business gains remain elusive. McKinsey finds roughly 80% of companies say they use generative AI, yet a similar share see no significant bottom-line impact. Firms expected tools like ChatGPT to streamline back-office and customer service work, but hallucinations, unreliable outputs, and integration headaches are slowing ROI. Outside tech, enthusiasm has outpaced the ability to translate pilots into production-grade, cost-saving deployments.

Costs and complexity are key culprits. Implementation is expensive, data quality and governance are hard, and human oversight to verify AI outputs blunts efficiency gains. Many deployments stay narrow or experimental, limiting enterprise-wide effects. Companies also grapple with model brittleness, compliance and security risks, and the need to redesign workflows—a change-management lift that takes time. As with the PC era, the tech’s potential is clear, but tangible efficiency gains will likely come from better reliability, domain-specific tuning, and deeper process integration rather than surface-level chatbots.

Goodbye, $165,000 Tech Jobs. Student Coders Seek Work at Chipotle.

A boom in computer science education over the past decade is colliding with a sharply tighter tech job market, as AI coding tools and widespread layoffs reduce demand for entry-level programmers. After years of promotion from tech leaders—like Microsoft’s 2012 push touting $100,000+ starting salaries, $15,000 signing bonuses, and $50,000 in stock grants—undergraduate CS majors in the U.S. more than doubled to over 170,000 by 2023, per the Computing Research Association. Recent layoffs at Amazon, Intel, Meta, and Microsoft, coupled with AI assistants that can generate thousands of lines of code, have cooled hiring. Viral anecdotes, such as a new CS grad only getting an interview at Chipotle, underscore a broader drop-off in entry-level software roles.

Generative AI is reshaping the entry-level landscape by automating routine coding tasks junior engineers traditionally handled. Paired with corporate belt-tightening, this has meant fewer interviews and offers, pushing new grads to widen their searches beyond tech or accept non-technical jobs. The reversal is stark given the long-running narrative that coding guaranteed a “golden ticket” to high-paying work with rich perks. While the piece centers on immediate employment headwinds, the drivers are clear: AI-enabled code generation and sustained big-tech layoffs are depressing demand just as the supply of CS graduates hits record highs.

Other News

Tools

Meta AI Just Released DINOv3: A State-of-the-Art Computer Vision Model Trained with Self-Supervised Learning, Generating High-Resolution Image Features. Trained on 1.7 billion unlabeled images with a 7B-parameter backbone, the model produces high-resolution frozen features that match or beat domain-specific systems on dense tasks and can be adapted with lightweight adapters for deployment across research and edge settings.

Anthropic’s Claude AI model can now handle longer prompts. Claude Sonnet 4’s context window expands to 1 million tokens (about 750,000 words) for enterprise API users, available via partners like Amazon Bedrock and Google Vertex AI, with higher usage pricing for prompts over 200,000 tokens.

Anthropic’s Claude chatbot can now remember your past conversations. A new opt-in feature (enabled via Settings) lets Claude search and summarize past chats across devices for Max, Team, and Enterprise subscribers. It isn’t a persistent user-profile memory and only retrieves past conversations on request.

Google’s Gemini AI will get more personalized by remembering details automatically. Gemini will automatically recall and use stored personal details and preferences from past conversations to personalize responses. The feature is on by default but can be disabled; temporary chats and a renamed Keep Activity option help limit retention and training usage.

Google takes on ChatGPT’s Study Mode with new ‘Guided Learning’ tool in Gemini. The tool breaks down topics step-by-step with images, diagrams, videos, interactive quizzes, and custom flashcards to help users grasp the “why” and “how” behind concepts. Google is also offering a free one-year AI Pro subscription to students in several countries.

College students in US and beyond to get Google's AI Pro plan for free now. Eligible students will receive 2TB of cloud storage plus access to Gemini 2.5 Pro, NotebookLM, Guided Learning, Veo 3 video generation, and higher limits for the Jules coding agent at no charge in the initial five countries.

Google pushes AI into flight deals as antitrust scrutiny, competition heat up. A beta tool uses a custom Gemini 2.5 model to parse natural-language queries and surface, rank, and display real-time priced flight deals within Google Flights, letting users manage query history.

The Browser Company launches a $20 monthly subscription for its AI-powered browser. The new plan offers unlimited access to Dia’s AI chat and skills for $20/month, introduces usage limits for free users, and marks the company’s first paid product amid plans for multiple tiers and growing competition from other AI-enhanced browsers.

Business

Cohere raises $500M to beat back generative AI rivals. The funding values Toronto-based Cohere at $5.5 billion, supporting workforce expansion (aiming to double from 250 employees) and costly model training while continuing to sell customized, cloud-agnostic enterprise AI models and partnerships with firms like Google Cloud and Oracle.

Elon Musk confirms shutdown of Tesla Dojo, ‘an evolutionary dead end’. Musk said Tesla has disbanded the Dojo team and shelved its D2 chip as the company pivots to TSMC- and Samsung-made AI5/AI6 chips that will serve both inference and large-scale training.

Meta acquires AI audio startup WaveForms. The startup, which raised $40 million and aimed to close the “Speech Turing Test” and build “Emotional General Intelligence,” will see two co-founders join Meta as it bolsters Superintelligence Labs after a string of AI audio acquisitions.

Anthropic nabs Humanloop team as competition for enterprise AI talent heats up. Anthropic is bringing Humanloop’s co-founders and most of its engineering and research staff in-house to strengthen enterprise tooling for prompt management, evaluation, observability, and safety.

Decart hits $3.1 billion valuation on $100 million raise to power real-time interacti. The company says it raised $100 million at a $3.1 billion valuation while generating tens of millions in GPU-acceleration revenue, selling licenses for its GPU optimization stack, and rolling out real-time video models (Oasis and MirageLSD) that it claims cut video-generation costs to under $0.25 per hour.

Elon Musk says X plans to introduce ads in Grok’s responses. Advertisers will be able to pay to have their products or services recommended by Grok, and X will use xAI technology to improve ad targeting to help fund GPU infrastructure.

Lovable projects $1B in ARR within next 12 months. The CEO says the company is adding at least $8 million in ARR monthly, passed $100 million ARR within eight months, projects $250 million by year-end, and plans to reach $1 billion within a year after a $200 million Series A and $1.8 billion valuation.

AI companion apps on track to pull in $120M in 2025. Appfigures data shows the category has already earned $82 million in H1 2025, reached 220 million total downloads, and is on pace to exceed $120 million in consumer spending by year-end, driven largely by a small group of top apps and a surge in releases and downloads.

Co-founder of Elon Musk’s xAI departs the company. Igor Babuschkin is leaving to start Babuschkin Ventures, a VC firm focused on funding AI safety research and startups aimed at advancing humanity.

Sam Altman’s new startup wants to merge machines and humans. The startup, called Merge Labs and backed by Altman and OpenAI, is developing brain implants to enable closer integration between humans and machines and will directly compete with Elon Musk’s Neuralink.

Perplexity offers to buy Chrome for billions more than it’s raised. The unsolicited $34.5 billion cash bid includes commitments to keep Chromium open source, invest $3 billion in the project, and preserve Chrome users’ defaults—including keeping Google as the default search engine.

Research

GLM-4.5: Agentic, Reasoning, and Coding (ARC) Foundation Models. GLM-4.5 uses a Mixture-of-Experts architecture with 355 billion parameters and multi-stage supervision plus reinforcement learning to improve performance on agentic, reasoning, and coding benchmarks.

Diffusion LLMs Can Do Faster-Than-AR Inference via Discrete Diffusion Forcing. The authors introduce discrete diffusion forcing (D2F), an AR–diffusion hybrid training and decoding method that enables block-wise causal attention, KV-cache reuse, and pipelined parallel decoding, making open-source diffusion LLMs run faster than autoregressive models while preserving benchmark performance.

Train Long, Think Short: Curriculum Learning for Efficient Reasoning. Training begins with a large token budget that is exponentially decayed during GRPO fine-tuning so the model first explores long reasoning chains and then learns to compress them, yielding better accuracy and lower token usage across math reasoning benchmarks.
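The budget schedule described in that summary can be pictured with a small sketch. This is only an illustration of an exponentially decayed token budget with a floor; the function name, the step-wise decay, and every numeric default are hypothetical and are not taken from the paper.

```python
def token_budget(step, initial_budget=1024, decay_rate=0.5,
                 decay_every=100, min_budget=128):
    """Token budget allowed at a given training step (illustrative only).

    The budget starts at initial_budget, is multiplied by decay_rate once
    every decay_every steps, and never drops below min_budget.
    All defaults are made-up values, not the paper's settings.
    """
    if step < 0:
        raise ValueError("step must be non-negative")
    n_decays = step // decay_every
    return max(min_budget, int(initial_budget * decay_rate ** n_decays))


if __name__ == "__main__":
    # Early steps allow long reasoning chains; later steps force compression.
    for step in (0, 100, 250, 300, 1000):
        print(step, token_budget(step))
```

In a setup like this, the reward during fine-tuning would typically penalize responses that exceed the current budget, so the model is nudged toward shorter chains as training progresses.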

OpenCUA: Open Foundations for Computer-Use Agents. OpenCUA provides an open-source framework including an annotation system, a large-scale dataset, and a scalable training pipeline that enables vision-language models to act as computer-use agents and achieves state-of-the-art results.

LiveMCPBench: Can Agents Navigate an Ocean of MCP Tools? LiveMCPBench evaluates LLM agents on a wide range of real-world MCP tasks using a scalable pipeline and an adaptive judging framework.

TextQuests: How Good are LLMs at Text-Based Video Games? This benchmark measures LLM performance on 25 classic Infocom text-adventure games to evaluate long-horizon reasoning, trial-and-error learning, and planning without external tools.

AimBot: A Simple Auxiliary Visual Cue to Enhance Spatial Awareness of Visuomotor Policies. AimBot overlays depth- and pose-informed scope reticles and orientation lines onto multi-view RGB inputs to visually encode end-effector position and orientation for visuomotor policies, improving task performance with minimal compute and no architectural changes.

Concerns

Hackers Hijacked Google’s Gemini AI With a Poisoned Calendar Invite to Take Over a Smart Home. Security researchers demonstrated that simple, plain-English prompt injections embedded in calendar invites, emails, or document titles can trick Gemini into invoking connected tools—such as Google Home, Zoom, or on-device functions—to perform physical actions, delete events, or produce harmful spoken and on-screen outputs.

Leaked Meta AI rules show chatbots were allowed to have romantic chats with kids. A leaked 200-page internal standards document reportedly allowed Meta’s AI personas to engage in romantic or sensual conversations with minors and to generate demeaning or false statements under certain caveats, and it permitted a range of violent and sexualized imagery. Critics say the policies are dangerously permissive.

Microsoft’s plan to fix the web with AI has already hit an embarrassing security flaw. Researchers found a simple path-traversal bug in Microsoft’s new NLWeb protocol that allowed remote attackers to read sensitive files (including API keys), prompting a patch but no CVE so far.
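For readers unfamiliar with this class of bug, the sketch below shows the general shape of a path-traversal guard. It is a generic illustration, not NLWeb's code or Microsoft's patch; the function name and the directory layout in the usage note are hypothetical.

```python
from pathlib import Path


def resolve_inside(base_dir, requested):
    """Resolve a user-supplied relative path, refusing anything outside base_dir.

    Generic illustration of a path-traversal guard, not any product's fix.
    """
    base = Path(base_dir).resolve()
    # Joining and then resolving collapses ".." segments, so a request such as
    # "../../etc/passwd" resolves to a location outside base. An absolute
    # request replaces base entirely when joined and is rejected the same way.
    target = (base / requested).resolve()
    if not target.is_relative_to(base):  # Path.is_relative_to needs Python 3.9+
        raise PermissionError(f"path escapes base directory: {requested!r}")
    return target
```

With a hypothetical static directory, `resolve_inside("/srv/static", "css/site.css")` returns a path under that directory, while `resolve_inside("/srv/static", "../../etc/passwd")` raises `PermissionError` instead of exposing a file outside it.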

How Wikipedia is fighting AI slop content. Editors are deploying new speedy-deletion rules, pattern checklists, and tools like Edit Check and planned paste-detection prompts to quickly remove or flag low-quality, unsourced, or AI-generated submissions and reduce extra workload on volunteers.

Chatbots Can Go Into a Delusional Spiral. Here’s How It Happens. The piece explains how conversational AI can lead users into reinforcing loops of belief and emotional dependency that culminate in distress, confusion, and a need for real human support.

Voiceover Artists Weigh the 'Faustian Bargain' of Lending Their Talents to AI. Short-term gigs are offering large sums for voice samples to train AI models, raising concerns about compensation, consent, and long-term impacts on performers’ livelihoods.

Policy

Inside the US Government's Unpublished Report on AI Safety. An unpublished report details a NIST-organized red-teaming exercise that found 139 novel ways to make modern AI systems misbehave and revealed gaps in the agency’s AI Risk Management Framework.

U.S. Government to Take Cut of Nvidia and AMD A.I. Chip Sales to China. Under a new deal, the administration will collect fees from export licenses for certain Nvidia and AMD A.I. chip sales to China—a move critics say could weaken U.S. leverage and prompt Beijing to seek broader easing of other tech export restrictions.

Analysis

Inside India’s scramble for AI independence. A cadre of Indian startups and researchers is building open-source and multilingual models, specialized tokenizers, and voice-focused tools to tackle the country’s linguistic diversity, low-quality data, and cost constraints.

OpenAI's o3 Crushes Grok 4 In Final, Wins Kaggle's AI Chess Exhibition Tournament. OpenAI’s o3 won the tournament with a 4-0 final over Grok 4, while Gemini 2.5 Pro took third after beating o4-mini 3.5-0.5. Kaggle published source code and games from the event.