Every time a user opens an app, browses a product page, or scrolls a social feed, a system somewhere is trying to serve that request fast. More importantly, the goal is to serve it predictably fast: under load, across geographies, and during traffic spikes.

This is where caching comes in.

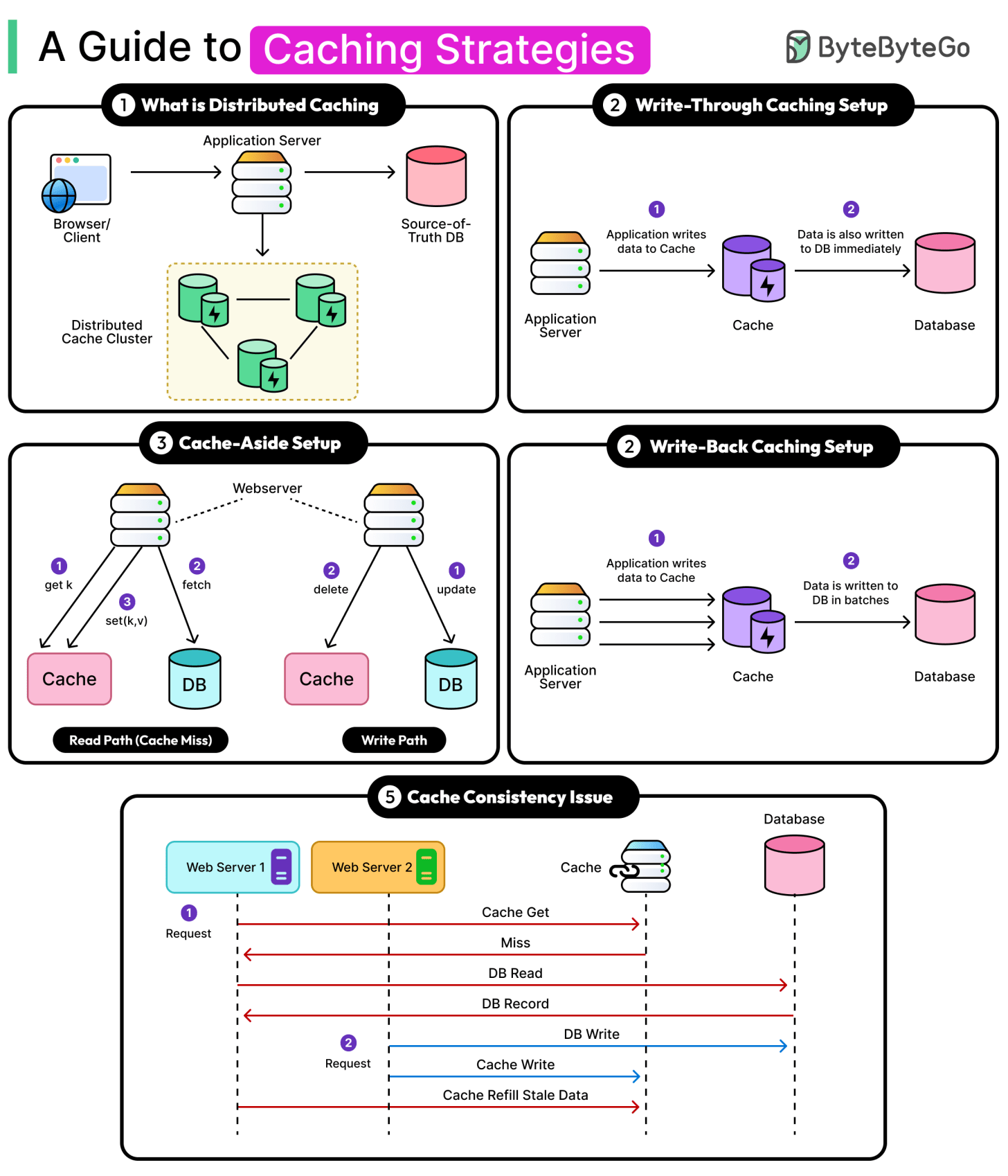

Caching is a core architectural strategy that reduces latency, cuts the load on source systems, and creates breathing room for slower, more expensive components such as databases and remote services.

Done well, caching can deliver large gains in performance and scalability. Done poorly, it can cause bugs, stale data, or even outages.

Most modern systems rely on some form of caching: local memory caches to avoid repeat computations, distributed caches to offload backend services, and content delivery networks (CDNs) to push assets closer to users around the world.

However, caching only works if the right data is stored, invalidated, and evicted at the right time.
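To make "stored, invalidated, and evicted" concrete, here is a minimal sketch of a local in-memory cache in Python. The class name `TinyCache` and its parameters (`max_entries`, `ttl_seconds`, `clock`) are hypothetical, chosen only for illustration. The sketch assumes a single process with no concurrency, and it uses least-recently-used (LRU) eviction with a fixed time-to-live (TTL), which is just one of several possible policies.

```python
import time
from collections import OrderedDict


class TinyCache:
    """Hypothetical in-memory cache: LRU eviction plus a per-entry TTL."""

    def __init__(self, max_entries=2, ttl_seconds=60.0, clock=time.monotonic):
        self.max_entries = max_entries
        self.ttl_seconds = ttl_seconds
        self.clock = clock          # injectable so expiry can be simulated
        self._data = OrderedDict()  # key -> (value, expires_at)

    def get(self, key, default=None):
        entry = self._data.get(key)
        if entry is None:
            return default          # miss: key was never stored or was removed
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._data[key]     # expired: drop it and treat as a miss
            return default
        self._data.move_to_end(key)  # mark as most recently used
        return value

    def put(self, key, value):
        # Store the value with a fresh expiry time.
        self._data[key] = (value, self.clock() + self.ttl_seconds)
        self._data.move_to_end(key)
        # Evict least recently used entries once over capacity.
        while len(self._data) > self.max_entries:
            self._data.popitem(last=False)

    def invalidate(self, key):
        # Explicit invalidation, e.g. after the source data changes.
        self._data.pop(key, None)
```

A caller would typically check `get` first, fall back to the slower source on a miss, and then `put` the result. When the underlying data changes, calling `invalidate` keeps the cache from serving the old value until the TTL runs out.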

In this article, we will explore the critical caching strategies that enable fast, reliable systems. We will cover caching patterns such as write-through, cache-aside, and write-back, which decide how the cache and the backing store are kept in sync when data is read or changed. Each one makes different trade-offs among latency, consistency, and durability. We will also look at two other concerns in distributed caching: cache consistency and cache eviction.