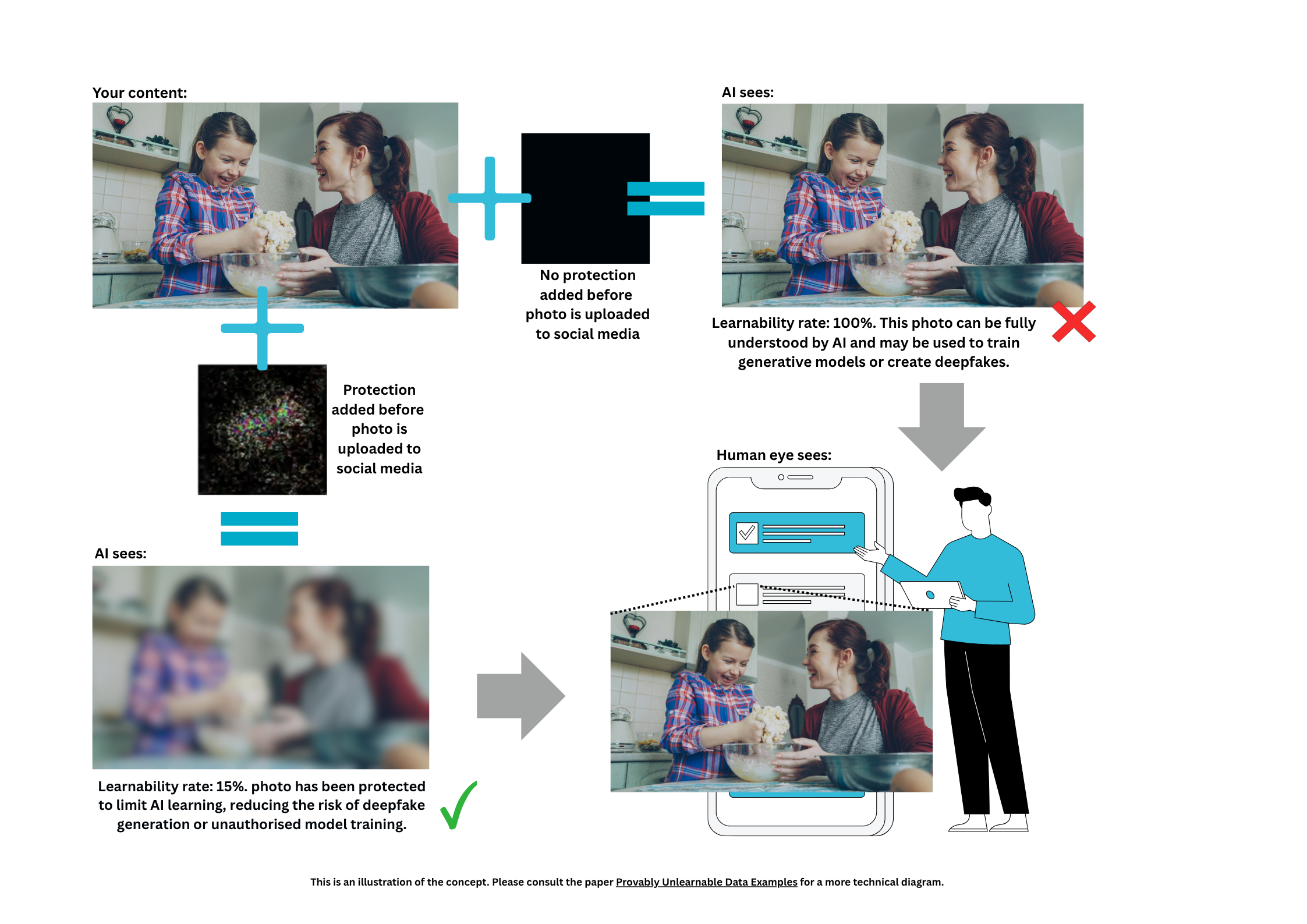

“Noise” protection can be added to content before it’s uploaded online.

A new technique developed by Australian researchers could stop unauthorised artificial intelligence (AI) systems learning from photos, artwork and other image-based content.

Developed by CSIRO, Australia’s national science agency, in partnership with the Cyber Security Cooperative Research Centre (CSCRC) and the University of Chicago, the method subtly alters content to make it unreadable to AI models, while the content appears unchanged to the human eye.
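To give a rough sense of what a change too small for the eye to notice can look like, the sketch below shifts each pixel of a tiny greyscale image by at most a few intensity levels. It is an illustration only, not the researchers’ method: the function names, the `epsilon` limit and the use of random noise are all assumptions made for this example, and random noise on its own would not stop an AI model from learning.

```python
import random


def add_bounded_noise(pixels, epsilon=4, seed=0):
    """Return a copy of `pixels` with each value shifted by at most `epsilon`.

    `pixels` is a list of rows of 8-bit intensities (0-255).
    Assumption: random noise stands in for the carefully designed
    perturbation a real protection method would compute.
    """
    rng = random.Random(seed)
    protected = []
    for row in pixels:
        new_row = []
        for value in row:
            shifted = value + rng.randint(-epsilon, epsilon)
            # Clip so the result is still a valid 8-bit intensity.
            new_row.append(min(255, max(0, shifted)))
        protected.append(new_row)
    return protected


def max_abs_difference(a, b):
    """Largest per-pixel change between two images of the same shape."""
    return max(
        abs(x - y)
        for row_a, row_b in zip(a, b)
        for x, y in zip(row_a, row_b)
    )


# Hypothetical 2x3 greyscale image.
image = [[0, 64, 128], [192, 255, 32]]
protected = add_bounded_noise(image, epsilon=4)

# No pixel moves by more than epsilon, so the image looks the same to a person.
assert max_abs_difference(image, protected) <= 4
```

A limit of 4 out of 255 intensity levels is an arbitrary choice here; the point is only that the change to every pixel is capped.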

This could help artists, organisations and social media users protect their work and personal data from being used to train AI systems or create deepfakes. For example, a social media user could automatically apply a protective layer to their photos before posting, preventing AI systems from learning facial features for deepfake creation. Similarly, defence organisations could shield sensitive satellite imagery or cyber threat data from being absorbed into AI models.

The technique sets a limit on what an AI system can learn from protected content. It provides a mathematical guarantee that this protection holds, even against adaptive attacks or retraining attempts.

Dr Derui Wang, CSIRO scientist, said the technique offers a new level of certainty for anyone uploading content online.

“Existing methods rely on trial and error or assumptions about how AI models behave,” Dr Wang said. “Our approach is different; we can mathematically guarantee that unauthorised machine learning models can’t learn from the content beyond a certain threshold. That’s a powerful safeguard for social media users, content creators, and organisations.”

Dr Wang said the technique could be applied automatically at scale.

“A social media platform or website could embed this protective layer into every image uploaded,” he said. “This could curb the rise of deepfakes, reduce intellectual property theft, and help users retain control over their content.”

While the method is currently applicable to images, there are plans to expand it to text, music, and videos.

The method is still theoretical, with results validated in a controlled lab setting. The code is available on GitHub for academic use, and the team is seeking research partners from sectors including AI safety and ethics, defence, cybersecurity and academia.

The paper, Provably Unlearnable Data Examples, was presented at the 2025 Network and Distributed System Security Symposium (NDSS), where it received the Distinguished Paper Award.

To collaborate or explore this technology further, you can contact the team.