In our previous tutorial, we built an AI agent capable of answering queries by surfing the web and added persistence to maintain state. However, in many scenarios, you may want to put a human in the loop to monitor and approve the agent’s actions. This can be easily accomplished with LangGraph. Let’s explore how this works.

Setting Up the Agent

We’ll continue from where we left off in the last lesson. First, set up the environment variables, make the necessary imports, and configure the checkpointer.

    pip install langgraph==0.2.53 langgraph-checkpoint==2.0.6 langgraph-sdk==0.1.36 langchain-groq langchain-community langgraph-checkpoint-sqlite==2.0.1

    import os
    os.environ['TAVILY_API_KEY'] = "<TAVILY_API_KEY>"
    os.environ['GROQ_API_KEY'] = "<GROQ_API_KEY>"

    from langgraph.graph import StateGraph, END
    from typing import TypedDict, Annotated
    import operator
    from langchain_core.messages import AnyMessage, SystemMessage, HumanMessage, ToolMessage, AIMessage
    from langchain_groq import ChatGroq
    from langchain_community.tools.tavily_search import TavilySearchResults
    from langgraph.checkpoint.sqlite import SqliteSaver
    import sqlite3

    # Persist checkpoints in a local SQLite file
    sqlite_conn = sqlite3.connect("checkpoints.sqlite", check_same_thread=False)
    memory = SqliteSaver(sqlite_conn)

    # Initialize the search tool
    tool = TavilySearchResults(max_results=2)

Defining the Agent

    # Note: AgentState is defined in the next section and must exist before this class is run.
    class Agent:
        def __init__(self, model, tools, checkpointer, system=""):
            self.system = system
            graph = StateGraph(AgentState)
            graph.add_node("llm", self.call_openai)
            graph.add_node("action", self.take_action)
            # Route to the tool node only if the model requested a tool call
            graph.add_conditional_edges("llm", self.exists_action, {True: "action", False: END})
            graph.add_edge("action", "llm")
            graph.set_entry_point("llm")
            self.graph = graph.compile(checkpointer=checkpointer)
            self.tools = {t.name: t for t in tools}
            self.model = model.bind_tools(tools)

        def call_openai(self, state: AgentState):
            messages = state['messages']
            if self.system:
                messages = [SystemMessage(content=self.system)] + messages
            message = self.model.invoke(messages)
            return {'messages': [message]}

        def exists_action(self, state: AgentState):
            result = state['messages'][-1]
            return len(result.tool_calls) > 0

        def take_action(self, state: AgentState):
            tool_calls = state['messages'][-1].tool_calls
            results = []
            for t in tool_calls:
                print(f"Calling: {t}")
                result = self.tools[t['name']].invoke(t['args'])
                results.append(ToolMessage(tool_call_id=t['id'], name=t['name'], content=str(result)))
            print("Back to the model!")
            return {'messages': results}

Setting Up the Agent State

We now configure the agent state with a slight modification. Previously, the messages list was annotated with operator.add, appending new messages to the existing array. For human-in-the-loop interactions, sometimes we also want to replace existing messages with the same ID rather than append them.
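Before looking at the LangGraph version, the difference between appending and replacing by ID can be seen in a dependency-free sketch. The `Msg` dataclass and `merge_by_id` function here are hypothetical stand-ins invented for illustration (they are not LangChain or LangGraph types), and the message contents are made up.

```python
from dataclasses import dataclass
from typing import Optional
from uuid import uuid4


@dataclass
class Msg:
    """Hypothetical stand-in for a chat message: content plus an optional id."""
    content: str
    id: Optional[str] = None


def merge_by_id(left: list, right: list) -> list:
    """Append messages from `right`, replacing any in `left` that share an id."""
    merged = left.copy()
    for new in right:
        if new.id is None:
            new.id = str(uuid4())  # give id-less messages a fresh id
        for i, old in enumerate(merged):
            if old.id == new.id:
                merged[i] = new  # same id: overwrite in place
                break
        else:
            merged.append(new)  # unseen id: append, as operator.add would
    return merged


history = [Msg("search: weather in SF", id="call-1")]
# A reviewer edits the pending message: same id, new content.
edited = merge_by_id(history, [Msg("search: weather in LA", id="call-1")])
# A brand-new message without an id is simply appended.
extended = merge_by_id(edited, [Msg("sunny")])

print([m.content for m in edited])
print([m.content for m in extended])
```

With plain `operator.add`, the edited message would have been added as a second entry instead of overwriting the first, which is why a custom reducer is useful once a human can modify state.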

    from uuid import uuid4

    def reduce_messages(left: list[AnyMessage], right: list[AnyMessage]) -> list[AnyMessage]:
        # Assign IDs to messages that don't have them
        for message in right:
            if not message.id:
                message.id = str(uuid4())
        # Merge the new messages with the existing ones
        merged = left.copy()
        for message in right:
            for i, existing in enumerate(merged):
                if existing.id == message.id:
                    # Same ID: replace the existing message
                    merged[i] = message
                    break
            else:
                # No match found: append as a new message
                merged.append(message)
        return merged

    class AgentState(TypedDict):
        messages: Annotated[list[AnyMessage], reduce_messages]

Adding a Human in the Loop

We make one more modification when compiling the graph. The interrupt_before=["action"] parameter pauses the graph before the action node runs, so that tool calls can be manually approved before they are executed.
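To build intuition for what pausing before a node means, here is a toy runner that checkpoints its state and stops before any node named in `interrupt_before`, then resumes when called with `None`. This is a hypothetical miniature written for illustration only; `ToyGraph` and its methods are invented names and do not reflect how LangGraph is implemented internally.

```python
class ToyGraph:
    """Hypothetical miniature runner; NOT LangGraph's implementation."""

    def __init__(self, nodes, route, interrupt_before=()):
        self.nodes = nodes                      # name -> function(state) -> state
        self.route = route                      # function(name, state) -> next name or None
        self.interrupt_before = set(interrupt_before)
        self.checkpoints = {}                   # thread_id -> (state, next node)

    def run(self, state, thread_id, entry="llm"):
        """Start a thread (state given) or resume it (state is None)."""
        if state is None:
            state, nxt = self.checkpoints[thread_id]
            resuming = True
        else:
            nxt, resuming = entry, False
        while nxt is not None:
            # Pause before an interrupting node unless we were just told to resume.
            if nxt in self.interrupt_before and not resuming:
                break
            resuming = False
            state = self.nodes[nxt](state)
            nxt = self.route(nxt, state)
        self.checkpoints[thread_id] = (state, nxt)
        return state, nxt


def llm(state):
    # Request a tool on the first pass; answer once a tool result exists.
    if any(m.startswith("tool:") for m in state):
        return state + ["ai: final answer"]
    return state + ["ai: call search"]


def action(state):
    return state + ["tool: search result"]


def route(name, state):
    if name == "action":
        return "llm"
    return "action" if state[-1] == "ai: call search" else None


graph = ToyGraph({"llm": llm, "action": action}, route, interrupt_before=["action"])
paused_state, pending = graph.run(["human: weather in SF?"], thread_id="1")
print(pending)            # the node waiting for approval
final_state, remaining = graph.run(None, thread_id="1")
print(final_state[-1], remaining)
```

The first call stops with the tool request recorded but not executed; the second call, given no new input, picks up from the checkpoint and runs to completion.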

    class Agent:
        def __init__(self, model, tools, checkpointer, system=""):
            # Everything else remains the same as before
            self.graph = graph.compile(checkpointer=checkpointer, interrupt_before=["action"])
            # Everything else remains unchanged

Running the Agent

Now, we will initialize the system with the same prompt, model, and checkpointer as before. When we call the agent, we pass in the thread configuration with a thread ID.

    prompt = """You are a smart research assistant. Use the search engine to look up information. \
    You are allowed to make multiple calls (either together or in sequence). \
    Only look up information when you are sure of what you want. \
    If you need to look up some information before asking a follow up question, you are allowed to do that!"""

    model = ChatGroq(model="Llama-3.3-70b-Specdec")
    abot = Agent(model, [tool], system=prompt, checkpointer=memory)

    messages = [HumanMessage(content="What's the weather in SF?")]
    thread = {"configurable": {"thread_id": "1"}}
    for event in abot.graph.stream({"messages": messages}, thread):
        for v in event.values():
            print(v)

Responses are streamed back, and execution stops after the AI message that requests a tool call: the interrupt_before parameter prevents the tool from running immediately. We can also inspect the current state of the graph for this thread, which includes the next node to be called ('action' here).

    abot.graph.get_state(thread)
    abot.graph.get_state(thread).next

To proceed, we call stream again with the same thread configuration, passing None as input. This streams back the remaining results, including the tool message and the final AI message. Since no interrupt was added between the action node and the LLM node, execution continues without pausing.

    for event in abot.graph.stream(None, thread):
        for v in event.values():
            print(v)

Interactive Human Approval

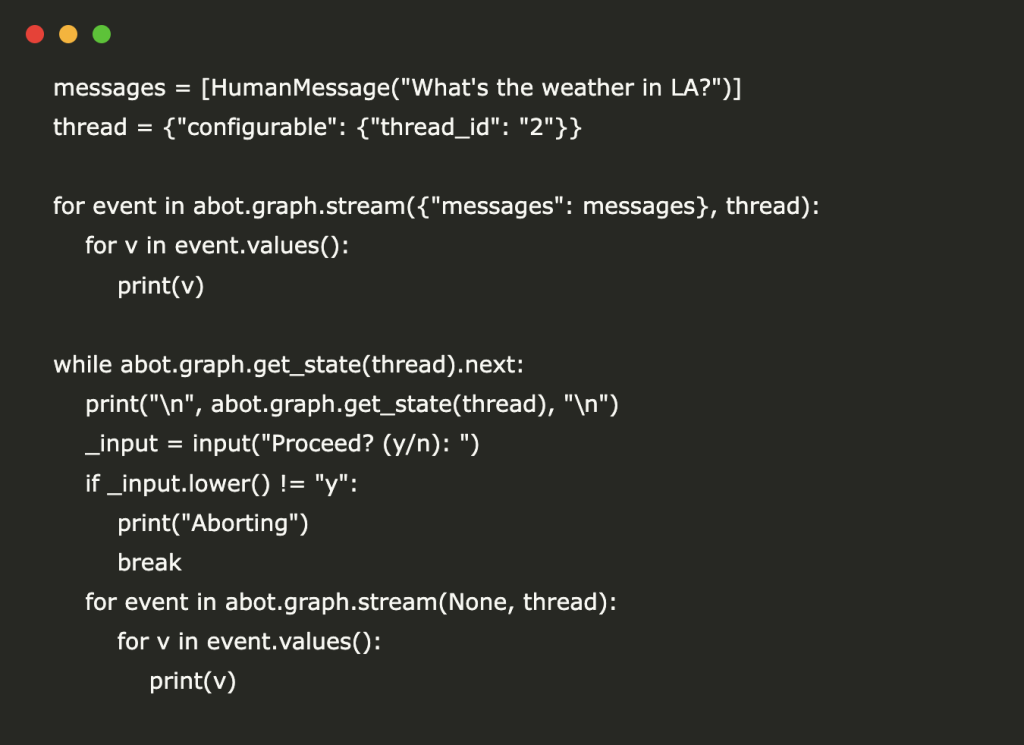

We can implement a simple loop prompting the user for approval before continuing execution. A new thread ID is used for fresh execution. If the user chooses not to proceed, the agent stops.

    messages = [HumanMessage("What's the weather in LA?")]
    thread = {"configurable": {"thread_id": "2"}}
    for event in abot.graph.stream({"messages": messages}, thread):
        for v in event.values():
            print(v)

    # Keep asking for approval as long as a node is waiting to run
    while abot.graph.get_state(thread).next:
        print("\n", abot.graph.get_state(thread), "\n")
        _input = input("Proceed? (y/n): ")
        if _input.lower() != "y":
            print("Aborting")
            break
        for event in abot.graph.stream(None, thread):
            for v in event.values():
                print(v)

Great! Now you know how to involve a human in the loop. Try experimenting with different interruption points and see how the agent behaves.
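As one starting point for such experiments, the approval prompt can be pulled into a small helper that asks about each pending tool call individually. The sketch below is an illustration rather than part of the original tutorial: `approve_tool_calls` is a hypothetical name, the `ask` parameter is an assumption added so the prompt can be swapped out for testing, and the sample tool call values are made up. The dictionary keys (`name`, `args`, `id`) follow the ones used in `take_action` above.

```python
from typing import Callable


def approve_tool_calls(tool_calls: list, ask: Callable[[str], str] = input) -> bool:
    """Return True only if the reviewer approves every pending tool call."""
    for call in tool_calls:
        answer = ask(f"Run {call['name']} with {call['args']}? (y/n): ")
        if answer.strip().lower() != "y":
            return False  # a single rejection vetoes the whole batch
    return True


# Illustrative pending call; the values are invented for this example.
pending_calls = [
    {"name": "search", "args": {"query": "weather in LA"}, "id": "call_1"},
]

# Simulated reviewers instead of interactive input.
approved = approve_tool_calls(pending_calls, ask=lambda _prompt: "y")
rejected = approve_tool_calls(pending_calls, ask=lambda _prompt: "n")
print(approved, rejected)
```

In the approval loop, a helper like this could presumably be fed the `tool_calls` of the last message in the paused state in place of the bare `input("Proceed? (y/n): ")` check, so the reviewer sees exactly which tool and arguments are about to run.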

References: DeepLearning.ai (https://learn.deeplearning.ai/courses/ai-agents-in-langgraph/lesson/6/human-in-the-loop)

The post Creating an AI Agent-Based System with LangGraph: Putting a Human in the Loop appeared first on MarkTechPost.