Published on December 9, 2024 7:09 PM GMT

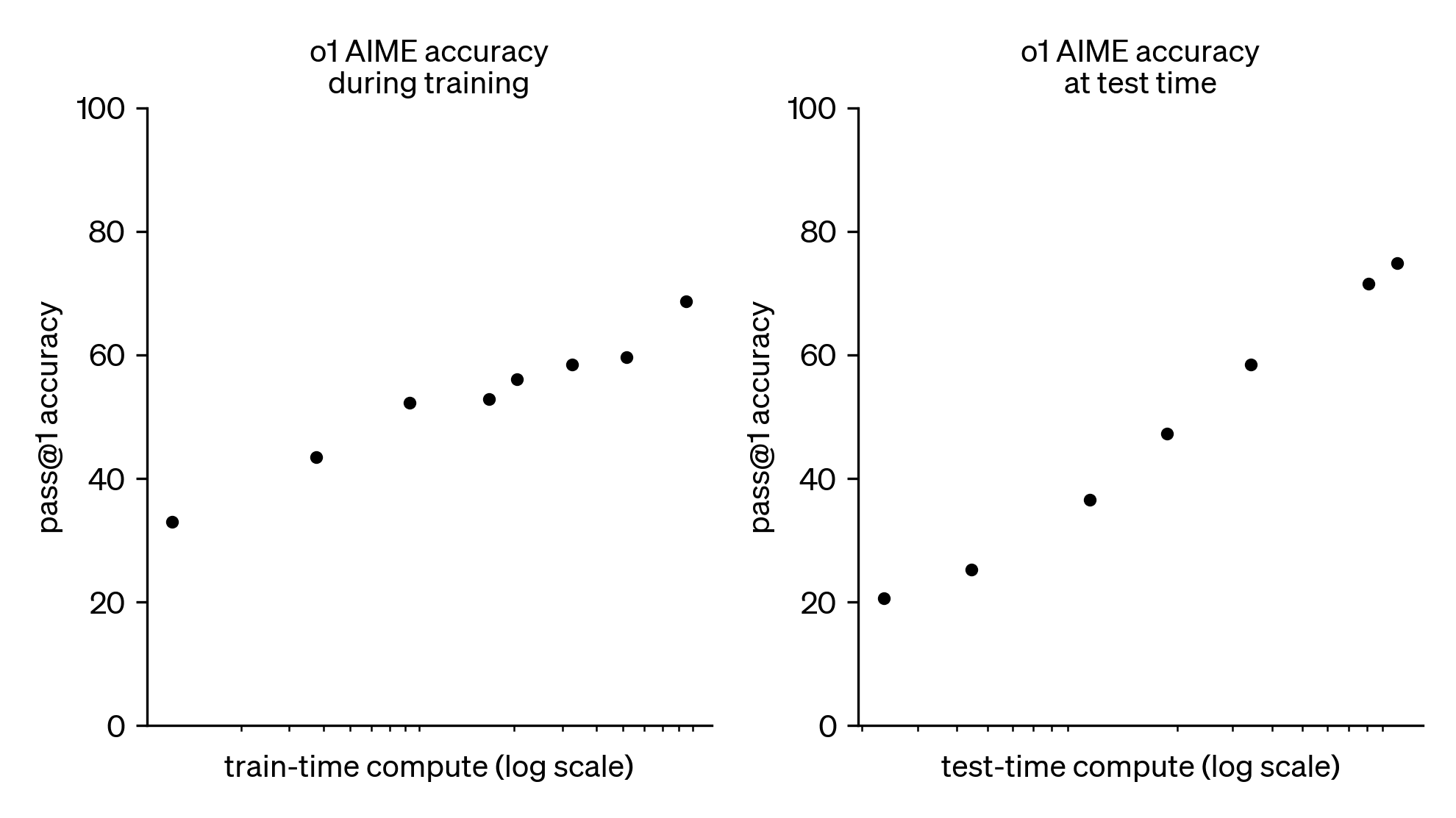

TL;DR: In September 2024, OpenAI released o1, its first "reasoning model". This model exhibits remarkable test-time scaling laws, which complete a missing piece of the Bitter Lesson and open up a new axis for scaling compute. Following Rush and Ritter (2024) and Brown (2024a, 2024b), I explore four hypotheses for how o1 works and discuss some implications for future scaling and recursive self-improvement.

The Bitter Lesson(s)

The Bitter Lesson is that "general methods that leverage computation are ultimately the most effective, and by a large margin." After a decade of scaling pretraining, it's easy to forget this lesson is not just about learning; it's also about search.

OpenAI didn't forget. Their new "reasoning model" o1 has figured out how to scale search during inference time. This does not use explicit search algorithms. Instead, o1 is trained via RL to get better at implicit search via chain of thought (CoT). This was the simplest possible way to incorporate search into LLMs, and it worked.

The key consequence is that OpenAI has opened up a new frontier of the bitter lesson: test-time scaling (see figure above). The original scaling laws taught us how to exchange training-time compute for better predictions. These new test-time scaling laws teach us how to exchange inference-time compute for better decisions.

This removes one of the last hard barriers to AGI.

What we know about o1

OpenAI has been characteristically quiet about the details, so we don't know exactly how o1 works. But they haven't been silent.

What OpenAI has told us

Rush points out that we can reconstruct quite a lot from the announcement:

Our large-scale reinforcement learning algorithm teaches the model how to think productively using its chain of thought in a highly data-efficient training process.

This tells us three key things:

- Chain of Thought (CoT): o1 performs implicit search within a single chain of thought, rather than running an explicit search algorithm at inference time.Reinforcement Learning (RL): Instead of supervised training against fixed labels, o1 learns from variable rollouts with dynamically generated reward signals.Data-Efficiency: The process requires relatively few (human-labeled) samples. This does not necessarily mean the process is either token- or compute-efficient.

More generally and perhaps most importantly, o1 is solidly within the existing LLM paradigm. We're starting with a pretrained base model (or possibly a fine-tuned chat model) and intervening in post-training. The innovation is primarily in the data and training process and not in the architecture.

What OpenAI has showed us

We can also learn something about how o1 was trained from the capabilities it exhibits. Any proposed training procedure must be compatible with the following capabilities:

- Error Correction: "[o1] learns to recognize and correct its mistakes."Factoring: "[o1] learns to break down tricky steps into simpler ones."Backtracking: "[o1] learns to try a different approach when the current one isn't working."

At the same time, we can reject any hypothesis that explicitly tries to program in these capabilities. Noam Brown (2024) emphasizes these capabilities are emergent:

We were strategizing about how to enable [o1] to do these things and it's just figuring [it] out on its own.

For all its secrecy, OpenAI has leaked enough bits to tightly constrain the space of possibilities.

Proto-o1: Chain of Thought

Before we get to the candidate explanations, let's examine o1's predecessors in test-time scaling.

In-Context Learning

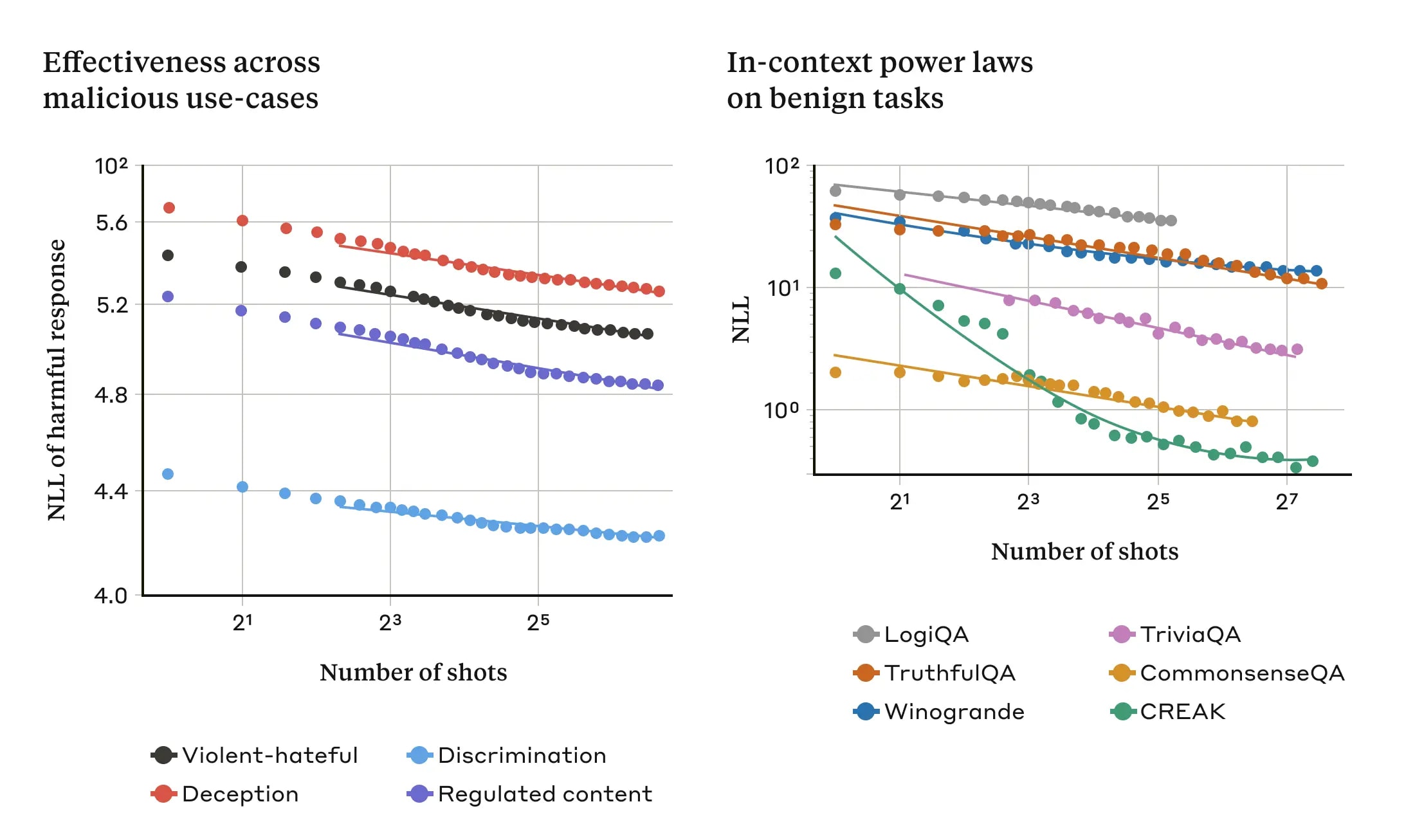

Early work on in-context learning already made it clear that test-time compute (in the form of additional example tokens) could translate to better performance. However, multi-shot prompting is bottlenecked by expensive supervised data that makes it infeasible as a general-purpose approach to scaling test-time compute.

Thinking Step-by-Step

Simply asking GPT-3 to explain its reasoning "step-by-step" dramatically improves its performance (Kojima et al. 2023). This trick was so successful that frontier labs now explicitly select for "chain-of-thought" reasoning via system prompts, prompt distillation, or instruction finetuning.

Unlike few-shot prompting, standard chain-of-thought techniques are not necessarily bottlenecked by human-labeled data. However, prompting alone makes it difficult to exercise precise control over how much test-time compute to spend. More importantly, chat models run into problems with hallucinations, loops, mode collapse, etc. when generating long rollouts. Classical chain of thought works but only up to a point.

Majority Vote

The simplest way to scale test-time compute in a general and controlled manner is to sample multiple rollouts and take the majority answer. This is called "majority vote" or "self-consistency" or "consensus" and has been used to great effect, for example, in METR's recent REBench paper. Majority vote offers immediate benefits over the baseline strategy of ancestral sampling (i.e., sampling a single chain of thought).

However, majority vote quickly runs into plateaus. To do better, we're going to have to come up with a better way of consolidating the information spread across multiple chains of thought. This brings us to the "reinforcement learning" part of o1.

o1: Four Hypotheses

When OpenAI says o1 uses "reinforcement learning", they could mean a lot of things. Let's interpret this as meaning that the learning process involves actually sampling rollouts from the model and then using a verifier to either filter, evaluate, guide, or combine those rollouts. These four options correspond to our four basic hypotheses.

A verifier is a function that returns the probability of an answer (i.e., a finished roll-out) being correct. In practice, the verifier is probably some kind of learned reward model (though it could be automated, like unit tests for code). Verifiers allow us to implement more sophisticated inference-time scaling strategies:

- Rejection sampling / Best-of-N: Generate multiple solutions and use the verifier to filter for correct answers.Monte-Carlo rollouts: Running rejection sampling from an intermediate chain-of-thought lets you estimate the value of a particular partial rollout. You can use this to continue your chain-of-thought along only the top-ranked paths (as in beam search), then repeat this procedure to iteratively guide your sampling procedure to better outcomes.Learning against verifier: Rather than using your verifier at test-time, we can use the verifier only during the training procedure to amortize the decision-making process into the model itself.

The leading theory is that o1 falls under the last bullet — that OpenAI is keeping its aesthetic commitment to "intelligence as a single model" and is not using any verifiers in deployment.

Like Rush, we'll elide all of the subtle difficulties involved in actually getting RL to work in practice (how we're batching episodes, whether we're on-policy or off-policy, whether we're using KL regularization, which particular learning algorithm we're using, etc.). These subtleties are important: in fact, this is where the difficulty lies. Still, the details won't be necessary for us to get a high-level understanding of what might be going on inside o1.

1. Filter: Guess + Check

The simplest approach is to use the verifier as a filter: generate multiple reasoning attempts, check which ones succeed using the verifier, then train only on those successful examples (as a standard next-token prediction task). That is, combine rejection sampling with supervised fine-tuning.

On the plus side, this is simple and has ample literature to back it up (Yarowsky, 1995; Cobbe et al., 2021; Zelikman et al., 2022; Gulcehre et al., 2023; Singh et al., 2023; Nakano et al., 2021). On the negative side, this seems likely to be too computationally inefficient. Also, calling this "RL" is a bit of a stretch.

2. Evaluation: Process Rewards

A more sophisticated approach is to use a verifier to evaluate a reasoning trace. Instead of an outcome reward model (ORM) that assigns a value to complete rollouts, we train a process reward model (PRM) that assigns a value to partial rollouts, and then we train our reasoning model against these intermediate rewards (using, for example, PPO, see Wang et al. 2024).

Alternatively, you can use PRMs just for filtering, since prior work shows that PRMs outperform ORMs at rejection sampling (Lightman et al., 2023). This leads to a hybrid approach in between "Guess and Check" and "Process Rewards."

There are many ways to implement a PRM, but the obvious one is to use an LLM (as a "generative verifier"). Then, the verifier can actually use chain of thought itself. You may even be able to use the same LLM for both generation and verification, alternating between generation and verification within a single token stream. Such a hybrid approach might explain instances in which the model appears to self-evaluate or self-correct: when the model asks itself "is this a good explanation?", is it the generator or verifier?

Rush believes that something involving process rewards is the most likely answer. There's evidence for process rewards improving performance (ibid.), but no public examples yet combining generation and verification into a single chain of thought. These approaches are more complex than "Guess and Check" but still simpler than the other options.

3. Guidance: Search / AlphaZero

Intermediate feedback can also be used to guide the sampling procedure itself. The guide signal can come from either a model (such as the process reward models of the previous section) or directly from MC rollouts. Self-play enables the generator and guide to iteratively improve together. This distills the search process into the model itself ("amortization").

One variant is to use beam search to generate a number of candidate continuations, then use the guide to filter out only the most promising continuations, continue with those, and repeat.

A more famous (and complex) variant is Monte-Carlo Tree Search (MCTS). Like beam search, we generate a number of possible continuations, then sample one of those continuations at random, and repeat this iteratively until we reach an end state. Then, we propagate the value of that end state up to the parent nodes, sample a new node, and repeat. This has the benefit of not just rushing towards the end of the tree but also allowing the model to explore a wider fraction of the tree.

Obviously these AlphaZero-inspired methods are the most exciting (and frightening) option. Explanations like MCTS might also have an edge in explaining some of the observed behaviors like backtracking. On the other hand, these approaches are very complex, compute-intensive, and haven't seen much success yet in the open research community.

4. Combination: Learning to Correct

An alternative approach is to combine multiple chains of thought in clever ways and train against the resulting composite chain of thought. Here's one variant conjectured by Gwern:

[T]ake a wrong monologue, and at a random point, insert the string "wait, that's wrong. What if..." and then inject some wrong ones, and then eventually, a correct one. Now you have a correct-by-construction inner-monologue where it "makes mistakes" and then "corrects itself" and eventually succeeds and "answers the question correctly". This can be trained on normally.

Personally, I find this hypothesis unlikely, since it directly contradicts the report that error correction and backtracking are emergent rather than explicitly selected for. That said, I do expect "in-context curriculum design" to be an important direction of future research.

Whatever the actual mechanism, there are only a few raw ingredients (chain of thought, verifiers, and learning algorithms) and only so many ways to combine them. The open-source community will catch up. DeepSeek and QwQ suggest they may already have. We will soon have a better idea which of these approaches actually work and which do not.

Post-o1: (Recursive) Self-Improvement

When OpenAI says o1 is "data-efficient", it can mean a lot of things, depending on whether we're denominating "data" in terms of token count or sample/prompt count, and whether or not we're including synthetically generated data in these counts.

The more boring interpretation is that OpenAI means the per-token improvement in loss is better than during pretraining. This is boring because pretraining is just a very low bar to clear. The more interesting interpretation is that o1 is efficient in terms of human-labeled samples. This would reflect a longstanding trend away from human labels towards increasingly self-guided training procedures:

- AlphaGo was trained on expert games. AlphaGo Zero eliminated human game data in favor of pure self-play, required significantly more compute, and achieved much better performance while discovering qualitatively different strategies than human experts.RLHF involves expensive human preference data. RLAIF and Constitutional AI replace the human with AIs and achieve better results.Just last year, training a PRM would have involved supervised learning on expensive human annotations (Uesato et al., 2022; Lightman et al., 2023). Now, they're probably bootstrapped from an ORM using, for example, MC rollouts (Wang et al. 2024).Supervised fine-tuning on expert-annotated chain of thought doesn't work as well as whatever it is that o1 is doing. "[I]f you train the model using RL to generate and hone its own chain of thoughts it can do even better than having humans write chains of thought for it." (OpenAI 2024)

The bitter lesson strikes again: o1 is part of a continual trend in which increasingly inexpensive compute displaces constantly expensive human input.

This is what recursive self-improvement really looks like. So far, recursive self-improvement in practice has looked less like the model tinkering with its own architecture or solving miscellaneous engineering problems, and more like the model generating and curating its own training data or guiding its own training processes. This appears to be just getting started.

Outlook

Recently, there have been rumors of "scaling breaking down". I'm skeptical. But even if pretraining is running into a wall, o1 tells us it doesn't immediately matter. Test-time scaling opens up an entirely new way to unload compute, and, on this front, it's still GPT-2 days (OpenAI 2024).

How much could we scale up in test-time compute? Brown (2024) offers a heuristic argument: there are some problems we would be willing to spend millions of dollars to (attempt to) solve. A typical LLM query costs on the order of a penny. That means an easy eight orders of magnitude.

Even in the longer term, "scaling breaking down" might not matter because of how o1's capabilities could feed back into pretraining. One AI's inference time is a future AI's training time. We're already seeing this with OpenAI's next flagship model: according to The Information (2024), one of o1's key applications is generating high-quality training data for "Orion," OpenAI's next large language model in development.

Maybe the final form of the Bitter Lesson is a tight feedback loop between learning and search: use search to generate high-quality reasoning traces, distill those traces into more condensed token streams, and train against the result to amortize the reasoning into the base model. Maybe past a certain critical threshold of capability, classic problems with mode collapse, catastrophic forgetting, etc. stop being a issue.

Maybe we're already this past point of sustained self-improvement. The clock is ticking.

Discuss